Third-Party Approach to Secure Communication Between Vendors

Info: 8940 words (36 pages) Dissertation

Published: 9th Dec 2019

Tagged: Information Systems

Abstract: Cloud computing is changing how companies operate their IT infrastructure. It has changed the way distributed computing is used and has introduced cost-effective approaches to IT services. Because of the growing demand for and functionality of the cloud, many companies are moving their infrastructure to it. Security is a major consideration for companies making this move. The security measures required in cloud computing differ from those of traditional infrastructure because many cloud services are open to all. In this paper, we discuss reliability, availability and security (RAS) issues in the cloud [1], along with other common security threats. We then discuss a few security measures and good practices for keeping the cloud environment secure. We discuss a trusted third-party approach that creates secure communication channels between vendors using Public Key Infrastructure (PKI) with Single Sign-On (SSO) and Lightweight Directory Access Protocol (LDAP) [2]. Finally, we analyze Distributed Denial of Service (DDoS) attacks in depth and discuss an approach that uses Cloud Trace Back (CTB) to mitigate them [4].

Section I Introduction: In cloud computing, resources are rented and shared most of the time. Users are moving their data to the cloud and consuming services in a simple manner. Three types of services are offered in the cloud: Infrastructure as a Service (IaaS), Platform as a Service (PaaS) and Software as a Service (SaaS). The responsibilities of the parties involved vary under each model. In IaaS, the consumer controls the operating system, applications and data. In PaaS, the consumer manages the data and the application hosting environment. Under SaaS, everything, including the application and data, is managed by the cloud provider [2]. Depending on the model, clients rent hardware or software services.

Cloud deployments are classified into three major types: private, public and hybrid [1]. In a private cloud, the services are not open to the general public; they are restricted to a particular organization, often a government body. In a public cloud, the services are open to all, while a hybrid cloud is a combination of public and private clouds. Multi-tenancy is generally practiced in the public cloud: virtual machines belonging to different tenants are hosted on the same hypervisor. Multi-tenancy, together with unknown data storage locations, introduces many security concerns. As usage increases, concerns about security, availability, performance and cost rise. We will discuss many such security threats in this paper.

It is often said that cloud computing is derived from grid computing. In grid computing, multiple computers are connected to each other and work together to complete a task. When additional utilities and services are added to a grid, it serves as cloud computing. In short, cloud computing is a higher level of grid computing. A grid requires users to create services, whereas a cloud offers functionality that you can use directly in your application [2].

Cloud computing is a massive infrastructure with many virtual machines running on hypervisors. Some additional hardware is required for specific tasks such as storing objects or databases. Compared to a traditional network, cloud computing is more flexible and easier to monitor. Compared to grid computing, the virtualization and multi-tenancy characteristics of cloud computing make it a better choice in many scenarios. In grid computing, many servers work together on a single task, whereas in cloud computing virtualization lets one server work on different tasks [2]. Characteristics such as reliability, flexibility, scalability and economies of scale have made cloud computing one of the most promising technologies [2]. Services can be deployed quickly and expanded horizontally or vertically as required, which improves flexibility and scalability. Data in the cloud is mostly replicated at multiple locations, which improves redundancy and disaster recovery and thus makes cloud computing reliable. Economies of scale arise because output more than doubles when input doubles.

A homogeneous architecture with centralized data has helped cloud providers implement enhanced security measures [2]. Many drawbacks of the traditional data center have been overcome by advances in cloud computing, but many more remain to be addressed.

The RAS factor, along with the flexibility of the cloud, is leading numerous organizations to adopt cloud computing. It is important that a proper understanding is maintained between the client and the provider. Clear guidelines need to be drawn up to avoid confusion over the shared security model. Each party needs to know exactly what falls under its security domain. Client and provider should work together to understand the shared security model and build a robust cloud environment. In this paper, we discuss some security measures and practices recommended to enhance security in the cloud. Proper management of services and permissions lowers the risks to a great extent. Proper management of access controls, patches, configuration and monitoring improves the RAS factor [1].

The trusted third party proposed in this paper will reduce the client’s security concerns. The trusted third party is assumed to have certain security characteristics that help maintain a mesh of trust interconnecting different cloud providers. Achieving this requires proper software development and infrastructure architecture, based on the system requirements and functionality [2]. Later, we analyze the DDoS attack in depth. We study a proposed methodology that uses Cloud Trace Back (CTB) with Flexible Deterministic Packet Marking (FDPM) [4]. This combination helps in finding the real source of the attack.

The paper is organized as follows. Section II covers the security threats. Section III discusses solutions and good practices to consider when using cloud services. Section IV discusses the trusted third-party approach. Section V details CTB and Deterministic Packet Marking (DPM), which are used to mitigate DDoS attacks. We then summarize our learnings and findings.

Section II: Security Issues and Threats in Cloud Computing:

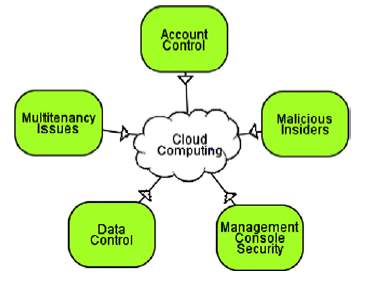

Customer and provider both play an important role in improving cloud security. This is where the shared security model comes into the picture. It is important for customers to understand how compatible their current management infrastructure is with the cloud manager and what additional control measures can be applied on top of the cloud provider’s security measures. Both points must be checked frequently to confirm that the migration to and usage of cloud services is sound. The architecture of the cloud has advantages and disadvantages. Many threats are introduced during migration or while using cloud services. Improved confidentiality, integrity and availability lead to a secure environment [2]. Figure 1 shows some categories of threats that customers face while using cloud services [2]. Let’s analyze these and a few more threats in detail and study their implications for privacy, integrity and availability:

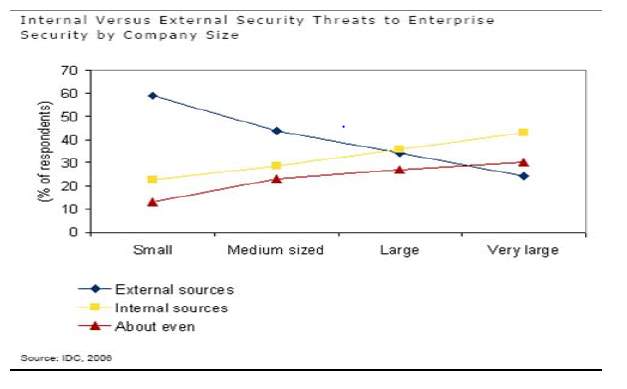

Figure 1: A few types of threats [2].

Figure 2: Comparison of internal and external threats [4].

- Loss of data: When data is moved to the cloud, the customer has no control over the location where it is stored. The data is no longer on their private in-house infrastructure. After migration, the data mostly resides in a multi-tenant environment, so the chances of data leakage increase. Improper access control, loose policies and access control lists (ACLs), weak encryption and decryption techniques, system failure, and improper backup and replication of data are a few of the primary factors responsible for this loss [4].

Although Data Leakage Prevention (DLP) can be used to address this problem, the measure is not very effective [1]. In a public cloud the data needs to be available to the public, and DLP might defeat this purpose. Data leakage is not a primary issue in a private cloud because the client has dedicated, single-tenant hardware. In a hybrid cloud offering infrastructure as a service, however, the client can set up DLP because the hardware might be shared [1]. Data leakage can cause a lack of trust and damage the reputation of the cloud provider [4].

- Threat of employees: There is a lack of transparency between cloud providers and clients with respect to many factors. Clients are not aware of the cloud provider’s protocols for handling data or services. They are also unaware of the hiring process followed by the provider, its contracts with other cloud providers, or its protocols in a federated cloud. This lack of transparency can encourage a hacker or malicious insider. Cloud providers work with many third parties to provide specific services; for example, to raise service tickets a cloud provider might need a ServiceNow (SNOW) application. These third-party organizations can leak data to someone in the same business as the client. Figure 2 compares the number of insider and outsider threats that occur depending on the size of the company. This information comes from a survey conducted by International Data Corporation (IDC) [2]. The readings show that insider threats increase as the number of employees in a company increases. We can also see that internal threats consistently exceed external threats, making them a primary concern for cloud providers and clients.

- Threat from outsiders: Hackers and malicious users are constantly looking for loopholes through which to access the system, with the objective of gaining confidential information or launching attacks that overwhelm the infrastructure. Because the cloud infrastructure is massive, there are many potential entry points for outsider attacks, and it is therefore difficult to find the attacked port [4]. Like physical computers, virtual servers have IP addresses. If a malicious user finds this IP address, he can launch his own instance on that hardware [1]. If the machine is shared, the hacker may be able to access all the hosts on it. By the time this is noticed, the hacker could have done considerable damage. Because of the number of interfaces through which a hacker can connect over SSH using access keys or exploit API weaknesses, outsider threats are more numerous in a cloud environment.

- Interrupted or Denied Services: As mentioned earlier, a malicious user can get into the system and manipulate things before the provider or client realizes it. The attacker can redirect legitimate users’ requests to illegitimate sites, create fake traffic that overwhelms the server, or launch a Denial of Service (DoS) or Distributed Denial of Service (DDoS) attack [4].

What if Alibaba’s servers were disrupted by such attacks during Christmas? This could cause serious financial loss to both the client and the provider.

- DDoS Attacks in Cloud: A DDoS attack occurs when a port is bombarded with huge volumes of network traffic [1]. These ports generally belong to internet-facing devices and are targeted using PCs, mobile devices or Internet of Things equipment controlled by malware. These infected attacking devices are also called botnets or auto dialers [4]. The purpose is to stop legitimate users from getting services or to introduce delay in the services. DDoS attacks are difficult to detect because the traffic is generated from many different hosts. The attacked devices can in turn act as attackers and multiply the intensity of the attack [4]. A server might shut down completely if hit hard by this attack. We will study a method to mitigate DDoS attacks in detail in Section V of this paper.

- Threats due to shared environment: Multi-tenancy is a key reason cloud services are so cost effective. Data, memory, hardware and CPU power are shared under this feature. Sharing hardware raises primary security concerns. Many virtual machines run on the same hypervisor, and an attacker on another virtual machine can break through to the hypervisor and attack your VM too. This phenomenon is called a VM-to-VM attack [4]. It can lead to information leakage and enable further attacks.

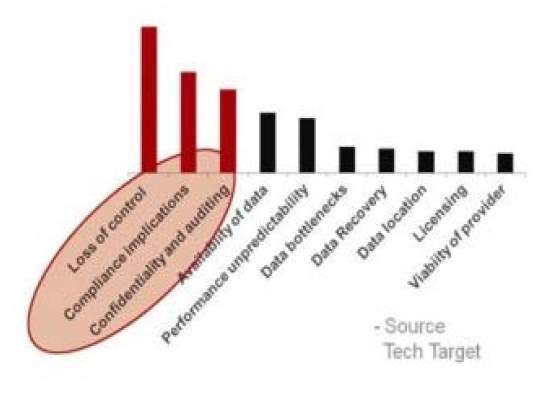

- Losing the control: Once customers move their services to the cloud, they lose control over various factors. Customers have no control over whom they share their hardware with or where their data is stored. Consumers also have limited control over vulnerability scans and auditing; they need prior permission from the cloud provider to run security scans. In some cases, clients are not aware of the security measures taken by the provider to secure their data. This can create a lack of trust and make customers feel somewhat insecure. Figure 3, from TechTarget, shows the concerns clients have while using cloud services and their magnitude [4]. Loss of control is the most concerning factor of all.

Figure 3: Client Concerns related to cloud computing [4]

- Lack of trust between vendors: Because the customer loses control over data and services after migrating to the cloud, trust plays a major role in reducing the customer’s concern about loss of control. Entities A and B trust each other if each behaves as the other expects. From a security perspective, trust is established when all the causes of threats are eliminated or minimized. Trust in cloud computing depends on the deployment model the customer chooses. The choice of model determines whether sensitive data is handed to someone else for processing or storage [2]. In a traditional environment, trust is enhanced by security policies that apply restrictions based on needs, function and data [2]. The scenario is different in the cloud, especially with public and community clouds, where you are trusting the security policies and environment of the cloud provider. In a private cloud the trust level is high because many processing tasks and data are on dedicated hardware.

In the cloud, the concept of perimeter security is not clear. Perimeter security can include firewalls or security policies that sit on endpoints in order to protect the underlying infrastructure from attackers or illegitimate users [2]. Each of these endpoints is considered a potential gateway for malicious users. Perimeter security can also be referred to as borderline security. Borderline security is assumed to be weak in the cloud, and all the services required by a client must be present within a certain boundary. This leads us to believe that third-party vendors or outsourced services should not be trusted, which is difficult because any particular organization has to depend on other organizations for some services. Thus, it is important to build trust among the various vendors. This trust can be built with proper authentication systems, separation of boundaries, and monitoring to prevent the information system from being attacked [2].

To achieve this objective, where organizations can communicate securely, a solution has been proposed: the trusted third party (TTP) [2]. We will study the TTP in detail in Section IV. In short, organizations that wish to talk to each other first consult the TTP. The TTP in turn uses cryptography to provide secure communication for these organizations. Clients trust the TTP to provide security and consider its services to be secure, scalable and widely available [2]. To summarize, the TTP is like a connecting hub for organizations that wish to talk to each other or use each other’s services without fear. This reduces the fear of weak borderline security.

- Confidentiality and privacy: Confidentiality means allowing only valid users to access the services and data [2]. In cloud computing, a large number of parties interact with each other as well as with the available services. They continuously send requests and receive responses. Because of this high flow of traffic, security concerns regarding the confidentiality and privacy of data and software can arise.

Multi-tenancy, where there is very little separation of hardware, can lead to threats that compromise confidentiality and privacy. It is important for an organization to check that hardware is properly decommissioned, i.e. that the data on it is erased properly before the hardware is reused or when the organization stops using it.

Improper authentication can compromise a user’s account credentials, which can lead to illegal access to memory, data or other resources [2]. Software confidentiality means that the applications running on the virtual machine, which continuously interact with the data, do not create loopholes through which confidentiality and privacy are lost [2]. Improper software applications and loose identification processes are the primary reasons for compromised user privacy and confidentiality.

- Integrity: Integrity means preventing resources such as data, software and hardware from being modified by unauthorized users [2]. Proper authorization and permissions help build trust in data security and help detect unauthorized fabrication of data. Authorization introduces a hierarchy into the permission structure, which makes misbehavior quicker to pinpoint. It also helps in the systematic allotment and future expansion of permissions.

Software integrity is similar to data integrity: modification of software is allowed only by authorized users. Generally, the company that designed the application is responsible for maintaining software integrity. Preventing unauthorized modification of hardware is referred to as hardware integrity, and this responsibility lies with the cloud provider in all cloud models.

Data leakage and loss of control can compromise data integrity, whereas outsider attacks can harm both data and software integrity. Insider threats can be a primary reason for compromised hardware integrity.

- Availability: Availability refers to resources such as software, hardware, data and network remaining available even under an attack or security breach [2]. It also means making services widely available across multiple geographical regions. Cloud systems are frequently overwhelmed with multiple requests from multiple entities. DDoS attacks and multi-tenancy can affect the availability of services. For example, ELMS Canvas deployed on AWS was unavailable for a certain time due to maintenance. Although unavailability due to scheduled maintenance is extremely rare, it can occur at peak time for a particular client.
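The integrity item in the list above mentions detecting unauthorized fabrication of data. As a minimal sketch of one way this could be done, assuming a keyed hash (HMAC-SHA256) and a secret key shared only by authorized parties, the snippet below tags data and later checks the tag. The source does not prescribe a mechanism; the function names `make_tag` and `is_unmodified` are hypothetical.

```python
import hashlib
import hmac


def make_tag(key: bytes, data: bytes) -> str:
    """Return an HMAC-SHA256 tag for data, computed with a secret key."""
    return hmac.new(key, data, hashlib.sha256).hexdigest()


def is_unmodified(key: bytes, data: bytes, tag: str) -> bool:
    """Check that data still matches the tag produced when it was stored."""
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(make_tag(key, data), tag)


# Illustrative key and record; both are made-up values.
key = b"example-shared-secret"
record = b"balance=100"
tag = make_tag(key, record)
```

Anyone who alters the record without knowing the key cannot produce a matching tag, so `is_unmodified(key, b"balance=900", tag)` returns `False`.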

Section III: Security measures and good practices:

Cloud infrastructure is complicated because of its size and the amount of traffic flowing through it. As mentioned before, the physical boundaries are unclear in cloud infrastructure, so it can be challenging to secure such an environment [4]. The security measures required for a traditional in-house network can differ from those for cloud infrastructure. With slight modification, these measures can be implemented in the cloud [1]. Let’s study these security measures, best practices and a few of the challenges involved:

- Controlled Permissions: It is important that the right users use the right services. A proper hierarchy of permissions and groups should be formed in order to authorize the right users. It is good practice not to make any entity a root user. Unwanted users should be removed from the group or denied access. Permissions should be monitored regularly. Users must be encouraged to change their passwords at least three times a year. Proper permissions must be in place to avoid loss of network, software and data integrity [1].

- Restrictive User policies in cloud: The implementation of access control in the cloud depends on whether the service is SaaS, PaaS or IaaS. In SaaS, everything is controlled by the cloud provider. Under such services, network-based security implementations such as access lists are replaced by access control [1]. Customers can therefore use multi-factor authentication, or federated authentication using a popular social media or email account, to confirm their identity. Customers can also use LDAP with Security Assertion Markup Language (SAML) to obtain access to services. In PaaS, access to servers and the network is the cloud provider’s responsibility [1]. The customer is responsible for providing access to the application and data using authentication techniques. In IaaS, customers are responsible for the majority of the security measures under the access control umbrella. They need to implement access control for the instances, networks, databases and storage they own.

- Mitigating threats of insider: It is difficult to keep tabs on the actions of all employees, so a proper process should be followed when hiring them. Rigorous background checks must be performed on each employee. The flow in which goods and services are delivered must be monitored from time to time. Access to the core network and hardware should be given only to a limited number of people. Proper authorization and biometrics must be implemented for employees, third-party vendors and contractors. All employees should sign an agreement stating that legal action will be taken if they carry out malicious activities during or after their tenure [4]. All the permissions and accounts of a user must be blocked or transferred to a valid user when that user leaves the organization.

- Mitigating the threats of outside attacks: A successful outside attack damages the reputation of the organization and indicates a weak security system, which can incur financial losses. Once the infrastructure of a cloud provider has been attacked, clients tend to think twice before migrating to that provider. The security measures taken in a traditional network, such as firewalls, application security, network segmentation and an Intrusion Prevention System (IPS), can be used in the cloud as well. An IPS and an Intrusion Detection System (IDS) can be used to lower the risk of attacks with similar traffic [1]. A black hole can be created using a honeypot and an authentication, authorization and accounting system [4]. This helps direct unwanted traffic towards the black hole and provides effective network management. Cloud providers as well as clients must continuously monitor the system even when there are no signs of attacks or risks. This helps them narrow down the areas to investigate when attacks occur. Attackers are usually interested in a specific part of the infrastructure; for example, cloud storage is highly prone to attack. Such infrastructure should receive special monitoring. A trusted VPN such as Cisco AnyConnect, with an extra layer of sign-in, must be in place for remote employees. Proper firewall settings must be present between VMs to prevent attackers from accessing multiple machines if one of them is compromised.

- Measures to avoid service disruption: A strong login process can reduce many security threats. A cloud provider must be flexible enough to modify login requirements according to the customer’s needs. Customers should not allow their employees to share login credentials [4]. Employees should use multi-factor authentication, in which a one-time password is sent to their personal device in order to log in. The client must give all network information to the provider so that the provider understands the IP distribution and subnet information. This helps the provider trace a request all the way to its origin. The customer should monitor globally available blacklists and the provider’s blacklisted IP addresses to check that requests are not coming from that pool [4].

- Cloud against DDoS attack: The flexibility of resources in the cloud is an effective way to work against DDoS [1]. Because the HTTP traffic hits the server from different hosts, monitoring tools can be used in the cloud to set alarms for unusual behavior. Using auto scaling, more servers or instances can be launched instantly if there is a sudden increase in traffic. This buys the provider and customer more time to identify the attack and resolve it with minimal downtime.

- Acting against Multi-tenancy risks: Multi-tenancy has made cloud services very cost efficient, but it has also introduced concerns about confidentiality and privacy and the risk of being attacked by another compromised VM on the same hardware. It is important to deal with these threats in order to use this cloud functionality on a large scale. A defense-in-depth approach can be used to counter the risk of attackers [4]. In this approach, different processes, mechanisms or policies are used on each layer. These mechanisms depend on the properties and requirements of the layer [4]. With this approach, the attacker has to deal with each layer separately and defeat a different mechanism on each one. This in turn buys more time for the provider to act against the attack and secure the environment. Defense in depth delays the attack; it does not stop the attack completely.

- Creating on premise like environment in cloud: As mentioned earlier, the provider should be open to incorporating the customer’s security measures into the customer’s cloud domain [4]. Customers can inform the cloud provider if they have any specific security requirements. These requirements must be stated in the Service Level Agreement (SLA). Both parties should understand the SLA properly, so that the customer knows the limitations and the provider knows the customer’s expectations. If the provider can create a customized security environment in the cloud, the fear of loss of control can be reduced.

- Improved system performance: Services in the cloud need to be highly available. The cloud provider and client should sign an SLA that brings both parties onto the same page with respect to the services being offered. The SLA specifies the downtime allowed during a calendar year or during the period for which the agreement is signed. In terms of performance, the system should both find and stop attacks. Active monitoring should be implemented, which can introduce automation into the infrastructure to act against attacks [4]. This can be achieved with the techniques mentioned before, such as an IPS or IDS, which can recognize packet patterns and block packets based on IP address. Furthermore, notification functionality such as Simple Notification Service (SNS) or Simple Mail Transfer Protocol (SMTP) can be introduced into these systems to improve both availability and performance [4].
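The last item above notes that an SLA states the downtime allowed over a calendar year. The arithmetic can be sketched as follows; the 99.9 percent figure and the 365-day year are illustrative assumptions and do not come from the source, and the function names are hypothetical.

```python
HOURS_PER_YEAR = 365 * 24  # assumes a non-leap calendar year


def allowed_downtime_hours(availability_percent: float,
                           period_hours: float = HOURS_PER_YEAR) -> float:
    """Downtime budget implied by an availability target over a period."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability must be between 0 and 100 percent")
    return period_hours * (1 - availability_percent / 100)


def sla_met(observed_downtime_hours: float, availability_percent: float) -> bool:
    """True if the observed downtime stays within the yearly budget."""
    return observed_downtime_hours <= allowed_downtime_hours(availability_percent)


budget = allowed_downtime_hours(99.9)  # roughly 8.76 hours per year
```

Under these assumptions, a provider promising 99.9 percent availability may be down for about 8.76 hours in a year, so 5 hours of outage would meet the SLA and 10 hours would not.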

Section IV: Trusted Third Party:

A trusted third party helps two parties by creating a secure communication channel between them. The two parties trust the third party to provide end-to-end communication security. These services are widely scalable and available, and are built taking into account the techniques and mechanisms used by both parties [2]. The trusted third party reduces the notion that borderline security is lost in cloud computing. In short, the TTP is a mediator that knows both parties and vouches for each of them. The TTP operates by distributing digital certificates to the servers. Various TTPs are connected to each other using validation algorithms to establish their web of trust with Public Key Infrastructure (PKI). PKI is a set of policies or rules used to manage digital certificates, enabling computers to communicate securely. It helps identify public encryption keys and provides assured identification of the other party, thus providing authentication and authorization to improve data confidentiality and integrity [2]. PKI is widely used with protocols that interact with directories. One such protocol is LDAP. The combination of PKI with LDAP is used to distribute certificates, maintain certificate revocation lists (CRLs) and grant private keys [2]. A CRL is a list of certificates that the certificate authority has revoked before their expiry because they can no longer be trusted.

Apart from being used with directories, PKI can be used in Single Sign-On (SSO) as well. With SSO, a user gets access to connected systems with a single set of login credentials. This feature makes a lot of sense in cloud computing, where the infrastructure is massive and many outside systems are connected to each other. PKI with LDAP and SSO provides improved security measures.
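The role of a certificate revocation list described above can be sketched in a few lines. This is a simplified model under stated assumptions, not a real PKI implementation: the `Certificate` class, its fields and the `is_trusted` function are hypothetical, and signature verification is deliberately left out.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Certificate:
    """Hypothetical, stripped-down stand-in for a digital certificate."""
    serial: int
    subject: str
    not_before: datetime
    not_after: datetime


def is_trusted(cert: Certificate, revoked_serials: set, now: datetime) -> bool:
    """Accept a certificate only if it is within its validity period
    and its serial number does not appear on the revocation list."""
    if not (cert.not_before <= now <= cert.not_after):
        return False
    return cert.serial not in revoked_serials


# Illustrative data: one certificate and a CRL containing another serial.
cert = Certificate(
    serial=1001,
    subject="vendor-a.example",
    not_before=datetime(2019, 1, 1, tzinfo=timezone.utc),
    not_after=datetime(2020, 1, 1, tzinfo=timezone.utc),
)
crl = {2002}
check_time = datetime(2019, 6, 1, tzinfo=timezone.utc)
```

With this data `is_trusted(cert, crl, check_time)` is `True`; adding serial 1001 to the CRL makes the same certificate untrusted even though it has not yet expired, which is the case a CRL exists to handle.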

Let’s study the security improvements offered by the TTP in detail:

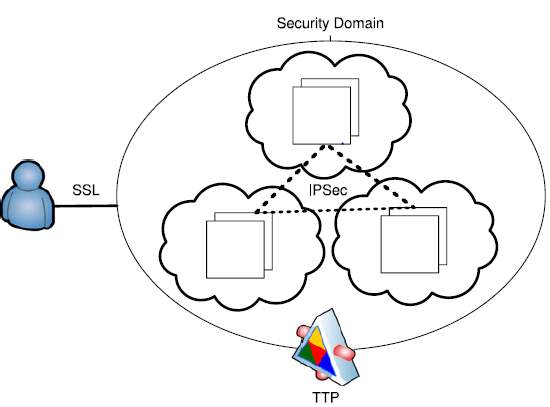

- Better confidentiality: Confidentiality was explained in previous sections, so let’s turn directly to how the TTP helps maintain it. PKI can be used to enable Internet Protocol Security (IPsec) and Secure Sockets Layer (SSL), which are used to provide secure links for communication. IPsec is a network protocol used mainly in VPNs. PKI certificate distribution can help IPsec users authenticate themselves [2]. IPsec can be used to protect almost any type of data transmission flowing from host to host or host to network. SSL is used mainly to create a secure link between a browser and a web server. A few things need to be considered before deciding which of these to use. IPsec is compatible with IP compression, which compresses the packet content. Although IPsec is compatible with almost all types of packets, each device needs to have an IPsec client installed in order to use it. The client differs by operating system, so this can be tedious and time consuming. This is not a requirement with SSL, since it is built into the browser and the majority of cloud services can be accessed through a console that uses SSL. Thus, the writer proposes that SSL be used for host-to-cloud communication and IPsec for host-to-host communication [2].

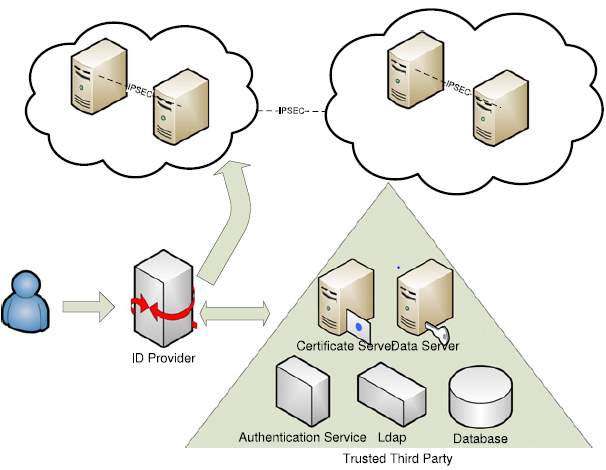

- Enhanced authentication: PKI generates certificates to be given to users. These certificates give users the authority to carry out transmissions. They reduce the risk of unauthorized access and create a strong boundary. PKI used with LDAP and SSO, together with its capability to distribute private keys, is very effective in allowing a user to use multiple devices securely. Having covered SSO, let’s look at Web SSO in order to understand Shibboleth. Web SSO allows users to access a cluster of web servers that require authentication using a single sign-on. Shibboleth is free and open-source software used for federated identity; it connects users to applications within or outside the organization. To set up Shibboleth, user information needs to be fed into it using the TTP LDAP. It uses SAML to transfer the user’s identity. One example of Shibboleth authentication is university students being directed to the university’s Central Authentication System in order to access additional third-party resources such as IEEE magazines or a free HBO subscription. Figure 4 shows the authentication process. The user first contacts the ID provider; he then navigates to the in-house network and authenticates himself [2]. Here, permission is either granted or denied to the user. If permission is granted, the user can proceed to authorization. The TTP, supported by these secure protocols and applications, helps enhance security.

- Separate Security Domains: We have studied the advantages of using PKI with LDAP; now let’s see how federation and forming security domains in the TTP can be good practice. Federation means that different organizations agree on certain policies for granting authentication and authorization [2]. For example, in AWS a user can get a security token from Facebook after entering their credentials there. With this security token, the user can carry out certain tasks in the cloud environment. Applications that trust a common security token in this way form federated clouds. In a federated cloud, different independent internal or external clouds can communicate with each other to serve a certain business need. Figure 5 shows one such formation of security domains, in which federated clouds communicate with each other over IPsec.

Figure 4: Authentication Process [2] Figure 5: Security Domain [2]

- Cryptographic Patterns in Data: TTP uses cryptographic separation, in which data is protected with cryptographic patterns that make it unreadable to outsiders. This can be done in two ways: symmetric cryptography and asymmetric cryptography. In the symmetric process the same key is used to encrypt and decrypt data, whereas in the asymmetric process different keys are used. The combination of the two is called hybrid cryptography; it has the advantages of both methods, so we obtain better confidentiality and integrity [2].

- Certified Access: In cloud computing, users and providers are in different security domains. Access is not granted in the same way as in a traditional environment, where decisions are based on identities fed to the system. Here, decisions are based on the characteristics and attributes that are passed. PKI can be used to distribute X.509 certificates, which bind the identity contained in the certificate to a public key. This helps the various security domains to communicate securely.

Section V: Methodology to prevent DDoS attack.

By now we know that DDoS attacks are carried out to overwhelm services and exhaust their CPU resources and network bandwidth without revealing the identity of the attacker [3]. Such attacks are easier to carry out in a cloud environment than in a single-tenant one, mainly because resources are shared. Of integrity, availability and confidentiality, availability poses the greatest threat to providers and clients, since a loss of availability is easily noticed and can stop the services. DDoS is one of the primary reasons for this risk. Here, we study an approach to mitigating DDoS attacks. The proposed approach uses Cloud Trace Back (CTB) to trace the attacker and Cloud Protector to filter traffic. CTB marks packets using Deterministic Packet Marking (DPM). Let’s study each of these components in detail.

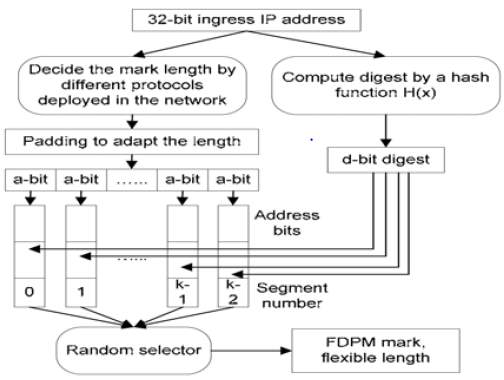

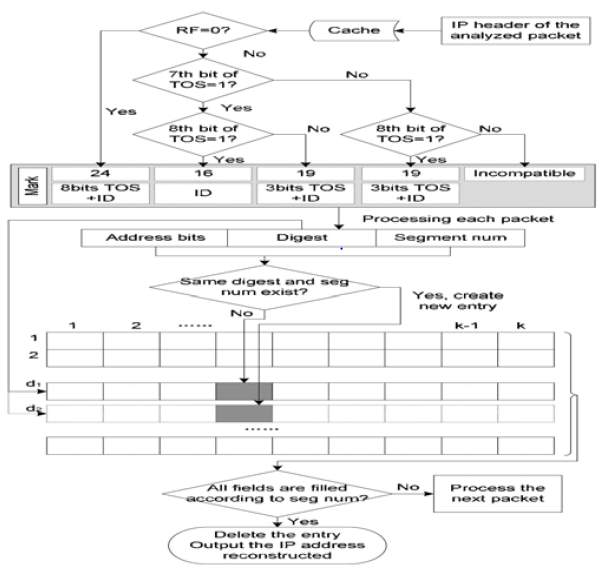

- Cloud Trace Back: To find the source of an attack, it is important to first have access to the Domain Name System (DNS) records. The Start of Authority (SOA) record in DNS stores such information. CTB is placed at the entry point of the cloud network, so that all traffic passes through it. Then, using Flexible Deterministic Packet Marking (FDPM), the type of service, fragment ID and reserved flag fields of the packets are marked; this is called the Cloud Trace Back Mark (CTM). The CTM removes the service provider’s tag and can be reconstructed during an attack to find the origin [4].

Let’s see how CTB can help to identify the source of an attack. When an attack is discovered, the attacker keeps sending SOAP requests to the CTB. CTB marks these requests and sends them on to the server, i.e. the victim. The victim then requests reconstruction of the marks to find the origin. If the origin is known and the message is normal, the traffic is served. Thus, CTB can only trace back the origin; it cannot stop the DDoS. To filter the traffic, we use Cloud Protector. Figure 6 shows a simplified version of the architecture, in which Cloud Protector and CTB are placed in front of the cloud network. All requests pass through these components, which is what provides the mitigation functionality.

Figure 6: Simplified Architecture [3]

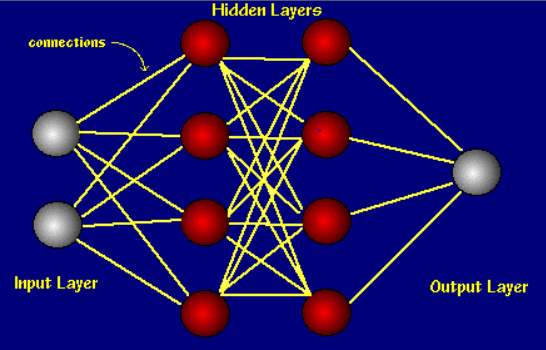

- Cloud Protector: Cloud Protector is a back-propagation neural network (NN) [3]. To understand the back-propagation algorithm, let’s first look at what a neural network is. A neural network consists of interconnected processing elements arranged in layers: an input layer, one or more hidden layers and an output layer. Each connection between the layers has a weight. In some cases an additional term, called the bias, is included when evaluating the sum. A threshold is set for the network, and the final decision is made if the weighted sum of the inputs is greater than the threshold. The sophistication of the decisions depends on the complexity of the cases considered while designing the layers.
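The threshold decision described above can be sketched for a single processing element. This is only an illustration, not the Cloud Protector implementation from [3]: the function name `neuron_fires` and the input and weight values are assumptions chosen for the example, and the 0.1 threshold simply reuses the value reported later in the results.

```python
def neuron_fires(inputs, weights, bias, threshold):
    """Return True when the weighted sum of the inputs plus the bias exceeds the threshold."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return total > threshold


# Hypothetical values: three input features and one weight per connection.
inputs = [1.0, 0.0, 1.0]
weights = [0.5, 0.25, -0.25]

# Weighted sum is 0.5 + 0.0 - 0.25 = 0.25, which is above the 0.1 threshold.
decision = neuron_fires(inputs, weights, bias=0.0, threshold=0.1)
```

A full network repeats this computation for every element in every layer, usually with a smooth activation function in place of the hard comparison so that the weights can be trained.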

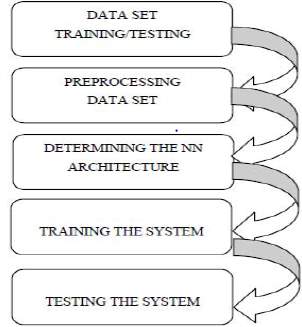

Figure 8: Neural Network [6] Figure 9: Steps to implement Cloud Protector [3]

Each such network has a cost function associated with it, and the back-propagation algorithm tells us how fast the cost changes when the weights and biases change. In short, back-propagation computes the partial derivatives of the cost function with respect to each weight and bias. Using the algorithm, it becomes easier to study and adjust the behavior of the network quickly. Now let’s analyze the five phases mentioned in Figure 9.

- Building the Training Dataset: The training dataset is used to train the neural network on a variety of cases, and the network can be run repeatedly while its weights are varied. The trained network is then checked against a validation dataset to make sure it is not overfitting. Building and cleaning the dataset is a complicated task and is done using real traffic, sanitized traffic or simulated traffic [3]. Testbed networks are widely used to generate traffic and test the system. The traffic can be generated by mimicking another network or by using a traffic generator such as Harpoon or Swing. Such traffic contains no confidential information and is safe to use. To avoid the cost of traffic generation and simulation, the writer proposes using the DARPA 1999 attack detection evaluation dataset. After the network has been checked against these datasets, the test dataset is used to evaluate the final solution and to find the actual predictive power of the network.
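The separation into training, validation and test datasets can be sketched as follows. This is a minimal illustration using only the Python standard library; the function name `split_dataset`, the 60/20/20 proportions and the fixed seed are assumptions for the example and are not taken from [3].

```python
import random


def split_dataset(records, train=0.6, validation=0.2, seed=0):
    """Shuffle the records and cut them into training, validation and test parts."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split repeatable
    n_train = round(len(shuffled) * train)
    n_validation = round(len(shuffled) * validation)
    training_set = shuffled[:n_train]
    validation_set = shuffled[n_train:n_train + n_validation]
    test_set = shuffled[n_train + n_validation:]  # whatever is left over
    return training_set, validation_set, test_set


# Hypothetical stand-in for 100 labelled traffic records.
training_set, validation_set, test_set = split_dataset(range(100))
```

Keeping the test part untouched until the end is what lets it measure the predictive power of the final network rather than how well the network memorized the training traffic.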

- Standardizing the Dataset: Learning algorithms benefit from standardized datasets. Preprocessing brings the dataset into this form so that the algorithm can work efficiently, with a minimum of errors caused by incompatible data. The numeric and symbolic content of the dataset is converted to numeric form and is then used as input to the neural network [3]. Regular expressions, pandas, NumPy and scikit-learn are popular Python tools for preprocessing and standardizing datasets.
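As a rough illustration of this preprocessing step, the sketch below converts a symbolic column to integer codes and rescales a numeric column to zero mean and unit variance. It uses only the standard library as a stand-in for what pandas or scikit-learn would normally do; the helper names and the sample values are assumptions, not data from [3].

```python
from statistics import mean, pstdev


def encode_symbols(values):
    """Map each distinct symbolic value to an integer code, in order of first appearance."""
    codes = {}
    return [codes.setdefault(value, len(codes)) for value in values]


def standardize(column):
    """Rescale a numeric column to zero mean and unit (population) standard deviation."""
    mu = mean(column)
    sigma = pstdev(column)
    if sigma == 0:
        return [0.0 for _ in column]  # a constant column carries no information
    return [(x - mu) / sigma for x in column]


# Hypothetical traffic features: protocol name and packet size in bytes.
protocols = ["tcp", "udp", "tcp", "icmp"]
packet_sizes = [60, 1500, 60, 84]

protocol_codes = encode_symbols(protocols)
scaled_sizes = standardize(packet_sizes)
```

Integer codes impose an artificial ordering on the symbols, so a one-hot encoding is often preferred in practice; the simpler form is used here only to keep the example short.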

- Finalizing the NN architecture: As mentioned before, the sophistication of the network depends on the number of hidden layers. The first column (the input layer) and the last column (the output layer) are fixed, but the number of hidden layers can be varied. There is no mathematical procedure for fixing the number of layers and nodes. Many configurations are tested and the one that gives the best readings is selected.

- FDPM Marking Scheme:

- Encoding Process: A total of 25 bits are available to store the mark: 8 TOS bits, 16 fragment ID bits and 1 reserved flag bit. The reserved flag is used to indicate whether or not the TOS field is available for storage. If the TOS field is unavailable, only 16 bits are used for the mark.

Figure 10: FDPM Marking Process [3] Figure 11: FDPM Reconstruction Process [3]

FDPM can adjust the mark length depending on the protocol. The source IP address in a packet has 32 bits, but the mark can store at most 25 bits, so segment numbers are used to overcome this limit: several segments, carried in separate packets, together hold the information for one source IP address [5]. To identify, during reconstruction, which packets carry marks for the same source address, a hash of the source address, known as the digest, is added to the mark. The length of the mark is determined by the protocol and can take one of three values: 24 bits, 19 bits or 16 bits. Figure 10 shows the encoding process of the mark. The ingress address is divided into k segments, each carried in its own IP packet together with the digest. Padding is added so that the source IP address divides equally. For example, if k=5 then 3 zeroes are added at the end, making 35 bits, which are divided into 5 segments of 7 bits each.
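The padding and segmentation arithmetic in the k=5 example can be sketched as follows. This shows only the address-splitting step under stated assumptions: the function name `split_address` is hypothetical, and the digest and the exact bit layout of the mark defined in [5] are deliberately left out.

```python
import ipaddress

ADDRESS_BITS = 32  # length of an IPv4 source address


def split_address(ip, k):
    """Split an IPv4 address into k equal-length bit segments, zero-padded at the end."""
    value = int(ipaddress.IPv4Address(ip))
    segment_bits = -(-ADDRESS_BITS // k)  # ceiling division: 7 bits when k = 5
    padded_bits = segment_bits * k        # 35 bits when k = 5
    padded = value << (padded_bits - ADDRESS_BITS)  # append the zero padding
    mask = (1 << segment_bits) - 1
    segments = []
    for index in range(k):
        shift = padded_bits - (index + 1) * segment_bits
        segments.append((index, (padded >> shift) & mask))
    return segment_bits, segments


# Hypothetical ingress address, split into five 7-bit segments.
segment_bits, segments = split_address("192.168.1.10", 5)
```

Each `(index, value)` pair corresponds to one segment number and its address bits; in the real scheme each pair would travel in a separate packet alongside the digest.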

- The Reconstruction Process: The reconstruction process has two steps: mark recognition and finding the source IP address [3]. During reconstruction, the IP packets are stored in a cache for some time, because the reconstruction speed is lower than the packet arrival rate [5]. If the control field, i.e. RF, is 0, the mark is 24 bits long; if RF is 1 and the 7th and 8th TOS bits are both 1, the mark length is 16 bits; otherwise it is 19 bits. The address recovery process uses a linked-list table in which the number of rows is variable and the columns correspond to the segments the source IP address used during the encoding process [3]. Entries are created in the table according to the digest, and each digest corresponds to one source IP address. When all the columns in a row are filled, the source address has been obtained, and that entry is deleted from the recovery table. Figure 11 shows a flow diagram of this process.
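The address recovery step can be sketched as a small table keyed by digest. This is a simplified illustration, not the algorithm from [3] or [5]: it uses a Python dictionary instead of a linked list, the class name `RecoveryTable` and the digest string are hypothetical, and digest collisions and cache expiry are ignored.

```python
import ipaddress


class RecoveryTable:
    """One row per digest, one column per segment; a full row yields a source address."""

    def __init__(self, k, segment_bits, address_bits=32):
        self.k = k
        self.segment_bits = segment_bits
        self.address_bits = address_bits
        self.rows = {}  # digest -> list of k segment slots

    def add_mark(self, digest, index, segment):
        """Store one segment; return the source IP once every column of the row is filled."""
        row = self.rows.setdefault(digest, [None] * self.k)
        row[index] = segment
        if any(slot is None for slot in row):
            return None  # still waiting for more packets with this digest
        del self.rows[digest]  # the entry is removed once the address is recovered
        joined = 0
        for slot in row:
            joined = (joined << self.segment_bits) | slot
        padding = self.k * self.segment_bits - self.address_bits
        return str(ipaddress.IPv4Address(joined >> padding))


# Hypothetical marks for 10.0.0.1 with k = 5 segments of 7 bits, arriving out of order.
table = RecoveryTable(k=5, segment_bits=7)
padded = int(ipaddress.IPv4Address("10.0.0.1")) << 3
marks = [(i, (padded >> (7 * (4 - i))) & 0x7F) for i in range(5)]
results = [table.add_mark("digest-a", i, segment) for i, segment in reversed(marks)]
```

Only the call that fills the last empty column returns an address; every earlier call returns `None`, which mirrors the packets waiting in the cache while the row is incomplete.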

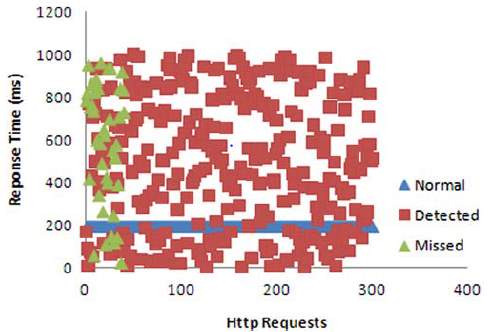

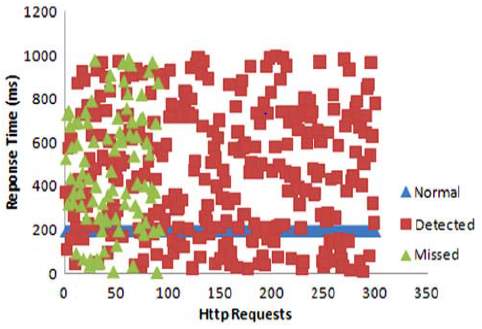

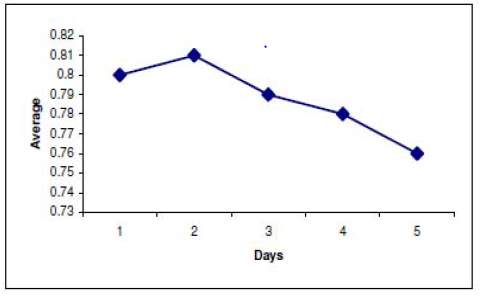

- Results obtained from this methodology: The results obtained by the writer are illustrated in the scatter diagrams in Figures 12 and 13. On the training dataset, 91% of the attack traffic was detected and 9% was missed, compared with 88% detection on the testing dataset. These results are for 4 neuron layers, a learning rate of 0.2, a momentum of 0.6 and a threshold of 0.1 [3].

Figure 12: Result of training dataset [3] Figure 13: Result of testing dataset [3]

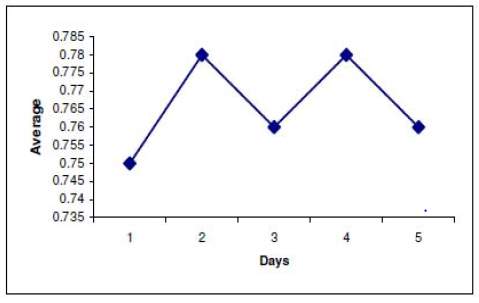

Against the DARPA (KDD99) dataset, 75-79% of legitimate traffic and 76-81% of attacks were detected [3].

Figure 14: Legitimate Traffic Detected [3] Figure 15: Attacks Detected [3]

Section VI: Conclusion

Cloud computing has no doubt brought the IT industry a number of benefits, but it is not for everyone. Security is the first thing clients think of when moving to the cloud. The security measures in this paper will reduce the threats in the environment but will not make it 100% secure. The paper has highlighted the major issues that customers consider before migrating to the cloud. Threats to availability, integrity and confidentiality are addressed using a TTP along with PKI, SSO and LDAP. Machines on the same hardware need to be properly isolated with firewalls to solve the issue of multitenancy. Provider and client need to understand the SLA properly and stick to it. DDoS attacks have been haunting providers for a long time. The proposed solution, which uses CTB and Cloud Protector, can reduce the risk of DDoS to a great extent. The results show that about 91% and 88% of the attacks were detected on the training and testing datasets respectively. Cloud providers need to follow a proper hiring process to decrease the number of insider attacks. Proper software patching, security groups and network ACLs should be in place to protect the application. Finally, security is the primary factor to be considered when migrating to the cloud.

References:

[1] F. Sabahi, “Cloud computing security threats and responses,” 2011 IEEE 3rd International Conference on Communication Software and Networks, Xi’an, 2011, pp. 245-249. doi: 10.1109/ICCSN.2011.6014715

[2] D. Zissis and D. Lekkas, “Addressing cloud computing security issues,” Future Generation Computer Systems, vol. 28, no. 3, 2012, pp. 583-592, ISSN 0167-739X. https://doi.org/10.1016/j.future.2010.12.006

[3] B. Joshi, A. S. Vijayan and B. K. Joshi, “Securing cloud computing environment against DDoS attacks,” 2012 International Conference on Computer Communication and Informatics, Coimbatore, 2012, pp. 1-5. doi: 10.1109/ICCCI.2012.6158817

[4] A. Behl, “Emerging security challenges in cloud computing: An insight to cloud security challenges and their mitigation,” 2011 World Congress on Information and Communication Technologies, Mumbai, 2011, pp. 217-222. doi: 10.1109/WICT.2011.6141247

[5] Y. Xiang, W. Zhou and M. Guo, “Flexible deterministic packet marking: An IP traceback system to find the real source of attacks,” IEEE Transactions on Parallel and Distributed Systems, vol. 20, no. 4, 2009, pp. 567-580.

[6] M. Caudill, “Neural Network Primer: Part I,” AI Expert, February 1989.
