User Modelling Framework for Research-Paper Recommender Systems

Info: 11994 words (48 pages) Example Research Project

Published: 14th Apr 2021

Tagged: Information Systems

Table of Contents

3.1 Phase 1: Foundational Research

3.2 Phase 2: Formulation of a User Model and Data Capture Interface

3.3 Phase 3: Implementation of the User Model and Data Capture Interface

3.4 Phase 4: Evaluation of the User Model and Data Capture Interface

1.0 Introduction

Research has shown that students researchers have difficulty in finding relevant research materials, this is amplified by their inability to find a manageable area of interest and the overwhelming abundance of available resources (Head, 2007). To mitigate this and to provide researchers with current peer-reviewed and credible resources, a research paper recommender system should be used. However, the present landscape of research paper recommendation systems research is plagued with the inability to reproduce the findings of other researchers (Beel, Gipp, Langer, & Breitinger, 2016); and thus impossible to find the most compelling approaches to implementing such a system. The factors that contribute to this are (Beel et al., 2016; Beel, Langer, et al., 2013):

- Neglect of User Modelling

- Focus on Accuracy

- Translating Research into Reality

- Persistence and Authorities

- Cooperation

- Information Scarcity

Table 1: Tiers of Research-Paper Recommender System Shortcomings

| Category | Shortcoming | Level |

| Implementation | Neglect of User Modelling | Researcher |

| Evaluation | Focus on Accuracy | Researcher |

| Publication | Persistence and Authorities

Cooperation Translating Research into Reality Information Scarcity |

Community |

Through analyzing the causes of these factors we were able to we were able to identify the levels at which these shortcomings could be potentially solved.

Of these shortcomings, the one that is fundamental to the field is user modelling, as it is the process that is used to identify the user’s informational needs (Ricci, Rokach, & Shapira, 2011). The more accurate the virtual representation of a user at any given point in time, the more useful that the recommendation provided to a researcher will be.

1.1 Background

Beginning in 1665, the total volume of scientific research papers published have surpassed the 50 million mark in 2009, and approximately 2.5 million new papers are published yearly (Jinha, 2010). This is due to the approximately 28,100 active scholarly peer-reviewed journals and the rising number of fake scientific journals which produce high volumes of subpar research (Boon, 2016). This overwhelming abundance of scientific papers has lead researchers to skim through as much as possible and be the judges on what is deemed appropriate. This is made even more difficult for those who have no experience doing research as they quickly become frustrated.

As the volume of research papers grows in a particular field, it becomes highly impractical for the researcher to find relevant material in the deluge of resources. This is because it is humanly impossible to review all of the literature, as more is continually being created.

To alleviate this burden, recommendation systems are being used to make predictions as to what papers a researcher may be interested in to guide his research process. Research paper recommender systems are a niche of the recommender systems field, as most recommender systems are consumer focused in the area of movies, books, music and other consumer items and services.

Recommendation systems are not a one size fits all type of solution as each type of recommender system need to be tailored to what is trying to be accomplished and the resources that are available such as CPU power, memory, disk storage and network traffic (Beel et al., 2015). The two most widely used approaches are content based filtering (CBF) and collaborative filtering (CF).

In the field of research paper recommender systems, content-based filtering is the most widely used and researched recommendation approach (Beel et al., 2016). The content-based filtering method is reliant on user preferences and item descriptions. The system generates a profile of what the user likes, rated and interacted with and it recommends similar items that it deems most relevant.

Collaborative filtering (CF) is the second most widely used approach in the field of research paper recommender systems (Beel et al., 2016). This method is based on the assumption that persons that agree on something in the past will continue to do so in the future. Collaborative filtering is reliant on large amounts of user data which it uses to find other users with similar preferences and behaviours. This approach does not rely on a machine’s understanding of an item but rather makes its predictions based on the similarity of users and their interactions with the system.

Hybrid recommender systems are also a very promising approach as systems that utilises one will be able to reap the benefits of the systems that inspired its design without the drawback of those individual systems. However, due to the problems faced by the research paper recommender community, it makes it increasingly difficult to determine whether an approach is right, has promise or whether it will be the new state-of-the-art.

1.2 Statement of Problem

Research in the area of recommender systems for research papers have become convoluted with unreproducible research findings (Beel et al., 2016; Beel, Langer, et al., 2013) due to the shortcomings within the field and therefore, modern systems are not utilising such research.

The neglect of user modelling in the area of research paper recommender system has disregarded the wealth of unique information generated by an individual. To mitigate this, a user model framework should be designed/adapted to suit the quirks of research-paper recommenders and researchers. Doing so will generate a more accurate virtual representation of a user, their needs and goals as it relates to their research.

1.2.1 Current Situation

It is currently not possible to determine the most effective recommendation approaches to used when developing research paper recommender systems as there is no baseline for researchers to compare their work to (Beel et al., 2016). It is also tough for researchers in the field to test their findings effectively as they usually don’t have access to functional research paper recommender systems and they rely on offline testing in most cases (Beel et al., 2016). Offline testing methods are unsuitable in many cases (Beel, Genzmehr, Langer, Nürnberger, & Gipp, 2013) as they aren’t reflective of real world use.

`

1.2.2 Ideal Situation

The ideal situation will be to deployed solution based on a research paper recommender framework to mitigate the current problems, and that will be used as a data gathering apparatus. If it is open to researchers globally, it will allow them to share their research freely with the rest of the world and create the potential to capture real world user interaction. As an open-source project, a solution based on the framework, become a self-sustaining ecosystem as researchers from all fields can contribute their papers freely and the data generated by all users’ interaction with the system will be used to improve research paper recommenders. This will be done through improvements in the investigation and testing approaches employed in the field by utilising data captured using the framework.

2.0 Literature Review

Bollacker et al. introduced the first research paper recommender system as part of the CiteSeer project (Bollacker, Lawrence, & Giles, 1998) which helped to automate and enhanced the process of finding relevant research papers and paved the way for the systems that followed. CiteSeer, however at the time it did not focus on gathering statistical information (Bollacker et al., 1998). The field has since grown to over 200 publications (Beel et al., 2016) based on research paper recommender systems. The field is also not without drawback as it is more plagued with problems than the other subfields of the recommender systems. This is due to a lack of standardisation across the areas that are required to enable grow and to give a sense of direction to as to where researchers should focus their efforts.

Beel, Gipp, Langer, & Breitinger (2016), who are the developers of Docear, surveyed over 200 papers and resources published during 1998-2013 that were related to research paper recommender systems. Firstly, they found that several evaluations methods had limitations because they were based on datasets that were cleaned using criteria set by the researchers, they were few participants in user studies, or did not use appropriate baselines. Secondly, some authors provided very little information about their algorithm design, and as such, it was difficult for other researchers to re-implement. This led to different implementations of the approaches, which could have caused variation in the results. Beel, Gipp, Langer, & Breitinger (2016) speculated that slight changes in datasets, algorithms, or user populations inevitably lead to a significant discrepancy in the performance of the approaches. Researchers should not be allowed to set forth the criteria to clean their dataset as it could lead to bias, to have the system perform more favourably. Instead, the community should determine the criteria so as to begin the process of establishing a reasonable baseline. Researchers should only specify their criteria when testing and optimising their algorithmic approach.

The field of research paper recommender systems is comparable to a game of Jenga, with each block that makes up the tower representing a different aspect (i.e. evaluation methods, datasets, architecture, etc.) of the overall system. Currently, most researchers have a very unstable tower and continuing the analogy the game must be played in reverse for the field to make significant progress in the near future as the gaps need to be filled.

The success of recommender system research is dependent on the evaluation approaches and the ability of the researchers to propose effective methods of establishing a baseline, not just as a means of evaluating the effectiveness of their work but as a means for other researchers to do so as well. This could have the secondary benefit of guiding and identifying the most promising research areas and algorithmic approaches. To thoroughly evaluate the appropriateness of evaluation methods, they are a few essential requirements which are, a sufficient number of study participants, and a comparison of the novel approach against one or more state-of-the-art approaches (Rossi, Lipsey, & Freeman, 2003). Of the 96 recommendation strategies identified from the resources surveyed by Beel, Gipp, Langer, & Breitinger (2016), they determined that their authors did not evaluate 21 (22%), or the methods were questionable and were insufficiently described to be understandable or reproducible. 53 (71%) were assessed using offline evaluations, 25 (33%) using quantitative user studies, 2 (3%) using qualitative user studies, and 5 (7%) using an online evaluation.

The role of an evaluation method plays the most integral part of the success of an algorithmic approach used by research-paper recommenders. Each role has its set of benefits and drawbacks. However, there seems to be a misconception by the community as a whole, that the best approach is an offline evaluation. Offline evaluations were initially meant to identify some promising recommendation strategies (Matejka, Li, Grossman, & Fitzmaurice, 2009; Rashid et al., 2002; Ricci et al., 2011; Shani & Gunawardana, 2011). The community’s dependency on offline evaluations has led to a disconnect between algorithms that perform well in the lab against ones that satisfy user’s needs. Said et al. consider “on-line evaluation [as] the only technique able to measure the true user satisfaction” (Said et al., 2012). Rashid et al. observed that biases in the offline datasets may cause bias in the evaluation (Rashid et al., 2002). The reason for the criticism is that the offline evaluation is more focus on accuracy. This is not a bad thing, but the way it is utilised is. The human factor is of utmost importance, and it should play an integral part in the evaluation process as not all humans are the same and may react differently to a system’s recommendations. For example, some users may not need a recommender system to find relevant research material easily, whereas some users may not know where to begin. Therefore, the system should not display the same resources to the users even if it is based on the same query. There is also criticism due to the assumption that offline evaluation could predict an algorithm’s effectiveness in online evaluations or user studies (Beel et al., 2016). Researchers have shown that results from offline evaluations do not necessarily correlate with results from user studies or online evaluations (Cremonesi, Garzotto, Negro, Papadopoulos, & Turrin, 2011; Cremonesi, Garzotto, & Turrin, 2012; McNee et al., 2002). Approaches that are useful in offline evaluations are not necessarily practical in real-world recommender systems (Beel et al., 2016). This human element can only be truly tested in an online environment.

The second evaluation used are user studies. This assessment method is done to measure user satisfaction through explicit ratings. In this approach, the user is given recommendation results generated by different recommendation approaches, and the approach with this highest average rating is considered most effective (Ricci et al., 2011). They are two forms of user studies, quantitative and qualitative. However, only the quantitative approach is beneficial to the overall improvement of the recommender system approach, as users rate individual aspects of the system and their overall satisfaction with the recommendations. As such, qualitative feedback is rarely used as an evaluation method for research paper recommendation systems (Stock et al., 2013; Stock et al., 2009). This type of user study may, however, be useful in getting a sense of the usefulness of the system and how users react to it.

Beel, Gipp, Langer, & Breitinger (2016) found that all the recommendation approaches that relied on a user study had participants who were aware of they were a part of the survey. This has the potential to affect user behaviour and thus the evaluation results (Gorrell, Ford, Madden, Holdridge, & Eaglestone, 2011; Leroy, 2011) as compared if they were obvious to the fact they were participating in a study. When users are unaware that they are involved in research and thus oblivious to the nature of the survey, they may be far more likely to generate data that will enhance recommendation system prediction. It will be far more useful to have the participants of these user studies also to be engaged in research themselves as they will be able to quantify how useful the recommender approach is to their individual research projects and not just how useful it is in recommending papers in the area of interest. The outcome of user studies often depends on the questions asked by the researcher (Beel et al., 2016) and the number of required participants, to receive statistically significant results (Loeppky, Sacks, & Welch, 2009).

Online evaluations are the final assessment approach that is being utilised by research-paper recommenders. This method was first employed by the advertising and e-commerce industry and is very reliant on by click-through rates (CTR) which are the ratio of clicked recommendations to displayed recommendations. This is used to measure the acceptance rate of recommendations (Beel et al., 2016). The acceptance rate is typically interpreted as an implicit measure for the user and the assumption behind this is that when a user clicks, downloads, or purchases a recommended item that the user likes the item. CTR is not always reliable metric for online evaluation of recommender systems should be used with precaution (Zheng, Wang, Zhang, Li, & Yang, 2010). Using online evaluations requires significantly more time and resources than offline evaluations, as they are more expensive, and can only be conducted by researchers who have access to a real-world recommender system (Beel et al., 2016).

Beel, Gipp, Langer, & Breitinger (2016) found that even though there is active experimentation in the field with a large number or evaluations being performed on research paper recommender systems, many researchers did not have access to a real-world system to evaluate their approaches. In stark contrast to this, researchers who have access to real-world research paper recommender systems often do not make use of it to conduct their evaluations. They could have chosen to use an offline evaluation method because of the time savings it brought from the moment the evaluation began to the time they got back the result. Using an offline evaluation could achieve benefits when it comes to tweaking and optimising their algorithmic approach before utilising a standardised online method to evaluate their approach against other researchers and thus establishing a benchmark. Using an offline method to set such a reference point should not be attempted as it will not be reflective of real world use.

Other factors that contribute to whether an algorithmic approach is a success or not is very dependent on is coverage and the baseline. Coverage refers to the number of papers in the recommender’s database that might potentially be proposed by the system (Good et al., 1999; Herlocker, Konstan, Borchers, & Riedl, 1999). As such, a high coverage is an important metric to judge the usefulness of a recommender system, because it increases the number of recommendations a user can receive.

The most optimal use of these evaluation approaches might be to use a tiered approach to testing of the recommender systems. The assessment procedure will begin with an offline approach to identify the promising algorithmic approaches identified by the researchers. This will then be followed by the user studies to get quantitative feedback from the participants. Based on the feedback from the user studies the researchers will decide whether to go back to the offline method for further changes and optimisations. When both the offline and user studies return positive results, the researchers should move to the final phase which would be online testing. This last phase of the tiered evaluation model should conclude with the novel approaches being compared against a baseline representative of the state-of-the-art methods. This way it is possible to quantify whether, and when, an innovative approach is more efficient than the state-of-the-art and by what margin (Beel et al., 2016). By using the tiered evaluation model the weaknesses of each model individually is strengthened by each other.

Beel, Gipp, Langer, & Breitinger (2016) suggested that the publicly available architectures and datasets would be beneficial to the recommender system community. For the most part, standardised datasets are available for most areas of recommender systems such as movies, music, books and even jokes. However, there is no such dataset for research papers. Datasets enable the evaluation of recommender systems by allowing researchers to evaluate their systems with the same data. CiteULike and Bibsonomy which are academic services, published datasets that eased the process of researching and developing research paper recommender systems. Although the datasets were not intended for recommender system research, they are frequently used for this purpose (Huang et al., 2012; Jomsri, Sanguansintukul, & Choochaiwattana, 2010). CiteSeer also made its corpus of research papers public (Bhatia et al., 2012) and researchers have frequently used this dataset for evaluating research paper recommender systems (Huang et al., 2012; Torres, McNee, Abel, Konstan, & Riedl, 2004). Jack et al. also compiled a dataset based on the reference management software Mendeley (Jack, Hristakeva, Garcia de Zuniga, & Granitzer, 2012). Due to the lack of a standardised dataset, recommendation approaches perform differently (Gunawardana & Shani, 2009). This has significantly contributed to the differing results of researchers and needs to be addressed urgently.

They are many primary components that contribute to the designing of a recommendation algorithm. The most important of which is the user modelling process. User modelling is used to identify a user’s information needs (Ricci et al., 2011). An effective recommender system should be able to automatically infer the user’s needs based on their interactions with the system (Beel et al., 2016). When inferring information automatically, a recommender system should determine the items that are currently relevant for the user-modeling process (Tsymbal, 2004) as not all of the data generated by the usage of the system will be useful. When user modelling is not properly done, it leads to a problem called “concept drift” in which the system is unable to adapt to changes in user behaviour over time and results in less and less useful predictions. According to Beel, Gipp, Langer, & Breitinger (2016) the research paper recommender community widely ignores “concept drift.

Beel, Gipp, Langer, & Breitinger (2016) have acknowledged that user satisfaction might depend not only on accuracy but also on factors such as privacy, data security, diversity, serendipity, labelling, and presentation. Recommender systems researchers outside of the research paper community seem to agree. They also noted that most of the real-world recommender systems apply simple recommendation approaches that are not based on recent studies. The reason for this may be because that there is no way to tell which approaches are most promising and as such the developers of the systems do not invest in the resources to make the upgrades.

User Models

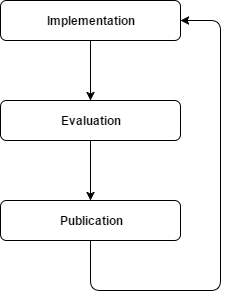

Figure 1: Research-Paper Recommender System Lifecycle

Recommender systems can be abstracted to two models: a user model and an item model (Zanker & Jessenitschnig, 2009). The user model provides all the information that is used to personalise the user’s experience by capturing the user’s interaction with items in the user profile (Jawaheer, Weller, & Kostkova, 2014).

Recommender systems rely on user ratings to continually determine the user interest in a particular item. These ratings are an expression of user preference and consist of both explicit and implicit data based on the user’s interaction with the system. The system infers user preferences from the use of clickstream data which is generated by the sequence of links they click on (Jawaheer et al., 2014).

User feedback is an indispensable part of most recommender systems as it helps generate a virtual representation of the user by understanding of the user. However, several recommendation algorithms do not account for the variability in human behaviour and activity (Jawaheer et al., 2014).

The recommender systems literature shows that ratings are the de facto method of generating explicit user feedback (Jawaheer et al., 2014) and research is focused on explicit user feedback rather than implicit user feedback (Hu, Koren, & Volinsky, 2008). These problems need to be addressed. The reason is because various forms of explicit user feedback are hard to quantify when they are represented as numerical values, due to each individual having different internal scales on which they rate items of interest, and should instead be modelled as ordinal data (Koren & Sill, 2011). Research done by (Amatriain, Pujol, & Oliver, 2009; Cosley, Lam, Albert, Konstan, & Riedl, 2003; Hill, Stead, Rosenstein, & Furnas, 1995) showed that explicit user feedback was not 100% accurate. Future recommender systems will therefore need to be less intrusive, thus relying more on implicit user feedback, which is inferred from user behaviour, to provide recommendations (Adomavicius & Tuzhilin, 2005).

Information is growing so rapidly that there is continual demand for improved search technology (Oard & Kim, 2001). Due to this, Oard & Kim (2001) developed a framework that integrated diverse set of techniques into a coherent whole. They based their framework on the way searching was done; where the informational need of the user was modelled along with the information content, using a chosen technique. In order to find relevant information, the information need model would be matched against available information content models.

Table 2: Matrix of Observable Behaviours (Oard & Kim, 2001)

| Behavior Category | Scale | ||

| Segment | Object | Class | |

| Examine | View Listen | Select | |

| Retain | Bookmark

Save Delete Purchase |

Subscribe | |

| Reference | Copy/Paste

Quote |

Forward

Reply Link Cite |

|

| Annotate | Mark up | Rate

Publish |

Organize |

The framework includes four categories of potentially observable user behaviours (Oard & Kim, 2001):

- Examine – information systems often provide brief summaries of several promising items, so users can determine whether further examination of the item will be of use to them, in satisfying their needs.

- Retain – this suggest to some degree that the user intends to make future use of an object.

- Reference – each activity has the effect of establishing some form of link between two objects.

- Annotate – these are actions that intentionally add to the value of an information object.

The scaling of the framework is broken into three levels (Oard & Kim, 2001):

- Segment level – includes operations whose minimum scope is a portion of an object

- Object level – includes behaviours whose minimum scope is an entire object

- Class level – includes behaviours whose minimum scope includes more than one object

It is crucial to identify the characteristics of the two forms of user feedback in recommender systems so as to make the right design decisions when having to choose between explicit user feedback and implicit user feedback or a combination (Hu et al., 2008). To accomplish this, Jawaheer et al. (2014) proposed a classification framework to determine how user feedback should be utilised recommender systems. They classified recommender systems by utilising explicit and implicit user feedback as key indicators for modelling users’ preferences.

Table 3: Properties of User Feedback in Recommender Systems (Jawaheer et al., 2014)

| Properties | Explicit User Feedback | Implicit User Feedback |

| Cognitive effort | Yes | No |

| User model | Preference | Confidence |

| Scale of measurement | Ordinal | Ratio |

| Domain relevance | Irrelevant | Relevant |

| Sensitivity to noise | Yes | Yes |

| Polarity | Positive and negative | Positive |

| Range of users | Subset of users | All users |

| User transparency | Yes | No |

| Bias | Power users | No bias |

Cognitive Effort: Acquiring implicit user feedback is seamless, whereas explicit user feedback. In a study of MovieLens users, Harper et al. [2005] found that users rate movies for a variety of reasons, namely to make a list for themselves, to influence others, for their enjoyment of the activity, or because they think that it improves their recommendations. Improving our understanding of the explicit behavior of users in recommender systems can help us improve recommendations.res some cognitive effort [Gadanho and Lhuillier 2007].

User Model: . In this case, we highlight only one aspect of the user model, namely how we represent user feedback in the recommender system. Hu et al. [2008] argued that the numerical values of explicit user feedback denote user preference, whereas numerical values of implicit user feedback denote confidence.

Another school of thought is that preference is complex and converting user preference to a numerical value is nontrivial [Lichtenstein and Slovic 2006]. There is substantial literature in psychology on the notion of preference. According to one school of thought, users do not really know what they prefer; instead, they construct their preferences as the situation evolves [Lichtenstein and Slovic 2006].

Scale of Measurement: Explicit feedback is usually expressed as ratings (e.g., Likert scale) that have an ordinal scale [Field and Hole 2003]. Implicit feedback is typically measured as some form of counting of repeatable behaviors

Thus, typically implicit user feedback will have a ratio scale of measurement. This makes it difficult to include explicit and implicit feedback in the same user model [Liu et al. 2010]. Furthermore, having different scales of measurement makes it impossible to compare explicit and implicit feedback.

Domain Relevance: Interpretation of explicit user feedback is irrelevant to the domain under study, whereas domain knowledge is essential to interpret implicit user feedback.

Sensitivity to Noise: Intuitively, by its nature, implicit user feedback is sensitive to noise [Gadanho and Lhuillier 2007]. Noise in implicit user feedback may be caused by the user, the model used for inferring the user preference, and the system noise, which includes noise generated by the tools used for capturing the user feedback. Amatriain et al. [2009a] have shown that explicit user feedback is also sensitive to noise. In the latter case, noise in explicit user feedback is caused by the user and the system.

Polarity: Explicit user feedback can be positive and negative, whereas implicit user feedback can only be positive [Hu et al. 2008]. In explicit user feedback, users can express what they like and don’t like. In implicit user feedback, the recommender system can only infer what the users may like. Hu et al. [2008] argue that using implicit user feedback without accounting for the missing negative user feedback will misrepresent the user profile.

Range of Users: In explicit user feedback, only a subset of the users of a recommender system expresses user feedback, whereas in implicit user feedback, all users express user feedback. Thus, expressions of implicit user feedback are likely to have less data sparsity than explicit user feedback.

User Transparency: Explicit user feedback is transparent to the user, which has its advantages and disadvantages. The user knows that user feedback may change recommendations, thus providing reasons for providing user feedback. On the other hand, the user may also manipulate the user feedback such that it alters the recommendations [Herlocker et al. 2004]. In contrast, in implicit user feedback, the user may not know which observable behavior leads to recommendations. This makes recommender systems less likely to be manipulated, but at the same time, makes it difficult to explain the recommendations to users

Bias: Explicit user feedback may be biased toward users who are more expressive than others. The Harper et al. [2005] study of MovieLens users who rate movies found that a disproportionate number of power users contribute ratings. Hence, a recommender system based solely on explicit user feedback may be biased toward a particular subset of users

earlier that explicit user feedback and implicit user feedback have different properties. But there is no common scale to compare these two forms of user feedback, which gives rise to this open question: which is the better form of user feedback? This is reflected in the paper by Hu et al. [2008], where the view was taken that explicit user feedback refers to user preference, whereas implicit user feedback refers to the confidence in that user preference. On the other hand, Cosley et al. [2003], Hill et al. [1995], and Amatriain et al. [2009a] have shown that explicit user feedback has a degree of uncertainty as well.

User feedback constitutes the key input in the user model. Improvements in user feedback imply a better fidelity of the user model and hence better performance of the recommender systems.

Gadanho and Lhuillier [2007] and Bell and Koren [2007] have suggested that combining the two forms of feedback would yield the best performance out of recommender systems. In fact, Koren [2010] has shown that using both explicit and implicit user feedback together provides better performance than explicit user feedback on its own

Herlocker et al. [2004] cite the following reasons that encourage users to provide user feedback: (a) users believe that providing user feedback improves their user profiles and the recommendations, (b) users like to express themselves, (c) users believe that other users will benefit from their contributions, and (d) users believe that they can influence other users’ opinions.

. Other interesting approaches could include economic models based on utility theory [Harper et al. 2005] or models based on information theory.

Combining Explicit and Implicit User Feedback into a Single User Model for Recommender Systems

There is evidence that performance of recommender systems will improve if we use a combination of explicit and implicit user feedback [Bell and Koren 2007; Koren 2010].

Herlocker et al. [2004] have extensively reviewed the literature on the evaluation of recommender systems. Offline evaluations are useful for assessing accuracy of recommendations. They have the advantage of providing quick, repeatable, and cheap evaluations on large datasets. However, the lack of richness (implicit user feedback) and diversification in datasets limits the generalization of results and consequent comparisons

Furthermore, offline evaluations cannot assess less tangible attributes of recommendations such as the usefulness, satisfaction, or quality of recommendations [Herlocker et al. 2004].

First, users often perceive their online behaviors and preferences differently from their actual behaviors [Madle et al. 2009; Roy et al. 2010].

the need to combine these two forms of feedback in a single framework, as they are not readily comparable at this time.

such as a unified user model combining explicit and implicit user feedback in the same user model.

New User Modelling

The user model is a representation of information about an individual user that is essential for an adaptive system to provide the adaptation effect.

To create and maintain an up-to-date user model, an adaptive system collects data for the user model from various sources that may include implicitly observing user interaction and explicitly requesting direct input from the user. This process is known as user modeling.

According to the nature of the information that is being modeled in adaptive Web systems, we can distinguish models that represent features of the user as an individual from models that represent the current context of the user’s work

This section focuses on the five most popular and useful features found when viewing the user as an individual: the user’s knowledge, interests, goals, background, and individual traits

Knowledge

The user’s knowledge of the subject being taught or the domain represented in hyperspace appears to be the most important user feature

The user’s knowledge is a changeable feature. The user’s knowledge can both increase (learning) or decrease (forgetting)

The simplest form of a user knowledge model is the scalar model, which estimates the level of user domain knowledge by a single value on some scale – quantitative (for example, a number ranging from 0 to 5) or qualitative (for example, good, average, poor, none). Scalar models, especially qualitative, are quite similar to stereotype models. The difference is that scalar knowledge models focus exclusively on user knowledge and are typically produced by user self-evaluation or objective testing, not by a stereotype-based modeling mechanism

User knowledge of any reasonably-sized domain can be quite different for different parts of the domain

A scalar model effectively averages the user knowledge of the domain. For any advanced adaptation technique that has to take into account some aspect of user knowledge, the scalar model is not sufficient. The structural models assume that the body of domain knowledge can be divided into certain independent fragments. These models attempt to represent user knowledge of different fragments independently.

In its modern form, an overlay model represents the degree to which the user knows such a domain fragment. This can be a qualitative measure (good-average-poor), or a quantitative measure, such as the probability that the user knows the concept.

Interests

User interests always constituted the most important (and typically the only) part of the user profile in adaptive information retrieval and filtering systems that dealt with large volumes of information. It is also the focus of user models/profiles in Web recommender systems

User interests are now competing with user knowledge to become the most important user feature to be modeled in AHS

Concept-level models of user interests are generally more powerful than keyword-level models. Concept-level models allow a more accurate representation of interests.

Goals and Tasks

The user’s goal or task represents the immediate purpose for a user’s work within an adaptive system.

the goal is an answer to the question “What does the user actually want to achieve?” The user’s goal is the most changeable user feature: it almost always changes from session to session and can often change several times within one session of work.

The job of the user modeling component is to recognize this goal and to mark it as the current goal in the model.

Background

The user’s background is a common name for a set of features related to the user’s previous experience outside the core domain [36]

these systems can distinguish users by their profession (student, nurse, doctor) which implies both the level of knowledge and responsibilities [53; 198].

Background information is used most frequently for content adaptation. By its nature, user background is similar to the user’s knowledge of the subject However, the representation and handling of user background in adaptive systems is different. Since detailed information about the background is not necessary, the common way to model user background is not an overlay, but a simple stereotype model.

Individual Traits

The user’s individual traits is the aggregate name for user features that together define a user as an individual Similar to user background, individual traits are stable features of a user that either cannot be changed at all, or can be changed only over a long period of time

current work on modeling and using individual traits for personalization focuses mostly on two groups of traits – cognitive styles and leaning styles

Feature-Based Modeling vs. Stereotype Modeling

Feature-based user modeling reviewed above is currently the dominant user modeling approach in adaptive Web systems. Feature-based models attempt to model specific features of individual users such as knowledge, interests goals, etc. During the user’s work with the system, these features may change, so the goal of feature-based models is to track and represent an up-to-date state for modeled features.

Stereotype user modeling is one of the oldest approaches to user modeling. It was developed in the works of Elaine Rich [163; 164] over a quarter of century ago and elaborated in a number of user modeling projects. Stereotype user models attempt to cluster all possible users of an adaptive system into several groups, called stereotypes.

Naturally, each stereotype groups together users with specific mixture of features. However, stereotype modeling ignores the features and uses the stereotype as a whole. More exactly, the goal of stereotype modeling is to provide mapping from a specific combination of user features to one of the stereotypes.

A promising direction for the future application of stereotype models is their use in combination with feature-based models. One of the most popular combinations is the use of stereotypes to initialize an individual feature-based model [3; 5; 192]. This approach allows to avoid a typical “new user” problem in feature-based modeling where effective adaptation to new user is not possible since user modeling is started “from scratch.”

These stereotypes, in turn, are used to initialize the feature-based model of user knowledge and interests

As in any modeling process, the first important step is to specify aspects of the world being modeled which should be represented in the model, in order to achieve the defined goals

The more user features are represented in the model the more complete it will be.

2.1 Research Objectives

Research in the area of recommender systems for research papers has become convoluted with unreproducible research findings (Beel et al., 2016; Beel, Langer, et al., 2013). The neglect of user modelling in the area of research paper recommender system has disregarded the wealth of unique information generated by an individual. To mitigate this, a user model framework should be designed/adapted to suit the quirks of research-paper recommenders and researchers. Doing so may generate a more accurate virtual representation of a user, their needs and goals as it relates to their research. The research objective is to determine the best way to design, develop and implement an interface for capturing data points to generate user model for use in the research of research paper recommendation systems. We seek to answer the following questions:

- What are the informational requirements of recommender systems, regardless of implementation approach?

- What are the factors that prevent a recommender system from fulfilling its primal function of recommendation?

- What are user expectations of a recommender system?

- Are users more likely to engage with a recommender system/information system given a certain user interface?

- How can the information gathering layer of a user model be modelled?

2.2 Proposed Solution

The proposed solution is to design and implements a web framework for research paper recommender systems that can be used by researchers in the field. The framework will utilise a modular design approach to allow researchers to create components to extend functionality and test novel approaches.

2.3 Significance of Study

The study has the potential to help with the standardisation of the way research is done with research paper recommendation systems. As it will contribute to the development of a framework and dataset to enable researchers to test their algorithms and novel approaches. This is a far cry from the current approaches in which researchers use different approaches, varying testing methods and different datasets which they clean using different criteria (Beel et al., 2016). As a side effect, the current recommender systems of research papers utilise approaches that are not based on current research (Beel et al., 2016).

This successful implementation of the user modelling framework for research paper recommender systems may provide realistic predictions result set for researchers as it could generate a more accurate representation of users.

the indirect effect of improving research generally as they system will be able to recommend much more relevant resources thus building stronger research.

The significance of the project is to generate a more comprehensive understanding of a researcher (the user who interacts with research paper recommender system). To do this the system needs to gather both implicit and explicit data from a user. These data points are in turn use to generate a virtual representation of the user (user model). This accuracy of this model depends on the data that is provided. To generate accurate data, a user needs to interact with a system that is not intrusive but works unobtrusively. This aim is to develop a model representation of a researcher to aid in improving the accuracy of research paper recommendations.

3.0 Methodology

The project will be divided into four phases:

- Foundational research

- Formulation of a user model and data capture interface

- Implementation of the user model and data capture interface

- Evaluation of the user model and data capture interface

3.1 Phase 1: Foundational Research

The first phase of the project will focus on user modelling, recommender systems and user interface design. The objectives of this phase are to identify the core requirements of user models in research paper recommender systems and the best way to design a user interface to capture data to generate a unique representation of a user. The findings will be used as the software requirements to develop a user model and user interfaces for data capture.

3.2 Phase 2: Formulation of a User Model and Data Capture Interface

The findings from phase one that were discovered will be used in the formulation of a user model of a researcher. In this phase, we will look at the different ways a user model could be designed and the model that most efficiently satisfies the software requirements will be chosen.

3.3 Phase 3: Implementation of the User Model and Data Capture Interface

In this phase, we will identify the most appropriate technologies to implemented the chosen model. The objective of this phase is to develop a working prototype of a user interface to enable data capturing so as to populate the user model with the most up-to-date representational state of a user.

3.4 Phase 4: Evaluation of the User Model and Data Capture Interface

The evaluation will be done via usability testing in which participants will use a deployed version of the data capture interface to ascertain whether it succeeded on its design guidelines and meets usability objectives. This form of evaluation was chosen because it is human-focused. Systems that are reliant on user-generated data should be pleasing and simple to use. If they fail at this requirement and it is a burden to users, the data generated may not be a genuine interaction with the systems but a frustrated attempt to complete the process as fast as possible.

4.0 Project Schedule

4.1 Key

| Planning | |

| Best Case | |

| Worst Case | |

4.2 Gantt Chart

| Months | |||||||||||||||||

| Activity | March | April | May | June | |||||||||||||

| Weeks | Weeks | Weeks | Weeks | ||||||||||||||

| 1 | 2 | 3 | 1 | 2 | 3 | 4 | 4 | 1 | 2 | 3 | 4 | 1 | 2 | 3 | 4 | ||

| Phase 1 | |||||||||||||||||

| Research Recommendation System Properties | |||||||||||||||||

| Research User Modelling | |||||||||||||||||

| Research Framework Development | |||||||||||||||||

| Research Factors That Prevent Reproducibility Of Research Findings | |||||||||||||||||

| Research The Data Requirements Of Recommendation Systems | |||||||||||||||||

| Additional Research: User Modelling (Implicit and Explicit) | |||||||||||||||||

| Phase 2 | |||||||||||||||||

| Design User Interface | |||||||||||||||||

| Design Data Models | |||||||||||||||||

| Design Database Schema | |||||||||||||||||

| Refine Designs | |||||||||||||||||

| Phase 3 | |||||||||||||||||

| Identify the technologies to be used in the development of the framework | |||||||||||||||||

| Build Basic Prototype (Authentication, User Interface) | |||||||||||||||||

| Implement the user model framework | |||||||||||||||||

| Debugging | |||||||||||||||||

| Phase 4 | |||||||||||||||||

| Evaluation | |||||||||||||||||

| Submission | |||||||||||||||||

5.0 References

Adomavicius, G., & Tuzhilin, A. (2005). Toward the next generation of recommender systems: A survey of the state-of-the-art and possible extensions. IEEE transactions on knowledge and data engineering, 17(6), 734-749.

Amatriain, X., Pujol, J. M., & Oliver, N. (2009). I like it… i like it not: Evaluating user ratings noise in recommender systems. Paper presented at the International Conference on User Modeling, Adaptation, and Personalization.

Beel, J., Genzmehr, M., Langer, S., Nürnberger, A., & Gipp, B. (2013). A comparative analysis of offline and online evaluations and discussion of research paper recommender system evaluation Proceedings of the international workshop on reproducibility and replication in recommender systems evaluation (pp. 7-14): ACM.

Beel, J., Gipp, B., Langer, S., & Breitinger, C. (2016). Research-paper recommender systems: a literature survey. International Journal on Digital Libraries, 17(4), 305-338.

Beel, J., Langer, S., Genzmehr, M., Gipp, B., Breitinger, C., N, A., . . . rnberger. (2013). Research paper recommender system evaluation: a quantitative literature survey. Paper presented at the Proceedings of the International Workshop on Reproducibility and Replication in Recommender Systems Evaluation, Hong Kong, China. http://dl.acm.org/citation.cfm?doid=2532508.2532512

Beel, J., Langer, S., Genzmehr, M., Gipp, B., Breitinger, C., & Nürnberger, A. (2015). Research Paper Recommender System Evaluation: A Quantitative Literature Survey. International Journal on Digital Libraries, 1-34.

Bhatia, S., Caragea, C., Chen, H.-H., Wu, J., Treeratpituk, P., Wu, Z., . . . Giles, C. L. (2012). Specialized Research Datasets in the CiteSeer˟ Digital Library. D-Lib Magazine, 18(7/8).

Bollacker, K. D., Lawrence, S., & Giles, C. L. (1998). CiteSeer: An autonomous web agent for automatic retrieval and identification of interesting publications. Paper presented at the Proceedings of the second international conference on Autonomous agents.

Boon, S. (2016). 21st Century Science Overload. Retrieved from http://www.cdnsciencepub.com/blog/21st-century-science-overload.aspx

Cosley, D., Lam, S. K., Albert, I., Konstan, J. A., & Riedl, J. (2003). Is seeing believing?: how recommender system interfaces affect users’ opinions. Paper presented at the Proceedings of the SIGCHI conference on Human factors in computing systems.

Cremonesi, P., Garzotto, F., Negro, S., Papadopoulos, A. V., & Turrin, R. (2011). Looking for “good” recommendations: A comparative evaluation of recommender systems. Paper presented at the IFIP Conference on Human-Computer Interaction.

Cremonesi, P., Garzotto, F., & Turrin, R. (2012). Investigating the persuasion potential of recommender systems from a quality perspective: An empirical study. ACM Transactions on Interactive Intelligent Systems (TiiS), 2(2), 11.

Good, N., Schafer, J. B., Konstan, J. A., Borchers, A., Sarwar, B., Herlocker, J., & Riedl, J. (1999). Combining collaborative filtering with personal agents for better recommendations. Paper presented at the AAAI/IAAI.

Gorrell, G., Ford, N., Madden, A., Holdridge, P., & Eaglestone, B. (2011). Countering method bias in questionnaire-based user studies. Journal of Documentation, 67(3), 507-524.

Gunawardana, A., & Shani, G. (2009). A survey of accuracy evaluation metrics of recommendation tasks. Journal of Machine Learning Research, 10(Dec), 2935-2962.

Head, A. J. (2007). Beyond Google: How do students conduct academic research? First Monday, 12(8).

Herlocker, J. L., Konstan, J. A., Borchers, A., & Riedl, J. (1999). An algorithmic framework for performing collaborative filtering. Paper presented at the Proceedings of the 22nd annual international ACM SIGIR conference on Research and development in information retrieval.

Hill, W., Stead, L., Rosenstein, M., & Furnas, G. (1995). Recommending and evaluating choices in a virtual community of use. Paper presented at the Proceedings of the SIGCHI conference on Human factors in computing systems.

Hu, Y., Koren, Y., & Volinsky, C. (2008). Collaborative filtering for implicit feedback datasets. Paper presented at the Data Mining, 2008. ICDM’08. Eighth IEEE International Conference on.

Huang, W., Kataria, S., Caragea, C., Mitra, P., Giles, C. L., & Rokach, L. (2012). Recommending citations: translating papers into references. Paper presented at the Proceedings of the 21st ACM international conference on Information and knowledge management, Maui, Hawaii, USA.

Jack, K., Hristakeva, M., Garcia de Zuniga, R., & Granitzer, M. (2012). Mendeley’s open data for science and learning: a reply to the DataTEL challenge. International Journal of Technology Enhanced Learning, 4(1-2), 31-46.

Jawaheer, G., Weller, P., & Kostkova, P. (2014). Modeling user preferences in recommender systems: A classification framework for explicit and implicit user feedback. ACM Transactions on Interactive Intelligent Systems (TiiS), 4(2), 8.

Jinha, A. E. (2010). Article 50 million: an estimate of the number of scholarly articles in existence. Learned Publishing, 23(3), 258-263.

Jomsri, P., Sanguansintukul, S., & Choochaiwattana, W. (2010, 20-23 April 2010). A Framework for Tag-Based Research Paper Recommender System: An IR Approach. Paper presented at the 2010 IEEE 24th International Conference on Advanced Information Networking and Applications Workshops.

Koren, Y., & Sill, J. (2011). OrdRec: an ordinal model for predicting personalized item rating distributions. Paper presented at the Proceedings of the fifth ACM conference on Recommender systems.

Leroy, G. (2011). Designing user studies in informatics: Springer Science & Business Media.

Loeppky, J. L., Sacks, J., & Welch, W. J. (2009). Choosing the sample size of a computer experiment: A practical guide. Technometrics, 51(4), 366-376.

Matejka, J., Li, W., Grossman, T., & Fitzmaurice, G. (2009). CommunityCommands: command recommendations for software applications. Paper presented at the Proceedings of the 22nd annual ACM symposium on User interface software and technology.

McNee, S. M., Albert, I., Cosley, D., Gopalkrishnan, P., Lam, S. K., Rashid, A. M., . . . Riedl, J. (2002). On the recommending of citations for research papers. Paper presented at the Proceedings of the 2002 ACM conference on Computer supported cooperative work.

Oard, D. W., & Kim, J. (2001). Modeling information content using observable behavior.

Rashid, A. M., Albert, I., Cosley, D., Lam, S. K., McNee, S. M., Konstan, J. A., & Riedl, J. (2002). Getting to know you: learning new user preferences in recommender systems. Paper presented at the Proceedings of the 7th international conference on Intelligent user interfaces.

Ricci, F., Rokach, L., & Shapira, B. (2011). Introduction to recommender systems handbook: Springer.

Rossi, P. H., Lipsey, M. W., & Freeman, H. E. (2003). Evaluation: A systematic approach: Sage publications.

Said, A., Tikk, D., Stumpf, K., Shi, Y., Larson, M., & Cremonesi, P. (2012). Recommender Systems Evaluation: A 3D Benchmark. Paper presented at the RUE@ RecSys.

Shani, G., & Gunawardana, A. (2011). Evaluating recommendation systems Recommender systems handbook (pp. 257-297): Springer.

Stock, K., Karasova, V., Robertson, A., Roger, G., Small, M., Bishr, M., . . . Korczynski, L. (2013). Finding science with science: evaluating a domain and scientific ontology user interface for the discovery of scientific resources. Transactions in GIS, 17(4), 612-639.

Stock, K., Robertson, A., Reitsma, F., Stojanovic, T., Bishr, M., Medyckyj-Scott, D., & Ortmann, J. (2009). eScience for sea science: a semantic scientific knowledge infrastructure for marine scientists. Paper presented at the e-Science, 2009. e-Science’09. Fifth IEEE International Conference on.

Torres, R., McNee, S. M., Abel, M., Konstan, J. A., & Riedl, J. (2004). Enhancing digital libraries with TechLens. Paper presented at the Digital Libraries, 2004. Proceedings of the 2004 Joint ACM/IEEE Conference on.

Tsymbal, A. (2004). The problem of concept drift: definitions and related work. Computer Science Department, Trinity College Dublin, 106(2).

Zanker, M., & Jessenitschnig, M. (2009). Case-studies on exploiting explicit customer requirements in recommender systems. User Modeling and User-Adapted Interaction, 19(1), 133-166.

Zheng, H., Wang, D., Zhang, Q., Li, H., & Yang, T. (2010). Do clicks measure recommendation relevancy?: an empirical user study. Paper presented at the Proceedings of the fourth ACM conference on Recommender systems.

Cite This Work

To export a reference to this article please select a referencing stye below:

Related Services

View allRelated Content

All TagsContent relating to: "Information Systems"

Information Systems relates to systems that allow people and businesses to handle and use data in a multitude of ways. Information Systems can assist you in processing and filtering data, and can be used in many different environments.

Related Articles

DMCA / Removal Request

If you are the original writer of this research project and no longer wish to have your work published on the UKDiss.com website then please: