Development of Chatbots and Natural Language Processing

Info: 7086 words (28 pages) Dissertation

Published: 21st Dec 2022


A look back at the development of chatbots and Natural Language Processing.

Abstract

Chatbots have become increasingly popular, partly due to the large number of tools that allow developers to set up a conversational agent for popular platforms, such as Facebook, in just minutes. However, the development of conversational entities is not new, and there are many approaches to giving such entities Natural Language Processing capabilities. These approaches help avoid the trap of implementing chatbots that add no value, and of maintaining a chatbot that relies only on if-then-else structures. The present work collects influential work in the field of conversational entities, or chatbots, and discusses some approaches to implementing them.

Keywords: chatbot, Natural Language Processing, core NLP, machine learning, conversational entity, computers, thinking.

Introduction

Chatbot, chatterbot system, conversational agent, entity or simulator: the idea that may first come to mind is that of a program or technology capable of holding some sort of conversation with a human. One definition states that a chatbot is a computer program aimed at simulating a conversation with a human being (Abu Shawar & Atwell, 2002). Whether through text or, more recently, through voice with Spoken Dialogue Systems (SDSs) and personal assistants such as Apple’s Siri, Google Assistant and Microsoft Cortana, or through systems such as ChatGPT, the topic has gained relevance in recent times. Although the concept of a chatbot may seem relatively new, it is in fact not a new idea. What has changed is that the current level of technological advancement is more favorable to developing a feasible implementation.

One of the first attempts to develop a conversational entity was ELIZA, a computer program for the study of natural language communication. ELIZA could sustain a conversation in the role of a psychotherapist and simulate, to a certain degree, a conversation with a human being. It was published by Joseph Weizenbaum in 1966 and laid the foundation for more sophisticated entities capable of conversations that flow naturally with humans (Weizenbaum, 1966).

In 1950, Alan Mathison Turing published his work “Computing Machinery and Intelligence”, in which he described “The Imitation Game”: an interrogator must determine, based solely on a conversation held via terminal, which of two out-of-sight interlocutors is a man and which is a woman, using questions other than those that would immediately reveal the answer, such as “are you a woman?”. Turing’s paper went further and considered replacing one of the players with a machine, then asking the interrogator to determine which was the human and which was the machine (Alan, 1950). This has come to be known as “The Turing Test”.

Despite the limited computing capabilities of his time, Turing conceived of a digital computer that could do well in “The Imitation Game” by impersonating a human and ultimately fooling the interrogator into believing it. He predicted that, about fifty years after his article was written, computers would play the game so well that an average interrogator would have no more than a 70% chance of making the right identification after five minutes of questioning (Alan, 1950).

More than half a century later, a tremendous amount of work has been done on implementing, through different approaches, the idea of having conversations with computers in a way that seems natural to humans. In the present paper, we review some of the most relevant literature related to the development of chatbots and pay special attention to sources about the use of Natural Language Processing systems in languages other than English, specifically Spanish. The purpose is to use this information in further research to implement a chatbot as a technical support agent, applying Natural Language Processing in an easily replicable way. Further work will focus on integrating Sentiment Analysis, which would allow the chatbot not only to respond according to the structure of the input but also to infer the feelings the user may have while interacting with it.

Methods

To identify the state of the art in Natural Language Processing and one of its most common applications, namely chatbots, a literature review was conducted. Sources were selected by searching the Google Scholar and EBSCOhost databases with the following keywords: chatbot, Natural Language Processing, core NLP, machine learning, conversation, computers and think.

The main selection of sources was drawn from the results for these keywords, sorted by number of citations, keeping those considered most relevant for obtaining a general idea of the epistemology and the state of the art. Finally, the results were filtered for publications specifically related to the application of Natural Language Processing to Spanish, starting with the most relevant and recent.

During the review, whenever a specific topic required more sources to support an idea or statement, keywords about that topic were used to search the databases already mentioned; for instance, “ontology chatbot”.

The scope of the present work is limited to the epistemology behind the development of chatbots, the use of Natural Language Processing and its application to the Spanish language; it does not go into detail about Spoken Dialogue Systems. Furthermore, although some chatbot systems are more thorough than others, there are currently thousands of chatbots, so only those most relevant to our objectives have been included. The work is limited to text-based conversational entities; thus, assistants like Siri and Cortana are not analyzed, and large systems such as IBM Watson are not discussed.

Results

Imitation game and the Loebner Prize

It could be said that the interest in thinking machines started with the “imitation game” presented by Alan Turing (Alan, 1950). Turing’s idea inspired Dr. Hugh Loebner[1] and the Cambridge Center for Behavioral Studies to create a competition in pursuit of implementing the Turing test. The first contest was directed by Dr. Robert Epstein[2] in 1991. The competition became known as the Loebner Prize because Dr. Hugh Loebner pledged a prize of $100,000 for the first computer program to pass the test. Since then, the competition has been hosted annually at different institutions around the world, and it is currently administered by the Society for the Study of Artificial Intelligence and Simulation of Behaviour (AISB). According to the AISB’s website[3]:

“From 2014, the contest has been run under the aegis of the AISB, the world’s first AI society (founded 1964) at Bletchley Park where Alan Turing worked as a code-breaker during World War 2.”

Results from the competitions are available on the AISB’s website and could provide interesting input for anyone interested in developing natural language communication entities, or simply a program “smart” enough to fool the judges into believing it is human.

The Loebner Prize has provided some benefits: an annual Turing test open to anyone interested in submitting an entry, a stimulus for interest in the field of Artificial Intelligence, and competition and discussion that may generate new techniques for passing the Turing Test. However, some authors consider that, despite these benefits, the contest was not advancing the field of Artificial Intelligence because the reward was not large enough to attract serious research groups (Jason L Hutchens, 1996).

One such author, Jason Hutchens, went so far as to compete for the Loebner Prize himself (and won)[4] in order to prove that little effort was required to fool the judges. He published his paper “How to Pass the Turing Test by Cheating” in December 1997.

In this paper, he pointed out both the skepticism and the unwarranted enthusiasm about computers being able to solve many previously insoluble problems. After reviewing the implementation details of some early systems, namely Weizenbaum’s ELIZA, Colby’s PARRY and Terry Winograd’s SHRDLU, Hutchens reviews the work of Douglas Hofstadter on what may be the first documented chatbot-to-chatbot conversation, between Weizenbaum’s DOCTOR and Colby’s PARRY (Jason L Hutchens, 1996).

With the mission of winning the Loebner Prize to prove his point, Hutchens reviewed some programs that had previously entered the competition and succeeded to some degree (Jason L Hutchens, 1996). Joseph Weintraub’s PC Therapist had won the contest four times. Five years of work had produced a program that performs key matching to retrieve information from a database, with the interesting characteristic of replying to complex inputs, purposely submitted by the judges, in equally complex and even confusing terms.

TIPS, by Thomas Whalen and winner of the 1994 contest, is a system that provides information on a particular topic. It performs queries in natural language with an arguably more sophisticated approach that refuses to use non sequiturs and contradictions. To win the contest, Whalen created a base of information about a single character, so that judges could ask personal questions and receive answers that seemed natural.

FRED, a Functional Response Emulation Device in which, according to Hutchens, Robby Garner invested fifteen years, was capable of learning from its conversations with users and of trying to respond to the user with phrases from past interactions.

Using a third-order Markov chain, Hutchens created MegaHAL, a program intended to spot the nonsense and meaningless writing, also called gibberish, submitted by judges. It looks for all the keywords in the input, follows the arcs created by analyzing large amounts of text (corpora), and then uses probability to construct the most suitable answer based on the individual relevance of each word submitted by the user. Hutchens entered MegaHAL in the 1996 Loebner Prize with no intention of winning: “I submitted it only as a bit of fun”, he stated. MegaHAL could learn from conversations, which allowed it to become fluent in around six languages.
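The generation step behind a Markov-chain chatbot can be illustrated with a toy model. The following is a minimal sketch, assuming whitespace tokenization and a small hypothetical corpus; it only shows how a third-order model picks each next word from the three words that precede it. MegaHAL as described by Hutchens does considerably more (seeding replies with keywords from the input and choosing among candidate replies), none of which is reproduced here.

```python
import random
from collections import defaultdict

ORDER = 3  # third-order: each next word depends on the previous three words


def build_model(corpus):
    """Map each 3-word context to the list of words observed right after it."""
    model = defaultdict(list)
    for sentence in corpus:
        words = sentence.lower().split()
        for i in range(len(words) - ORDER):
            context = tuple(words[i:i + ORDER])
            model[context].append(words[i + ORDER])
    return model


def generate(model, seed, max_words=20, rng=None):
    """Extend a 3-word seed one word at a time by sampling an observed follower."""
    rng = rng or random.Random(0)
    words = list(seed)
    while len(words) < max_words:
        followers = model.get(tuple(words[-ORDER:]))
        if not followers:  # context never seen in the corpus: stop
            break
        # Repeated entries in the list make frequent followers more likely.
        words.append(rng.choice(followers))
    return " ".join(words)


# Hypothetical toy corpus.
corpus = ["the cat sat on the mat", "the cat sat on the sofa today"]
model = build_model(corpus)
print(generate(model, ("the", "cat", "sat")))
```

Because the context (“sat”, “on”, “the”) was followed once by “mat” and once by “sofa”, the generated sentence can branch either way; larger corpora produce many more such branch points, which is where the apparent creativity of Markov chatbots comes from.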

For his second program, HeX, Hutchens decided to limit himself to one month of work, obtaining the transcripts of previous Loebner contests and gathering insight from previous contestants and their tricks: Whalen restricting the conversation by using drama, Weintraub simulating meaningless, capricious conversation, PARRY being paranoid and unresponsive, DOCTOR reformulating the user input, slowing down the typing speed, and others. However, Hutchens realized that simulating natural conversation required more than tricks; for instance, the program needed to display apparent human emotions.

By then, winning the Loebner Prize was a complicated endeavor, and the contest was still criticized for requiring many elements that some authors consider irrelevant to beating the original Imitation Game (Lenat, 2016). At the time, some of these elements involved simulating typing speed, pauses for thought, typing errors, and so on. Hutchens’ bot also had to be prepared to deal with questions that no human would ordinarily ask a stranger, to adapt to new topics introduced abruptly, and to avoid answering with “I do not know”.

Hutchens won the Loebner competition in 1996[5]. After that much work and study devoted to this specific purpose, he concluded that the Loebner contest was doomed to failure and was nothing more than an amusing game, more attractive to short-term engineering than to science (Jason L Hutchens, 1996). The most recent version of MegaHAL is available on GitHub[6]. It exposes an API (Application Programming Interface) so that it can be called from and integrated into other applications, is built on Sooth, a stochastic predictive model, and now uses Ruby instead of C.

Although the Loebner Competition and the Turing Test still have supporters and detractors, both remain methods worth considering when evaluating chatbot systems (Shawar & Atwell, 2007). Results from previous Loebner competitions are available on the AISB website.

MUD and chatbots

A Multi-User Dungeon or Multi-User Dimension (MUD) is a text-based video game that dates back to 1979 at the University of Essex, UK, where the students Roy Trubshaw and Richard Bartle developed a game that could be multi-user and work as an interpreter for a database definition language.[7] In August 1989, Jim Aspnes opened TinyMUD, a reimplementation of the original MUD that included multiplayer conversations, the simulation of physical spaces through textual scenery, and the ability for players to create their own subareas within the world model.

TinyMUD became very popular, to the point that there are still websites dedicated to it and servers running the game. During its popularity, computer-controlled players called “bots” became possible, and even ELIZA was connected to a robot. In the beginning, bots were a way of providing information, such as the shortest path between places in the game, and their conversational abilities were managed with simple if-then-else rules and some variable matching. Taking advantage of TinyMUD, Michael L. Mauldin created a CHATTERBOT on the rationale that such a virtual world was perfect for an unsuspecting Turing test, since players could not easily tell whether they were speaking to a real person or a bot (Mauldin, 1994).
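The shortest-path help that early TinyMUD bots offered can be sketched as a breadth-first search over the rooms of the world, wrapped in a canned rule-based reply. This is an illustrative sketch only: the room names and exits are hypothetical, and it does not reproduce the code of Mauldin’s CHATTERBOT or any other bot.

```python
from collections import deque
from typing import Dict, List, Optional

# Hypothetical world: room -> {exit name: room it leads to}.
WORLD: Dict[str, Dict[str, str]] = {
    "Town Square": {"north": "Library", "east": "Market"},
    "Library": {"east": "Tavern", "south": "Town Square"},
    "Market": {"north": "Tavern", "west": "Town Square"},
    "Tavern": {},
}


def shortest_route(world, start: str, goal: str) -> Optional[List[str]]:
    """Breadth-first search: the first time the goal is reached, the route is shortest."""
    came_from = {start: None}  # room -> (previous room, exit taken); None for start
    queue = deque([start])
    while queue:
        room = queue.popleft()
        if room == goal:
            route = []
            while came_from[room] is not None:  # walk back to the start
                room, direction = came_from[room]
                route.append(direction)
            return route[::-1]
        for direction, neighbour in world.get(room, {}).items():
            if neighbour not in came_from:
                came_from[neighbour] = (room, direction)
                queue.append(neighbour)
    return None  # goal unreachable from start


def answer_directions(world, here: str, target: str) -> str:
    """Wrap the search in a simple if-then-else style reply."""
    route = shortest_route(world, here, target)
    if route is None:
        return f"Sorry, I don't know how to get to {target}."
    if not route:
        return f"You are already in {target}."
    return f"From {here}, go " + ", then ".join(route) + "."


print(answer_directions(WORLD, "Town Square", "Tavern"))
```

Breadth-first search is the natural choice here because every exit counts as one step, so the first route found is guaranteed to use the fewest moves.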

PARRY, a paranoid simulator designed by Colby that used a form of memory to track interactions (Colby, Weber, & Hilf, 1971), inspired Mauldin to consider keeping a memory of chats and interactions with users. Mauldin also reported that users felt better talking to a robot than to no one. With the goal of being able to answer any sort of question or conversation, information retrieval engines were integrated to perform searches and then construct an answer that would, hopefully, satisfy the user or judge (Mauldin, 1994).

Some elements from ELIZA and PARRY were also adopted in the form of tricks. From ELIZA: talking about the user and keeping the conversation focused on them, giving the impression of listening. From PARRY: admitting ignorance, changing the level or topic of the conversation, referring back to a previous topic, and introducing completely new topics. The CHATTERBOT included these tricks and some others, such as having many fragments of directed conversation, making controversial statements, agreeing with the user, and using a news site as input to provide new information (Mauldin, 1994). One of the most interesting tricks of Mauldin’s chatterbot was the simulation of typing, which would be used much later in implementations such as Facebook chats.
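Simulated typing can be approximated by delaying each reply in proportion to its length. This is a minimal sketch under stated assumptions: the rate of six characters per second and the 20% random jitter are hypothetical values, not figures from Mauldin (1994), and `send`/`show_typing` stand for whatever messaging hooks a given platform provides.

```python
import random
import time


def typing_delay(reply, chars_per_second=6.0, jitter=0.2, rng=None):
    """Seconds a person might take to type `reply` (assumed rate, +/- random jitter)."""
    rng = rng or random.Random()
    base = len(reply) / chars_per_second
    return base * (1.0 + rng.uniform(-jitter, jitter))


def send_with_typing(reply, send, show_typing=lambda: None, sleep=time.sleep):
    """Show a 'typing...' indicator, pause as a human would, then deliver the reply."""
    show_typing()
    sleep(typing_delay(reply))
    send(reply)


send_with_typing(
    "Hello! How are you today?",
    send=print,
    show_typing=lambda: print("[bot is typing...]"),
    sleep=lambda s: print(f"[waiting {s:.1f} s]"),  # print instead of sleeping in this demo
)
```

The jitter keeps the pauses from being identical for replies of the same length, which would otherwise be an easy giveaway.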

Elizabeth and ALICE

Elizabeth and ALICE are chatbot systems developed later from the fundamental ideas of the ELIZA program. However, these adaptations took different approaches to the problem of natural language communication. Comparing them provides interesting insight into how the implementation affects the potential for growth toward the final goal: conversations with computers that seem natural to humans.

On one side there is ALICE, implemented by Dr. Richard S. Wallace in 1995. ALICE, an acronym for Artificial Linguistic Internet Computer Entity, implements the idea of storing knowledge, becoming a source of information rather than a simple listener and pretender. On the other side there is Elizabeth, which presents some improvements over the ELIZA chatterbot and was implemented by Dr. Peter Millican at the University of Leeds (Abu Shawar & Atwell, 2002).

Elizabeth is based on input and output rules and relies on identifying the first matching keyword pattern to generate a response. It uses ELIZA’s basic mechanisms for selection, substitution and phrase storage, but enhanced and generalized to make them more flexible and potentially adaptable. Each rule is applied only once; Elizabeth does not use recursion, since it could cause cycles leading to infinite loops, and for the same reason it cannot combine multiple responses. ALICE, in contrast, uses AIML (Artificial Intelligence Markup Language), an extension of XML (Extensible Markup Language). It relies on simple template-based pattern matching with a depth-first search matching algorithm, applies recursive techniques, can combine two answers, and stores a huge text corpus (Abu Shawar & Atwell, 2002).
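The contrast between a single-pass keyword rule and ALICE’s recursive matching can be illustrated with a tiny AIML interpreter. This is a simplified sketch, not ALICE’s actual engine: it supports only exact words, the `*` wildcard, `<star/>` and `<srai>`, tries an exact word before the wildcard during a depth-first search over a word trie, and uses an arbitrary recursion limit (`MAX_DEPTH`, a hypothetical parameter) as a guard against the kind of cycles that led Elizabeth to avoid recursion. The four categories are hypothetical.

```python
import re
import xml.etree.ElementTree as ET

# Hypothetical AIML categories. <srai> redirects one pattern to another (recursion).
AIML = """<aiml>
  <category><pattern>HELLO</pattern><template>Hi there!</template></category>
  <category><pattern>HI</pattern><template><srai>HELLO</srai></template></category>
  <category><pattern>MY NAME IS *</pattern><template>Nice to meet you, <star/>.</template></category>
  <category><pattern>*</pattern><template>Tell me more.</template></category>
</aiml>"""

MAX_DEPTH = 10  # arbitrary limit on nested <srai> calls, to stop cyclic rules


def build_graph(root):
    """Store every pattern in a word trie (ALICE calls its version the Graphmaster)."""
    graph = {}
    for category in root.iter("category"):
        node = graph
        for word in category.findtext("pattern").split():
            node = node.setdefault(word, {})
        node["<template>"] = category.find("template")
    return graph


def match(node, words, stars):
    """Depth-first search: try the exact word first, then let '*' absorb 1..n words."""
    if not words:
        return (node["<template>"], stars) if "<template>" in node else None
    if words[0] in node:
        found = match(node[words[0]], words[1:], stars)
        if found is not None:
            return found
    if "*" in node:
        for i in range(1, len(words) + 1):
            found = match(node["*"], words[i:], stars + [" ".join(words[:i])])
            if found is not None:
                return found
    return None


def respond(graph, text, depth=0):
    """Normalise the input, match it, and expand the template (recursively for <srai>)."""
    if depth > MAX_DEPTH:
        return ""
    words = re.sub(r"[^\w\s]", " ", text.upper()).split()
    found = match(graph, words, [])
    if found is None:
        return ""
    template, stars = found
    parts = [template.text or ""]
    for child in template:
        if child.tag == "star":
            parts.append(stars[0] if stars else "")
        elif child.tag == "srai":
            parts.append(respond(graph, child.text or "", depth + 1))
        parts.append(child.tail or "")
    return "".join(parts).strip()


GRAPH = build_graph(ET.fromstring(AIML))
for line in ["Hello", "hi!", "My name is Ana", "What is AIML?"]:
    print(line, "->", respond(GRAPH, line))
```

The `HI` category shows why recursion is attractive: many phrasings can be reduced to one canonical pattern instead of duplicating its answer, at the cost of needing a guard such as `MAX_DEPTH`.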

ALICE became one of the most successful chatbots of its time, winning the Loebner Prize three times. It has been improved over time and supports a variety of interfaces in different programming languages. By 2005, Pandorabots[8], a web service that promoted the use of ALICE and AIML, reported support for over 20,000 different chatbots (Heller, Procter, Mah, Jewell, & Cheung, n.d.).

Freudbot

Freudbot was developed at Athabasca University in Canada as a chatbot intended to give users the experience of conversing with Sigmund Freud, not to analyze or help them, but to discuss his theories, concepts and biographical events. Its design followed from considering the possible benefits of using a chatbot to aid learning, much as players could learn how to find the shortest path in the MUD game. Several ideas supported this: conversation is a natural skill learned effortlessly at an early age; linguistic rules are thought to govern conversational exchanges; people are predisposed to treat objects such as televisions, computers and other media as people; and social rules govern human-computer interaction in a similar way to human-human interaction (Heller, Procter, & Mah, 2005).

Freudbot’s content was developed in AIML but also included various ELIZA-like features, such as recognizing certain keywords or combinations of words and providing responses to them. When no input was recognized, different strategies came into operation at random, such as asking for clarification, suggesting or asking for new topics, and finally admitting ignorance. The purpose was to explore the experience students could have in a 10-minute chat with a bot simulating Sigmund Freud, discussing his theories, concepts and the historical events in his life. The results revealed the persistent difficulty of maintaining a detailed conversation with a computer, but also mildly positive evidence of the utility of chatbots for online education and of the potential of chatbot technology in education (Heller et al., 2005).
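The combination of ELIZA-like keyword recognition with randomly chosen fallback strategies can be sketched as follows. The keywords, replies and fallback wording are hypothetical placeholders, one fallback per strategy named above; Freudbot’s real content was written in AIML and is not reproduced here.

```python
import random
import re

# Hypothetical keyword -> reply table (ELIZA-like keyword recognition).
KEYWORD_REPLIES = {
    "dream": "Dreams were central to my work; shall we discuss their interpretation?",
    "ego": "The ego mediates between the id and the demands of reality.",
}

# Hypothetical fallbacks, one per strategy described in the text.
FALLBACKS = [
    "I am not sure I follow. Could you put that another way?",  # ask for clarification
    "Perhaps we could talk about my years in Vienna?",          # suggest a new topic
    "What would you like to discuss next?",                     # ask the user for a topic
    "I must admit I know nothing about that.",                  # admit ignorance
]


def reply(text, rng=random):
    """Answer on the first recognised keyword; otherwise pick a fallback at random."""
    words = re.findall(r"[a-z]+", text.lower())
    for keyword, answer in KEYWORD_REPLIES.items():
        if any(word.startswith(keyword) for word in words):  # "dreams" matches "dream"
            return answer
    return rng.choice(FALLBACKS)


print(reply("Tell me about your dreams"))
print(reply("Do you like football?"))
```

Picking the fallback at random, rather than always using the same one, hides the fact that the bot did not understand for a little longer, which is the same idea behind Mauldin’s conversational tricks.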

Another notable aspect of Freudbot is the display of an image of the theorist, in this case Freud, to personify the chatbot. Users obviously know that they are not actually conversing with such a famous personality, but the image seems to provide some positive value compared with a similar chatbot, Emile, developed at the University of Huddersfield by Gibbs and colleagues in 2003 to discuss social theorists in the first and third person (Heller et al., 2005). Another highlight is the use of names to identify chatbots: compared with Mauldin’s chatterbot, a more human-like name seems to provide a more appealing experience.

Evaluation of chatbot systems

The Loebner Prize is still the most famous recognition a chatbot can hope to achieve for more natural human-machine communication. As Loebner stated in 1994[9]:

“The initial Loebner Prize was the first time that the Turing Test had ever been formally tried.” The prize helped the development of Artificial Intelligence and also triggered discussion about the social implications of machines doing the work of humans, about unemployment, and about the prize itself as a social experiment.

However, authors have noted the need for alternative methods to evaluate chatbots. One such approach used the ALICE chatbot system as the basis of a chatbot-training program that reads a corpus and converts the text to AIML format. The idea was first to generate prototype chatbots for different applications and then evaluate each one according to the particularities of its system. The prototypes were an Afrikaans-language chatbot, a Qur’an chatbot and an FAQ chatbot (Shawar & Atwell, 2007).

In their search for metrics to evaluate chatbots, Shawar and Atwell presented some interesting elements in the construction of the prototypes; one remarkable step was building a knowledge base independent of any specific language. A Java program gathered information from a corpus (a machine-readable body of text) and converted it to AIML, the format used by ALICE.

This program places no restrictions on the language, the domain of knowledge or the structure in which the information is presented. Languages other than English that were tested include Arabic, Afrikaans, French and Spanish. One of the chatbots was also used as an Information Retrieval System to answer Frequently Asked Questions (FAQs) about a specific website. Each chatbot was evaluated differently: one on dialogue efficiency, quality and satisfaction; another on its ability to access an information source; and for the last, the information it provided was compared with that of a search engine (Shawar & Atwell, 2007).
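The conversion from a corpus to AIML can be sketched by turning each pair of consecutive dialogue turns into a category whose pattern is the first turn and whose template is the reply. This is an illustrative Python sketch under that assumption, not Shawar and Atwell’s Java program; the normalization rule and the sample dialogue are hypothetical. Python’s Unicode-aware `\w` keeps accented letters, which matters for Spanish input.

```python
import re
import xml.etree.ElementTree as ET


def normalise(utterance):
    """AIML patterns are conventionally upper case with punctuation removed."""
    return " ".join(re.sub(r"[^\w\s]", " ", utterance).upper().split())


def dialogue_to_pairs(turns):
    """Pair each turn with the turn that follows it: (pattern, template)."""
    return list(zip(turns, turns[1:]))


def pairs_to_aiml(pairs):
    """Build an <aiml> document with one <category> per (utterance, response) pair."""
    root = ET.Element("aiml", version="1.0")
    for utterance, response in pairs:
        category = ET.SubElement(root, "category")
        ET.SubElement(category, "pattern").text = normalise(utterance)
        ET.SubElement(category, "template").text = response
    return ET.tostring(root, encoding="unicode")


# Hypothetical Spanish dialogue taken from a corpus.
turns = ["¿Dónde está la biblioteca?", "En el edificio central.", "¿A qué hora abre?"]
print(pairs_to_aiml(dialogue_to_pairs(turns)))
```

Because nothing in the conversion depends on a particular vocabulary, the same procedure applies to any language whose text can be tokenized on whitespace, which is the language independence the approach relies on.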

Results from applying different methods to evaluate chatbots showed that, although ALICE and other chatbots use linguistic expressions resembling dialogue to support the impression of conversing with a human, chatbots can be useful even if they do not fool users into believing they are human. For that reason, it does not seem appropriate to evaluate every chatbot with a methodology like the one followed in the Loebner Prize. Instead, the evaluation should be adapted to the problem the specific chatbot aims to solve.

Discussion

Hutchens stated that, decades after the creation of ELIZA, and despite advances in computational power, storage space and memory size and the blossoming of Artificial Intelligence in areas such as image and speech recognition, no program had passed the Turing test (Jason L Hutchens, 1996). This claim seems to remain valid. What cannot be denied, however, is the utility chatbots can provide beyond fooling humans into believing they are not computer programs.

The utility of chatbots in education

Chatbots are computer programs capable of using natural language; however, their different applications have shown that such programs are not built solely to mimic human conversation. Applications extend to education, information retrieval, business and e-commerce (Abu Shawar & Atwell, 2007).

Even though the Turing Test and the Loebner Prize have provided an intellectual incentive for the development of Natural Language Processing, they should not be the only way to evaluate how efficiently chatbots solve problems and become part of the tools humans use daily.

Given that chatbots that communicate using natural expressions are more likable to users, and that developing domain-specific chatbots seems to be the way to go, it will be important to identify new approaches to developing chatbots that can aid users even if they do not fool people into believing they are human. In fact, there are some advantages to knowing that one is communicating with a computer, such as not getting angry when there is a misunderstanding.

Of particular interest to us, the utility of chatbots beyond entertainment has been demonstrated, notably in learning. Examples include Lucy, a commercial chatbot with speech recognition and an avatar that performs different characters to tutor students learning new languages (Fei & Petrina, 2013); CSIEC, a computer-assisted English learning chatbot that aids Chinese students through natural language (Jia, 2009); and, going further, a chatbot acting as an advisor for undergraduates, helping them make decisions and answering frequently asked questions in a specific domain (Ghose & Barua, 2013).

Is it easy to create a chatbot?

Creating a functional chatbot can be seen either as a very simple and quick task or as a difficult one that requires much effort and work. The first perception comes from the variety of tools available to create a chatbot from scratch or with an assistant such as the commercial Chatfuel[10], which allows anyone to easily set up a chatbot with an interface based on buttons and boxes that follow a predefined conversation flow. Such a chatbot does not use Natural Language Processing or a specific engine, nor an implementation model such as AIML or MegaHAL.

Since Chatfuel chatbots are very easy to link with Facebook, one could be tempted to write all the possible interactions manually, or to limit the conversation to the flow set by the buttons and boxes without taking free user input. However, after some interactions, the need becomes evident for a more sophisticated way to build a knowledge base and elaborate answers to different inputs using computational power. Writing answers manually for every possible input is nearly impossible, would take much valuable time, and would miss the opportunity to benefit from the work of previous authors such as those cited in this paper.

In order to validate the statement that setting up a chatbot is fairly simple but that adding Natural Language Processing requires more detailed work, four chatbots were created for the present paper using different methods: two were deployed on Facebook, one was used only inside the Pandorabots[11] platform, and another was linked to a Discord server.

To build and deploy the first chatbot quickly, a program was written in Node.js and run on localhost to link it to a server on the Discord[12] platform. This initial bot relied solely on case statements for key matching and returned the corresponding answers; users had to address the bot with a special character or reserved word followed by their input. The purpose was to provide server members with information about a specific topic in response to different inputs; however, the experience did not feel natural, despite the entertainment it provided to users.
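The key-matching logic of such a bot can be sketched independently of any chat platform. In this sketch the prefix `!`, the keys and the answers are hypothetical, the language is Python rather than the Node.js actually used, and a dictionary lookup stands in for the case statements. Connecting it to Discord (through a bot library’s message event) is deliberately left out rather than guessing at a specific API.

```python
from typing import Optional

PREFIX = "!"  # hypothetical reserved character used to address the bot

# Hypothetical key -> answer table; each entry plays the role of one `case` branch.
ANSWERS = {
    "hours": "The lab is open from 8:00 to 18:00.",
    "wifi": "Connect to the campus network and log in with your student ID.",
    "help": "Available commands: !hours, !wifi",
}


def handle_message(content: str) -> Optional[str]:
    """Return the bot's answer, or None when the message is not addressed to it."""
    if not content.startswith(PREFIX):
        return None  # ordinary conversation between members: stay silent
    key = content[len(PREFIX):].strip().lower()
    # Exact key matching only: "!hours please" falls through to the default answer.
    return ANSWERS.get(key, "Sorry, I don't know that one. Type !help.")


for message in ["good morning", "!Hours", "!hours please"]:
    print(repr(message), "->", handle_message(message))
```

The last message shows why this style of bot feels unnatural: any phrasing outside the exact keys falls through to the default reply, however close it is to a known question.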

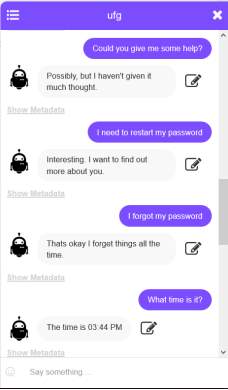

The second chatbot was also written in Node.js, with Git for version control, but this time it was hosted on the Heroku platform. It took a little more time to build before it was finally deployed on Facebook, though no more than two days. Although the bot was functional, it depended only on an if-then-else structure and clearly lacked Natural Language Processing and a well-defined model for creating the knowledge base and formulating answers that seem natural and pertinent.

Next, a bot named SoporteUFG was created using the Chatfuel platform; it provided only limited interaction based on boxes and predefined text. It was deployed on Facebook, where users had the opportunity to test it, and some potential for its use was identified even without Natural Language Processing. Chatfuel offers Natural Language Processing features, but these will be explored in further work.

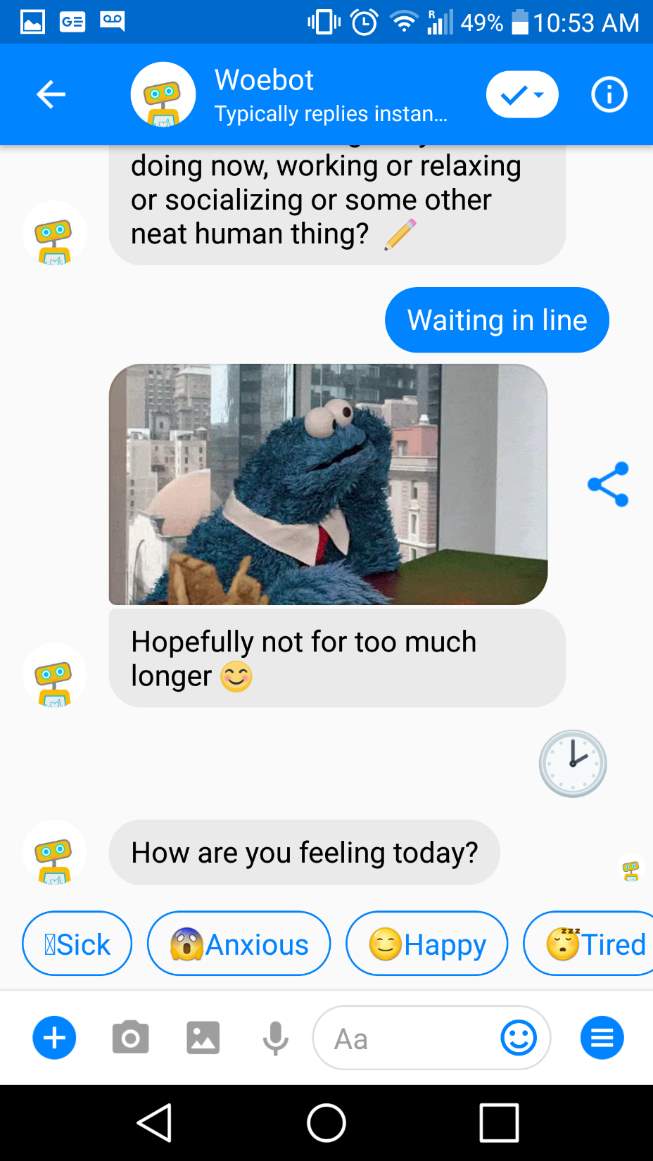

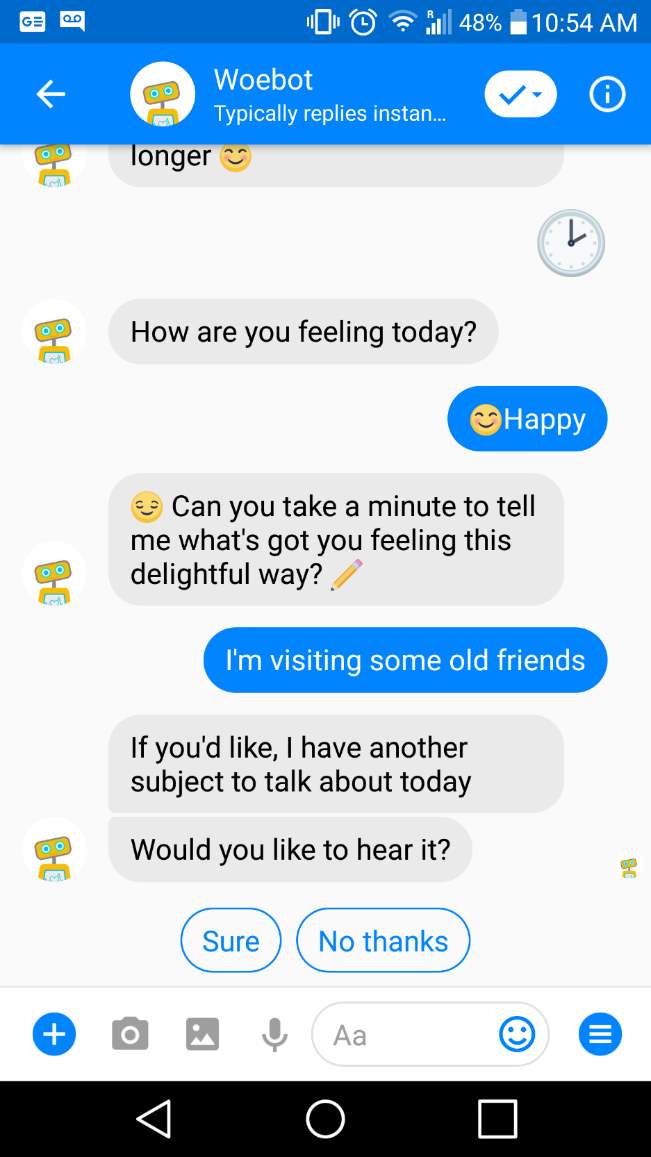

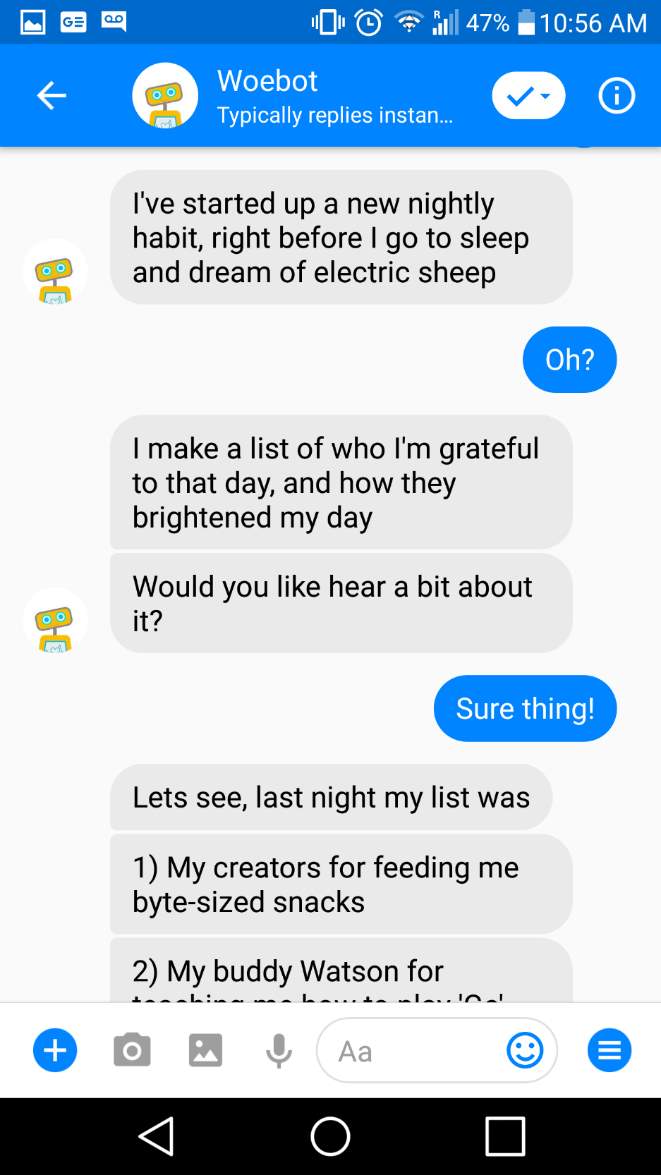

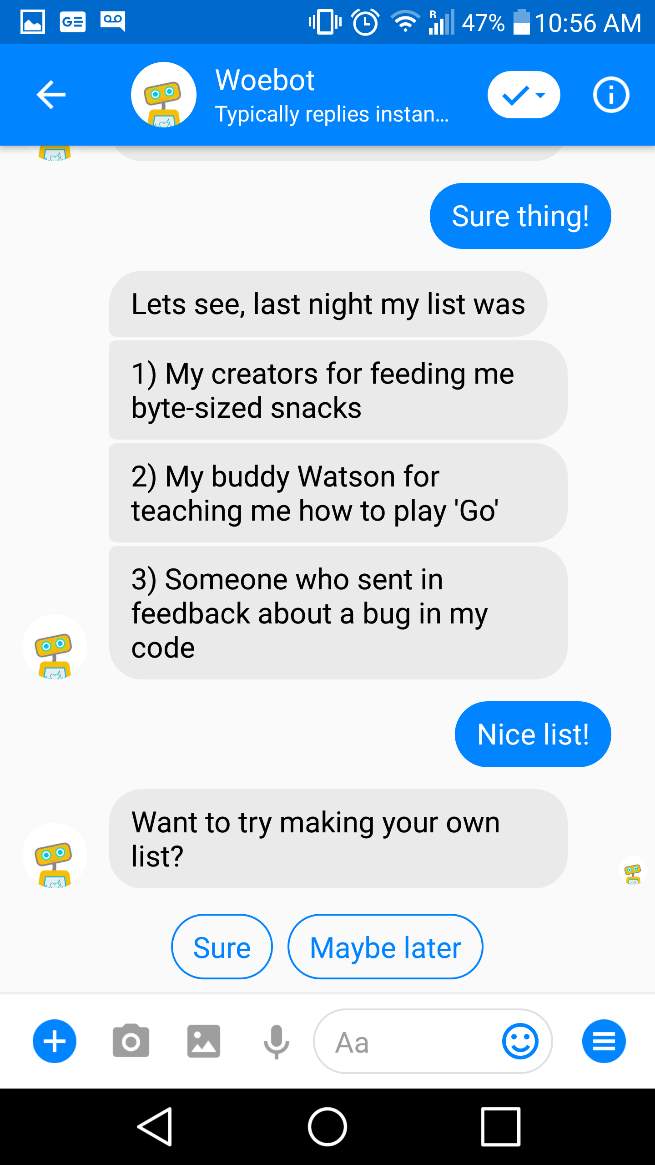

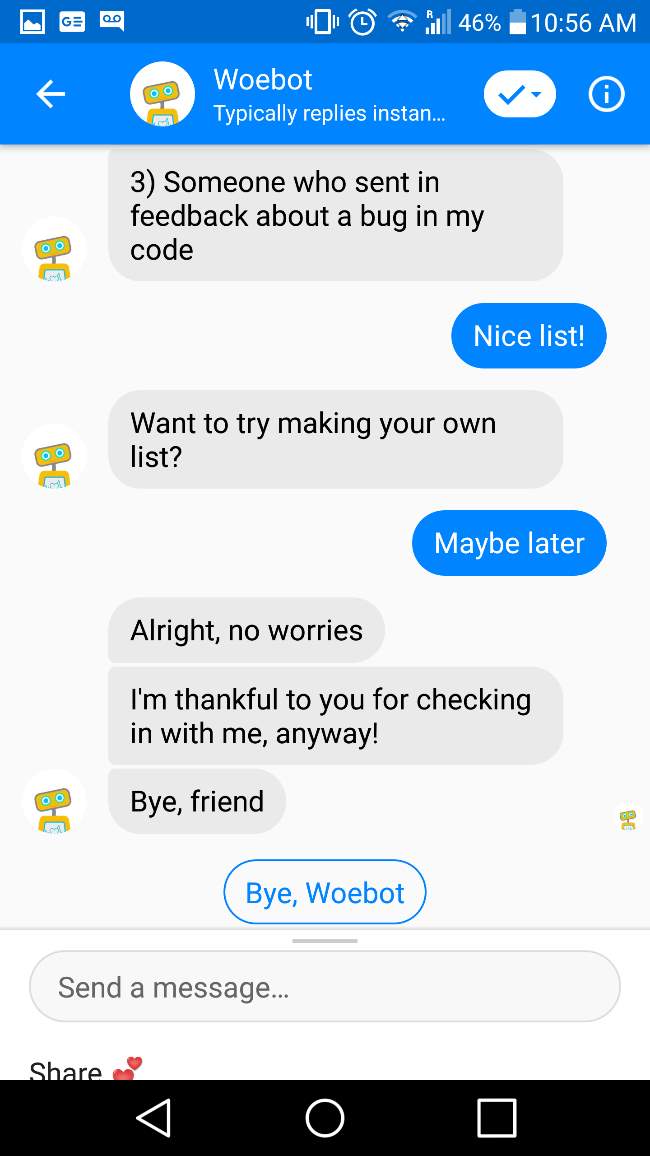

There are in fact chatbot implementations that do not use Natural Language Processing and still provide useful information and interesting interactions with users. One such case is Woebot, a fully automated conversational agent that aims to help users cope with depression. For the past year, one researcher, Wilfredo Aleman, has been interacting with Woebot, which, although it makes no use of natural language input, is built in a way that produces semi-natural responses from the agent and empowers the user to interact with it through predefined options.

Pandorabots.com offers a free tool to quickly create a small talk bot: after signing in, one can choose the language and the kind of chatbot to deploy, with the options of a blank bot or a small talk bot.

For this case, two chatbots were deployed. The blank bot had to be written from scratch, which demanded more time and familiarity with AIML; the small talk option, however, provides useful help by giving a working bot that can be modified to meet one’s needs. It includes part of Rosie’s knowledge base; Rosie is a fork of ALICE2 optimized for use on the Pandorabots platform.

The Pandorabots platform uses AIML and can support very complex chatbots. Its database includes some very popular chatbots and even recreations of famous Loebner Prize winners, and it offers help in deploying chatbots to many platforms; at the time of writing, it even allowed linking chatbots to WhatsApp, among other popular social networks. Some of the advanced tools are paid, but a free tier is provided for up to 1,000 interactions per month.

Among the chatbots in the Pandorabots directory, some written for Spanish were found. This platform is a good candidate for further work on the design, development and deployment of a Spanish-language chatbot as a technical support agent for a Latin American university. However, further work is required to determine alternatives to AIML, to construct the knowledge base, and to evaluate Natural Language Processing cores that support Spanish, with the aim of experimenting with Sentiment Analysis.

Creation of a Knowledge Base

To facilitate the construction of knowledge bases for chatbots, previous works have revealed different possibilities; for instance, taking advantage of the question-answer structure of discussion forums to extract information from them, ranking the extracted pairs, using Support Vector Machines for classification, and using some human help to judge the relevance of the answers given in the forum when ranking candidate responses (Huang, Zhou, & Yang, 2007).
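The overall shape of such a pipeline (take a forum thread, score its replies against the question, keep the best pair for the knowledge base) can be sketched as follows. Note the substitution: Huang, Zhou and Yang (2007) used a Support Vector Machine together with human judgements, whereas this sketch uses plain cosine similarity between word counts as a stand-in ranking function, and its threshold (`min_score`) and sample thread are hypothetical.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    """Lower-cased word counts."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def rank_replies(question, replies, min_score=0.2):
    """Score every reply against the thread question; keep those above min_score, best first."""
    query = bag_of_words(question)
    scored = [(cosine(query, bag_of_words(reply)), reply) for reply in replies]
    kept = [pair for pair in scored if pair[0] >= min_score]
    return sorted(kept, key=lambda pair: pair[0], reverse=True)


def thread_to_kb_entry(question, replies):
    """Return a (question, best reply) pair for the knowledge base, or None."""
    ranked = rank_replies(question, replies)
    return (question, ranked[0][1]) if ranked else None


# Hypothetical forum thread.
question = "How do I reset my password?"
replies = [
    "You can reset your password from the login page.",
    "Thanks, that worked for me too!",
    "lol",
]
print(thread_to_kb_entry(question, replies))
```

Lexical overlap alone cannot tell a helpful answer from one that merely repeats the question’s words, which is precisely why the cited work relied on a trained classifier and human judgements instead.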

Other authors have successfully used ontologies to construct knowledge bases. Jia, with his chatbot CSIEC (Computer Simulation in Educational Communication), proposes the Natural Language Markup Language (NLML) as an annotation language for natural language, intended to provide a structure for storing such knowledge so that responses can easily be constructed from it (Jia, 2009). The authors of Ontobot take another approach while still using AIML: an ontology is converted into a relational database, which makes it possible to handle changes in the domain of knowledge and to answer questions about that domain as long as the information is available in the knowledge base; the system also includes a module for Natural Language Processing (Al-Zubaide & Issa, 2011).

Conclusion

Nowadays, the advancement in computational power, the availability of development frameworks and the existence of platforms for deploying chatbots create an ideal environment for multiple projects aimed at building conversational entities. Such entities need not fool humans into believing they are human too; instead, they can help carry out tasks that require some form of communication. They can be designed as friendly bots that do not get tired, can always be assertive, and can be linked to huge knowledge bases, so that at some point in the future any question might be answered in natural language by bots that are easily and immediately accessible through mobile devices.

Further work in our research will be directed towards the implementation of a domain-specific chatbot, the construction of its knowledge base in a way that requires little maintenance from the botmaster, and the integration of natural language processing in Spanish.

Figures and tables

Figure n.º 1: MUD example, a Telnet session to IgorMUD from igormud.org. In 1994, Mauldin published his work on chatterbots connected to TinyMUD and on entering the Loebner Prize competition. The text-based nature of MUDs made it possible to use chatterbots to provide information about the dungeons and to chat in general. Source: self, Telnet session.

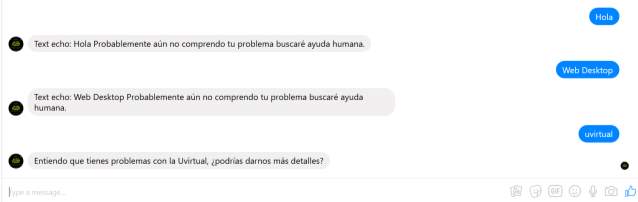

Figure n.º 2: Chatbot created from scratch using Node.js, with Git for version control and hosted on Heroku. It was later connected to Facebook, where it could be interacted with; however, because it relied only on keyword matching, the interactions were very poor, although it was set up in a relatively short time of around three hours. Source: self.

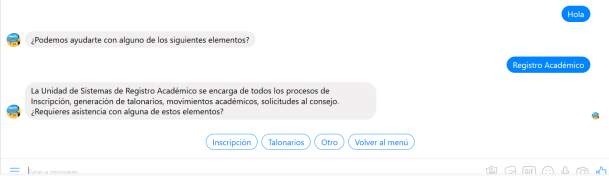
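The keyword ("key") matching used by the bot in Figure n.º 2 can be sketched as follows. This is a hypothetical re-creation in Python for illustration only, not the original Node.js code, and the keywords and replies are invented. It shows why such interactions tend to be poor: an input that contains no known keyword falls through to a fallback, and a keyword triggers its reply regardless of context or negation.

```python
import re

# Hypothetical keyword table: the first rule sharing any word with the input wins.
RULES = [
    ({"hello", "hi", "hey"}, "Hello! How can I help you?"),
    ({"price", "cost"}, "Our basic plan costs 10 dollars per month."),
    ({"bye", "goodbye"}, "Goodbye!"),
]
FALLBACK = "Sorry, I did not understand that."


def reply(message: str) -> str:
    """Return the reply of the first rule whose keywords appear in the message."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    for keywords, response in RULES:
        if words & keywords:
            return response
    return FALLBACK


print(reply("Hi there"))                         # Hello! How can I help you?
print(reply("How much does it cost?"))           # Our basic plan costs 10 dollars per month.
print(reply("How expensive is it?"))             # Sorry, I did not understand that.
print(reply("I am not asking about the price"))  # Our basic plan costs 10 dollars per month.
```

The last two calls show the typical failure modes: a paraphrase ("expensive") is not recognised, and a negated sentence still triggers the price reply.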

Figure n.º 3: Chatbot created using Chatfuel, which lets the user interact with it through boxes with preset answers instead of Natural Language Processing. It can be easily deployed but lacks the ability to understand user input. Source: self.
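To illustrate the contrast with free-text input, the sketch below models a preset-answer flow like the one in Figure n.º 3: each node shows a message with a fixed set of options, and anything other than an exact option label is rejected rather than interpreted. The node names, texts and the `ButtonBot` class are hypothetical; this is not Chatfuel's own configuration format or API.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    text: str
    # Button label -> name of the node that the button leads to.
    options: dict[str, str] = field(default_factory=dict)


# Hypothetical flow; each node is a message plus its buttons.
FLOW = {
    "start": Node("Welcome! What would you like to know?",
                  {"Opening hours": "hours", "Contact": "contact"}),
    "hours": Node("We are open Monday to Friday, 9:00 to 17:00.", {"Back": "start"}),
    "contact": Node("Please leave your question at the front desk.", {"Back": "start"}),
}


class ButtonBot:
    def __init__(self, flow: dict[str, Node], start: str = "start"):
        self.flow = flow
        self.current = start

    def show(self) -> str:
        """Render the current message followed by its buttons."""
        node = self.flow[self.current]
        return node.text + " " + " ".join(f"[{label}]" for label in node.options)

    def press(self, label: str) -> str:
        """Follow a button; anything that is not an exact label is rejected."""
        node = self.flow[self.current]
        if label not in node.options:
            return "Please choose one of: " + ", ".join(node.options)
        self.current = node.options[label]
        return self.show()


bot = ButtonBot(FLOW)
print(bot.show())                      # Welcome! What would you like to know? [Opening hours] [Contact]
print(bot.press("when do you open?"))  # Please choose one of: Opening hours, Contact
print(bot.press("Opening hours"))      # We are open Monday to Friday, 9:00 to 17:00. [Back]
```

The design makes deployment easy because every path is enumerated in advance, but the free-text question "when do you open?" is not understood even though it asks exactly what one of the buttons offers.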


Figure n.º 4: Woebot, an example of a chatbot that does not process inputs using Natural Language Processing but that proves useful for initiating a conversation, providing information and tracking the user's moods, among other things. a) A daily greeting from Woebot, asking a question with fixed answer options; b) an open question that Woebot asks after the check-in, without giving an elaborated answer to the user's input; c) Woebot introducing additional information to help the user improve their habits; d) Woebot asking the user to interact with it; e) the invitation to interact was declined, but had it been accepted the user could have written their answers, although no information is processed to provide feedback; f) an example of the log that Woebot keeps of recent moods, so that the user can review and reflect on them. Source: self.

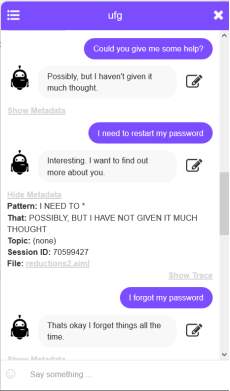

Figure n.º 5: Chatbot created using the Pandorabots platform. It provides small-talk capabilities based on the Rosie knowledge base. It allows the maintainer to see the metadata of the responses and to train the chatbot with new responses by editing them directly while using it.
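AIML-based platforms such as Pandorabots match normalised user input against the patterns of categories, which may contain wildcards such as `*`, and return the associated template. The sketch below imitates that loop in plain Python, including adding a new category while the bot is in use. It is a simplified illustration, not the interpreter Pandorabots runs: `CategoryBot`, `learn` and the `{0}` placeholder (standing in for AIML's `<star/>`) are hypothetical, and real AIML matching priorities are more elaborate than the "fewer wildcards first" rule used here.

```python
import re


def tokenize(text: str) -> list[str]:
    """Split input into words, dropping punctuation (case is kept for captures)."""
    return re.findall(r"[A-Za-z0-9']+", text)


def match(pattern: list[str], words: list[str]):
    """Match upper-case pattern words against input words.

    '*' matches one or more words. Returns the list of captured wildcard
    texts, or None when the pattern does not match.
    """
    if not pattern:
        return [] if not words else None
    head, rest = pattern[0], pattern[1:]
    if head == "*":
        for i in range(1, len(words) + 1):
            captured = match(rest, words[i:])
            if captured is not None:
                return [" ".join(words[:i])] + captured
        return None
    if words and words[0].upper() == head:
        return match(rest, words[1:])
    return None


class CategoryBot:
    """Pattern -> template pairs in the spirit of AIML categories (simplified)."""

    def __init__(self):
        self.categories = {}  # tuple of pattern words -> template

    def learn(self, pattern: str, template: str) -> None:
        """Add or replace a category, as a botmaster might while testing the bot."""
        self.categories[tuple(pattern.upper().split())] = template

    def respond(self, text: str) -> str:
        words = tokenize(text)
        # Patterns with fewer wildcards are tried first, so exact patterns win;
        # this is a simplification of AIML's real matching priorities.
        for pattern in sorted(self.categories, key=lambda p: p.count("*")):
            stars = match(list(pattern), words)
            if stars is not None:
                # '{0}' in a template stands in for AIML's <star/>.
                return self.categories[pattern].format(*stars)
        return "I have no answer for that yet."


bot = CategoryBot()
bot.learn("HELLO", "Hi there!")
bot.learn("MY NAME IS *", "Nice to meet you, {0}.")
print(bot.respond("Hello!"))          # Hi there!
print(bot.respond("my name is Ada"))  # Nice to meet you, Ada.
print(bot.respond("What is AIML?"))   # I have no answer for that yet.
bot.learn("WHAT IS AIML", "AIML is the Artificial Intelligence Markup Language.")
print(bot.respond("What is AIML?"))   # AIML is the Artificial Intelligence Markup Language.
```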

References

Abu Shawar, B., & Atwell, E. (2002). A Comparison Between Alice and Elizabeth Chatbot Systems. School of Computing Research Report Series.

Abu Shawar, B., & Atwell, E. (2007). Chatbots: Are they really useful? LDV-Forum: Zeitschrift für Computerlinguistik und Sprachtechnologie. CiteSeerX 10.1.1.106.1099

Al-Zubaide, H., & Issa, A. A. (2011). OntBot: Ontology based Chatbot. 2011 4th International Symposium on Innovation in Information and Communication Technology, ISIICT’2011, 7–12. https://doi.org/10.1109/ISIICT.2011.6149594

Turing, A. M. (1950). Computing machinery and intelligence. Mind, 59(236), 433–460. https://doi.org/10.1093/mind/LIX.236.433

Colby, K. M., Weber, S., & Hilf, F. D. (1971). Artificial Paranoia. Artificial Intelligence, 2(1), 1–25. https://doi.org/10.1016/0004-3702(71)90002-6

Fei, Y., & Petrina, S. (2013). Using Learning Analytics to Understand the Design of an Intelligent Language Tutor – Chatbot Lucy. International Journal of Advanced Computer Science and Applications. https://doi.org/10.14569/IJACSA.2013.041117

Ghose, S., & Barua, J. J. (2013). Toward the implementation of a topic specific dialogue based natural language chatbot as an undergraduate advisor. 2013 International Conference on Informatics, Electronics and Vision, ICIEV 2013, 0–4. https://doi.org/10.1109/ICIEV.2013.6572650

Heller, B., Procter, M., & Mah, D. (2005). Freudbot: An investigation of chatbot technology in distance education. Proceedings of World Conference on Educational Multimedia, Hypermedia and Telecommunications, 3913–3918. Retrieved from http://www.editlib.org/index.cfm?fuseaction=Reader.ViewFullText&paper_id=20691

Heller, B., Procter, M., Mah, D., Jewell, L., & Cheung, B. (n.d.). Freudbot: An Investigation of Chatbot Technology in Distance Education. Retrieved from http://www.pandorabots.com

Huang, J., Zhou, M., & Yang, D. (2007). Extracting chatbot knowledge from online discussion forums. In IJCAI International Joint Conference on Artificial Intelligence.

Hutchens, J. L. (1996). How to Pass the Turing Test by Cheating. School of Electrical, Electronic and Computer Engineering.

Jia, J. (2009). CSIEC: A computer assisted English learning chatbot based on textual knowledge and reasoning. Knowledge-Based Systems, 22(4), 249–255. https://doi.org/10.1016/j.knosys.2008.09.001

Lenat, D. B. (2016). WWTS (what would Turing say?). AI Magazine, 37(1), 97. https://doi.org/10.1609/aimag.v37i1.2644

Mauldin, M. L. (1994). ChatterBots, TinyMuds, and the Turing Test: Entering the Loebner Prize Competition. Proceedings of the Twelfth National Conference on Artificial Intelligence (AAAI-94).

Shawar, B. A., & Atwell, E. (2007). Different measurements metrics to evaluate a chatbot system. Proceedings of the Workshop on Bridging the Gap: Academic and Industrial Research in Dialog Technologies, (April), 89–96. https://doi.org/10.3115/1556328.1556341

Weizenbaum, J. (1966). ELIZA: A Computer Program For the Study of Natural Language Communication Between Man And Machine. Communications of the ACM, 9(1), 36–45. https://doi.org/10.1145/365153.365168

[1] Home Page of The Loebner Prize in Artificial Intelligence. Retrieved from https://web.archive.org/web/20171201175728/www.loebner.net/Prizef/loebner-prize.html

[2] Biography. Retrieved from http://drrobertepstein.com/index.php/biography

[3] Al-Rifaie, M. M. AISB Web Page. Retrieved from http://aisb.org.uk/events/loebner-prize

[4] Dunn, A. (1996, May 29). Machine Intelligence, Part I: The Turing Test and Loebner Prize. Retrieved August 15, 2018, from http://movies2.nytimes.com/library/cyber/surf/0529surf.html#1

[5] Ibid.

[6] Available at https://github.com/kranzky/megahal

[7] Bartle, R., & Cox, A. (1991, November 15). Early MUD history. Retrieved from http://www.linnaean.org/~lpb/muddex/bartle.txt

[8] For more information, see http://www.pandorabots.com

[9] Hugh Loebner, In Response https://web.archive.org/web/20171102210735/http://loebner.net:80/Prizef/In-response.html

[10] More information on their website: https://chatfuel.com/

[11] More information about Pandorabots can be found in their website: https://pandorabots.com

[12] Discord is a free text and voice chat platform, popular mainly among gamers: https://discordapp.com/
