Data Center Virtualization Management and the use of Scripting for Automation

Info: 21899 words (88 pages) Dissertation

Published: 16th Dec 2019


A study into Data Center Virtualization Management and the use of Scripting for Automation.

Abbreviations

Data Centers – DC

Virtual Machine – VM

Virtual Machine Monitor – VMM

Operating Systems – OS

System Center Virtual Machine Manager – SCVMM

Graphical User Interface – GUI

Small and Medium Enterprises – SME

Application Programming Interface – API

Central Processing Unit – CPU

PowerShell Integrated Scripting Environment – PowerShell ISE

Abstract

Virtualization of hardware is a challenge that every enterprise, whatever its size, has to face at some point. Data center hardware has historically been designed to host a single operating system and application; a rising trend for reducing the amount of hardware and improving its utilization is virtualization. Virtualizing a data center, by creating a larger number of virtual machines on a single item of hardware, gives enterprises a platform to manage their resources and hardware successfully, cost-effectively and efficiently.

The objective of the project is to research, review and evaluate virtualization management solutions and automation methods for a data center environment, providing a useful overview of the types of virtualization management used in an enterprise-level environment. A summary of enterprise-level virtual machine monitors has been conducted, giving an overall insight into these systems. Following on from this, the project identifies the need for automation, creating and critically reflecting on several essential scripts that support a virtualized data center and its administrators or managers.

The project provides a general view of the benefits, advantages and need for virtualization within a data center environment, and of why enterprises should consider this approach. It additionally identifies areas for future work and improvement.

Chapter 1 – Introduction

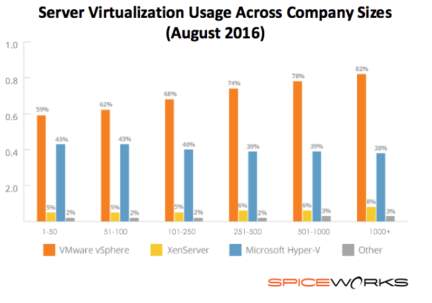

If managed correctly, virtualization can solve or dramatically reduce data center problems within any enterprise. As data centers grow within enterprises, virtualization offers a large number of solutions and benefits. Increasing demand for resources is producing larger physical data centers, which brings higher costs, energy consumption and physical space requirements. Virtualizing these machines reduces the number of physical machines needed, reducing costs and energy use. However, to gain the greatest benefit, an enterprise needs to ensure that these virtual machines are managed correctly. There is a wide selection of management applications and methods, and the virtualization market has grown rapidly in response to increasing demand: “The market has matured rapidly over the last few years, with many organizations having server virtualization rates that exceed 75 percent, illustrating the high level of penetration.” (Warrilow, 2016).

Virtualization has changed the way enterprises use data centers, allowing resources to be fully utilized and enabling scalable and flexible delivery to meet changing needs. Virtual machine management (VMM) can provide IT administrators with a clear, understandable and complete overview of all virtual machines, virtual resources and hosts. However, given the large scale of virtual data centers, managing them manually may not be the best solution, and this is where automation comes into its own. This project reviews and analyzes several of the best-known VMM products, and reviews several scripts that can be used as prototypes for essential automation.

1.1 Research Goals and Aims

Managing virtual data centers presents a number of challenges, depending on the complexity of the environment and the enterprise. Our goal is to provide a clear overview of, and awareness into, virtual machine management within a data center by reviewing current literature from within the industry. This creates a broad view of the area and an indication of what is currently available, both in software and technically.

Consequently, this research aims to critically evaluate and review three of the most common and popular virtual machine management programs available, focusing on how well they work as centralized management tools and how well they perform in a complex data center scenario. It researches their costs, versions and features to gain a complete understanding of how well they will perform and to identify the benefits to an enterprise.

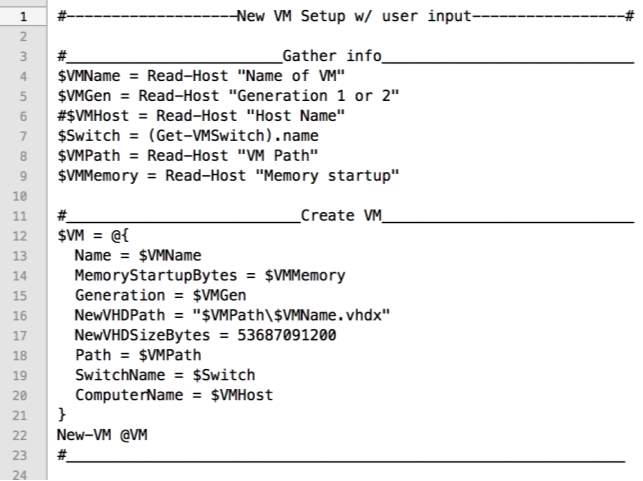

Secondly, the production of basic automation scripts demonstrates an understanding and academic base for various automation scripts that can be implemented as is, or developed further into more advanced automated procedures for an enterprise. The result is a standard platform from which recommendations for further development can be produced.

1.2 Objectives

To successfully accomplish the above aims and goals, the project will fulfil the objectives below:

- Understand virtualization, its management and how this successfully works within a data centre environment.

- Research current literature, creating a broad overview of the industry, identifying what is available in terms of virtual machine management and their performance factors.

- Investigate and critically evaluate current top market VMM applications.

- Produce automation prototype scripts.

- Critically reflect on how these can work within VMM applications to support a virtual data centre and its management.

1.3 Approach / Organisation

The project follows an organization very similar to the objectives listed above. Firstly, Chapter 2 presents background information designed to give even the least technical reader a clear definition of the topic and the terms used, and identifies key subject areas mentioned throughout the project. To expand the reader’s knowledge further, Chapter 3 reviews the current literature available, detailing key concepts within the industry.

Chapter 4 reviews and evaluates currently available VMM applications, chosen because of the high demand for them in the current market. Chapter 5 identifies the methodology used and presents the need for automation scripts, and Chapter 6 describes the implementation and testing of the scripts. A critical evaluation is then conducted in Chapter 7, with an overall conclusion and areas for further research identified in the final chapter.

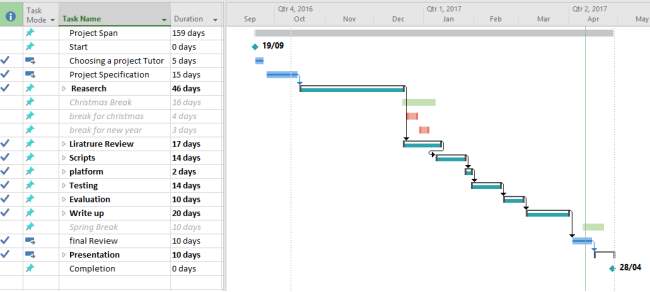

1.4 Project Plan

The project plan is shown below in Figure 1. This schedule was used to ensure that tasks and milestones were met throughout, so that each area progressed thoroughly towards a usable project and report. A more detailed version is attached in the appendix of the report.

Chapter 2 – Background Research

In this chapter, we provide a broad overview of the background required to understand the topics used throughout this project.

2.1 Data Centers

A growing trend among businesses is the use of data centers, which are seen in some of the largest and most prominent businesses around the world. A data center, as the name suggests, is a dedicated space where essential IT infrastructure, such as servers, is housed. This central resource can be either physical or virtual, and is used to support and operate the daily tasks of the business (Rouse, 2010). Their popularity has grown as businesses outgrow their own infrastructure, and data centers (DC) have become a common way of providing the computation, processing and storage needed by private and business applications. Depending on the business, it may own and operate its own in-house data center, or use a DC provided by a service provider specializing in this area.

This growth has fueled an increasing demand for computation within DCs. It can be attributed to the rapid increase in the affordability of, and access to, DC resources in a cloud format, which further increases business need as users expect online access to services 24/7.

DCs have several challenges to overcome, one of the first being the sheer amount of hardware: ensuring the reliability, cooling and energy supply of what can sometimes be thousands of servers, in a responsible and controlled manner. Some large operators, such as Google and Microsoft, have taken more radical approaches, using the ocean to cool and power their data centers (Rosoff, 2011) (Khandelwal, 2016).

In addition, to allocate and distribute resources to a business's applications efficiently, despite the limited flexibility of the hardware itself, the DC must ensure high availability, minimal downtime, suitable networking and security, and high fault tolerance, while ensuring that resources are thoroughly monitored and managed.

Many problems arise from a lack of planning of the resources and capacity needed for a specific use; improvements can be made here to make full use of all the servers and what they can offer. Server consolidation can be used to ensure that applications run at the correct performance level and use the correct amount of resources. Consolidation is either horizontal, distributing a workload across several servers, or vertical, running multiple workloads on a combined OS (Jayaswal, 2006) (Iams, 2005). Virtualization should be used to run and utilize server hardware as effectively as possible, ensuring that each VM has the correct resources allocated in a separate, secure and isolated manner (Bhuvan Urgaonkar, 2002).
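To make the idea of consolidation concrete, the sketch below packs virtualized workloads onto as few identical hosts as possible using the first-fit decreasing bin-packing heuristic. This is a common textbook heuristic, not a method taken from the sources cited above; the host capacity, VM names and resource figures are hypothetical, and real placement engines also weigh I/O, affinity rules and headroom for failover.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Host:
    name: str
    cpu_capacity: float   # hypothetical units, e.g. physical cores
    mem_capacity: float   # GB of RAM
    vms: List[str] = field(default_factory=list)
    cpu_used: float = 0.0
    mem_used: float = 0.0

    def fits(self, cpu: float, mem: float) -> bool:
        """True if the VM's CPU and memory demand fit in the remaining capacity."""
        return (self.cpu_used + cpu <= self.cpu_capacity
                and self.mem_used + mem <= self.mem_capacity)


def consolidate(vms: List[Tuple[str, float, float]],
                cpu_capacity: float, mem_capacity: float) -> List[Host]:
    """Place (name, cpu, mem) VMs on identical hosts with first-fit decreasing."""
    # Order VMs by their largest share of a host's capacity, biggest first.
    ordered = sorted(vms,
                     key=lambda v: max(v[1] / cpu_capacity, v[2] / mem_capacity),
                     reverse=True)
    hosts: List[Host] = []
    for name, cpu, mem in ordered:
        if cpu > cpu_capacity or mem > mem_capacity:
            raise ValueError(f"{name} does not fit on a single host")
        # First fit: use the first existing host with room, else add a new host.
        target = next((h for h in hosts if h.fits(cpu, mem)), None)
        if target is None:
            target = Host(f"host-{len(hosts) + 1}", cpu_capacity, mem_capacity)
            hosts.append(target)
        target.vms.append(name)
        target.cpu_used += cpu
        target.mem_used += mem
    return hosts


if __name__ == "__main__":
    workloads = [("db", 8, 32), ("mail", 4, 16), ("web1", 2, 4),
                 ("web2", 2, 4), ("file", 4, 8), ("app", 6, 24)]
    for host in consolidate(workloads, cpu_capacity=16, mem_capacity=64):
        print(host.name, host.vms)
```

With these hypothetical figures, six workloads that might otherwise occupy six dedicated servers fit on two hosts: host-1 runs db, app and web1, and host-2 runs mail, file and web2.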

Resiliency and high availability are critical concerns for DC managers, who must keep downtime to a minimum because any downtime can cost the business. Large DCs experience many hardware failures, so they need a resilient, fault-tolerant system that allows the DC to continue operating in a full or partial manner (Jayaswal, 2006).

2.2 Virtualization

Virtualization refers to creating a virtual, rather than physical, representation of a resource, such as an operating system, so that several can run concurrently on a single item of hardware. The single physical hardware item is partitioned into multiple virtual instances, rather than dedicating the whole of the hardware to one purpose. Each virtual instance created on a single item of hardware is known as a virtual machine (VM). A key use is server virtualization, which relies on a Virtual Machine Monitor (VMM), also known as a hypervisor, running directly on the hardware. The hypervisor presents each guest OS on a VM with an imitation of the hardware environment, so the guest is often unaware that it is running on virtualized hardware. Alternatively, virtualization can use a host operating system, with the VMM running on top of that OS.

The technique was first developed by IBM in the 1960s to provide simultaneous, interactive access to a mainframe computer (Susanta Nanda, 2005), but it has seen a recent resurgence in popularity owing to its ability to improve resource consumption. Virtualization is becoming a necessity; when implemented, it brings a large number of benefits, such as better hardware utilization, reduced physical space, lower energy costs, and reduced capital and administration costs. Virtualization can be applied in several ways, the main examples being applications, desktops, hardware, storage and networking (VMWare, n.d.), to improve resource usage and hardware throughput. There are several classifications of virtualization, based on how virtualization is performed and how physical resources are combined. These approaches are categorized below:

Full virtualization

Full virtualization is a technique in which a ‘bare metal’ hypervisor sits directly on the hardware, and each guest operating system runs unmodified, unaware that it is virtualized. The guest OS issues commands to what it believes is the hardware, without knowing that this hardware is simulated by the hypervisor. VMware and Microsoft Virtual Server are prime examples of this. The performance of full virtualization as a complete system is not always ideal: I/O-intensive systems struggle, and many critical instructions can be delayed because binary translation is used (Kai Hwang, 2012). However, this is the only approach that requires no hardware or OS alterations or modifications, which is its largest advantage.

Para Virtualization

Para-virtualization is a method in which the guest OS is aware of the virtualization. The modified OS includes drivers that communicate with the hardware or host OS through a simple hypervisor layer, which also provides calls for memory management and interrupt handling. The guest OS must be altered to support this method, although only its kernel is modified, in order to improve performance. Operating in this manner reduces overhead and allows privileged instructions to be handled more efficiently than under full virtualization, because the guest OS and hypervisor work together. Para-virtualization also offers better I/O device handling without the labor-intensive device emulation otherwise required, at the cost of modifying the guest OS (Jun Nakajima, 2007). This provides a faster service than full virtualization. Xen uses a para-virtualization method (Paul Barham, 2003).

Hardware Assisted virtualization

Hardware-assisted virtualization is a type of full virtualization built on enhancements introduced by processor vendors, which target privileged instructions so that they can run directly on the processor without affecting the host. These privileged calls are handled directly by the hardware, removing the need for para-virtualization or binary translation. This approach requires processor support, such as Intel VT-x or AMD-V (Vmware, 2008). Hardware-assisted virtualization gives the VMM or hypervisor a simpler and more robust implementation, with performance that can be improved compared to software-based full virtualization (Jun Nakajima, 2007).
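On Linux, a quick first check for this kind of hardware support is to look for the `vmx` (Intel VT-x) or `svm` (AMD-V) flag in `/proc/cpuinfo`. The sketch below parses that text; it is an illustration only. The presence of a flag shows that the CPU advertises the extension, while firmware settings and the hypervisor's own checks decide whether it can actually be used.

```python
from pathlib import Path
from typing import Optional


def hw_virtualization_support(cpuinfo_text: str) -> Optional[str]:
    """Return 'Intel VT-x', 'AMD-V' or None from the text of /proc/cpuinfo."""
    flags = set()
    for line in cpuinfo_text.splitlines():
        # Each logical CPU has a line such as "flags : fpu vme ... vmx ...".
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            flags.update(value.split())
    if "vmx" in flags:
        return "Intel VT-x"
    if "svm" in flags:
        return "AMD-V"
    return None


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        result = hw_virtualization_support(cpuinfo.read_text())
        print(result or "no hardware virtualization flag found")
```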

Partial Virtualization or Hybrid Virtualization

In addition to the three types above, there is a method that combines two of them, known as partial or hybrid virtualization. Para-virtualization is used for specific hardware drivers, while full virtualization is used for all other features. The two can also be combined with hardware-assisted virtualization. As a result, the guest OS and applications may need some modification, but the approach merges some of the benefits of both styles.

2.3 Virtual Machine Management (VMM)/ Hypervisors

Virtual machine managers, or hypervisors, are designed to enable communication between the hardware and the VMs through an abstraction layer. Typically, a VMM is responsible for monitoring VMs and enforcing policies on them, either as software on a host OS or directly on the hardware, known as bare metal. A number of different programs are available that keep track of everything that occurs in a VM. This software manages and configures the use of resources such as CPU, memory and I/O transfers, and supports the host and network resources in order to deploy efficient and suitable VMs (Rouse, 2016) (Andreas Blenk, 2015).
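One policy a VMM commonly monitors is how far the resources promised to VMs exceed the physical resources of a host. The sketch below computes the vCPU-to-physical-core ratio and the memory commitment ratio for one host, and flags any that exceed a limit. The limits of 4.0 and 1.0 and the VM record format are hypothetical values chosen for illustration, not recommendations from any vendor.

```python
from typing import Dict, List, Tuple


def overcommit_ratios(physical_cores: int, physical_mem_gb: float,
                      vms: List[Dict[str, float]]) -> Tuple[float, float]:
    """Return (vCPU:pCPU ratio, allocated memory / physical memory) for one host."""
    total_vcpus = sum(vm["vcpus"] for vm in vms)
    total_mem = sum(vm["memory_gb"] for vm in vms)
    return total_vcpus / physical_cores, total_mem / physical_mem_gb


def check_host(physical_cores: int, physical_mem_gb: float,
               vms: List[Dict[str, float]],
               max_cpu_ratio: float = 4.0,   # hypothetical policy limit
               max_mem_ratio: float = 1.0    # hypothetical: no memory overcommit
               ) -> List[str]:
    """List policy warnings; an empty list means the host is within the limits."""
    cpu_ratio, mem_ratio = overcommit_ratios(physical_cores, physical_mem_gb, vms)
    warnings = []
    if cpu_ratio > max_cpu_ratio:
        warnings.append(f"vCPU:pCPU ratio {cpu_ratio:.2f} exceeds {max_cpu_ratio}")
    if mem_ratio > max_mem_ratio:
        warnings.append(f"memory commitment {mem_ratio:.2f} exceeds {max_mem_ratio}")
    return warnings


if __name__ == "__main__":
    # Hypothetical host: 16 cores, 128 GB RAM, ten VMs of 8 vCPUs and 16 GB each.
    busy = [{"vcpus": 8, "memory_gb": 16}] * 10
    for warning in check_host(16, 128, busy):
        print(warning)
```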

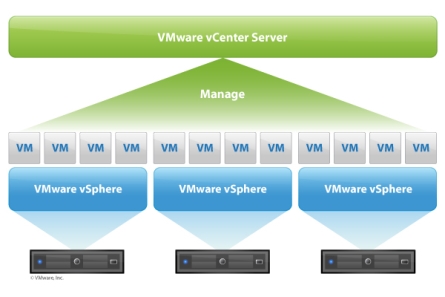

The hypervisor acts as a gateway to the hardware for the VMs, isolating the guest OSs and applications from the hardware. This allows the host to run one or more VMs with different OSs that work on, and share, the same single piece of hardware, while remaining independent of each other and relying only on the hardware and hypervisor. This isolation means the hardware is unaware that multiple VMs are running. Hypervisors bring many additional benefits, such as the ability to migrate a VM at a moment’s notice with minimal disruption, causing very little downtime to the business or its users.
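Live migration of this kind is commonly implemented with iterative pre-copy, the approach described by Clark et al. (Christopher Clark, 2005): memory is copied while the VM keeps running, pages dirtied during each round are re-sent, and the VM is paused only for a final, short stop-and-copy. The sketch below is a simplified model assuming a constant dirty rate and bandwidth, both in pages per second, with a hypothetical stopping threshold; the figures in the usage block are illustrative, not measurements.

```python
from typing import Tuple


def precopy_migration(memory_pages: float, bandwidth: float, dirty_rate: float,
                      stop_threshold: float = 1000, max_rounds: int = 30
                      ) -> Tuple[int, float, float]:
    """Model iterative pre-copy migration as a fluid approximation.

    Returns (pre-copy rounds, total pages sent, downtime in seconds).
    """
    to_send = memory_pages          # the first round copies all of memory
    sent = 0.0
    rounds = 0
    # Keep copying while the VM runs, until the dirty set is small enough.
    while to_send > stop_threshold and rounds < max_rounds:
        duration = to_send / bandwidth
        sent += to_send
        rounds += 1
        # Pages written by the running VM during this round must be re-sent.
        to_send = min(memory_pages, dirty_rate * duration)
    # Stop-and-copy: the VM is paused while the remaining pages are sent.
    downtime = to_send / bandwidth
    sent += to_send
    return rounds, sent, downtime


if __name__ == "__main__":
    # 1 GiB of 4 KiB pages, ~100 MB/s link, VM dirtying 2,500 pages per second.
    rounds, sent, downtime = precopy_migration(262_144, 25_000, 2_500)
    print(f"{rounds} rounds, {sent:.0f} pages sent, {downtime * 1000:.1f} ms paused")
```

With these figures the model finishes after three pre-copy rounds with a pause of roughly 10 ms, whereas pausing the VM to copy its whole memory image would take about 10.5 seconds. The `max_rounds` cap matters when the dirty rate approaches the bandwidth, since the dirty set then never shrinks.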

Several types of VMM tool are available, each with different techniques and strengths depending on the requirements. They are divided into Type one and Type two: Type two is mostly used for client-based systems, while Type one is commonly used in enterprise deployments.

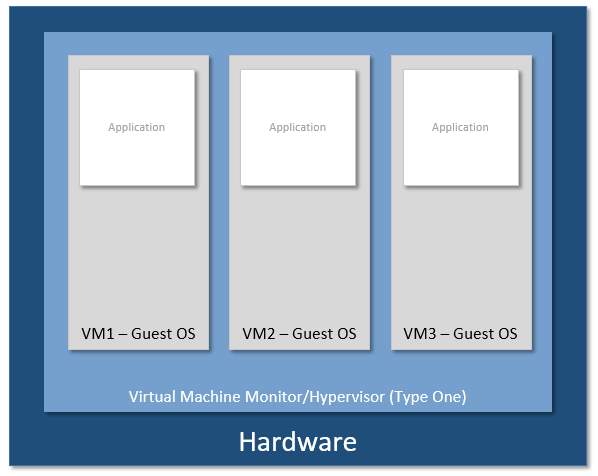

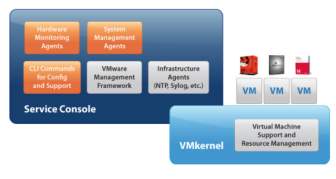

Type one

Type one runs on bare metal, directly on the hardware without the need for a host OS, providing clear and direct communication between the VM and the hardware. Type one VMMs are typically known as ‘hypervisors’. Because of this direct communication, Type one offers faster access to the hardware than Type two (Lee, n.d.) (Robin, 1999). Type one also offers greater security in comparison. The major benefit is that each VM is separate, so if an OS or VM crash occurs, the other VMs are not affected (More Processes, 2013). Several popular programs fall into this category, such as Xen, VMware vSphere, Microsoft Hyper-V and KVM.

Figure 2 – VMM Type One Example

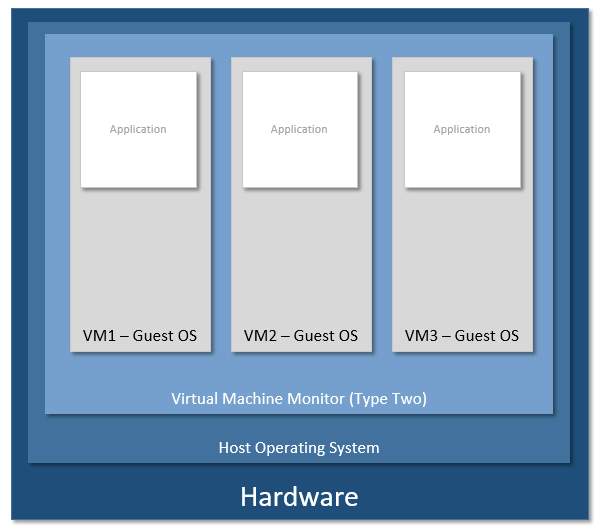

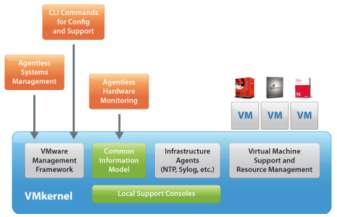

Type two

A Type two VMM runs on a host OS, managing, monitoring and redirecting requests to the hardware and hosting environment, with minor processing performed during the redirect. The host machine contains the hypervisor that hosts the VMs, and each guest VM runs in a secure and isolated environment. The VM runs on the third level above the hardware, on top of a virtualization layer that presents an abstraction of the hardware for the VMs to use (Lee, n.d.) (Robin, 1999).

Examples of Type two systems are VirtualBox, Parallels, VMware Workstation and VMware Fusion. Type two VMMs are used when users want access to the host OS and what it contains, such as applications and documents. One advantage is hardware support: Type two can support a wider range of hardware and is simpler and less complicated than Type one. However, Type two relies on the host OS; if an error occurs on the host, or the host needs to restart, the VMs are directly affected (Security Wing, 2014).

Figure 3 – VMM Type Two Example

Several products have been created to support enterprise-level virtualization. These are generally closed-source, commercial systems that offer increasing support for managing multiple servers hosting large numbers of virtual machines. They operate like standard VMM programs, but give an overall view of all hosts and VMs within the network. These complete VMM products generally use Type one VMMs, because of the direct control and resource allocation they provide.

2.4 Data Center Virtualization

Data center virtualization is technically defined as “the process of designing, developing and deploying a data center on virtualization technologies” (Techopedia, n.d.). In short, by using virtualization technology with data center hardware, multiple virtual resources can be created on fewer items of hardware, minimizing the costs and resources needed for an enterprise-scale data center.

Hardware devices can be expensive, consume space and power, and generate a lot of heat; in addition, maintenance tasks such as redeployment and backups can be time-consuming and may require extensive downtime. Hardware also runs the risk of failing, posing potential threats to the business. Through virtualization, these servers can be consolidated onto fewer pieces of hardware in a virtual environment, in place of the vast server farms that were historically designed to run a single enterprise application, such as a database or an Exchange server, on each item of hardware (VMware, n.d.).

Server virtualization has become popular in DCs, providing an easy method of partitioning the hardware so that multiple virtual servers run in isolated areas on a single hardware item, each operating as an independent server. Data centers are typically designed to use a Type one VMM, or hypervisor, where the dependency on another OS is low, reducing risk and lowering overhead.

As previously mentioned, a number of VMM products offer enterprise-level management of data centers; these systems are designed to connect and manage multiple host servers running a high volume of VMs. Designed for enterprise data center virtualization, these management systems provide Type one VMMs with support, management and ease of use.

Given the vast size and demands of data centers, a single place from which administrators can manage and support the whole data center is critical to smooth operation. Such centralized VMM tools can offer rapid deployment, management, monitoring and reporting across multiple hosts and VMs. They can work in coordination with standard VMM tools, meeting the increasing need for the greater visibility and monitoring required to gain the full benefits of virtualization.

Both types of VMM system benefit from automation to ease the workload and demands of running and administering data centers, and this can be achieved through automated scripts. Once written, scripts can ease and support essential tasks, reducing administrators’ workload and helping an enterprise gain the most from virtualization.

Virtualizing an enterprise’s infrastructure brings many benefits, but it can also introduce new challenges. Whether virtualization is appropriate for a business depends heavily on the application and platform, some of which are more difficult to virtualize than others. Some of the many benefits of VMs within data centers, specifically for enterprises, are:

Reduced costs

Using VMs gives access to all resources and can potentially run every server at full capacity, reducing costs in several areas: fewer physical servers overall, and lower upkeep, maintenance and operational costs. Because VMs can be moved easily between hardware within the data center, costs can also drop through reduced energy use and simpler disaster recovery plans (Christopher Clark, 2005).

Higher utilization

Running servers near capacity, using all of their resources, makes the best use of the available servers and translates into more VMs hosted on fewer physical servers (Jeremy Sugerman, 2001). This level of resource consumption matters to any enterprise, ensuring that the assets within the data center are not under-utilized, which in turn lowers costs, maintenance and management effort.

Increased management

Virtualization makes management easier for users, both of the infrastructure and of the virtual machines. This flexibility allows VMs to be created, duplicated and removed as needed, and replicated across servers within the enterprise. It supports fast and easy provisioning of applications within the VMs, a vital property that can improve performance, reliability and management (VMware, n.d.).

Disaster recovery, redeployment and backups

Virtual machines can be transformed dynamically, allowing highly adaptive and responsive data centers with rapid redeployment between physical servers. Virtual snapshots provide responsive, up-to-date backups, allowing a VM to be redeployed in a matter of minutes. For disaster recovery, virtualization removes the dependency on a specific item of hardware, allowing VMs to adapt and operate on different servers. This, together with the reduced number of servers required, allows a faster and more affordable recovery plan: virtual assets provide a simpler backup and recovery process, greater flexibility of resources and a shorter recovery time (Hoppes, 2009).
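Snapshots still have to be managed, because each one consumes storage, so a typical small automation task is a retention policy. The sketch below selects snapshots older than a given age for deletion while always keeping the newest few. The seven-day and two-snapshot defaults and the (name, creation time) input format are hypothetical; in practice the list would come from, and the deletions would be sent to, whichever VMM tool is in use.

```python
from datetime import datetime, timedelta
from typing import List, Tuple


def snapshots_to_prune(snapshots: List[Tuple[str, datetime]], now: datetime,
                       max_age_days: int = 7, keep_min: int = 2) -> List[str]:
    """Return names of snapshots to delete under a simple age-based policy."""
    newest_first = sorted(snapshots, key=lambda snap: snap[1], reverse=True)
    cutoff = now - timedelta(days=max_age_days)
    # The newest keep_min snapshots are always retained, however old they are.
    candidates = newest_first[keep_min:]
    return [name for name, created in candidates if created < cutoff]


if __name__ == "__main__":
    now = datetime(2019, 12, 16)
    snaps = [(f"snap-{age}d", now - timedelta(days=age)) for age in (1, 3, 10, 20)]
    print(snapshots_to_prune(snaps, now))
```

Keeping a minimum number of snapshots regardless of age avoids the situation where a VM that has not been snapshotted recently is left with no restore point at all.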

2.5 Virtualized Data Center Automation

Data center automation is the process of managing and automating the workflows, processes and monitoring of the data center facility. It provides a centralized solution that can access, view, edit, monitor and administer most, if not all, of the resources found within the data center. Depending on the tools used, data center automation can be a relatively easy process (Techopedia, n.d.).

A number of applications are available in which automation steps can be set up and edited across the data center; these applications then monitor data center performance and run automated tasks when a particular event occurs, or at a preset time. Another method, very common in small to medium-sized data centers, is scripting: running batch scripts, or using PowerShell to execute the desired task (Milne, 2015).
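The two trigger styles described above, reacting to an event or running at a preset time, can be sketched as a small rule table that a monitoring loop evaluates. Everything here is hypothetical: the rule names, the `host_cpu` metric, the 90% threshold and the placeholder actions stand in for whatever the chosen tool or PowerShell script would actually do.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Callable, Dict, List, Optional


@dataclass
class Rule:
    name: str
    action: Callable[[], str]          # placeholder for the real automated task
    metric: Optional[str] = None       # event rule: metric to watch
    threshold: float = float("inf")    # event rule fires when metric exceeds this
    at_hour: Optional[int] = None      # schedule rule: hour of day to run


def due_actions(rules: List[Rule], metrics: Dict[str, float],
                now: datetime) -> List[str]:
    """Run every rule whose event or schedule condition holds; return results."""
    results = []
    for rule in rules:
        event_fired = rule.metric in metrics and metrics[rule.metric] > rule.threshold
        schedule_fired = rule.at_hour is not None and now.hour == rule.at_hour
        if event_fired or schedule_fired:
            results.append(f"{rule.name}: {rule.action()}")
    return results


if __name__ == "__main__":
    rules = [
        Rule("cpu-alert", lambda: "migrate busiest VM",
             metric="host_cpu", threshold=90.0),
        Rule("nightly-report", lambda: "email VM report", at_hour=2),
    ]
    print(due_actions(rules, {"host_cpu": 95.0}, datetime(2019, 12, 16, 14, 0)))
    print(due_actions(rules, {"host_cpu": 40.0}, datetime(2019, 12, 16, 2, 0)))
```

Because the schedule check only compares the hour, a loop polling every minute would fire the nightly rule sixty times; a real scheduler records when each rule last ran, which is omitted here for brevity.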

Automation offers several features that can benefit the data center, including a wide overview of, and insight into, the complete data center; automation of routine processes such as patching, updating and reporting; and scheduling of tasks for out-of-office hours or other set times.
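Reporting is one of the routine processes most easily automated. The sketch below turns a VM inventory into a CSV report sorted by host and flags VMs that are not running for review. The inventory fields are hypothetical; in practice they would be exported from the management tool rather than typed in.

```python
import csv
import io
from typing import Dict, List


def vm_report(inventory: List[Dict[str, object]]) -> str:
    """Return a CSV report with one row per VM, sorted by host then VM name."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["host", "vm", "state", "vcpus", "memory_gb", "note"])
    for vm in sorted(inventory, key=lambda v: (v["host"], v["name"])):
        # VMs that are not running still consume storage, so flag them.
        note = "" if vm["state"] == "running" else "not running: review"
        writer.writerow([vm["host"], vm["name"], vm["state"],
                         vm["vcpus"], vm["memory_gb"], note])
    return buffer.getvalue()


if __name__ == "__main__":
    inventory = [
        {"name": "web1", "host": "host-2", "state": "running",
         "vcpus": 2, "memory_gb": 4},
        {"name": "old-test", "host": "host-1", "state": "stopped",
         "vcpus": 1, "memory_gb": 2},
    ]
    print(vm_report(inventory), end="")
```

Returning the report as a string keeps the function easy to test; a scheduled job could write it to a file share or attach it to an email.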

Chapter 3 – Literature Review

Within this chapter, we identify and critically evaluate literature related to, and supporting, this project, focusing on management within a virtual data center environment. To evaluate areas of improvement and advancement, we review a number of related papers to gain an all-round understanding of the subject matter and its recent and past developments.

3.1 Hypervisor/Virtual Machine Management Applications

Hypervisors vs. Lightweight virtualization: A Performance Comparison – Roberto Morabito, Jimmy Kjallman and Miika Komu – 2015.

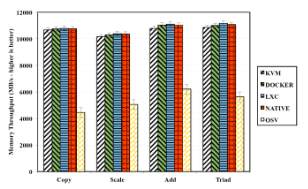

Written in 2015, this paper presents a performance evaluation of several Linux-based virtualization systems. Its aim is to compare the Linux KVM hypervisor against lightweight alternatives: the container-based systems LXC and Docker, and the lightweight cloud operating system OSv. Morabito and his team argue that the overhead of traditional hypervisor-based systems is deterring users from the many benefits of virtualization.

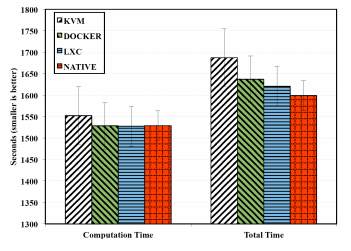

The methodology provides a standard testing platform, using non-virtualized (native) performance as a baseline, to monitor CPU, memory, disk I/O and network I/O performance. For the CPU results, they found that “Both the container-based solutions perform better than KVM” (Roberto Morabito, 2015), as shown in Figure 4. The disk I/O test showed very similar performance throughout, although Docker at one point appeared to perform better than the native system. In the memory test, OSv's result differed by roughly half, as seen in Figure 3. Overall, the authors state that container-based solutions are challenging the more traditional systems, although KVM has improved in recent years.
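Using native performance as the baseline means each virtualized result is reported as a percentage of what the same benchmark achieved on bare hardware. The sketch below shows that normalization; the scores are invented for illustration and are not figures from Morabito et al. For throughput-style metrics higher is better, while for latency-style metrics the ratio is inverted so that 100% always means native performance.

```python
from typing import Dict


def relative_to_native(native: float, results: Dict[str, float],
                       higher_is_better: bool = True) -> Dict[str, float]:
    """Express each platform's result as a percentage of the native baseline."""
    if native <= 0 or any(score <= 0 for score in results.values()):
        raise ValueError("benchmark results must be positive")
    if higher_is_better:
        return {name: score * 100 / native for name, score in results.items()}
    # For latencies a smaller value is better, so the ratio is inverted.
    return {name: native * 100 / score for name, score in results.items()}


if __name__ == "__main__":
    # Hypothetical throughput (ops/s) and latency (ms) figures.
    print(relative_to_native(1000, {"KVM": 900, "Docker": 990}))
    print(relative_to_native(2.0, {"KVM": 2.5}, higher_is_better=False))
```

A value above 100% would correspond to the kind of disk I/O result reported for Docker, where the virtualized platform appeared to outperform the native system.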

Discussion

The investigation conducts a good and intensive review and benchmark of alternatives to standard hypervisor technology. The experiment is very similar to “Container-based Operating System Virtualization: A Scalable, High-performance Alternative to Hypervisors”, although with some differences, such as the comparison against the native machine as well as the hypervisor. This work is a good, progressive follow-on that provides more up-to-date information on container-based systems and on alternatives to those pitched in the earlier paper. KVM is also used in other comparative literature, such as “A Component-Based Performance Comparison of Four Hypervisors” (Jinho Hwang, 2013). That paper supports the claims made, producing a similar set of results, although Hwang et al. conducted a more in-depth experiment, testing under several different circumstances.

The results show, surprisingly, how well container-based systems perform in comparison to hypervisors. However, the security and isolation of VMs under the tools evaluated still need reviewing, which Morabito identifies as further work.

A Component-Based Performance Comparison of Four Hypervisors – Jinho Hwang, Sai Zeng, Frederick Y. Wu and Timothy Wood – 2013.

Hwang et al. compare four hypervisors in this paper, reviewing their CPU, memory, disk I/O and network I/O performance. The four hypervisors, Hyper-V, KVM, vSphere and Xen, are run through a series of tests with the aim of discovering which offers the best performance and features for data center administrators. For a clean experiment, the same hardware is set up for each hypervisor, with the same virtual machines, giving a clean environment and equal parameters. A number of external monitoring and testing applications are used to review the pre-agreed performance factors, and the effects of running multiple VMs are also tested.

In summary, Hwang et al. found no overall superior hypervisor. Instead, each hypervisor had areas needing improvement and areas where it surpassed the rest. For example, in the disk test using Bonnie++, Xen had the worst performance of the four because of multiple smaller write actions. Overall, vSphere performed best in the majority of tests, as predicted, owing to the program’s maturity and development compared with the others. Hwang et al. state, “Our results indicate that there is no perfect hypervisors and that different workloads may be best suited for different hypervisors” (Jinho Hwang, 2013), confirming that every hypervisor has its own benefits and strengths, and that the best choice depends on the use and the enterprise.

Discussion

Overall, the paper presents a good investigation with strong evidence to support its claims. Like “Hypervisor Shootout: Maximizing Workload Density in the Virtualization Platform”, “Hypervisors vs. Lightweight virtualization: a Performance Comparison” (Roberto Morabito, 2015) and “Performance Evaluation and Comparison of the Top Market Virtualization Hypervisors”, it reviews hypervisor performance. Each has strengths supporting its claims; Hwang et al. test both open- and closed-source programs, giving a balanced picture of the systems available and showing the differences in what they offer. The difference between this paper and “Hypervisor Shootout” is that Hwang et al. test the power and performance of the machines rather than the number of VMs that can be run effectively. Together, the overlap between these papers gives an enterprise a clear and thorough overview of the factors that can affect its choice of hypervisor.

Performance Evaluation and Comparison of the Top Market Virtualization Hypervisors – Nile University – Abdellatief Elsayed and Nashwa Abdelbaki – 2013.

This paper focuses on identifying the characteristics of top-market closed-source hypervisors and providing a clear comparison of their performance. Elsayed and Abdelbaki compare VMware ESXi5, Microsoft Hyper-V 2008R2 and Citrix Xen Server in two stages, the first with one VM each and the second with an increased VM load; thirdly, they compare Hyper-V 2008R2 against Hyper-V 2012RC. All experiments run on a standardized server, each monitored by both the DS2 Dell data store and PRTG.

Discussing the results, Elsayed et al. found during the first test, with one VM running, a “Superiority of using the Citrix Xen server followed in decreasing order by the Hyper-V and then VMware respectively” (Abdellatief Elsayed, 2013, p. 48), attributing this to Xen’s lower server CPU and memory usage during the tests. In the second test, Hyper-V showed dominance, again because of lower CPU utilization and memory usage overall; surprisingly, although Xen showed the lowest CPU utilization, it had a 98% increase in memory usage. The third experiment demonstrated that Hyper-V 2012 RC had the advantage, with “enhancement in memory handling and disk IOP’s” (Abdellatief Elsayed, 2013, p. 49), a result predicted in the paper.

Discussion

The research uses well-conducted and adequate evaluation methods, backing up each point by running the experiment with two different monitoring tools. Elsayed et al. make a convincing argument, with clear and reasonable data supporting their results. Supporting evidence is found in “A component-Based Performance Comparison of Four Hypervisors” (Jinho Hwang, 2013), which provides a secondary test showing reduced Xen performance in CPU testing. This paper is very similar to “Hypervisors vs. Lightweight virtualization: a Performance Comparison” (Roberto Morabito, 2015), in which open-source hypervisor systems are tested in a very similar fashion. Together, the two papers give a clear understanding of the performance of a wide variety of VMM systems. Future work could align the two papers and compare the performance of every VMM system under the same conditions.

Secure Virtualization for Cloud Environment using Hypervisor-based Technology – Farzad Sabahi – 2012.

Sabahi conducted this investigation in 2012, focusing on the security of VMs and the hypervisor technology they run on. Sabahi reviews the use of VMs in an enterprise cloud environment or data center and proposes an improved architecture to increase security and reduce potential attacks. The investigation examines the growing need for isolation between VMs on shared physical machines, ensuring that a VM can access neither other VMs nor the hypervisor. Reviewing both the benefits and weaknesses of hypervisor-based systems leads Sabahi to define three major levels of security management that enterprise hypervisors should have:

- Authentication.

- Authorization.

- Networking.

The proposed architecture strengthens these three areas to improve both VM isolation and hypervisor security by introducing security and reliability units into the hypervisor layer, thereby reducing vulnerabilities and producing a more complex but more secure system.

Discussion

Overall, the project contains a respectable research idea; future work would be to build a prototype and test the improved security of the hypervisor. Although this paper has little in common with most of the literature reviewed, it highlights the increasing need for isolation between VMs and the technology that will be needed to achieve it. This is seen again in “VManage: Loosely Coupled Platform and Virtualization Management in Data Centers” (Sanjay Kumar, 2009), where part of the study focuses on the need for isolation between VMs and VMMs. The importance of isolation is also seen throughout “Container-based Operating System Virtualization: A Scalable, High-performance Alternative to Hypervisors” (Stephen Soltesz, 2007), with supporting experiments examining the trade-off between isolation and efficiency.

Hypervisor Shootout: Maximizing Workload Density in the Virtualization Platform – The Taneja Group – 2010

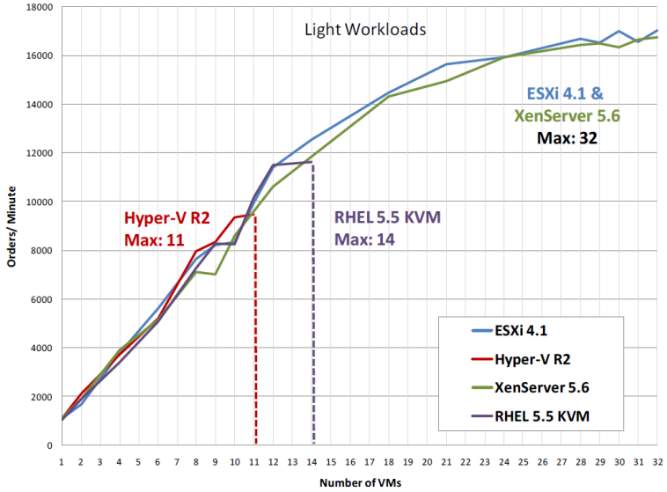

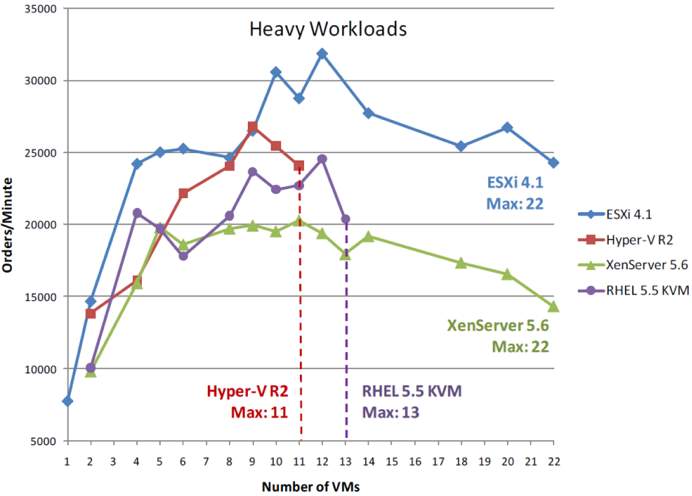

This investigation, conducted by the Taneja Group in 2010, examines VM density: the number of VMs that can run concurrently on a hypervisor while performing a number of different workloads. The Taneja Group focuses on four well-known hypervisors:

- ESXi 4.1

- Hyper-V R2

- XenServer 5.6

- Red Hat Enterprise Linux 5.5 Kernel-based Virtual Machine (KVM)

For testing, the group used the DVD Store Version 2 test application developed by Dell, which allowed them to apply stress under controlled conditions and provided a standard, clean testing platform. They also tested all hypervisors on a single hardware setup that remained consistent throughout. To strengthen their tests further, they split testing into two categories: light (a two-thread process with a 60-minute settle period) and heavy (a more aggressive load with the settle period reduced to 0 minutes).

The group then discusses its results. In the light tests, Hyper-V had the weakest memory overcommitment features, with performance dropping off at around 11 VMs using 22 GB of VM memory. KVM fared slightly better, supporting 14 parallel VMs before reaching its memory and CPU limits. XenServer and ESXi performed best, each reaching a maximum of 32 VMs. The Taneja Group then describes the heavy-test results, which show a significant reduction in VM numbers. Hyper-V again struggled, reaching its limit at the same level, while XenServer and ESXi both reached 22 VMs, albeit with a difference in performance (The Taneja Group, 2012).

Figure 6 – Performance under light workloads vs. the number of concurrent VMs (Hypervisor Shootout: Maximizing Workload Density in the Virtualization Platform)

Figure 7 – Performance under heavy workloads vs. the number of concurrent VMs (Hypervisor Shootout: Maximizing Workload Density in the Virtualization Platform)
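VM density is ultimately bounded by whichever host resource runs out first, which is why memory overcommitment matters so much in the light-workload results. The back-of-the-envelope sketch below illustrates this. The host size, hypervisor reservation and overcommit ratios are hypothetical; only the 2 GB per VM is taken from the text (22 GB across 11 VMs). Real density tests, such as the Taneja Group's, measure when performance degrades under load, not just raw capacity.

```python
def density_limit(host_mem_gb, host_cores, vm_mem_gb, vm_vcpus,
                  hypervisor_mem_gb=0.0, mem_overcommit=1.0, cpu_overcommit=1.0):
    """Rough upper bound on how many identical VMs fit on one host.

    All parameters are illustrative assumptions, not measured values.
    """
    # Memory left for guests, scaled by how far the hypervisor can overcommit it.
    usable_mem = (host_mem_gb - hypervisor_mem_gb) * mem_overcommit
    # Virtual CPUs the host is willing to schedule across its physical cores.
    usable_vcpus = host_cores * cpu_overcommit
    # Whichever resource runs out first sets the limit.
    return min(int(usable_mem // vm_mem_gb), int(usable_vcpus // vm_vcpus))


# Hypothetical 32 GB, 16-core host running 2 GB, 1-vCPU VMs.
no_overcommit = density_limit(32, 16, 2, 1, hypervisor_mem_gb=2, cpu_overcommit=2.0)
with_overcommit = density_limit(32, 16, 2, 1, hypervisor_mem_gb=2,
                                mem_overcommit=1.5, cpu_overcommit=2.0)
print(no_overcommit, with_overcommit)  # 15 22
```

In this example memory is the binding constraint in both cases, so letting the hypervisor overcommit memory raises the ceiling while the CPU bound (32 VMs) is never reached.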

Discussion

In general, the investigation gives an in-depth and enlightening view of the hypervisors and the density they can sustain close to their limits. Although the Taneja Group did not aim the study at data centers, it can be used to review the virtualization techniques available: the data shows an IT team what each hypervisor can handle, helping them choose between hypervisor products. As an improvement, the Taneja Group could have examined more capabilities of the hypervisors, such as processing power, to provide an all-round, detailed technical review, like the investigation in “Container-based Operating System Virtualization: A Scalable, High-performance Alternative to Hypervisors” (Stephen Soltesz, 2007) and “A component-Based Performance Comparison of Four Hypervisors” (Jinho Hwang, 2013), where a very similar review focuses on component-based performance rather than solely on workload. Overall, the paper makes a convincing argument, with a good level of research behind logical and reasonable results.

Container-based Operating System Virtualization: A Scalable, High-performance Alternative to Hypervisors – Stephen Soltesz, Herbert Potzl, Mark E. Fiuczynski, Andy Bavier, Larry Peterson – 2007.

This investigation, conducted by researchers from several well-known universities, presents an alternative to the classic hypervisor. The paper discusses and reviews a number of container-based systems, with the methodology focusing on the design and implementation of Linux-VServer, a container-based system chosen because it is open source and because all contributors to the paper had experience using it. The paper then compares the architecture of VServer with the then-current version of Xen, the open-source hypervisor-based system.

The investigation is motivated by the increasing use of virtualization by businesses, developers, hosting companies and data centers as a more cost-effective and efficient use of servers. The paper argues that a container-based operating system (COS) can trade isolation for efficiency. Efficiency is measured as the overall performance and scalability of the VMs, and isolation as fault isolation, resource isolation and security isolation; both COS and hypervisor technologies provide each property to some degree. Reviewing this first comparison, the paper states, “There is no VM technology that achieves the ideal of maximizing both efficiency and isolation” (Stephen Soltesz, 2007). It affirms, however, that the right system depends on the situation; for example, a COS should be used when efficiency matters more than isolation.

Soltesz et al. then discuss Linux-VServer, a COS system, testing its resource isolation, security isolation and file system unification. The results show that VServer can impose limits on each VM's consumption of every resource, achieved with token bucket filters for both CPU and I/O scheduling. VServer also uses kernel modifications to enforce security isolation, and a shared file system for files common to every VM, reducing disk space and providing copy-on-write semantics.
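A token bucket filter grants a VM a steady allowance of resource time while still permitting short bursts. The sketch below is a simplified model of the technique, not VServer's kernel scheduler code: the refill rate, bucket size and the VM's per-tick demand are hypothetical values chosen to show throttling.

```python
class TokenBucket:
    """Token bucket filter: tokens accrue at `rate` per tick up to `capacity`,
    and a VM may use one unit of the resource per token it spends."""

    def __init__(self, rate, capacity):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start full, so an initial burst is allowed

    def tick(self):
        """Refill the bucket, never exceeding its capacity."""
        self.tokens = min(self.capacity, self.tokens + self.rate)

    def try_consume(self, amount=1):
        """Spend tokens if enough are available; otherwise the VM must wait."""
        if self.tokens >= amount:
            self.tokens -= amount
            return True
        return False


# Hypothetical VM that wants 2 units of CPU every tick, limited to 1 unit per tick
# on average with a burst allowance of 4.
bucket = TokenBucket(rate=1, capacity=4)
granted = []
for _ in range(8):
    granted.append(bucket.try_consume(2))
    bucket.tick()
print(granted)  # [True, True, True, False, True, False, True, False]
```

Once the initial burst is spent, the greedy VM is held to every other tick, so its long-run consumption converges on the configured rate and cannot starve its neighbours.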

The experiments exploring these statements were performed on a standardized machine: the first part tests the performance of the hypervisor or COS, the second tests isolation. The results show that Xen has better support for multiple kernels, network stacks and VM migration, whereas VServer has a smaller kernel and better I/O performance, an area in which Xen lags.

Discussion

Soltesz et al. conduct thorough, intensive research into an alternative to hypervisors, producing a series of clear results for a Linux-based VM system. A more detailed performance analysis would further support the results obtained. Even so, the paper offers substantial support for each of its claims, making a convincing argument, and provides a wide-ranging, thorough review of the isolation and efficiency of both COS systems and hypervisors. COS systems are reviewed again in “Hypervisors vs. Lightweight virtualization: a Performance Comparison” (Roberto Morabito, 2015), which provides additional theory and evidence supporting these claims, although that paper focuses more on performance than Soltesz et al. do.

Virtual Machine Monitors: Current Technology and Future Trends – Mendel Rosenblum and Tal Garfinkel – 2005.

In 2005, Rosenblum of VMware and Garfinkel of Stanford University published “Virtual Machine Monitors: Current Technology and Future Trends”. Mainframe computing has seen many downfalls in business environments; Rosenblum et al.'s paper reviews this and identifies novel solutions using virtualization. The investigation first emphasizes virtualization implementation issues, giving examples of challenges and the techniques discovered to overcome them. Second, the paper leads on to an “examination of current products and recent research providing interesting insights into the future of VMMs” (Mendel Rosenblum, 2005, p. 45).

The review then sets out the challenges faced in virtualizing the CPU, memory and I/O, and how they can be overcome using particular tools or methods, such as VMware's VMM. Rosenblum et al. also describe an investigation they have undertaken, offering a view of future technologies and VMM demands.

This investigation, like the previous paper, “Container-based Operating System Virtualization: A Scalable, High-performance Alternative to Hypervisors” (Stephen Soltesz, 2007), focuses on the security and isolation of VMs and how VMM software can provide them. It does not go into as much detail as the COS paper, but gives a clear indication of where security and isolation can fall short and of techniques to overcome these weaknesses, albeit without technical detail. The two papers are in clear and understandable agreement, although at different levels of technical depth. Rosenblum et al. go on to review the use of VMMs through an overall evaluation of the challenges VMMs face and the techniques required to overcome them.

Discussion

Although the investigation thoroughly reviews a number of challenges and how they can be solved or worked around, the researchers do not discuss where the information came from or what experiments underpin their results and remarks, unlike the previous papers. A recommendation would be to investigate these areas further and provide a more detailed technical overview. The review of VMMs and their uses provides a clear and useful base for further research; by combining several papers, a more advanced review of VMMs with clear testing in several areas could be produced. However, given the paper's age, the VMMs it discusses are no longer current. The paper is of considerable interest, but although its theories may be correct, it offers little support for its claims, an area that could be improved, for example by re-running these examinations on the latest software versions.

3.2 Performance and Management within a Data Centre Environment

DCSim: A Data Centre Simulation Tool for Evaluating Dynamic Virtualized Resource Management – Michael Tighe, Gaston Keller, Michael Bauer and Hanan Lutfiyya – 2012.

This investigation reviews the needs and unique challenges of managing a data center environment. Tighe et al. first discuss the problem they face: standard algorithms used to manage virtualization cannot cope with the scale and complexity of data center infrastructure. The team therefore aims to produce a simulation tool, the data centre simulator (DCSim), for testing and experimenting on a data center. Discussing the architecture, they state that the simulated data center has all the features of an enterprise-level data center, albeit on a smaller scale.

Tighe et al. go on to experiment with the proposed DCSim system, reviewing VM management policies, scalability and machine capabilities. The scalability results show that DCSim performed well, with an increase in VMs not disproportionately affecting the simulator. For VM allocation and reallocation, they compare three algorithms, static peak, static average and dynamic, and find that the dynamic algorithm produces the best results, with an acceptable level of SLA violations.
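The trade-off between the two static policies can be shown with a small sketch. It is not DCSim code: the demand traces, host capacity and admission order are hypothetical. Each VM reserves either its peak or its average demand, VMs are admitted to a single host while their reservations fit, and an SLA violation is counted for every interval in which actual demand exceeds the host's capacity.

```python
# Hypothetical CPU demand (in cores) for four VMs over four intervals.
TRACES = {
    "web":   [2, 6, 2, 2],
    "db":    [3, 3, 5, 3],
    "batch": [1, 1, 1, 5],
    "cache": [2, 2, 2, 2],
}


def reservation(trace, policy):
    """Capacity reserved for a VM: its peak demand or its average demand."""
    return max(trace) if policy == "peak" else sum(trace) / len(trace)


def admit(traces, capacity, policy):
    """Place VMs on one host, in order, while their reservations still fit."""
    admitted, reserved = [], 0.0
    for name, trace in traces.items():
        need = reservation(trace, policy)
        if reserved + need <= capacity:
            admitted.append(name)
            reserved += need
    return admitted


def sla_violations(traces, admitted, capacity):
    """Count intervals in which the admitted VMs' actual demand exceeds the host."""
    intervals = len(next(iter(traces.values())))
    return sum(
        1 for t in range(intervals)
        if sum(traces[name][t] for name in admitted) > capacity
    )


for policy in ("peak", "average"):
    vms = admit(TRACES, capacity=9, policy=policy)
    print(policy, vms, sla_violations(TRACES, vms, capacity=9))
# peak ['web', 'cache'] 0
# average ['web', 'db', 'batch'] 2
```

Static peak wastes capacity but never violates; static average packs more VMs but overloads the host whenever demands spike together. A dynamic policy, which DCSim found best, would instead re-check placements every interval and migrate VMs off overloaded hosts; that step is omitted to keep the sketch short.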

Discussion

Overall, Tighe et al. produce a clear, understandable framework and a strong idea for testing automation and migrations without altering a live environment. The group closes the paper with a broad range of future work and development plans. However, little performance testing has been conducted on how the VMs cope within this environment and under the algorithms; adding such testing, as seen in other papers, would improve the paper.

Recommendations for Virtualization Technologies in High Performance Computing – Nathan Regola and Jean-Christophe Ducom – 2010.

This investigation by Regola et al. focuses on evaluating open-source hypervisors for high performance computing (HPC) in a data center environment. The authors review a number of hypervisors to support the consolidation of HPC servers. They first discuss why this is not normally feasible, given the workload and power these physical machines require, although the need to virtualize such machines is increasing because of the benefits virtualization can bring.

Regola et al. first review KVM and OpenVZ in a virtualized HPC environment. After discussing how KVM and OpenVZ have developed to fit HPC, they evaluate virtualization in HPC with specific attention to disk and network I/O, before reviewing network latency. They report that KVM displays high read and random-read performance but a lower overall performance factor than OpenVZ. Discussing the overall results, they conclude that “OpenVZ was the best choice for HPC due to the lower overhead” (Nathan Regola, 2010). The paper ends by stating that OS-level virtualization such as OpenVZ is the only current solution that supports the CPU and I/O performance needed in HPC.

Discussion

Overall, the paper clearly and thoroughly describes the results of the investigation, setting out the positives and negatives of each hypervisor. Regola et al. provide a number of related and future work items to expand on. However, as it reviews only open-source programs, the project could have acknowledged some closed-source solutions and presented findings on how they behave in an HPC environment, giving a clear comparison of all solutions. Further, “Container-based Operating System Virtualization: A Scalable, High-performance Alternative to Hypervisors” (Stephen Soltesz, 2007) provides clear background research into why OpenVZ was found to be the best solution. Together with that paper, this evidence supports the claim Regola et al. set out to prove.

VManage: Loosely Coupled Platform and Virtualization Management in Data Centers – Hewlett Packard Laboratories – Sanjay Kumar, Vanish Talwar, Vibhore Kumar, Parthasarathy Ranganathan, and Karsten Schwan – 2009.

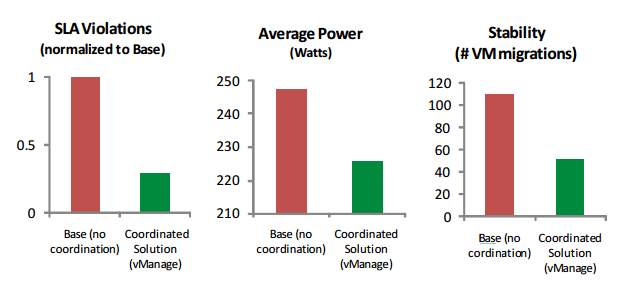

In mid-2009, a group of researchers produced the paper “VManage: Loosely Coupled Platform and Virtualization Management in Data Centers”. The project aims to provide a solution for both platform management and virtualization management in order to improve the efficiency of data centers. The researchers propose VManage, a method in which platform and virtualization management are performed in the same system, providing coordination within data centers. The results show both physical and virtual improvements in overall efficiency, performance, stability, isolation and runtime.

Kumar et al. set out to increase the efficiency of IT infrastructure and reduce the rising costs of data center management. They detail the problems encountered in both physical and virtual management, including power and thermal issues, and VM resources, isolation, security and stability. The research team discusses its aim of creating a “loosely coupled platform and virtualization management and facilitate coordination between these in data centers” (Sanjay Kumar, 2009). The system uses “registry and proxy servers” to discover the individual sensors and actuators that register and report performance; alongside this, VManage uses a stabilizer to increase stability and reduce redundancy in the coordination interface.
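VManage's stabilizer is described here only at a high level. One common way to damp oscillating migrations, shown below as a hypothetical sketch rather than VManage's actual mechanism, is hysteresis: act only after a threshold has been breached for several consecutive samples, then hold off for a cooldown period. The class name, threshold, window and cooldown values are all illustrative assumptions.

```python
from collections import deque


class MigrationStabilizer:
    """Hypothetical stabilizer: approve a migration only after `window`
    consecutive samples above `threshold`, then ignore `cooldown` samples."""

    def __init__(self, threshold, window, cooldown):
        self.threshold = threshold
        self.window = window
        self.cooldown = cooldown
        self.recent = deque(maxlen=window)  # sliding window of recent samples
        self.hold = 0                       # samples left in the cooldown

    def observe(self, utilization):
        """Feed one utilization sample; return True if a migration is approved."""
        if self.hold > 0:
            self.hold -= 1
            self.recent.clear()
            return False
        self.recent.append(utilization)
        if len(self.recent) == self.window and all(
            u > self.threshold for u in self.recent
        ):
            self.hold = self.cooldown
            self.recent.clear()
            return True
        return False


samples = [0.9, 0.95, 0.7, 0.9, 0.92, 0.93, 0.95, 0.96, 0.97, 0.98]
stabilizer = MigrationStabilizer(threshold=0.85, window=3, cooldown=2)
decisions = [stabilizer.observe(u) for u in samples]
print([i for i, approved in enumerate(decisions) if approved])  # [5]
```

A naive rule that migrates whenever utilization exceeds 85% would fire on nine of these ten samples; the stabilized rule fires once, which is the kind of reduction in VM migrations the stability metric rewards.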

Like similar papers above, the experiment is undertaken in a Xen environment, in this instance using 28 VMs on a number of Dell PowerEdge 1950 servers, running a mix of enterprise systems and workloads to emulate a working enterprise's use of the data center. The researchers detail the testing criteria: SLA violations, average power, and stability (the number of VM migrations). Having tested the prototype, they report that, compared with traditional management methods, “VManage can achieve additional power savings (10% lower power) with significantly improved service-level guarantees (71% less violations) and stability (54% fewer VM migrations), at low overhead”, as shown in the VManage results figure.

Figure 8 – VManage results for SLA violations, Average Power and Stability of VM migrations, in comparison to a single management system

Discussion

The investigation and prototype provide a good synopsis of, and a practical approach to, data center management for an IT department, with clear and understandable results. Kumar et al. propose further development so that plugins can easily add more management controllers. However, unlike the papers above, the team does not clearly address the management of individual VMs or the importance of their security and isolation needs, which depend on the programs they host. The management system could be developed further to study the migrations performed and their effect on both the VMs and the hardware, as in the experiment in “Autonomic Virtual machine placement in the Data center” (Chris Hyser, 2008), to ensure migrations are efficient for both the VMs and the physical resources. Kumar et al. leave a clear benchmark for the future of this prototype; however, a comparison with existing hardware-software layer systems such as VMware VirtualCenter would have provided a more rounded overview.

Dynamic placement of Virtual machines for Managing SLA Violations – IBM – Norman Bobroff, Andrzej Kochut and Kirk Beaty – 2007.

Written in 2007 by researchers at IBM, this paper investigates improving server utilization in data center management, introducing a management algorithm for “dynamic resource allocation in virtualized server environments” (Norman Bobroff, 2007). The IBM team aims to minimize data center costs by reducing overcapacity, improving performance and decreasing SLA violations. The algorithm forecasts demand by reviewing the historical resource usage of each existing VM, such as CPU and memory, and uses this forecast to build a model that predicts the future demand of multiple VMs placed together on a single physical machine.

The experiment was conducted on three IBM blade servers, each running VMware, with each VM given a heterogeneous workload to vary CPU utilization. The management objective is to minimize the number of active physical machines hosting VMs over time, subject to resource constraints. The results show that the algorithm exceeded its targets for meeting SLA objectives, reducing the rate of SLA violations and achieving an average 44% reduction in the number of physical machines.
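The combination of forecasting and consolidation described above can be illustrated with a simplified sketch. It is not Bobroff et al.'s algorithm: their forecast is a time-series model, whereas here a hypothetical nearest-rank percentile of each VM's history stands in for it, and placement uses the classic first-fit decreasing bin-packing heuristic. The histories and host capacity are made-up values.

```python
import math

# Hypothetical CPU history (% of one host) for six VMs, each currently on its own host.
HISTORY = {
    "vm1": [20, 25, 30, 90, 22],
    "vm2": [40, 45, 50, 42, 48],
    "vm3": [10, 15, 12, 14, 11],
    "vm4": [35, 30, 38, 33, 36],
    "vm5": [25, 20, 22, 28, 24],
    "vm6": [60, 55, 58, 62, 57],
}
HOST_CAPACITY = 100


def forecast(history, pct=80):
    """Stand-in forecast: the pct-th percentile (nearest-rank) of past samples."""
    ordered = sorted(history)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based nearest rank
    return ordered[rank - 1]


def first_fit_decreasing(demands, capacity):
    """Pack VM demands onto hosts; return a list of hosts, each a list of VM names."""
    hosts = []  # each entry: [remaining_capacity, [vm names]]
    for name, demand in sorted(demands.items(), key=lambda kv: kv[1], reverse=True):
        for host in hosts:
            if host[0] >= demand:  # first host with room wins
                host[0] -= demand
                host[1].append(name)
                break
        else:  # no existing host fits, so power on another one
            hosts.append([capacity - demand, [name]])
    return [names for _, names in hosts]


forecasts = {vm: forecast(samples) for vm, samples in HISTORY.items()}
placement = first_fit_decreasing(forecasts, HOST_CAPACITY)
reduction = 100 * (len(HISTORY) - len(placement)) / len(HISTORY)
print(placement)  # [['vm6', 'vm4'], ['vm2', 'vm1', 'vm3'], ['vm5']]
print(reduction)  # 50.0
```

The percentile is where the SLA trade-off lives: vm1's forecast of 30 ignores its one-off spike to 90, so if that spike recurs, its shared host will be overloaded. A higher percentile would cut violations at the cost of more active machines.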

Discussion

Whilst the experiment produced clear and understandable results, there is only minimal discussion of resources and their effect on both the automatic management and the VMs, and what discussion there is focuses on the CPU. Extending the analysis to other resources would strengthen the claims and give a fuller picture of how the algorithm behaves. The paper closely resembles “Application Performance Management in Virtualized Server Environments” (Gunjan Khanna, 2006): both use very similar test environments and report similar supporting findings, such as improvements to the VM environments and how the VMs respond to the algorithm in order to work within the pre-set SLAs of each test environment.

Application Performance Management in Virtualized Server Environments – Gunjan Khanna, Kirk Beaty, Gautam Kar, Andrzej Kochut – 2006.

Khanna et al. investigate server virtualization as a way to solve “Low server utilization and high system management costs” (Gunjan Khanna, 2006, p. 1) in an enterprise data center. First, the investigation reviews current server consolidation algorithms and describes the mapping process for the initial setup, which allows the team to demonstrate the problem they are attempting to solve. This leads the team to describe the formulation they use to solve the utilization problem, with machine cost and capacity as parameters.

Khanna et al. then run an in-depth experiment on a test bed that replicates an enterprise-scale data center, using IBM BladeCenter hardware and VMware ESX Server. To create a fair test environment, they set up a number of machines of different sizes running different operating systems. The team monitors resources and machines during the experiment, along with the resources required to migrate each machine. Describing the results in terms of migration cost against the number of VMs, for their algorithm versus the initial placements, they note that the algorithm performs well at first, but as the number of VMs increases the cost rises, because the tightly packed allocation of machines leads to more migrations. Concluding the paper, Khanna et al. state that their algorithm can detect high resource usage, such as of CPU or memory, relative to SLA thresholds, so that machines can be migrated between physical hosts to minimize costs and maximize utilization.
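The migration decision described above can be illustrated with a hypothetical heuristic; it is not Khanna et al.'s published formulation. Here memory footprint stands in for migration cost, CPU percentage for load, and a single threshold plays the role of the SLA limit. The heuristic picks the cheapest VM whose removal relieves an overloaded host and moves it to the fullest host that can still take it (best fit).

```python
from dataclasses import dataclass


@dataclass
class VM:
    name: str
    cpu: int     # CPU demand as a percentage of one host
    mem_gb: int  # memory footprint, used here as a proxy for migration cost


def pick_migration(hosts, threshold):
    """Return (vm_name, source, destination) for one migration, or None."""
    for source, vms in hosts.items():
        load = sum(vm.cpu for vm in vms)
        if load <= threshold:
            continue  # host is within its limit
        # VMs whose removal alone brings the source back under the threshold.
        candidates = [vm for vm in vms if load - vm.cpu <= threshold]
        if not candidates:
            continue
        vm = min(candidates, key=lambda v: v.mem_gb)  # cheapest to move
        # Best fit: the destination left with the least spare capacity.
        fits = [
            (threshold - sum(v.cpu for v in others), dest)
            for dest, others in hosts.items()
            if dest != source and sum(v.cpu for v in others) + vm.cpu <= threshold
        ]
        if fits:
            _, dest = min(fits)
            return vm.name, source, dest
    return None


hosts = {
    "host-a": [VM("web", 50, 4), VM("db", 30, 16), VM("cache", 15, 2)],
    "host-b": [VM("batch", 40, 8)],
    "host-c": [VM("mail", 65, 6)],
}
print(pick_migration(hosts, threshold=80))  # ('cache', 'host-a', 'host-c')
```

Best fit sends the cache VM to host-c, leaving it at exactly 80%. That keeps hosts tightly packed, but it also leaves host-c no headroom, which illustrates why the paper observes more migrations as the number of VMs grows.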

Discussion

Overall, Khanna et al. provide a detailed view of their algorithm, including both its successes and its shortcomings. They describe the proposed algorithm clearly, with good research behind each point. The team identifies some weak areas and notes them as future work. Like “VManage: Loosely Coupled Platform and Virtualization Management in Data Centers” (Sanjay Kumar, 2009), the paper uses pre-determined SLA thresholds within the algorithm to decide when a migration should occur, a criterion that needs to be defined in any data center migration task. However, like previous papers, it could have examined how VMs respond to constant migrations and how these affect both resources and machine workloads. Relatedly, the authors note that in future they wish to study application workloads, their resource patterns, and how these relate to the algorithm.

3.3 Autonomic Management of Virtual Machines

Effective Resource and Workload management in Data centers – Lei Lu and Evgenia Smirni – 2014.

This research by Lu and Smirni addresses several areas of data center management, focusing on “How virtualization technologies can be utilized to develop new tools for maintaining high resource utilization, for achieving higher application performance and for reducing the cost of data center management” (Lei Lu, 2014). The outcome is four prototypes designed to increase automation, each addressing one of the problems identified. The four approaches are described below:

- First, Lu and Smirni develop an autonomic admission control policy called “AWAIT”, which places requests in an active queue or a blocking queue when multiple VMs overwhelm the hardware during workload surges. After testing, Lu and Smirni report a positive outcome in handling conflicting requests.

- Second, they develop a technique to model the dependency relationships between VM resource demands, expecting this to reduce the inaccuracy of standard tools for measuring resource utilization in VMs. Using a directed factor graph (DFG), the researchers report results across a diverse suite that show “improved accuracy of resource utilization estimates using DFG” (Lei Lu, 2014).

- Third, they produce AppRM, a prototype autonomic management tool that adjusts resource settings per VM. It is designed to overcome the VM sprawl seen in large-scale data centers and the difficulty of knowing which application needs which resources. AppRM works to meet pre-set Service Level Objectives (SLOs) and adapts to dynamic workloads. Tested in a VMware environment with both over- and under-provisioned VMs, it produced clear adjustments in all scenarios.

- Finally, they produce a prototype automated placement program to overcome server under-utilization. Lu and Smirni create a load-balancing algorithm that assigns VMs across a data center subject to minimum and maximum server loads, then use an analytical network model to predict VM performance under the chosen placement. On average, this reduces response time compared with both the worst configuration and a random configuration.
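The active-queue and blocking-queue idea behind AWAIT can be sketched as follows. This is a hypothetical simplification: it uses a fixed limit on concurrently served requests, whereas AWAIT adapts autonomically during surges, and the class and method names are illustrative only.

```python
from collections import deque


class AdmissionController:
    """Hypothetical admission control with an active set and a blocking queue.

    At most `max_active` requests are served at once; later arrivals wait in
    FIFO order and are admitted as active requests complete.
    """

    def __init__(self, max_active):
        self.max_active = max_active
        self.active = set()
        self.blocked = deque()

    def arrive(self, request_id):
        """Admit a request immediately if there is room, otherwise block it."""
        if len(self.active) < self.max_active:
            self.active.add(request_id)
            return "active"
        self.blocked.append(request_id)
        return "blocked"

    def complete(self, request_id):
        """Finish a request and promote the oldest blocked one, if any."""
        self.active.remove(request_id)
        if self.blocked:
            promoted = self.blocked.popleft()
            self.active.add(promoted)
            return promoted
        return None


ctl = AdmissionController(max_active=2)
states = [ctl.arrive(r) for r in ("r1", "r2", "r3", "r4")]
print(states)              # ['active', 'active', 'blocked', 'blocked']
print(ctl.complete("r1"))  # r3
```

Blocking excess requests, rather than admitting them all, keeps the requests already running from slowing down during a surge; the cost is extra waiting time for the blocked ones.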

Discussion

Overall, the paper gives a wide overview of the systems proposed to increase automated management of resources and server utilization, and offers solutions to a wide range of related problems; however, it provides little backing for the facts stated. Lu and Smirni lack physical evidence for their claims; more detailed results for each item would have set a high benchmark for future work. Lu and Smirni could also focus on meeting SLAs, as other papers in this review do, rather than SLOs, since an SLA represents a full agreement and understanding between all parties, whereas an SLO is only a performance objective. However, their research on the fourth target does support the claims in “Autonomic Virtual machine placement in the Data center” (Chris Hyser, 2008), as both papers focus on load balancing of resources and autonomic migration, resulting in balanced resource nodes and a better environment for VMs to perform in.

Autonomic Virtual machine placement in the Data center – Hewlett Packard Laboratories – Chris Hyser, Bret McKee, Rob Gardner, and Brian J. Watson – 2008.

This paper, written by HP researchers in early 2008, investigates virtual machine placement and its automatic management according to a set of policies. It aims to give data centre operators and owners a high-level overview in order to “improve quality of a service for data center owners or to increase profitability of providing service for data center owners” (Chris Hyser, 2008, p. 2). The investigation addresses several problems in virtual machine mapping, focusing on resource management, space management, and business and security constraints. The HP researchers claim that an autonomic controller can ensure VMs are managed in accordance with the data center's pre-set policies, migrating VMs between physical hosts to improve efficiency and stability and to minimize the need for human control.

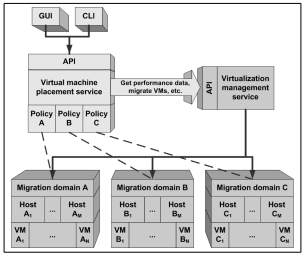

Hyser et al.'s methodology is to create a prototype controller to overcome VM mapping and rearrangement problems. They then discuss the factors and policies to take into account when generating a new mapping. The authors present an architecture, shown in Figure 9, in which the controller communicates with the VMM software to gather the data needed to execute live migrations. The experiment uses the HP virtual machine manager and a data center of four HP servers, each with 8 GB of memory and two 3.60 GHz Intel processors; three machines are used for the migration experiment and the fourth hosts the prototype software.

Figure 9 – Prototype System Architecture (Autonomic Virtual Machine Placement in the Data Centre, HP, 2008)

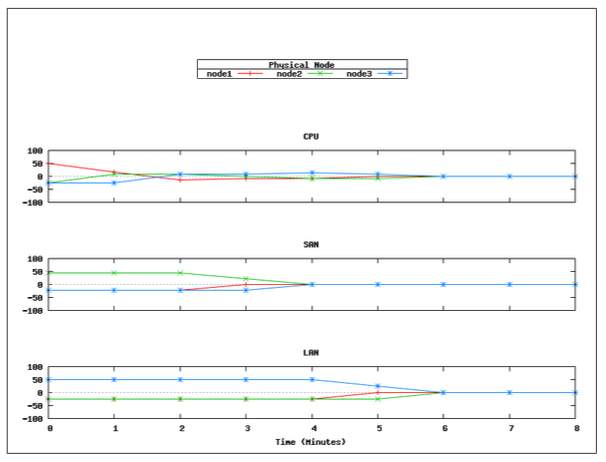

After the experiment, the researchers explain their results and recommendations for the prototype. The graphs show CPU, LAN and SAN utilization on three nodes, which start the experiment unbalanced. The prototype works to even out resource use across the nodes. When the imbalance exceeds the pre-set policies, a live migration is performed, producing a clear balance of resources with one VM on each physical host.

Figure 10 – Balance Policy Load Resource Summaries (Autonomic Virtual Machine Placement in the Data Centre, HP, 2008)
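A balance policy of the kind shown in the results can be illustrated with a hypothetical sketch; it is not HP's controller. It tracks a single resource (the paper balances CPU, LAN and SAN), measures imbalance as the gap between the busiest and quietest node, and greedily moves one VM at a time from the busiest to the quietest node until the gap is within a tolerance. The node names, loads and tolerance are illustrative.

```python
def loads(nodes):
    """Total demand per node."""
    return {node: sum(vms.values()) for node, vms in nodes.items()}


def imbalance(nodes):
    """Gap between the busiest and the quietest node."""
    current = loads(nodes)
    return max(current.values()) - min(current.values())


def rebalance(nodes, tolerance):
    """Greedily migrate VMs until the imbalance is within tolerance.

    Each step moves the VM that leaves the busiest and quietest nodes closest
    in load. Mutates `nodes` and returns the (vm, source, destination) moves.
    """
    migrations = []
    while imbalance(nodes) > tolerance:
        current = loads(nodes)
        src = max(current, key=current.get)
        dst = min(current, key=current.get)
        gap = current[src] - current[dst]

        def gap_after(vm):
            demand = nodes[src][vm]
            return abs((current[src] - demand) - (current[dst] + demand))

        best = min(nodes[src], key=gap_after)
        if gap_after(best) >= gap:
            break  # no single migration narrows the gap; stop rather than thrash
        nodes[dst][best] = nodes[src].pop(best)
        migrations.append((best, src, dst))
    return migrations


# All three VMs start on node-1, mirroring the unbalanced start of the experiment.
nodes = {
    "node-1": {"vm-a": 30, "vm-b": 25, "vm-c": 20},
    "node-2": {},
    "node-3": {},
}
print(rebalance(nodes, tolerance=20))
# [('vm-a', 'node-1', 'node-2'), ('vm-b', 'node-1', 'node-3')]
```

Two migrations leave one VM on each node, matching the end state described above. Because every accepted move strictly narrows the gap between its two nodes, the loop cannot oscillate indefinitely.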

Discussion

Hyser et al. present the results clearly and identify clear areas for additional work, outlining in the paper where they propose to research and develop further. They produce a clear proposal for the prototype, with well-displayed and well-formulated results. Whilst the prototype testing is a good start, Hyser et al. could run and test the software in a number of different scopes, such as with different types of VMM software or with more servers and VMs, similar to the tests conducted in “Performance Evaluation and Comparison of the Top Market Virtualization Hypervisors” (Abdellatief Elsayed, 2013). Overall, the paper sets an intensive research benchmark for automation software and leaves a clear path for future work.

Server Virtualization in autonomic management of heterogeneous workloads – Malgorzata Steiner, Ian Whaley, David Carrera, Ilona Gaweda and David Chess – 2007.

This paper investigates, through experiment, the automation of server virtualization for large and irregular workloads. It describes the challenges of automatically managing heterogeneous workloads in an autonomic data center environment. Steiner et al. propose a solution and demonstrate its effectiveness in both an experiment and a simulation. They identify three main challenges in managing a heterogeneous workload successfully, efficiently and proactively:

- The first is the difference in performance characteristics that comes from a mixed workload.
- The second is the different time scales on which each workload must be managed, which makes it difficult to know the resource allocation and performance each one needs.
- The third is the placement of several applications on each physical machine, ensuring that resources are allocated and used efficiently.

Steiner et al. then present solutions aimed at these three challenges, in order to improve the management of heterogeneous workloads under server virtualization.

A recurring trend in the literature discussed is the use of Xen as the base for virtualization experiments, and Steiner et al. follow it too. They use Xen with WebSphere Extended Deployment for both an experimental test and a simulation. They state that their approach introduces several features:

- it consolidates workloads onto one physical machine;
- it allows high-level, performance-driven resource allocation;
- it enables more effective scheduling.
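Steiner et al.'s controller relies on its own performance models. The following is a deliberately simplified, hypothetical illustration of the third challenge: sharing one physical machine between a transactional (web) workload and batch jobs. It assumes that CPU is the only resource, that transactional work always takes priority, and that batch jobs which do not fit are suspended. The `allocate_host_cpu` function and the job names are invented for illustration.

```python
from __future__ import annotations


def allocate_host_cpu(capacity: float, web_demand: float, batch_jobs: dict[str, float]):
    """Split one host's CPU between transactional and batch work.

    Transactional (web) demand is served first. Batch jobs are then packed,
    smallest first, into the remaining capacity. Jobs that do not fit are
    queued, i.e. suspended until capacity frees up.
    """
    web_share = min(web_demand, capacity)
    remaining = capacity - web_share
    running, queued = [], []
    for job, need in sorted(batch_jobs.items(), key=lambda kv: kv[1]):
        if need <= remaining:
            running.append(job)
            remaining -= need
        else:
            queued.append(job)
    return web_share, running, queued


web, running, queued = allocate_host_cpu(8.0, 5.0, {"jobA": 2.0, "jobB": 1.5, "jobC": 3.0})
print(web, running, queued)
```

Packing the smallest jobs first maximizes the number of batch jobs that run alongside the web workload. A fuller scheduler would also weigh job deadlines, which is the time-scale problem named as the second challenge.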

Discussion

Steiner et al. leave the literature with an effective plan for continued study. However, further experiments should be run to extend the results. These could use live migration rather than the move-and-restore approach the authors adopted due to a lack of resources. The authors also discuss in detail how this would affect the management of VMs within the Xen environment, and how easy it is to create an image from which more VMs can be built when needed. For the experiment they relied on Xen's standard resource configuration. A recommendation here would be to go further and create distinct VMs and use cases, to broaden the evidence they can gather from their prototype.

3.4 Conclusion

Virtualization has become a growing part of the computing world in recent years, and a large body of literature covers the wide variety of aspects it involves. The literature reviewed focuses on several areas, including virtualization management, performance management and autonomic management, alongside in-depth reviews of currently available systems. The methodologies and tools used to investigate management styles, technologies and performance vary widely from paper to paper. Many of the papers reviewed use a comparative method. They test VMM software in different scenarios, or against other systems such as container-based systems, to show which software performs better in a particular scenario.

Given the wide range of literature reviewed, some earlier work may describe systems that are now outdated or have been superseded by newer systems with different capabilities, which leaves an area open for further review. Each paper's methodology or theory has been reviewed and then discussed, identifying its relation to other work, the evidence supporting it and its weaknesses, in order to summarize and critique it fully.

Chapter 4 – Centralized Virtual Machine Managers Review and Comparison.

Through this chapter, the project identifies the current enterprise-level centralized VMM software used to support and manage data center environments. It reviews what each product is technically capable of and compares the products against one another, to gain an overall understanding of what is currently available. The chapter focuses on the most commonly used enterprise centralized VMM products: Microsoft System Center Virtual Machine Manager, VMware vCenter and Citrix XenCenter.

4.1 System Center Virtual Machine Management (SCVMM)

System Center Virtual Machine Manager is the virtual machine management system in the Microsoft System Center suite. Microsoft System Center consists of several systems management products that provide management, monitoring, automation and reporting tools for corporate and enterprise-level systems. SCVMM can work independently or alongside other System Center products, such as Operations Manager or Configuration Manager (Rouse, 2012). SCVMM is used to centrally support a virtual environment consisting of several hosts and machines. It gives data center administrators an overall management view, which makes it easier to plan, deploy, manage, report on and optimize VMs.

4.1.1 Hyper-V

Hyper-V is a hypervisor-based product from Microsoft with a type-one design, running directly on the server hardware once it has been enabled. Launched in 2008, Hyper-V has continued to develop with each Windows Server release and currently has six versions, from Windows Server 2008 to Windows Server 2016. From Windows Server 2012 onwards, Hyper-V is a built-in, integrated service. It is a Windows feature that an administrator chooses to enable. For non-server use, Hyper-V is also available on Windows 8 through to Windows 10 machines, where it likewise needs to be enabled before use.

Microsoft also offers Hyper-V Server, a product designed solely to support virtualization. It reduces the server to the bare minimum by removing the GUI and all services unrelated to virtualization. Because of this minimal footprint, less maintenance is needed and fewer patches are required (Zhelezko, 2014). When comparing Windows Server 2016 with Hyper-V enabled against Hyper-V Server, there is no clear answer as to which to use within a data center. The choice depends on the enterprise, the licensing agreement it has, and how many guest OSs it needs to install on VMs (Posey, 2014).

Hyper-V is the preferred hypervisor for a Windows Server configuration and for use with SCVMM. It provides a virtualization solution for data centers and enterprises, using Windows Server tools as its basis. Hyper-V includes a GUI management console, Hyper-V Manager, which offers a similar concept to SCVMM and, like SCVMM, can also be driven through PowerShell scripting. Hyper-V contains many of the same features as SCVMM, but without the multiple-server concept. Hyper-V Manager is designed to manage hosts individually, whereas SCVMM can support and manage multiple servers from a single centralized view, a clear benefit for data center administrators.

4.1.2 Versions

The most recent stable version of System Center is System Center 2016, released in the latter part of 2016. It has a supported upgrade path from System Center 2012, which is currently the most widely used version in enterprise environments. System Center 2016 is compatible with servers running Windows Server 2012 R2 or above, whereas System Center 2012 is compatible with servers running Windows Server 2008 R2 and above. Upgrading from SCVMM 2012 to SCVMM 2016 offers several new functions (CFreemanwa, 2016):

- increased security through guarded host deployment;
- networking improvements, such as deploying software-defined networking using templates;
- more operations on running VMs.

SCVMM 2012 received a number of updates during its life span between 2012 and 2016, whereas SCVMM 2016 has yet to receive a complete update from Microsoft.

There are also several versions of Hyper-V. Microsoft offers a free client version for machines running Windows 8 to Windows 10. It is pre-installed and becomes active once enabled. Hyper-V also has a number of server versions, which again reside on the server until enabled. These range from version 1.0 to 5.0, depending on the OS running on the server.

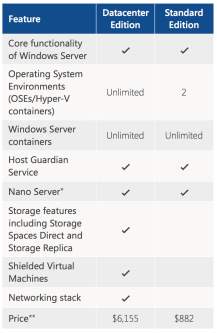

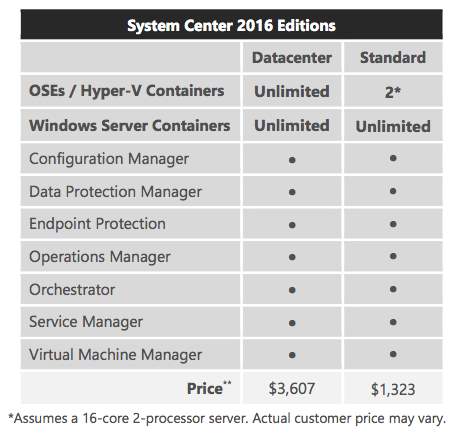

4.1.3 Potential Enterprise Costs

SCVMM is a paid product, and its price varies with the number of machines, the features required and other factors. Several options depend on the enterprise. For example, extra costs apply for infrastructure licensing, such as Windows Server 2012 Datacenter Edition or SQL Server. Table 1 shows the minimum cost of the System Center 2012 suite (Microsoft Volume Licensing, n.d.) for both the Standard and Datacenter editions.

- The Standard edition is a cheaper option suited to SMEs that, because of their size, have only lightly virtualized servers or a hybrid system.
- The Datacenter edition is designed for a full data center setup. Each license covers up to two physical processors and can manage an unlimited number of VMs.

Both editions include access to the full System Center suite, including SCVMM. Complete quotes for SCVMM are only available through the Microsoft Volume Licensing system, where administrators identify what they require from SCVMM before a confirmed price is given. The prices in Table 1 exclude any additional licenses.

| Edition | VMs per license | Includes | Cost (USD) |
|---|---|---|---|
| System Center 2012 R2 Standard Edition | 2 | App Controller, Configuration Manager, Data Protection Manager, Endpoint Protection, Operations Manager, Orchestrator, Service Manager, Virtual Machine Manager | $1,323 |
| System Center 2012 R2 Datacenter Edition | Unlimited | App Controller, Configuration Manager, Data Protection Manager, Endpoint Protection, Operations Manager, Orchestrator, Service Manager, Virtual Machine Manager | $3,607 |

Table 1 – Potential Costs of SCVMM 2012 (Microsoft Volume Licensing, n.d.)
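The prices in Table 1 can be turned into a per-host cost comparison between the two editions. The following is a minimal sketch under stated assumptions. Each license covers up to two physical processors, which is stated for the Datacenter edition and assumed here for Standard. Standard licenses can be stacked, with each one adding two VMs, which is also an assumption. The function names are hypothetical.

```python
import math

# Prices from Table 1 (System Center 2012 R2, USD, excluding additional licenses).
PRICES = {"standard": 1323, "datacenter": 3607}


def licenses_needed(processors: int, vms: int, edition: str) -> int:
    """Licenses for one host. Each license covers up to two physical processors
    (stated for Datacenter; assumed here for Standard too)."""
    by_processor = math.ceil(processors / 2)
    if edition == "datacenter":
        return by_processor                      # unlimited VMs per license
    # Assumption: Standard licenses can be stacked, each adding two VMs.
    return max(by_processor, math.ceil(vms / 2))


def host_cost(processors: int, vms: int, edition: str) -> int:
    return licenses_needed(processors, vms, edition) * PRICES[edition]


# Compare the editions on a two-processor host as the VM count grows.
for vms in range(1, 9):
    std, dc = host_cost(2, vms, "standard"), host_cost(2, vms, "datacenter")
    print(vms, std, dc, "datacenter" if dc < std else "standard")
```

Under these assumptions, a two-processor host with four VMs costs $2,646 on Standard. At five VMs the Standard cost rises to 3 × $1,323 = $3,969, which exceeds the $3,607 Datacenter price, so Datacenter becomes the cheaper option.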

Pricing for System Center 2016 follows a very similar licensing structure, again with costs for both the Standard and Datacenter editions. However, the 2016 release places more emphasis on Hyper-V, which has received a number of improvements and is now a built-in function of Windows Server (Microsoft Volume Licensing, 2016).

Hyper-V is a free hypervisor tool. However, when it is enabled as a feature, the host must run a licensed OS, whether Windows 8/10 or Windows Server 2012/2016, each of which has a cost. By offering several levels of licensing, Microsoft can serve a wide range of customers and give enterprises flexibility and control over the scale they need. These products are available through the Microsoft Volume Licensing system (Microsoft, 2016).

4.1.4 Features

SCVMM has a large number of features essential to the smooth management of a data center. Most of these functions were introduced in earlier versions, and SCVMM 2016 improves and expands some of the most popular and widely used ones. Some of the most useful features currently offered in both SCVMM 2012 and 2016 are reviewed below.

SCVMM is designed to centrally manage VMs within a data center, either on premises or hosted by a third-party provider. It is built to manage large numbers of virtual servers: Microsoft states that it supports up to 400 virtualization hosts and 8,000 virtual machines (Microsoft TechNet, 2013). One of the main reasons for SCVMM's popularity is its ability to manage multiple hosts that use different hypervisors. As well as Microsoft Hyper-V hosts, SCVMM can support VMware and Citrix Xen based servers. This broad support has increased its popularity because of the flexibility it offers in provisioning.
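A single manager for several hypervisors is commonly structured as a set of per-platform adapters behind one interface. The following is a minimal, hypothetical sketch of that pattern. It is not SCVMM's internal design. The class names are invented, and the VM lists are stand-ins for data a real adapter would fetch from each platform. The only figures taken from the text are the 400-host and 8,000-VM limits.

```python
from __future__ import annotations

from abc import ABC, abstractmethod

MAX_HOSTS = 400    # supported limits quoted from Microsoft TechNet (2013)
MAX_VMS = 8000


class HypervisorHost(ABC):
    """Hypothetical adapter: one subclass per hypervisor platform."""

    platform = "unknown"

    def __init__(self, name: str, vms: list[str]):
        self.name = name
        self._vms = vms  # stand-in for data a real adapter would fetch remotely

    @abstractmethod
    def list_vms(self) -> list[str]:
        """Return VM names on this host in a platform-neutral form."""


class HyperVHost(HypervisorHost):
    platform = "Hyper-V"

    def list_vms(self):
        return list(self._vms)  # a real adapter might query PowerShell or WMI


class VMwareHost(HypervisorHost):
    platform = "VMware ESXi"

    def list_vms(self):
        return list(self._vms)  # a real adapter might query the vSphere API


class XenHost(HypervisorHost):
    platform = "Citrix XenServer"

    def list_vms(self):
        return list(self._vms)  # a real adapter might use XenAPI


class CentralManager:
    def __init__(self):
        self.hosts: list[HypervisorHost] = []

    def add_host(self, host: HypervisorHost) -> None:
        if len(self.hosts) >= MAX_HOSTS:
            raise RuntimeError(f"cannot manage more than {MAX_HOSTS} hosts")
        self.hosts.append(host)

    def inventory(self) -> dict[str, list[str]]:
        """Single view of every VM, grouped by platform."""
        view: dict[str, list[str]] = {}
        for host in self.hosts:
            view.setdefault(host.platform, []).extend(host.list_vms())
        if sum(len(v) for v in view.values()) > MAX_VMS:
            raise RuntimeError(f"more than {MAX_VMS} VMs under management")
        return view


manager = CentralManager()
manager.add_host(HyperVHost("hv01", ["web1", "web2"]))
manager.add_host(VMwareHost("esx01", ["db1"]))
manager.add_host(XenHost("xen01", ["app1"]))
print(manager.inventory())
```

Because the manager only calls `list_vms`, supporting another hypervisor means adding one subclass rather than changing the central view.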

SCVMM provides a central location for these VMs and servers, where an administrator can work with a view of how a change will affect other VMs and hosts. Many changes can be made through SCVMM whilst VMs are live, without interruption and without requiring a shutdown or restart.

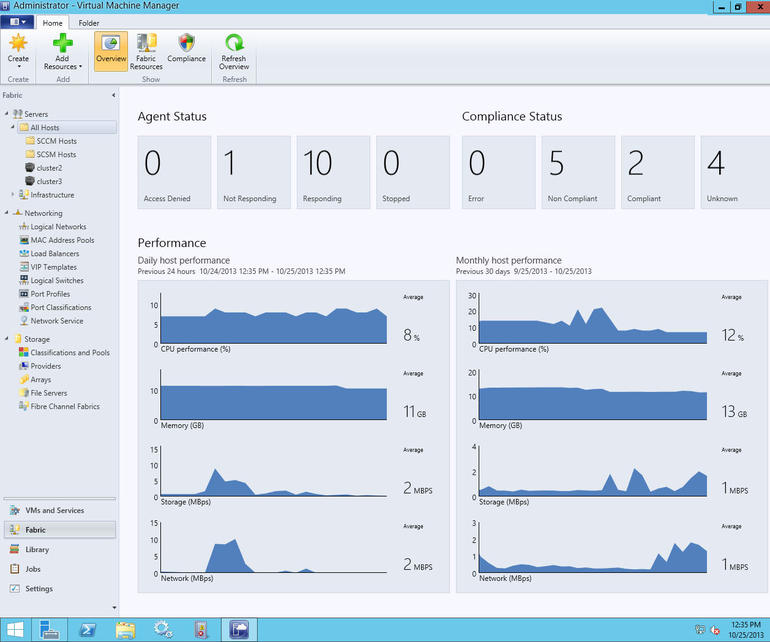

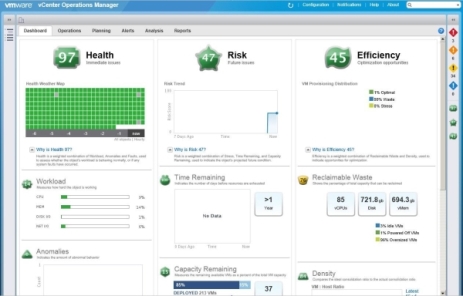

Because SCVMM can display and manage a complete virtual infrastructure, it gives administrators a clear and easy way to understand the state of the whole network. The SCVMM GUI shows the health of all VMs and hosts in the network. Its dashboard displays the overall state, the properties, and the CPU, networking and disk performance of each VM (TechNet, n.d.). An example of the SCVMM health dashboard is shown in Figure 11.
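A health dashboard of this kind rolls per-VM metrics up into a state for each host. The following is a minimal sketch of that roll-up. It assumes each VM reports CPU, network and disk utilization as percentages and uses hypothetical warning and critical thresholds. SCVMM's own health rules are not reproduced here.

```python
from __future__ import annotations

# Hypothetical utilization thresholds (%), chosen for illustration only.
WARNING, CRITICAL = 75.0, 90.0
SEVERITY = ["Healthy", "Warning", "Critical"]


def vm_health(metrics: dict[str, float]) -> str:
    """Classify a VM by its most heavily used resource (CPU, network, disk)."""
    worst = max(metrics.values())
    if worst >= CRITICAL:
        return "Critical"
    if worst >= WARNING:
        return "Warning"
    return "Healthy"


def dashboard(hosts: dict[str, dict[str, dict[str, float]]]) -> dict[str, str]:
    """Roll VM states up to host level: a host is as unhealthy as its worst VM."""
    return {
        host: max((vm_health(m) for m in vms.values()), key=SEVERITY.index, default="Healthy")
        for host, vms in hosts.items()
    }


hosts = {
    "host1": {"web1": {"cpu": 40, "network": 20, "disk": 35},
              "db1": {"cpu": 82, "network": 30, "disk": 60}},
    "host2": {"app1": {"cpu": 25, "network": 15, "disk": 10}},
}
print(dashboard(hosts))
```

Reporting each host at the level of its worst VM means a single overloaded machine is immediately visible at the top level of the view.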

As part of the System Center suite, SCVMM can be integrated with other System Center products such as Operations Manager. This allows it to produce reports with more detail than is shown in the GUI (TechNet, 2014).

Closely linked to this is SCVMM's ability to evaluate host capabilities and storage options in order to suggest placements and consolidate workloads and VMs. This frees up usable capacity and reduces the need for additional resources or hardware. This intelligent placement analysis allows the administrator to deploy machines against pre-set algorithms, dynamically optimizing the data center and its resources.
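Intelligent placement can be illustrated as a rating of candidate hosts for a new VM. The following is a minimal sketch, assuming a hypothetical formula: a host that cannot fit the VM scores 0, and otherwise the score is 5 times the fraction of CPU or memory left free after placement, whichever is smaller. This is not SCVMM's actual rating algorithm, and the host figures (cores and GB) are invented.

```python
from __future__ import annotations


def rate_hosts(hosts: dict[str, dict[str, float]], vm_cpu: float, vm_mem: float) -> dict[str, float]:
    """Rate each host 0-5 by the headroom it would have left after placement."""
    ratings = {}
    for name, h in hosts.items():
        free_cpu = h["cpu_total"] - h["cpu_used"] - vm_cpu
        free_mem = h["mem_total"] - h["mem_used"] - vm_mem
        if free_cpu < 0 or free_mem < 0:
            ratings[name] = 0.0  # the VM does not fit on this host
            continue
        # The scarcer resource limits how much room is left for future VMs.
        headroom = min(free_cpu / h["cpu_total"], free_mem / h["mem_total"])
        ratings[name] = round(5 * headroom, 1)
    # Best-rated host first, as an administrator would see it.
    return dict(sorted(ratings.items(), key=lambda kv: kv[1], reverse=True))


hosts = {
    "host1": {"cpu_total": 16, "cpu_used": 4, "mem_total": 64, "mem_used": 16},
    "host2": {"cpu_total": 16, "cpu_used": 10, "mem_total": 64, "mem_used": 40},
    "host3": {"cpu_total": 16, "cpu_used": 15, "mem_total": 64, "mem_used": 60},
}
print(rate_hosts(hosts, vm_cpu=2, vm_mem=8))
```

Rating by remaining headroom favours spreading load. A consolidation-oriented policy would instead prefer the busiest host that still fits, which packs VMs tightly and frees whole servers.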