Grid Computing Middleware API for Dynamic Configuration

Info: 31374 words (125 pages) Dissertation

Published: 24th Nov 2021

Tagged: Information Systems

CHAPTER 1: INTRODUCTION

In this developing scientific and technological era, we continuously strive to solve complex science and engineering problems. These problems involve huge amounts of data that must be analyzed in order to solve them. Such analysis requires high-performance computing, which in turn requires enormous computing power. The branch of high-performance computing that can provide this power is grid computing.

The focus area of this thesis is grid computing. Grid computing has rapidly emerged as a technology and infrastructure for wide-area distributed computing, in which globally distributed resources are used for computation. These resources need to be properly shared and used, and security issues must be taken into consideration when sharing them. In grid computing, a resource manager manages the resources needed for an allocated task. Because the number of resources is vast, the probability that some resource fails is high. If the resources are not dynamically monitored and managed, the computation will fail. The resources must therefore be used efficiently and effectively, and this can be achieved by the middleware.

Motivation and Objective of Research

Motivation

The grid computing environment is evolving at a rapid rate because of the demand for distributed and collaborative computing. It provides high-performance computing to users who do not have sufficient computing power and resources of their own. In addition, the establishment of virtual organizations and the rendering of services through virtual environments are growing very quickly. To satisfy these upcoming requirements, grid networks have become the solution of choice today.

Objective

Given the needs of the area and the challenges to be addressed, this research focuses on enhancing grid architectures, middleware APIs and services. The research studies in depth the existing grid architectures and the middleware APIs that support various services under each architecture, identifies services that are desired but not available, and identifies open challenges. Following this analysis, the work focuses on enhancing the architecture and middleware by modeling an improved architecture, an enhanced domain of services, and solutions to some of the unaddressed but necessary challenges. To this end, an enhanced library of middleware APIs is developed. Testing is carried out at the prototype level to demonstrate that the targets have been achieved.

Scope of Research

In this thesis, problems resulting from static configuration of middleware are studied, and an API extension is proposed that enables dynamic configuration of the middleware. I have reviewed the architectures of various available grid systems, as well as the Ganglia and Autopilot monitoring systems. I found that Ganglia supports many features but also has many limitations due to its architecture. I also reviewed and analyzed various grid middleware and frameworks, such as UNICORE and GLOBUS, and found that the need of the hour is a GUI-based grid framework. I then analyzed an available GUI grid framework, Alchemi .Net, and found that there is scope to improve it.

Organization of Thesis

The thesis is organized in chapters as follows:

Chapter 1 is the introduction, which contains the motivation and objective of the research, its scope, and the organization of the thesis.

Chapter 2 presents the literature study and its analysis. The researcher studied various available architectures and middleware, some of which are state of the art.

Chapter 3 contains the literature review and findings. Out of more than 300 papers reviewed, 70 were selected and reviewed further for problems in architecture and middleware solutions. Drawbacks in existing grid architectures and middleware are identified and presented in the review and findings section.

In Chapter 4, the problem is formulated based on the findings, and the target of the research is identified.

Chapter 5 contains the proposed work, in which a middleware solution is proposed and its API specification is given. Further, as an extension to existing knowledge, new algorithms are designed and developed for the proposed solution.

Chapter 6 gives the implementation methodology through different experiments and discusses a summary of the results by comparative analysis.

Chapter 7 gives the targets achieved as a conclusion.

Chapter 8 gives possible extensions of the research work.

Chapter 9 contains the References.

Chapter 10 is the Bibliography.

Chapter 11 is the Appendix in which publications of the researcher are given.

CHAPTER 2: LITERATURE STUDY & ANALYSIS

2.1 GRID COMPUTING

A grid environment is a distributed environment comprising diverse components that are used for various applications. These components include all the software, hardware, services and resources needed to execute the applications. There are three core areas of a grid environment [1][2]: architecture, middleware and services. This research focuses on the middleware. Middleware sits on top of the operating system and assists and connects software applications and components.

Many middleware systems are available for different types of applications. Each has its own communication policies and rules and its own modes of operation. These policies, rules and operations classify middleware as procedure oriented, object oriented, message oriented, component based or reflective[1]. Similarly, the functionalities exhibited by middleware can be classified into application-specific, management-specific and information-exchange-specific categories.

A known problem with the grid environment is that its components are widely distributed and used by a large number of users. This makes them vulnerable to faults, failures and excessive loads. Security is also a very important aspect, because most transactions and operations are done online. It is very important to protect the applications and the data involved from malicious, unauthorized or sometimes unintentional attacks. Well-defined access policies, cryptographic mechanisms and authentication models are needed to address this issue and provide security.

2.2 GRID ARCHITECTURE APPROACHES

The researcher surveyed different grid architectures and their mechanisms with the objective of finding out what is presently available and what can be done to improve it. Some of the architectures studied are discussed below.

SOGCA – Service-Oriented Grid Computing Architecture

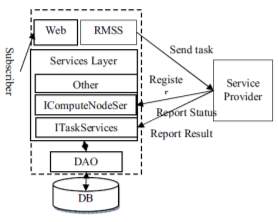

The SOGCA[3] is implemented using Web-service technology, as shown in Fig. 1.

Fig-1: Architecture of SOGCA (Y. Zhu. 2007)

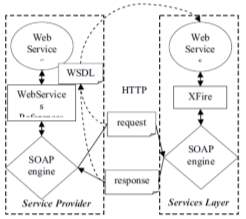

SOGCA interoperates across heterogeneous platforms by adopting the Web-service architecture. Web services standardize the messages that entities in a distributed system must exchange to perform various operations. At the lowest level, this standardization concerns the protocol used to transport messages (typically HTTP), message encoding (SOAP), and interface description (WSDL). A client interacts with a Web service by sending a SOAP message; the client may subsequently receive one or more response messages in reply. The Web-services implementation of SOGCA is shown in Fig. 2. There are several interfaces between the Services layer and the Service Provider, including one for registering and updating Service Provider information, one for dynamically reporting Service Provider state, and one for reporting task results.

Fig-2: Web Service Implementation (Y. Zhu. 2007)

The Services layer uses Codehaus XFire, a next-generation SOAP framework. It makes service-oriented development approachable through its easy-to-use API and support for standards, and it also offers high performance. The service provider sends SOAP messages to the Services layer through the Web Services References API of the .NET platform, using WSDL.
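To make the message-encoding step concrete, the following is a minimal sketch of how a state report from a Service Provider could be wrapped in a SOAP 1.1 envelope and read back. It is an illustration only: the `reportState` operation, its `providerId` and `state` fields, and the `http://example.org/sogca` namespace are hypothetical and are not taken from the SOGCA implementation.

```python
import xml.etree.ElementTree as ET

SOAP_ENV = "http://schemas.xmlsoap.org/soap/envelope/"  # SOAP 1.1 envelope namespace
SVC_NS = "http://example.org/sogca"  # hypothetical service namespace (assumption)


def build_state_report(provider_id: str, state: str) -> bytes:
    """Build a SOAP envelope carrying a hypothetical reportState call."""
    ET.register_namespace("soap", SOAP_ENV)
    envelope = ET.Element(f"{{{SOAP_ENV}}}Envelope")
    body = ET.SubElement(envelope, f"{{{SOAP_ENV}}}Body")
    call = ET.SubElement(body, f"{{{SVC_NS}}}reportState")
    ET.SubElement(call, f"{{{SVC_NS}}}providerId").text = provider_id
    ET.SubElement(call, f"{{{SVC_NS}}}state").text = state
    return ET.tostring(envelope, encoding="utf-8")


def parse_state_report(message: bytes) -> dict:
    """Extract the fields of the reportState call from an envelope."""
    root = ET.fromstring(message)
    call = root.find(f"{{{SOAP_ENV}}}Body/{{{SVC_NS}}}reportState")
    # Strip the "{namespace}" prefix from each child tag.
    return {child.tag.split("}")[1]: child.text for child in call}
```

In a real deployment the envelope would be sent over HTTP to the endpoint described by the service's WSDL; the transport is omitted here.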

AGRADC: Autonomic Grid Application Deployment & Configuration Architecture

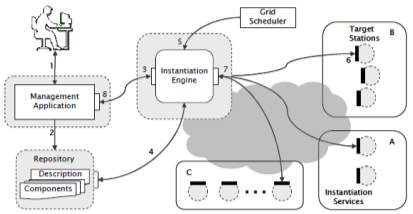

AGRADC (Autonomic Grid Application Deployment & Configuration Architecture)[4] is an architecture for instantiating grid applications that allows the necessary infrastructure to be deployed, configured, and managed upon demand. AGRADC is composed of four conceptual elements: management application; component repository; instantiation engine; and instantiation services. Figure 3 gives an overview of the architecture instantiated in a grid infrastructure comprised of three administrative domains, namely A, B, and C. The management application allows the developer to define the application components, specify the steps for deployment and configuration, and request the instantiation of the application. The components and deployment scripts are stored in a repository. The instantiation engine receives invocations from the management application and orchestrates the instantiation of the requested environment. Finally, instantiation services, hosted on all grid stations, supply interfaces enabling them to execute deployment, configuration, and management of components.

The interaction among architecture elements takes place as follows. First of all, using the CDL language, the developer defines the components that participate in the application (e.g., database, http server, and grid service), the deployment sequence to be respected, and the configuration parameters. The result of this phase is the generation of a set of CDL files and components that are stored in the repository. The next phase is the application instantiation request to the instantiation engine (3), which is accompanied by the identifier of the application description file location. Upon receiving the request, the instantiation engine retrieves this file (4), interprets it, and initiates the instantiation process. As the engine identifies each component in the description file, it retrieves the corresponding component from the repository. Based on the information provided by the grid scheduler (5) about the resources available, the engine decides on which stations each component will be instantiated and interacts with the instantiation services of the selected stations (6). The interaction includes operations for the deployment, configuration, and management of the components. The result of these operations – success or failure – is reported to the engine via notifications generated by the instantiation services (7).

Based on the policies expressed at the engine, the engine reacts automatically, either advancing the instantiation process or executing a circumvention procedure. Finally, if the instantiation process runs successfully, the execution environment of the grid is ready to execute the application.
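The interaction described above can be sketched as a small orchestration loop. This is a simplified illustration, not the AGRADC implementation: the class and parameter names are hypothetical, the scheduler and instantiation services are replaced by plain callables, and the circumvention policy is assumed to be a rollback in reverse deployment order.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class InstantiationEngine:
    repository: Dict[str, str]           # component name -> package (hypothetical)
    pick_station: Callable[[str], str]   # stands in for the grid scheduler
    deploy: Callable[[str, str], bool]   # stands in for an instantiation service
    log: List[str] = field(default_factory=list)

    def instantiate(self, deployment_order: List[str]) -> bool:
        """Deploy components in order; roll back on the first failure."""
        deployed: List[str] = []
        for name in deployment_order:
            package = self.repository[name]     # retrieve component from repository
            station = self.pick_station(name)   # ask the scheduler where to place it
            ok = self.deploy(station, package)  # success or failure notification
            self.log.append(f"{name}@{station}: {'ok' if ok else 'failed'}")
            if not ok:
                # Assumed circumvention policy: undo in reverse order.
                for done in reversed(deployed):
                    self.log.append(f"rollback {done}")
                return False
            deployed.append(name)
        return True
```

Other policies, such as retrying on a different station, could replace the rollback branch without changing the rest of the loop.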

Fig-3: The AGrADC Architecture & Interactions among its components (L. P. Gaspary et al. 2009)

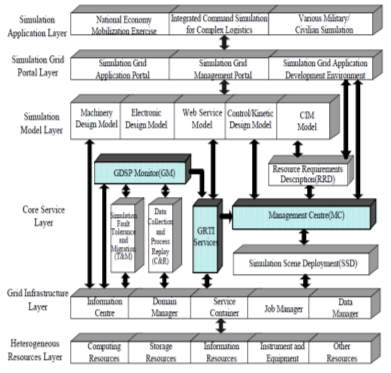

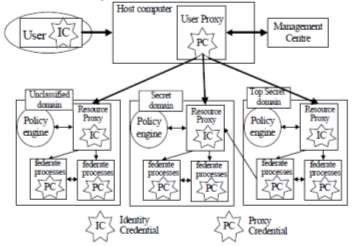

A Security Architecture for Grid-Based Distributed Simulation Platform

A grid-based distributed simulation platform (GDSP)[5] provides the base infrastructure for a service-oriented simulation platform and environment. It can run simulations efficiently over a wide area network, reuse simulation resources, and improve the load-balancing capability of the system. GDSP provides information assurance, but the simulation must still be guarded against unauthorized access. The overall structure of GDSP is shown in Figure 4. The Simulation Application Layer includes all kinds of simulations for specific applications. The Simulation Grid Portal Layer is the interface for interaction between simulations and users. The Simulation Model Layer provides the various models that simulations need. A model is the abstraction of a kind of simulation federate and must be deployed to GDSP. It is a WSRF (Web Services Resource Framework) grid service, and every service instance represents a federate. In this way, models need not be stored locally: users can invoke them through remote access over a wide area network, and multiple users can access different instances of the same model service simultaneously. Services can communicate with each other regardless of differences in programming languages and platforms.

Fig-4: GDSP Architecture (H. He. et al. 2008)

Fig-5: GDSP Security Architecture (H. He. et al. 2008)

To provide an authentication and access control infrastructure, the GDSP security architecture uses proxy and multiple-certification mechanisms. It protects system security and organizational interests, and allows simulations based on GDSP to operate securely in heterogeneous environments. The security architecture has little impact on the performance of GDSP.

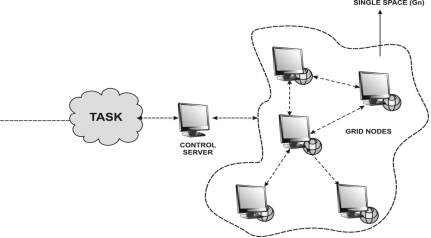

Object Based Grid Architecture for Enhancing Security in Grid Computing

The Object based Grid Architecture (OGA)[6] acts as a single system; the resources connected to the grid architecture are treated as single components or peripherals.

Fig-6: OGA Grid Architecture (M. V. Jose and V. Seenivasagam 2011)

OGA provides a single-space grid platform that enhances security and privacy in grid computing. Each node in the grid space is treated as an object in the MIS, and the authentication process is carried out in the MIS. During authentication, the MIS authenticates the requester with the grid node using the grid node objects. A user entering the grid space experiences the grid through the MIS virtual interface, but this interface is a dynamic object maintained by the MIS and by the corresponding grid node. Each node (Gn) connected to the grid environment has a separate partition allocated by the resource administrator, and all processing is done in that partition.

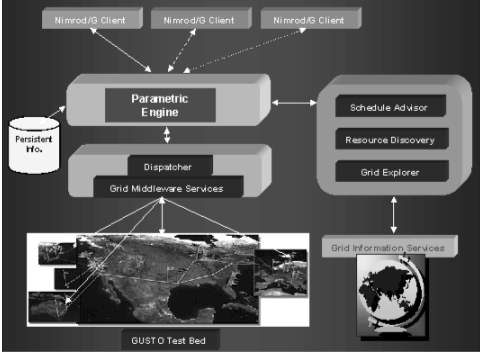

Nimrod/G: Architecture for Resource Management and Scheduling System

The architecture of Nimrod/G[7] is shown in Figure 7 and its key components are: Client or User Station, Parametric Engine, Scheduler, Dispatcher, & Job-Wrapper.

[Figure 7 component labels: Nimrod/G Clients, Parametric Engine, Schedule Advisor, Resource Discovery, Persistent Info, Dispatcher, Grid Explorer, Grid Middleware Services, Gusto Test Bed]

Fig-7: NIMROD/G Architecture (B. Rajkumar et al. 2000)

Client or User Station

This component acts as a user interface for controlling and supervising an experiment under consideration. The user can vary parameters related to time and cost that influence the direction the scheduler takes while selecting resources. It also serves as a monitoring console and lists the status of all jobs, which a user can view and control. Another feature of the client is that multiple instances of the same client can run at different locations. That means the experiment can be started on one machine, monitored on another machine by the same or a different user, and controlled from yet another location.

Parametric Engine

The parametric engine acts as a persistent job control agent and is the central component from which the whole experiment is managed and maintained. It is responsible for parameterization of the experiment, the actual creation of jobs, maintenance of job status, and interaction with the clients, the schedule advisor, and the dispatcher. The parametric engine takes as input the experiment plan, described in a declarative parametric modeling language, and manages the experiment under the direction of the schedule advisor. It then informs the dispatcher to map an application task to the selected resource. The parametric engine maintains the state of the whole experiment and ensures that the state is recorded in persistent storage. This allows the experiment to be restarted if the node running Nimrod goes down.

Scheduler

The scheduler is responsible for resource discovery, resource selection, and job assignment. The resource discovery algorithm interacts with a grid-information service directory, identifies the list of authorized machines, and keeps track of resource status information. The resource selection algorithm is responsible for selecting those resources that meet the deadline and minimize the cost of computation.
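The resource selection step can be illustrated with a short sketch. It assumes that each resource advertises an estimated runtime and a price per second, and it picks the cheapest authorized resource that still meets the deadline. The `Resource` fields and the cost model (runtime multiplied by price) are assumptions made for illustration; they are not the Nimrod/G scheduling algorithm itself.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Resource:
    name: str
    est_runtime_s: float    # estimated time to finish the job on this resource
    cost_per_s: float       # price charged per second of use
    authorized: bool = True


def select_resource(resources: List[Resource], deadline_s: float) -> Optional[Resource]:
    """Return the cheapest authorized resource that meets the deadline."""
    feasible = [
        r for r in resources
        if r.authorized and r.est_runtime_s <= deadline_s
    ]
    if not feasible:
        return None  # no resource can meet the deadline
    # Assumed cost model: total cost = runtime * price per second.
    return min(feasible, key=lambda r: r.est_runtime_s * r.cost_per_s)
```

Relaxing the deadline enlarges the feasible set, which is how the user's time and cost parameters steer the choice of resources.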

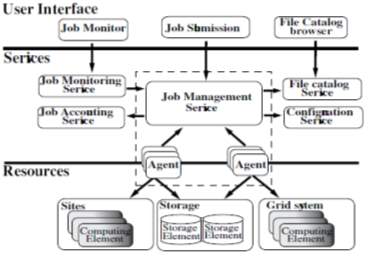

DIRAC: A Scalable Lightweight Architecture for High Throughput Computing

To facilitate large scale simulation and user analysis tasks, DIRAC (Distributed Infrastructure with Remote Agent Control)[8] has been developed. DIRAC can be decomposed into four sections: Services, Agents, Resources, and User Interface, as illustrated in figure 8.

Fig-8: DIRAC Architecture (A. Tsaregorodtsev et al. 2004)

The core of the system is a set of independent, stateless, distributed services. The services are meant to be administered centrally and deployed on a set of high-availability machines. Resources refer to the distributed storage and computing resources available at remote sites. Access to these resources is abstracted via a common interface. Each computing resource is managed autonomously by an Agent, which is configured with details of the site and site usage policy by a local administrator. The Agent runs on the remote site, manages the resources there, and monitors and submits jobs.

Jobs are created by users who interact with the system via the Client components. All jobs are specified using the ClassAd language, as are resource descriptions. Jobs are passed from the Client to the Job Management Services (JMS), allocated to the Agents on demand, submitted to Computing Elements (CE), executed on a Worker Node (WN), returned to the JMS and finally retrieved by the user. Jobs are only run when resources are not in use by local users. DIRAC operates only when there are completely free slots, rather than fitting in short jobs ahead of future job reservations. DIRAC has also started to explore the potential of instant messaging systems for distributed computing. High public demand for such systems has led to highly optimized packages that use well-defined standards and are proven to support thousands of simultaneous users.
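The on-demand allocation of jobs to Agents can be pictured as a pull model: an Agent asks for work only when its site has free slots. The sketch below is a toy illustration under that assumption. Jobs are plain dictionaries rather than ClassAd descriptions, and the names `JobManagementService`, `request_job` and `agent_cycle` are hypothetical, not DIRAC APIs.

```python
from collections import deque
from typing import Deque, Dict, Optional


class JobManagementService:
    """Toy stand-in for a central job queue."""

    def __init__(self) -> None:
        self._queue: Deque[Dict[str, int]] = deque()

    def submit(self, job: Dict[str, int]) -> None:
        self._queue.append(job)

    def request_job(self, free_cpus: int) -> Optional[Dict[str, int]]:
        """Return the first waiting job that fits the offered resources."""
        for job in self._queue:
            if job["cpus"] <= free_cpus:
                self._queue.remove(job)  # safe: we return immediately afterwards
                return job
        return None


def agent_cycle(jms: JobManagementService, free_cpus: int) -> Optional[Dict[str, int]]:
    """One Agent polling cycle: only ask for work when slots are free."""
    if free_cpus <= 0:
        return None  # resources are in use by local users
    return jms.request_job(free_cpus)
```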

Agora Architecture

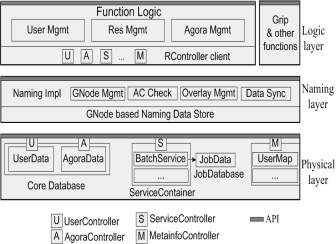

Agora[9] is an implementation of Virtual Organization. It manages users and resources. It has instances which provide policies to support a MAC hybrid cross-domain access control mechanism. These instances also maintain the context of operations. The architecture is shown in figure 9. It consists of three layers –

- First is the physical layer that contains external resources. It uses an abstraction RController to manipulate external resources.

- Second is the naming layer. All the GNodes are managed by this layer. GNodes include all the entities, users, resources, and agora instances.

- Third is the logic layer that implements all the Agora functionalities.

There are five basic concepts in the Agora architecture: resource, user, agora, application, and grip. These five concepts are closely related. In short, an application is represented and managed by a grip, runs on behalf of a user in a specified agora, and may access the authorized resources in the agora.

Resource: A resource is an entity providing some functions. There are external resources and internal resources. External resources are hosted in the real world, such as CPU, memory, disk, network, server, job queue, software, file, data, etc. Internal resources are managed by Agora in a uniform way. Service is an example of an internal resource.

User: A user is a subject who uses resources. There are three kinds of users in GOS: host users, GOS users and application users. A host user is a user in the local operating system. A GOS user is a global user. An application user is a user who is managed by a GOS application.

Agora: An agora instance, or agora, is a virtual organization for a specific purpose. An agora organizes interested users and needed resources, defines the access control policies, and forms a resource sharing context.

Application: An application is a software package providing some functions based on resources to end users.

Grip: A grip is a runtime construct to represent a running instance of an application. A grip is used to launch, monitor, and kill an application and to maintain context for the application.
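The relationship between these concepts can be sketched with two small classes. This is a simplified model for illustration only: the access control policy is reduced to a per-user set of resource names, and the class and method names are hypothetical rather than taken from the Agora implementation.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class Agora:
    name: str
    # Access control policy: user -> names of resources the user may access.
    policy: Dict[str, Set[str]] = field(default_factory=dict)

    def authorize(self, user: str, resource: str) -> None:
        self.policy.setdefault(user, set()).add(resource)

    def may_access(self, user: str, resource: str) -> bool:
        return resource in self.policy.get(user, set())


@dataclass
class Grip:
    """Runtime construct representing one running application instance."""
    application: str
    user: str
    agora: Agora
    state: str = "launched"

    def access(self, resource: str) -> bool:
        # A grip acts on behalf of its user inside its agora.
        return self.state == "launched" and self.agora.may_access(self.user, resource)

    def kill(self) -> None:
        self.state = "killed"
```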

Fig-9: AGORA Architecture (Y. Zou et al. 2010)

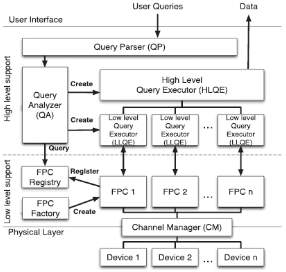

PerLa: A Language and Middleware Architecture for Data Management and Integration in Pervasive Information Systems

The development of the PerLa[10] System focused on the design and implementation of the following features:

- data-centric view of the pervasive systems,

- homogeneous high-level interface to heterogeneous devices,

- support for highly dynamic networks (e.g., wireless sensor networks),

- minimal coding effort for new device addition.

The result of this approach is the possibility of accessing all data generated by the sensing network via an SQL-like query language, called the PerLa Language, that allows end users and high-level applications to gather and process information without any knowledge of the underlying pervasive system. Every detail needed to interact with the network nodes, such as hardware and software idiosyncrasies, communication paradigms and protocols, computational capabilities, etc., is completely masked by the PerLa Middleware.

The PerLa Middleware provides great scalability both in terms of number of nodes and types of nodes. Other middlewares for pervasive systems only support deployment-time network configuration or provide runtime device addition capabilities for a well defined class of sensing nodes at best (e.g., TinyDB). These limitations are no longer acceptable. In modern pervasive systems, specifically wireless sensor networks, nodes can hardly be considered “static” entities. Hardware and software failures, device mobility or communication problems can significantly impact the stability of a sensing network. The ever-increasing presence of transient devices like PDAs, smart-phones, personal biometric sensors, and mobile environmental monitoring appliances makes the resilience to network changes an essential feature for a modern pervasive system middleware. Support for runtime network reconfigurability is therefore a necessity. Moreover, the middleware should also be able to detect device abilities and delegate to them whichever computation they can perform. The tasks that nodes are unable to perform should be executed by the middleware. PerLa fulfills these requirements by means of a Plug & Play device addition mechanism. New types of nodes are registered in the system using an XML Device Descriptor. The PerLa Middleware, upon reception of a device descriptor, autonomously assembles every software component needed to handle the corresponding sensor node. End users and node developers are not required to write any additional line of code.

Fig-10: Perla Architecture (F. Schreiber and R. Camplani 2012)

The PerLa architecture[10] is composed of two main elements. The first is a declarative SQL-like language, designed to provide the final user with a homogeneous overall view of the whole pervasive system. The provided interface is simple and flexible, and it allows users to completely control each physical node, masking the heterogeneity at the data level. The second is a middleware, implemented to support the execution of PerLa queries, whose main task is to manage the heterogeneity at the physical integration level. The key component is the Functionality Proxy Component, which is a Java object able to represent a physical device in the middleware and to take the device’s place whenever unsupported operations are required. Some optimizations that could improve the performance of the middleware and the expressiveness of the language have not yet been investigated.
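The idea of a proxy that stands in for a device when an operation is unsupported can be sketched as follows. The sketch is written in Python for consistency with the other examples in this chapter, whereas the actual component is a Java object. The operation names and the fallback table are assumptions made for illustration.

```python
from typing import Callable, Dict, List, Tuple


class FunctionalityProxy:
    """Represents one device; runs unsupported operations in the middleware."""

    def __init__(self, device_ops: Dict[str, Callable[[List[float]], float]]) -> None:
        self.device_ops = device_ops  # operations the device can run itself
        # Hypothetical middleware-side implementations used as a fallback.
        self.fallback_ops: Dict[str, Callable[[List[float]], float]] = {
            "avg": lambda xs: sum(xs) / len(xs),
            "max": max,
        }

    def execute(self, op: str, samples: List[float]) -> Tuple[str, float]:
        """Return where the operation ran and its result."""
        if op in self.device_ops:
            return "device", self.device_ops[op](samples)
        return "middleware", self.fallback_ops[op](samples)
```

A device that can only compute a maximum would be created as `FunctionalityProxy({"max": max})`; a request for an average would then be computed on the middleware side.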

MonALISA – a Distributed Monitoring Service Architecture

MonALISA[11] stands for Monitoring Agents in A Large Integrated Services Architecture. It provides a distributed monitoring service and is based on a scalable Dynamic Distributed Services architecture. This architecture is implemented using JINI/JAVA and WSDL/SOAP technologies. The system provides scalability by using multithreaded Station Servers to host a variety of loosely coupled, self-describing dynamic services. Each service has the ability to register itself and then to be discovered and used by any other services or clients. All services and clients have the ability to subscribe to a set of events in the system and to be notified automatically. The framework provided by MonALISA integrates many existing monitoring tools and procedures to collect parameters describing applications, computational nodes and network performance. It has built-in network protocol support and network-performance monitoring algorithms with which it monitors end-to-end network performance as well as the performance and facilities.
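The register, discover and subscribe pattern described above can be sketched with a toy in-process registry. This is only an illustration of the pattern: it does not use JINI or SOAP, and the class and method names are hypothetical.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

Callback = Callable[[str, float], None]


class LookupService:
    """Toy registry standing in for a lookup and discovery service."""

    def __init__(self) -> None:
        self._services: Dict[str, Dict[str, str]] = {}
        self._subscribers: DefaultDict[str, List[Callback]] = defaultdict(list)

    def register(self, name: str, **attributes: str) -> None:
        """A service registers itself with self-describing attributes."""
        self._services[name] = attributes

    def discover(self, **wanted: str) -> List[str]:
        """Find services whose attributes match every requested value."""
        return sorted(
            name for name, attrs in self._services.items()
            if all(attrs.get(k) == v for k, v in wanted.items())
        )

    def subscribe(self, event: str, callback: Callback) -> None:
        self._subscribers[event].append(callback)

    def publish(self, event: str, value: float) -> None:
        """Notify every subscriber of this event automatically."""
        for callback in self._subscribers[event]:
            callback(event, value)
```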

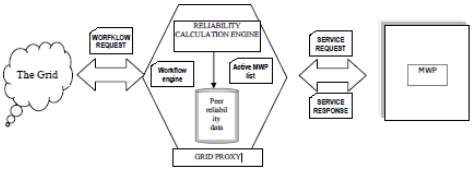

An Architecture for Reliable Mobile Workflow in a Grid Environment[12]

Mobile workflow peers (MWPs) are mobile devices that can connect to grids and participate in workflows. Grid Proxies (GPs) are fixed grid nodes with sufficient resources to allow mobile peers to connect to and participate in workflows on the grid. Because MWPs may be part of different networks they cannot communicate directly with each other, or with other grid nodes; instead they communicate only with the GPs. Each MWP can be in one of the following states: Connected (i.e. on line) or Unavailable (i.e. off line or otherwise busy).

Fig-11: Mobile grid workflow system Architecture[12]

(B. Karakostas and G. Fakas 2009)

A GP keeps track of connected MWPs using a ‘heartbeat’ protocol, in which it periodically sends a message to an MWP and waits for a response. If no response is received within a timeout period, the GP considers the MWP unavailable. For each MWP, the GP maintains an index of its reliability, based on the number of disconnections over a certain period. A GP also calculates the average reliability of its registered MWPs. GPs advertise to the grid the services of the MWPs that have registered with them. A GP receives an invitation to participate in a grid workflow by providing a service, and it can accept the invitation to execute a workflow activity by delegating its execution to a registered MWP. The MWP remains transparent as far as the workflow process and the other grid participants are concerned, as all interaction of the workflow process is with the GP (Figure 11). However, the GP relies on its connected MWPs to actually perform the activity. A GP cannot guarantee, at the time of the service request, that a suitable registered MWP will be online. Instead, it must perform a reliability calculation to determine the degree of redundancy required to meet the workflow deadlines. This means that the GP delegates the execution of the activity to multiple MWPs so that, with an acceptable probability, at least one of them will be available to provide the service.
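One way to picture the redundancy calculation is the sketch below. It assumes that each MWP has a reliability value between 0 and 1 and that MWPs fail independently, so the probability that at least one of the chosen MWPs is available is one minus the product of their failure probabilities. Both the independence assumption and the greedy choice of the most reliable MWPs first are illustrative; the source does not specify the formula used by the GP.

```python
from typing import Dict, List, Optional


def is_unavailable(last_reply_s: float, now_s: float, timeout_s: float) -> bool:
    """Heartbeat check: no reply within the timeout means unavailable."""
    return (now_s - last_reply_s) > timeout_s


def choose_mwps(reliabilities: Dict[str, float], target: float) -> Optional[List[str]]:
    """Pick the fewest MWPs so that at least one is available with
    probability >= target, assuming independent failures."""
    chosen: List[str] = []
    p_all_fail = 1.0
    # Greedy: take the most reliable peers first.
    ranked = sorted(reliabilities.items(), key=lambda kv: kv[1], reverse=True)
    for name, reliability in ranked:
        chosen.append(name)
        p_all_fail *= 1.0 - reliability
        if 1.0 - p_all_fail >= target:
            return chosen
    return None  # even all registered MWPs together cannot meet the target
```

For example, with reliabilities of 0.9 and 0.8, delegating to both peers gives a probability of 1 - (0.1 × 0.2) = 0.98 that at least one is available.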

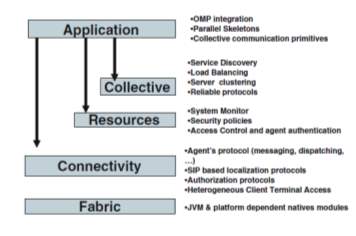

MAGDA: A Mobile Agent based Grid Architecture

MAGDA, the Mobile Agents based Grid Architecture[13], is conceived to provide secure access to a wide class of services in a heterogeneous, geographically distributed system. MAGDA is a layered architecture. Figure 12 lists the MAGDA components and services at each layer of the Grid model.

Fig-12: MAGDA Architecture (R. Aversa et al. 2006)

A mobile agent based middleware could be integrated within a Grid platform in order to provide each architecture with the facilities supported in the other one. In general, current middleware cannot migrate an application from one system to another, as there are many issues to be addressed. Some examples are the differences across resources in installed software, file system structures, and default environment settings. On the other hand, reconfiguration is a key requirement for implementing dynamic load balancing strategies.

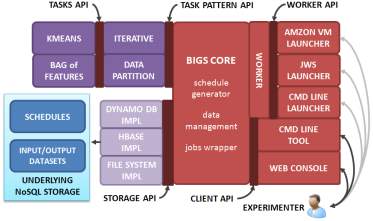

BIGS Architecture

BIGS[14], the Big Image Data Analysis Toolkit, is a software framework conceived to exploit computing resources of different kinds for image processing and analysis tasks. Through its architecture, BIGS offers a clear separation of roles for contributing developers and decouples storage functionality from algorithm implementation, from core development and from deployment packaging.

BIGS architecture is conceived with two goals in mind: (1) to provide an extensible framework where new tasks (algorithms), task patterns, storage interfaces and worker deployment technologies can be integrated; and (2) to encourage a clear separation of roles for contributors to the project through source code decoupling. This is achieved by establishing a set of APIs (Application Programming Interfaces) which decouple BIGS components from each other and against which different BIGS developers implement their code, as shown in Figure 13.

Fig-13: BIGS Architecture (R. Ramos-Pollan et al. 2012)

Each API or component defines the roles of the contributors to BIGS architecture.

Sintok Grid

Sintok-Grid[15] is a grid used at Universiti Utara Malaysia. Its basic architecture is shown in Figure 14, which depicts how the main nodes in the network are connected to each other and to the public network. This basic architecture consists of two network segments, namely the internal network and the external (public) network. All the servers of the Sintok-Grid are hosted by the internal network, and the other grid sites in the Academic Grid Malaysia are hosted by the public network.

Fig-14: Sintok Grid Architecture (M. S. Sajat et al. 2012)

The Sintok-Grid is part of the larger A-Grid system, so the server nodes need to be visible and accessible from networks outside the grid. Hence the servers were configured with public IP addresses. To make the grid system accessible from outside, the servers can be identified by hostnames rather than IP addresses. For this, the Domain Name Server (DNS) should be fully configured so that forward and reverse lookups are supported for each public IP address. Reverse lookups help in detecting intrusions and protecting the servers from outside attacks. A DNS system was therefore set up so that the Sintok-Grid integrates seamlessly with the A-Grid. The bandwidth between the WNs and the network, or WAN cloud, plays a major role in the overall performance of the system. The network bandwidth should accommodate all the data, command requests and responses that are transferred between the public network and the local network. The bandwidth of the internet link is sufficient to handle the expected traffic in Sintok-Grid. Once the hardware of the grid system and the network has been installed, the system must be installed with the right software, especially the operating system, middleware and virtualization software. In the current implementation of the Sintok-Grid, Scientific Linux 5.x, gLite 3.2 and Proxmox 1.8 have been selected as the operating system, middleware and virtualization platform respectively. Specific elements of the grid system, such as the CE, SE, UI and IS, require specific installation processes to be followed.
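The requirement that each public address supports both forward and reverse lookups can be checked with a few lines of code. The sketch below uses the standard `socket.gethostbyname` and `socket.gethostbyaddr` calls. The function name `check_dns` and the example hostname are hypothetical, and the resolver functions are passed as parameters so that the check can be exercised without a live DNS server.

```python
import socket
from typing import Callable, Tuple


def check_dns(
    hostname: str,
    ip: str,
    forward: Callable = socket.gethostbyname,
    reverse: Callable = socket.gethostbyaddr,
) -> Tuple[bool, bool]:
    """Return (forward_ok, reverse_ok) for one hostname/IP pair."""
    try:
        # Forward lookup: hostname -> IPv4 address.
        forward_ok = forward(hostname) == ip
    except OSError:
        forward_ok = False
    try:
        # Reverse lookup: IP -> (hostname, aliases, addresses).
        resolved = reverse(ip)[0]
        reverse_ok = resolved.rstrip(".").lower() == hostname.lower()
    except OSError:
        reverse_ok = False
    return forward_ok, reverse_ok
```

Calling `check_dns("ce.grid.example", "192.0.2.10")` with the default resolvers would query the configured DNS; a result of `(True, True)` indicates that both lookups agree for that server.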

SUNY (The State University of New York) POC Project [16]

In late 2007 and early 2008, the Dell Database and Applications teams partnered with SUNY ITEC and Oracle in a Proof-of-Concept (POC) project. In this project, the Banner ERP application systems were consolidated through grid computing into a single grid made of Dell servers and storage. The goal of the project was to demonstrate that a grid running Banner ERP applications can scale to handle 170,000 students in the databases, with 11,000 students simultaneously registering for courses and performing other tasks and with around 70,000 courses being selected in one hour, 37 times the actual number seen at a SUNY[16] school of 110,000 students.

The features offered by Oracle 10g and 11g to build the Grid computing model are:

- Clustering technology offers scalable and highly available database clusters.

- Database services allow applications to become independent of the physical implementation of a service, because a service directs database connections from an application to one or more database instances. Together with the load-balancing feature, this redirection makes it easy to connect dynamically to another set of database instances.

- Automatic Storage Management is provided.

- Oracle Enterprise Manager Grid Control provides central monitoring, management and provisioning of the entire Grid infrastructure.

- The load-balancing feature ensures that application workloads are distributed among the database instances.

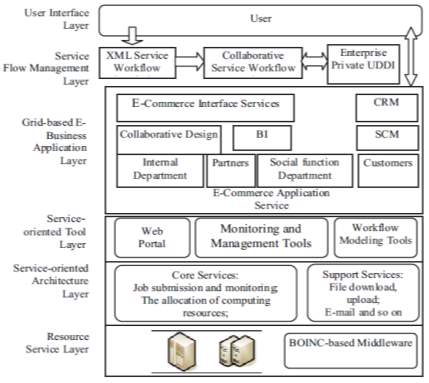

Conceptual Framework for Collaborative Business based on Service Oriented Grid Computing

A conceptual framework for a collaborative business platform based on SOA and Grid computing is shown in Figure 15:

Fig-15: Architecture of Collaborative Business Platforms based on SOA and Grid Service

- Resource Service Layer: This layer represents the computing resources and data resources that are distributed on the Internet. It also provides various resource call services such as computing power, storage capacity and security. This layer is the resource foundation of the collaborative business platform.

- Service-oriented Architecture Layer: This layer is located above the resource layer and provides interface access to the underlying resources. In order to support service-based grid application development, operation, deployment and debugging, the service-oriented architecture layer focuses on solving the various types of resource sharing and synergy. Through the job submission and monitoring service of this layer, users can submit and monitor jobs, suspend operations, and conduct remote operations. Data resource management handles data distribution, replication and resource synchronization, and also manages metadata.

- Service-oriented Tool Layer: This layer provides users with a consistent user and access interface (such as a Web-based service portal). It also provides programming and modeling tools for debugging and simulation, and workflow monitoring and management for the grid application. A variety of tools and APIs simplify grid application development, deployment, debugging and management.

- Grid-based E-Business Application Layer: This layer contains a Web Service-based Grid application system, which includes all of the collaborative business and enterprise application services. A collaborative business platform integrates a variety of applications and functions which are encapsulated in a grid service. Furthermore, information on external partners, communication, social functions and the interaction among customers and other services are included in this layer.

- Service Flow Management Layer: This layer consists of three parts: the XML service workflow description, the collaborative workflow engine and the business UDDI registration. The XML service workflow description, written in XML, describes the collaborative business process and stores the files in order according to the call sequence given in the description.

- User Interface Layer: This layer supports interactions and operations between end users and the platform through a simple and user-friendly interface. It also provides visualization tools that enable users to customize the collaborative business workflow, that is, the XML workflow description file.

2.3 GRID MIDDLEWARE APPROACHES

We surveyed different grid middleware and their security solutions with the objective of finding out what is presently available, which security solutions they offer, and what can be done to improve those solutions. We also analyzed how efficient each middleware is in terms of its security solution. Some of the middleware we studied are listed below:

- UNICORE [17][18]

- GLOBUS [19]

- GridSim [20][21]

- Sun Grid Engine [22] [23]

- Alchemi [24][25]

- HTCondor [26]

- GARUDA [27]

- Entropia [28], [29]

- Xgrid [30]

- iDataGuard [31]

- Middleware for Mobile Computing [32]

- Selma [33]

- XtremWeb [34]

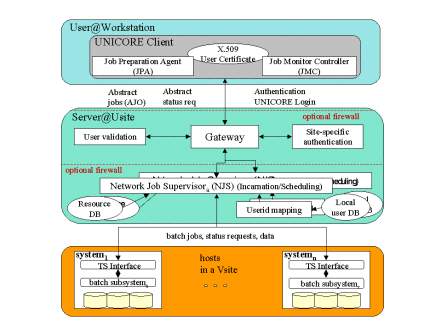

UNICORE

UNICORE [17] [18] stands for Uniform Interface to Computing Resources. It was developed in Germany to enable the country's supercomputer centers to provide their users with intuitive, seamless and secure access to resources. Its objectives were to hide the seams resulting from different hardware architectures, vendor-specific operating systems, incompatible batch systems, different application environments, historically grown computer center practices, naming conventions, file system structures, and security policies. Security was also required from the start, relying on the emerging X.509 standard for certificates to authenticate servers, software, and users and to encrypt the communication over the internet.

UNICORE provides an extensive set of services, such as:

- Job creation

- Job management

- Data management

- Application support

- Flow control

- Meta-computing

- Interactive support

- Single sign-on

- Support for legacy jobs

- Resource management

UNICORE assumes that all resources that are consumed are to be accounted for and that the centers are accountable to their governing bodies. UNICORE provides the technical infrastructure which allows the centers to pool and exchange their resources either in total or in part.

The UNICORE Architecture

UNICORE creates a three-tier architecture, depicted in Figure 16, at each UNICORE site. The UNICORE client supports the creation, manipulation, and control of complex jobs, which may involve multiple systems at one or more UNICORE sites. The user's jobs are represented as Abstract Job Objects (AJOs), effectively Java classes, which are serialized and signed when transferred between the components of UNICORE.

The server level of UNICORE consists of a Gateway, the secure entry point into a UNICORE site, which authenticates requests from UNICORE clients and forwards them to a Network Job Supervisor (NJS) for further processing. The NJS maps the abstract request, as represented by the AJO, into concrete jobs or actions which are performed by the target system, if it is part of the local UNICORE site. Sub-jobs that have to be run at a different site are transferred to that site’s gateway for subsequent processing by the peer NJS. The functions of the NJS are: synchronization of jobs to honor the dependencies specified by the user; automatic transfer of data between UNICORE sites as required for job execution; collection of results from jobs, especially stdout and stderr; and import and export of data between the UNICORE space, the target system, and the client workstation.
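The synchronization of sub-jobs according to user-specified dependencies can be illustrated with a topological ordering. This is only a sketch of the general idea, not UNICORE code: the sub-job names and the `execution_order` helper are hypothetical, and Python's standard `graphlib` module (Python 3.9 or later) stands in for whatever mechanism the NJS uses internally.

```python
from graphlib import TopologicalSorter


def execution_order(dependencies):
    """Return one valid run order.

    `dependencies` maps each sub-job to the set of sub-jobs that must
    finish before it may start (hypothetical job names).
    """
    return list(TopologicalSorter(dependencies).static_order())


# A small job with a linear chain of dependencies.
deps = {
    "stage_in": set(),
    "simulate": {"stage_in"},
    "postprocess": {"simulate"},
    "export": {"postprocess"},
}

order = execution_order(deps)
print(order)
```

A cyclic dependency specification would raise `graphlib.CycleError`, which corresponds to a job description that can never be scheduled.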

The third tier of the architecture is the target host which executes the incarnated user jobs or system functions. A small daemon, called the Target System Interface (TSI) resides on the host to interface with the local batch system on behalf of the user. A stateless protocol is used to communicate between NJS and TSI. Multiple TSIs may be started on a host to increase performance.

Fig-16: Unicore Architecture (U. T. June 2010)

Grid Services Used

Security: Certificates according to the X.509v3 standard provide the basis of UNICORE’s security architecture. Certificates serve as grid-wide user identifications, which are mapped to existing Unix accounts with the option to follow established conventions. Certificates also mutually authenticate peer systems in a grid. For a smooth procedure, all members of a grid should use the same certificate authority (CA) for both user and server certificates. Handling user certificates from different certificate authorities poses no technical problem: if Grid-A requires certificates from CA-A and Grid-B only accepts those issued by CA-B, a UNICORE client can handle multiple certificates, and the user has to select the correct one when connecting to either Grid-A or Grid-B. This is no different from the present situation in which users have different accounts on different systems; now they have a different identity for each grid.

Resources: UNICORE relies on descriptions of resources (hardware and software) which are available to the client at job creation and submission time. Standards defined by the working groups of the Global Grid Forum on Grid Information Services and Scheduling and Resource Management are valuable for future releases of UNICORE, especially for a resource broker. A replacement of the internal representation of resources will be transparent to the user.

Data: The UNICORE data model includes a temporary data space, called Uspace, which functions as a working directory for running UNICORE jobs at a Usite. The placement of the Uspace is fully under the control of the installation. UNICORE implements all functions necessary to move data between the Uspace and the client. This is a synchronous operation controlled by the user. No attempt is presently made to push data to the desktop from the server. Data movement between the Uspace and file systems at a Vsite is specified by the user explicitly or by application support in the client exploiting knowledge about the data requirements of the application. NJS controls the data movement, which may result in a Unix copy command or in a symbolic link to the data. Transfer of data between Usites is likewise controlled by NJS. Currently, the data is transferred using a byte streaming protocol or, for small data sets, within an AJO.

Security in the UNICORE system is maintained through single sign-on based on X.509 version 3 certificates. Before using the system, users must configure their client to use their digital certificate, which is imported into a Java file called the “keystore”. Users must also import the certificates of the certificate authorities (CAs) for the resources that they want to access and use. When the client is started, the user has to give a password to unlock the keystore file. When the client then submits a job to the system, it uses the private key belonging to the certificate to digitally sign the AJO (Abstract Job Object), a collection of Java classes, before it is transmitted to the NJS (Network Job Supervisor), which is the server. The signature is then verified using a copy of the user’s certificate maintained at the server. If it is verified, the identity of the user is established.

X.509 certificates work as follows: they rely on asymmetric-key cryptography, in which the encryption and decryption keys are different, that is, on a public-key encryption mechanism. This setup is also called a PKI (Public Key Infrastructure).

Two things can be ensured in UNICORE with X.509 certificates:

- Each client or server can prove its identity. It does so by presenting its certificate, which contains the public key, and providing evidence that it holds the corresponding private key.

- Messages encrypted with a public key can be read only with the matching private key. In this way an encrypted communication channel between different users on the Grid is established. The protocol uses the Secure Sockets Layer (SSL) mechanism.
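The sign-then-verify step used for AJOs can be illustrated with a toy example of asymmetric signing. The sketch below is deliberately simplified and is not UNICORE code: it uses textbook RSA with tiny, insecure numbers and no padding, the function names are hypothetical, and a real deployment relies on X.509 certificates and a vetted cryptographic library. It only shows that a signature made with the private exponent can be checked by anyone holding the public exponent.

```python
import hashlib

# Toy RSA key pair (textbook-sized numbers, insecure by design).
P, Q = 61, 53
N = P * Q                           # public modulus
E = 17                              # public exponent (would be carried in the certificate)
D = pow(E, -1, (P - 1) * (Q - 1))   # private exponent (would be kept in the keystore)


def digest(message: bytes) -> int:
    """Hash the message and reduce it into the range of the toy modulus."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def sign(message: bytes) -> int:
    """Client side: sign the digest with the private exponent."""
    return pow(digest(message), D, N)


def verify(message: bytes, signature: int) -> bool:
    """Server side: check the signature with the public exponent."""
    return pow(signature, E, N) == digest(message)


ajo = b"<serialized Abstract Job Object>"   # placeholder payload
signature = sign(ajo)
print(verify(ajo, signature))               # genuine signature
print(verify(ajo, (signature + 1) % N))     # altered signature
```

The first check succeeds and the second fails, because any change to the signature value no longer maps back to the digest of the message.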

GLOBUS

Globus[19] [32] [33] is:

- A community of users and developers who collaborate on the use and development of open source software, and associated documentation, for distributed computing and resource federation.

- The software itself—the Globus Toolkit: a set of libraries and programs that address common problems that occur when building distributed system services and applications.

- The infrastructure that supports this community — code repositories, email lists, problem tracking system, and so forth.

The software itself provides a variety of components and capabilities, including the following:

- A set of service implementations focused on infrastructure management.

- A powerful standards-based security infrastructure.

- Tools for building new Web services, in Java, C, and Python.

- Both client APIs (in different languages) and command line programs for accessing these various services and capabilities.

Detailed documentation on these various components, their interfaces, and how they can be used to build applications is available. These components in turn enable a rich ecosystem of components and tools that build on, or interoperate with, GT components—and a wide variety of applications in many domains. From our experiences and the experiences of others in developing and using these tools and applications, we identify commonly used design patterns or solutions, knowledge of which can facilitate the construction of new applications.

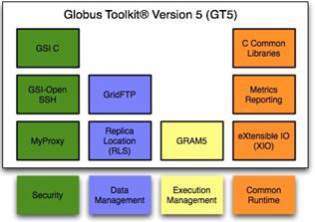

Many tools are available for developing grid computing applications. GT5 [37] is one such tool, with which applications or middleware for a grid computing architecture can be created. The open source Globus Toolkit is a fundamental enabling technology for the “Grid”. It lets people share computing power, databases, and other tools securely online across industry, institutional, and geographic boundaries without sacrificing local autonomy. It contains software services and libraries for resource monitoring, discovery, and management, as well as security and file management.

COMPONENTS OF GT 5.2[38]

- Data Management

  - GridFTP

- Jobs Management

  - GRAM5

- Security

  - GSI C

  - MyProxy

  - GSI-OpenSSH

  - SimpleCA

- Common Runtime

  - C Common Libraries

Fig-17: GT5 Components (M. K. Vachhani and K. H. Atkotiya 2012)

(A) GridFTP:

The GridFTP [39] protocol was defined to make the transport of data secure, reliable, and efficient for distributed science collaborations. The GridFTP protocol extends the standard File Transfer Protocol (FTP) with useful features such as Grid Security Infrastructure (GSI) security, increased reliability via restart markers, high performance data transfer using striping and parallel streams, and support for third-party transfer between GridFTP servers.

One of the foundational issues in high-performance computing (HPC) is the ability to move large (multi-gigabyte, and even terabyte), file-based data sets between sites. Simple file transfer mechanisms such as FTP and SCP are not sufficient from either a reliability or a performance perspective. GridFTP extends the standard FTP protocol to provide a high-performance, secure, reliable protocol for bulk data transfer.

GridFTP is a protocol defined by Global Grid Forum Recommendation GFD.020, RFC 959, RFC 2228, RFC 2389, and a draft before the IETF FTP working group. Key features include:

- Performance – GridFTP protocol supports using parallel TCP streams and multi-node transfers to achieve high performance.

- Checkpointing – GridFTP protocol requires that the server send restart markers (checkpoint) to the client.

- Third-party transfers – The FTP protocol on which GridFTP is based separates control and data channels, enabling third-party transfers, that is, the transfer of data between two end hosts, mediated by a third host.

- Security – Provides strong security on both control and data channels. Control channel is encrypted by default. Data channel is authenticated by default with optional integrity protection and encryption.
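The interplay between parallel streams and restart markers can be sketched in a few lines. The code below is an illustration under stated assumptions, not the GridFTP wire protocol or a Globus API: it assumes that a file is divided into contiguous byte ranges, one per stream, and that restart markers report the byte ranges already received. The function names are hypothetical.

```python
def split_ranges(size, streams):
    """Split [0, size) into at most `streams` contiguous half-open byte ranges."""
    if size <= 0 or streams <= 0:
        return []
    chunk = -(-size // streams)  # ceiling division
    return [(start, min(start + chunk, size)) for start in range(0, size, chunk)]


def remaining_ranges(size, completed):
    """Return the byte ranges still missing, given ranges confirmed by restart markers."""
    missing, cursor = [], 0
    for start, end in sorted(completed):
        if start > cursor:
            missing.append((cursor, start))
        cursor = max(cursor, end)
    if cursor < size:
        missing.append((cursor, size))
    return missing


# A 100-byte file sent over four parallel streams.
print(split_ranges(100, 4))

# After an interruption only the first and third ranges were confirmed,
# so a restarted transfer needs to resend just the gaps.
print(remaining_ranges(100, [(0, 25), (50, 75)]))
```

Resuming from the confirmed ranges rather than from the beginning is what makes restarts cheap for very large files.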

Globus Implementation of GridFTP:

The GridFTP protocol provides for the secure, robust, fast and efficient transfer of (especially bulk) data. The Globus Toolkit provides the most commonly used implementation of that protocol, though others do exist (primarily tied to proprietary internal systems).

The Globus Toolkit provides:

- a server implementation called globus-gridftp-server,

- a scriptable command line client called globus-url-copy, and

- a set of development libraries for custom clients.

While the Globus Toolkit does not provide a client with Graphical User Interface (GUI), Globus Online provides a web GUI for GridFTP data movement.

(B) GRAM5:

Globus implements the Grid Resource Allocation and Management (GRAM5) [38] service to provide initiation, monitoring, management, scheduling, and/or coordination of remote computations. In order to address issues such as data staging, delegation of proxy credentials, and job monitoring and management, the GRAM server is deployed along with Delegation and Reliable File Transfer (RFT) servers. GRAM typically depends on a local mechanism for starting and controlling the jobs. To achieve this, GRAM provides various interfaces/adapters to communicate with local resource schedulers (e.g. Condor) in their native messaging formats. The job details are specified to GRAM using a job description language known as the Resource Specification Language (RSL).

RSL provides a syntax consisting of attribute-value pairs for describing the resources required for a job, including memory requirements, the number of CPUs needed, etc.

GSI C, MyProxy and GSI-OpenSSH for Grid Security: These components establish the identity of users or services (authentication), protect communications, and determine who is allowed to perform what actions (authorization), as well as manage user credentials.
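The attribute-value form of RSL mentioned above can be illustrated by a small helper that renders a job description as a conjunction of `(attribute=value)` relations. This is a hedged sketch rather than a Globus API: the `to_rsl` and `quote` helpers are hypothetical, the attribute names are only examples, and the quoting rule (doubling an embedded quote character) is an assumption that should be checked against the GRAM documentation before use.

```python
def quote(value):
    """Render a value as an RSL literal; integers are left bare, strings are quoted."""
    if isinstance(value, int):
        return str(value)
    return '"' + str(value).replace('"', '""') + '"'


def to_rsl(attributes):
    """Build an RSL string of the form &(name=value)(name=value)..."""
    relations = []
    for name, value in attributes.items():
        if isinstance(value, (list, tuple)):
            rendered = " ".join(quote(item) for item in value)  # multi-valued attribute
        else:
            rendered = quote(value)
        relations.append(f"({name}={rendered})")
    return "&" + "".join(relations)


job = {
    "executable": "/bin/hostname",
    "arguments": ["-f"],
    "count": 2,
}
print(to_rsl(job))
```

Generating the description from a dictionary keeps the job parameters in one place and avoids hand-editing the parenthesized syntax.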

(C) Grid Security Infrastructure in C (GSI C):

The Globus Toolkit GSI C component[19] provides APIs and tools for authentication, authorization and certificate management. The authentication API is built using Public Key Infrastructure (PKI) technologies, e.g. X.509 Certificates and TLS. In addition to authentication it features a delegation mechanism based upon X.509 Proxy Certificates. Authorization support takes the form of a couple of APIs. The first provides a generic authorization API that allows callouts to perform access control based on the client’s credentials (i.e. the X.509 certificate chain). The second provides a simple access control list that maps authorized remote entities to local (system) user names. The second mechanism also provides callouts that allow third parties to override the default behavior and is currently used in the Gatekeeper and GridFTP servers. In addition to the above there are various lower level APIs and tools for managing, discovering and querying certificates. GSI uses public key cryptography (also known as asymmetric cryptography) as the basis for its functionality.

(D) MyProxy:

MyProxy[19] is open source software for managing X.509 Public Key Infrastructure (PKI) security credentials (certificates and private keys). MyProxy combines an online credential repository with an online certificate authority to allow users to securely obtain credentials when and where needed. Users run myproxy-logon to authenticate and obtain credentials, including trusted CA certificates and Certificate Revocation Lists (CRLs).

(E) GSI-OpenSSH:

GSI-OpenSSH[40] is a modified version of the OpenSSH secure shell server that adds support for X.509 proxy certificate authentication and delegation, providing a single sign-on remote login and file transfer service. GSI-OpenSSH can be used to login to remote systems and transfer files between systems without entering a password, relying instead on a valid proxy credential for authentication. GSI-OpenSSH forwards proxy credentials to the remote system on login, so commands requiring proxy credentials (including GSI-OpenSSH commands) can be used on the remote system without the need to manually create a new proxy credential on that system.

The GSI-OpenSSH distribution provides gsissh, gsiscp, and gsisftp clients that function equivalently to the ssh (secure shell), scp (secure copy), and sftp (secure FTP) clients except for the addition of X.509 authentication and delegation.

GridSim [20]

Modeling and simulation of a wide range of heterogeneous resources, single or multiprocessors, shared and distributed memory machines, and clusters are supported by the GridSim[20], [21], [41], [42] toolkit. The GridSim resource entities are being extended to support advanced reservation of resources and user-level setting of background load on simulated resources based on trace data.

The GridSim toolkit supports primitives for application composition and information services for resource discovery. It provides interfaces for assigning application tasks to resources and managing their execution. It also provides visual modeler interface for creating users and resources. These features can be used to simulate parallel and distributed scheduling systems. The GridSim toolkit has been used to create a resource broker that simulates Nimrod/G for the design and evaluation of deadline and budget constrained scheduling algorithms with cost and time optimizations. It is also used to simulate a market-based cluster scheduling system (Libra) in a cooperative economy environment. At the cluster level, the Libra scheduler has been developed to support economy-driven cluster resource management. Libra is used within a single administrative domain for distributing computational tasks among resources that belong to a cluster. At the Grid level, various tools are being developed to support a quality-of-service (QoS) – based management of resources and scheduling of applications. To enable performance evaluation, a Grid simulation toolkit called GridSim has been developed.

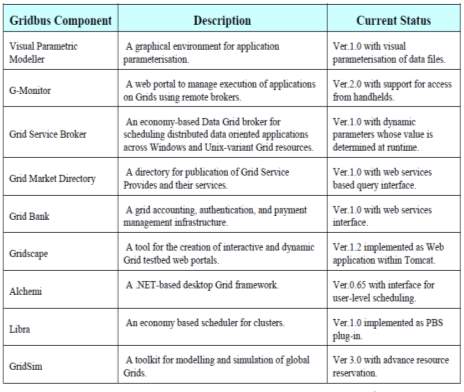

The Gridbus Project is engaged in the design and development of grid middleware technologies to support eScience and eBusiness applications. These include visual Grid application development tools for rapid creation of distributed applications, cooperative economy based cluster scheduler, competitive economy based Grid scheduler, Web-services based Grid market directory (GMD), Gridscape for creation of dynamic and interactive testbed portals, Grid accounting services, and G-monitor portal for web-based management of Grid applications execution.

The Gridbus Project has developed Windows .NET based desktop clustering software and Grid job web services to support the integration of both Windows and Unix-class resources for Grid computing. A layered architecture for realisation of low-level and high-level Grid technologies is shown in Figure 18.

Fig-18: Gridbus technologies and their status (R. Buyya and M. Murshed 2002)

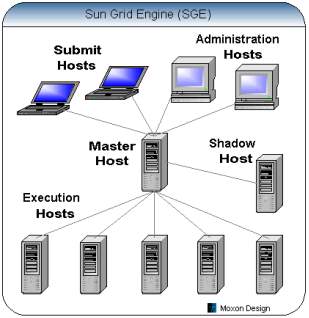

Sun Grid Engine [22], [23]

Sun Grid Engine (SGE) was a grid computing computer cluster software system (otherwise known as a batch-queuing system) that was improved and supported by Sun Microsystems. The original Grid Engine open source project website closed in 2010, but versions of the technology are still available under its original Sun Industry Standards Source License. Grid Engine is typically used on a computer farm or high performance computing (HPC) cluster and is responsible for accepting, scheduling, dispatching, and managing the remote and distributed execution of large numbers of standalone, parallel or interactive user jobs. It also manages and schedules the allocation of distributed resources such as processors, memory, disk space, and software licenses.

In 2010, Oracle Corporation acquired Sun and thus renamed Sun Grid Engine to Oracle Grid Engine. The Oracle Grid Engine 6.2u6 source code was not included with the binaries, and changes were not put back to the project’s source repository. In response to this, the Grid Engine community started the Open Grid Scheduler and the Son of Grid Engine projects to continue to develop and maintain a free implementation of Grid Engine. On January 18, 2011, Univa announced that it had hired the principal engineers from the Sun Grid Engine team. On October 22, 2013 Univa announced that it had acquired Oracle Grid Engine assets and intellectual property making it the sole commercial provider of Grid Engine software. Univa Grid Engine 8.0 was the first version, released on April 12, 2011. It was forked from SGE 6.2u5, the last open source release. It adds improved third party application integration, license and policy management, enhanced support for software and hardware platforms, and cloud management tools. Univa Grid Engine 8.3.1 was released on August 28, 2015. This release contained all of the new features in Univa Grid Engine 8.3.0. Univa Grid Engine 8.4.0 was released on May 31, 2016. This release supports Docker containers and will automatically dispatch and run jobs with a user specified Docker Image.

Fig-19: Sun Grid Engine Architecture (Daniel 2014)

As the diagram shows, SGE is built around a Master Host (qmaster) that accepts requests from the Submit and Administration Hosts and distributes workload across the pool of Execution Hosts. The SGE system uses a client-server architecture, and uses NFS file systems and TCP/IP sockets to communicate between the various hosts. The Sun Grid Engine Enterprise Edition (SGEEE) software manages the delivery of computational resources based on enterprise resource policies set by the organization’s technical and management staff. SGEEE software uses the enterprise resource policies to examine the locally available computational resources, and then allocates and delivers those resources.

In Sun Grid Engine, messages are protected with secret-key encryption, and a public-key algorithm is used to exchange the secret key. The user has to present a certificate to prove identity, and in response receives a certificate from the system. This ensures that the communication is correct and valid, and it establishes a session for communication. After this, the communication continues in encrypted form only. A session is valid only for a limited period and ends when that period expires. To continue the communication, the session has to be re-established.

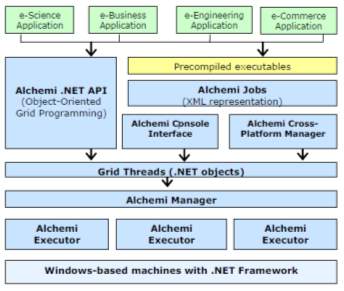

Alchemi [25], [43]

Alchemi is a .NET-based framework that provides the runtime machinery and programming environment required to construct enterprise/desktop grids and develop grid applications. It allows flexible application composition by supporting an object-oriented application programming model in addition to a file-based job model. Cross-platform support is provided via a web services interface, and a flexible execution model supports dedicated and non-dedicated (voluntary) execution by grid nodes. Alchemi was conceived with the aim of making grid construction and development of grid software as easy as possible without sacrificing flexibility, scalability, reliability and extensibility. Alchemi follows the master-worker parallel programming paradigm, in which a central component dispatches independent units of parallel execution to workers and manages them. In Alchemi, this unit of parallel execution is termed a ‘grid thread’ and contains the instructions to be executed on a grid node, while the central component is termed the ‘Manager’. A ‘grid application’ consists of a number of related grid threads. Grid applications and grid threads are exposed to the application developer as .NET classes / objects via the Alchemi .NET API.
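The master-worker paradigm described above can be sketched as follows. This is an analogy written in Python, not the Alchemi .NET API: the `GridThread` and `Manager` classes are hypothetical stand-ins that borrow the terminology of the text, and a local thread pool plays the role of the grid nodes.

```python
from concurrent.futures import ThreadPoolExecutor


class GridThread:
    """An independent unit of work; here it simply squares a number."""

    def __init__(self, value):
        self.value = value
        self.result = None

    def start(self):
        self.result = self.value * self.value


class Manager:
    """Central component that dispatches grid threads to a pool of workers."""

    def __init__(self, workers=4):
        self.workers = workers

    def run(self, threads):
        def execute(thread):
            thread.start()
            return thread

        # pool.map returns results in submission order, whichever worker finishes first.
        with ThreadPoolExecutor(max_workers=self.workers) as pool:
            return list(pool.map(execute, threads))


# A "grid application" made of five related grid threads.
application = [GridThread(n) for n in range(5)]
finished = Manager(workers=3).run(application)
print([thread.result for thread in finished])
```

Because the units of work are independent, the Manager is free to hand them to whichever worker is idle, which is what makes the model suitable for non-dedicated nodes.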

Fig-20: Alchemi .Net Architecture (A. Luther et al. 2005)

When an application written using this API is executed, grid thread objects are submitted to the Alchemi Manager for execution by the grid. Alternatively, file-based jobs (with related jobs comprising a task) can be created using an XML representation to grid-enable legacy applications for which precompiled executables exist. Jobs can be submitted via the Alchemi Console Interface or the Cross-Platform Manager web service interface, which in turn convert them into grid threads before submitting them to the Manager for execution by the grid. The Alchemi middleware uses a role-based authentication security model, a thread model to submit and execute jobs, and a cross-platform web service model for interoperability. Jobs are represented as XML files, and Base64 encoding and decoding is used. By itself, however, Alchemi does not support single sign-on or a delegation mechanism. These mechanisms can be implemented on the Alchemi framework with X.509 proxy certificates. For this implementation, a trusted communication is set up between two hosts, and the client host is then authenticated by the server host. After authentication, the client gets access to the various applications provided by the framework with full delegation rights.

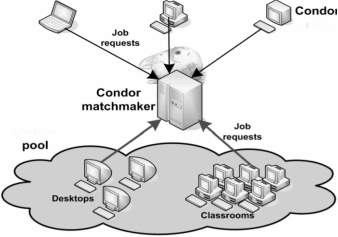

HTCondor [26]

Condor is a distributed environment designed for high throughput computing (HTC) and CPU harvesting. CPU harvesting is the process of exploiting non-dedicated computers (e.g. desktop computers) when they are not in use. Condor-G is a high-throughput scheduler supported by the Grid Resource Allocation Management (GRAM) component of Globus. It uses non-dedicated resources to schedule jobs. Processing huge amounts of data on large and scalable computational infrastructures is gaining increasing importance.

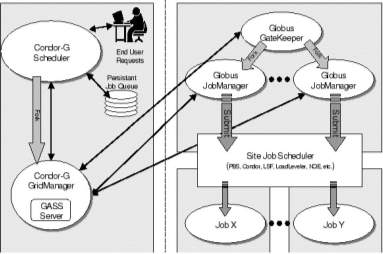

Fig-21: CONDOR Architecture (J. Frey et al. 2001)

The heart of the system is the Condor Matchmaker. Users describe their applications in the ClassAds language and submit them to Condor. ClassAds allow users to define custom attributes for resources and jobs. On the other side, resources publish their information to the Matchmaker. Condor then matches job requests with available resources. Condor provides numerous advanced functionalities such as job arrays, workflow support, checkpointing and job migration. It enables users to define resource requirements and rank resources, and it provides a mechanism for transferring files to and from remote machines.

Condor-G is the job management part of Condor. Condor-G allows users to submit jobs into a job queue, keeps a log of the life cycle of the jobs, and manages the input and output files. Condor-G uses the Globus Toolkit to start the job on the remote machine instead of using the protocols developed for Condor. Condor-G allows users both to access resources and to manage jobs running on remote resources. It manages the job queue and the resources from the various sites where the jobs can execute. Using Globus mechanisms, Condor-G communicates with those resources and transfers files to and from them. Condor-G uses GRAM for the submission of jobs, and it runs a local GASS (Global Access to Secondary Storage) server for file transfers. It allows users to submit many jobs at once, monitor those jobs through an interface, and receive a notification when a job completes or fails to execute.
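The matchmaking idea, in which requirements are checked on both sides and the job then ranks the acceptable resources, can be sketched in a few lines. This is not the ClassAd language or an HTCondor API: plain Python dictionaries and functions stand in for ClassAd attributes and expressions, and all attribute names and machines are hypothetical.

```python
def matchmake(job, machines):
    """Return the best machine for the job, or None if nothing matches.

    A match requires that the job accepts the machine AND the machine
    accepts the job; among the matches, the job's rank decides.
    """
    candidates = [
        machine for machine in machines
        if job["requirements"](machine) and machine["requirements"](job)
    ]
    if not candidates:
        return None
    return max(candidates, key=job["rank"])


def accepts_any_owner_except_guest(job):
    return job["owner"] != "guest"


machines = [
    {"name": "node-a", "arch": "X86_64", "memory": 1024, "requirements": accepts_any_owner_except_guest},
    {"name": "node-b", "arch": "X86_64", "memory": 4096, "requirements": accepts_any_owner_except_guest},
    {"name": "node-c", "arch": "PPC", "memory": 8192, "requirements": accepts_any_owner_except_guest},
]

job = {
    "owner": "alice",
    "requirements": lambda m: m["arch"] == "X86_64" and m["memory"] >= 2048,
    "rank": lambda m: m["memory"],  # prefer the machine with the most memory
}

match = matchmake(job, machines)
print(match["name"] if match else "no match")
```

Here node-a fails the memory requirement and node-c fails the architecture requirement, so node-b is selected.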

Fig-22: Condor Globus Interaction (J. Frey et al. 2001)

Fig. 22 shows how Condor-G interacts with the Globus Toolkit. Condor-G contains a GASS (Global Access to Secondary Storage) server, which is used to transfer the executable, standard input, output and error files to and from the remote job execution site. Condor-G uses the Globus Resource Allocation Manager (GRAM) to contact the remote Globus Gatekeeper, to start a new job manager, and to monitor the progress of the job. Condor-G can also detect and intelligently handle a crash of the remote resource.

In HTCondor (High Throughput Computing Condor), security is applied through authentication, using methods such as GSI authentication, Windows authentication, Kerberos authentication and file system authentication. Users use public key certificates in GSI authentication; as a result, the problem of identity spoofing persists. Checkpoint servers are also used, but they can be replaced maliciously, resulting in poor retrieval of data. In the Condor system, the problem of Denial of Service (DoS) persists if proper access permissions are not set. Even the configuration files and log files can be changed. The user gets full access to the job once it is submitted, which means that the user can even change the owner of the job.

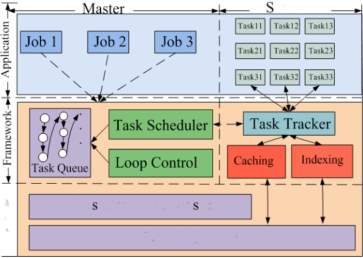

Hadoop [44]

Fig-23: Hadoop Framework Architecture (K. Shvachko et al. 2010)

Fig. 23 shows the Hadoop framework architecture. A Hadoop cluster makes use of the slave nodes to execute map and reduce tasks. Each slave node has a fixed number of map slots and reduces slots that they are executed simultaneously. Slave node sends a heartbeat signal to the master node. Upon receiving the signal from a slave node that has empty map/reduce slots, the master node invokes the Map-Reduce scheduler to assign tasks to the slave node. The slave node then reads the contents of the corresponding input data block, parses input key/value pairs out of the block, and passes each pair to the user defined map function. The output of map function is the intermediate key/value pairs, which then buffered in memory, and written to the local disk and partitioned into R regions by the portioning function. The locations of these intermediate data are passed to the master node. The master node forwards these locations to reduce tasks. A reduce task uses remote procedure calls to read the intermediate data generated by the M map tasks of the job. Each reduce task is responsible for a region of intermediate data and it has to retrieve its partition of data from all slave nodes that have executed the M map tasks. This process of shuffling involves many-to-many communications among slave nodes. The reduce task then reads the intermediate data and invokes the reduce function to produce the final output data (i.e., output key/value pairs) for its reduce partition. The input data is stored in the local disk of the machines that makes up the cluster so that the network bandwidth is conserved. Thus, a Map-Reduce scheduler often takes the input file’s location information and schedules the map task on the slave node that contains the replica of the corresponding input data block. 
Hadoop is useful for data staging, operational data store architectures and ETL that ingests massive amounts of data, because it eliminates the need to predefine the data schema before loading the data into Hadoop. It executes work in parallel. Data transformation and enrichment can be done easily on the raw data in the Hadoop environment, and the structure of the data can be defined flexibly. Flat scalability is the main advantage of Hadoop over other distributed systems. Hadoop does not perform well when processing a limited amount of data on a small number of nodes, because the overhead involved in starting a Hadoop program is relatively high. MPI (Message Passing Interface), a distributed programming model, performs much better on two, three or possibly even thousands of machines, but scaling it to larger data volumes by adding more hardware requires high cost and considerable engineering effort. Handling partial failure is very simple because tasks do not depend on one another, and Hadoop is a failure-tolerant synchronous distributed system.

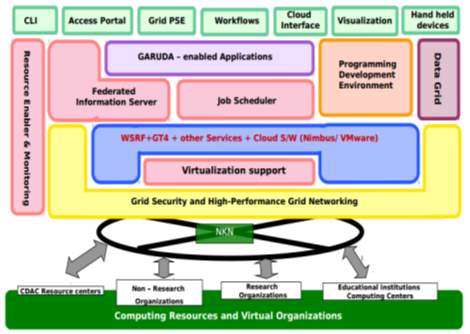

GARUDA [27]

The Indian Grid Certification Authority (IGCA), a certification authority for Grid, has received accreditation from the Asia Pacific Grid Policy Management Authority (APGridPMA) to provide Indian researchers with access to worldwide grids. Indian researchers can now request user and host certificates from IGCA, which is part of the Centre for Development of Advanced Computing (C-DAC), and gain access to worldwide grids. The eligible researchers are mainly users of the GARUDA Grid, foreign collaborators, and institutes involved in Grid research and scientific collaborations with India.

GARUDA (Global Access to Resource Using Distributed Architecture) is India’s Grid computing initiative connecting 17 cities across the country. The 45 participating institutes in this nationwide project include all the IITs, the C-DAC centers and other major institutes in India. GARUDA is a collaboration of science researchers and experimenters on a nationwide grid of computational nodes, mass storage and scientific instruments that aims to provide the technological advances required to enable data and compute intensive science. One of GARUDA’s most important challenges is to strike the right balance between research and the daunting task of deploying that innovation into some of the most complex scientific and engineering endeavors being undertaken today. GARUDA has adopted a pragmatic approach of using existing Grid infrastructure and Web Services technologies. The deployment of grid tools and services for GARUDA will be based on a judicious mix of in-house developed components, the Globus Toolkit (GT), and industry-grade and open source components. The foundation phase of GARUDA is based on a stable version of GT4. Resource management and scheduling in GARUDA is based on a deployment of industry-grade schedulers in a hierarchical architecture. At the cluster level, scheduling is achieved through LoadLeveler for AIX platforms and Torque for Linux clusters.

Fig-24: Garuda Architecture (GBC Pune Aug 2012)

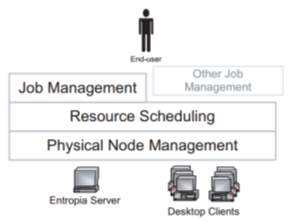

Entropia [28], [29]

The Entropia DCGrid aggregates the raw desktop resources into a single logical resource. This logical resource is reliable and predictable despite the fact that the underlying raw resources are unreliable (machines may be turned off or rebooted) and unpredictable (machines may be heavily used by the desktop user at any time). This logical resource provides high performance for applications through parallelism, and the Entropia Virtual Machine provides protection for the desktop PC, unobtrusive behavior for the user of that machine, and protection for the application’s data.

Fig-25: Entropia Desktop Distributed Computing Grid Architecture (A. Chien et al. 2003)

The Entropia server-side system architecture is composed of three separate layers, as shown in Fig-25. At the bottom is the Physical Node Management layer, which provides basic communication to and from the client, the naming (unique identification) of client machines, security, and node resource management. On top of this layer is the Resource Scheduling layer, which provides resource matching, scheduling of work to client machines, and fault tolerance. Users can interact directly with the Resource Scheduling layer through the available APIs, or alternatively they can access the system through the Job Management layer, which provides management facilities for handling large numbers of computations and files.

The Entropia security service uses a range of encryption and sandboxing technologies. Sandboxing provides virtualization of a single machine, which helps to enforce security policies and to manage identity for a single application and its resources. Security is achieved by applying cryptography to the communication between applications and by assigning jobs access rights to the file system, the interface and so on. However, this increases the cost and reduces the high-performance capability of the system.

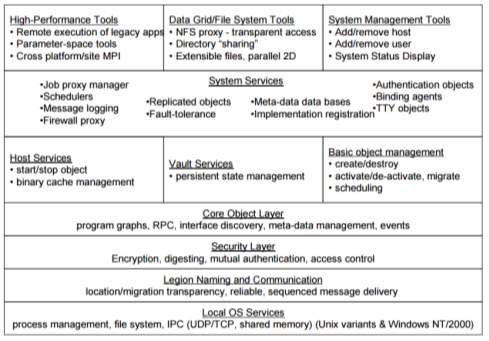

Legion [45]

Legion has been designed as a virtual operating system for distributed resources, with OS-like support for current and expected future interactions between resources. The bottom layer is the local operating system, or execution environment, layer. This corresponds to true operating systems such as Linux, AIX and Windows 2000. Legion depends on the process management services, file system support, and inter-process communication services delivered by the bottom layer, e.g., UDP, TCP or shared memory. Above the local operating system services layer sits the Legion communications layer. This layer is responsible for object naming and binding as well as for delivering sequenced, arbitrarily long messages from one object to another. Delivery is accomplished regardless of the location of the two objects, object migration, or object failure. For example, object A can communicate with object B even while object B is migrating from one place to another, or even if object B fails and subsequently restarts. This is possible because of Legion’s three-level naming and binding scheme, in particular its lower two levels.

Fig-26: Legion Architecture (A. S. Grimshaw and M. A. Humphrey 2003)

The lower two levels consist of location-independent abstract names called LOIDs (Legion Object IDentifiers) and object addresses specific to communication protocols, e.g., an IP address and a port number. Next are the security layer and the core object layer. The security layer implements the Legion security model for authentication, access control, and data integrity (e.g., mutual authentication and encryption on the wire). The core object layer addresses method invocation, event processing (including exception and error propagation on a per-object basis), interface discovery and the management of meta-data. Above the core object layer are the core services that implement object instance management (class managers) and abstract processing resources (hosts) and storage resources (vaults). These are represented by base classes that can be extended to provide different or enhanced implementations. For example, the host class represents processing resources. It has methods to start an object given a LOID, a persistent storage address and the LOID of an implementation to use, to stop an object given a LOID, to kill an object, and so on.
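The way a location-independent name lets a sender keep reaching an object that has migrated can be illustrated with a small sketch. The code below is not Legion's API: the classes `BindingAgent`, `Network` and `Sender`, the string used as a LOID and the addresses are all hypothetical, and the retry logic is a simplified assumption about how a stale LOID-to-address binding might be refreshed.

```python
class BindingAgent:
    """Hypothetical registry mapping a LOID to the object's current address."""

    def __init__(self):
        self._bindings = {}

    def register(self, loid, address):
        self._bindings[loid] = address

    def lookup(self, loid):
        return self._bindings[loid]


class Network:
    """Toy transport: each (ip, port) address owns an inbox list."""

    def __init__(self):
        self.endpoints = {}

    def deliver(self, address, message):
        if address not in self.endpoints:
            raise ConnectionError(f"nothing listening at {address}")
        self.endpoints[address].append(message)


class Sender:
    """Sends by LOID; caches bindings and refreshes them when they go stale."""

    def __init__(self, agent, network):
        self.agent = agent
        self.network = network
        self.cache = {}

    def send(self, loid, message):
        address = self.cache.get(loid) or self.agent.lookup(loid)
        try:
            self.network.deliver(address, message)
        except ConnectionError:
            # Cached binding is stale (object migrated or restarted):
            # ask the binding agent again and retry once.
            address = self.agent.lookup(loid)
            self.network.deliver(address, message)
        self.cache[loid] = address
        return address


if __name__ == "__main__":
    agent, net = BindingAgent(), Network()
    loid = "loid:object-B"                 # hypothetical identifier format
    old, new = ("10.0.0.1", 5000), ("10.0.0.2", 5000)
    net.endpoints[old] = []
    agent.register(loid, old)
    sender = Sender(agent, net)
    sender.send(loid, "hello")
    net.endpoints[new] = net.endpoints.pop(old)   # object B migrates
    agent.register(loid, new)
    print(sender.send(loid, "still there?"))
```

The sender never needs to know that the address changed; it only holds the LOID, which is the property the naming scheme is meant to provide.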

The Legion team is presently focusing on issues of fault tolerance (autonomic computing) and security policy negotiation across organizations.

Xgrid [30]

Xgrid permits single sign-on, which is done through Kerberos. To establish a connection for executing a job, the client must send a password to the server, and the server in turn uses a password to authenticate itself. The password exchange relies on MD5, which is a hash function rather than an encryption method. Different permissions are set for different users. No certificate authentication or any other mechanism is used, so the problem of identity spoofing persists in Xgrid.

iDataGuard [31]

iDataGuard is an interoperable security grid middleware. Users are allowed to outsource their file system to heterogeneous data storage providers such as Rapidshare and Amazon. It uses cryptographic techniques to maintain data integrity and confidentiality. Normally, data on the client side is stored in plaintext and cryptography is applied only at the storage provider, which makes both outside and inside attacks possible. iDataGuard solves this problem by applying cryptography on the client side. It also provides index-based keyword search. However, certificate authentication is not supported.

Middleware for Mobile Computing [32]

A survey of middleware paradigms for mobile computing presents several research projects that were started to address the dynamic and security aspects of mobile distributed systems. The paper makes clear that traditional object-oriented middleware approaches based on technologies such as CORBA and Java RMI succeed in providing heterogeneity and interoperability, but cannot provide the support required by modern, advanced mobile computing applications.

Selma [33]

Selma is a mobile agent based middleware. It provides neighborhood discovery and wireless communication for distributed applications in mobile multi-hop ad-hoc networks. Traditional technologies such as CORBA and Java RMI are not suitable for middleware supporting mobile ad-hoc networks, because in such networks multiple nodes are distributed across the network and all of them would have to be accessed through these technologies. The paper concludes by arguing that a complete middleware solution that fulfills all the required functionality with respect to security and resource management does not yet exist. No certification is used for authentication.

XtremWeb [34]

XtremWeb is an open source middleware for peer-to-peer distributed grid applications and desktop grids. Any participant can be a user. The access rights it provides are UNIX based, and sandboxing technology is also used. It uses a public key algorithm for authentication. Its problem is that the grid security policy is breached, because the pilot job owner, i.e. the one who started the job, can be different from the final job owner.

2.4 Grid Monitoring Systems

A grid environment involves large-scale sharing of resources within various virtual organizations (called VOs in common grid terminology). This creates a need for mechanisms that enable continuous discovery and monitoring of grid entities (resources, services and activity), which can be quite challenging owing to the dynamic and geographically distributed nature of these entities. Information services thus form a critical part of such an infrastructure, and result in a more planned and regulated utilization of grid resources. Any grid infrastructure should therefore have a monitoring system dedicated to this task, which should provide at a minimum: dynamic resource discovery, information about resources, information about grid activity, and performance diagnostics.

The goal of grid monitoring [46]–[50] is to measure and publish the state of resources at a particular point in time. Monitoring must be end-to-end, which means that all components in an environment must be monitored: applications, services, processes and operating systems; CPUs, disks, memory and sensors; and routers, switches, bandwidth and latency. Fault detection and recovery mechanisms need the monitoring data to determine whether the parts of an environment are functioning correctly. Moreover, a service might use monitoring data as input to a prediction model for forecasting performance, which in turn can be used by a scheduler to determine which components to use [46]–[50].

Monitoring a grid can be a challenging task, given that restricted quality of service, low reliability and network failure are the rule rather than the exception when dealing with globally distributed computing resources. For such a system to be robust, there should be no centralized control of information. The system should keep providing at least its minimal functionality for the largest possible subset of grid entities even if certain information access points fail to perform their function. This robustness can only be achieved if:

- The information services are themselves distributed, and geographically reside as close to the individual components of a grid as possible.

- The monitoring is performed in as decentralized a fashion as possible under the constraints of the underlying resources’ architecture.

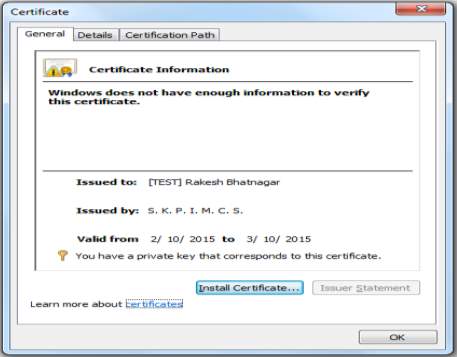

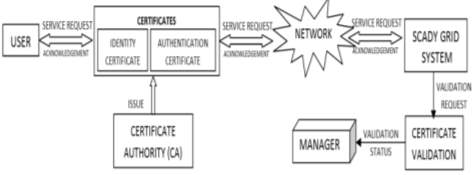

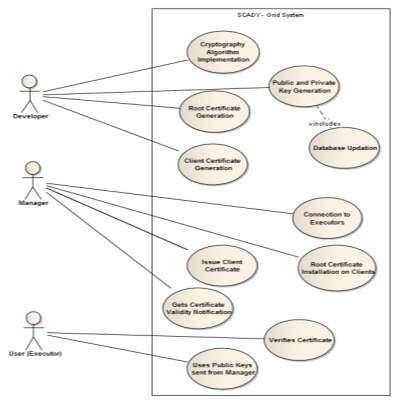

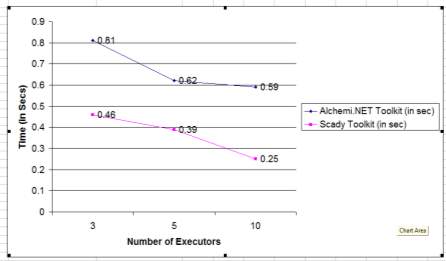

A grid monitoring system should therefore make sure that unavailable and/or unreachable services and resources do not interfere with its normal functionality. In addition, if the system is made aware of such defaulting entities, monitoring becomes more effective at reducing the time the grid needs to recover from failures of its participating entities.
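The requirement that unreachable information services must not disturb the rest of the monitoring can be sketched as follows. This is a minimal illustration under stated assumptions, not the design of any particular grid monitoring system: `InfoService`, `snapshot` and the site names and metrics are hypothetical, and an unreachable service is simulated by raising `ConnectionError`.

```python
import time


class InfoService:
    """Hypothetical information service that resides close to one grid site."""

    def __init__(self, site, resources, reachable=True):
        self.site = site
        self.resources = resources
        self.reachable = reachable

    def query(self):
        """Return the current resource state, or fail if the service is down."""
        if not self.reachable:
            raise ConnectionError(f"{self.site} is unreachable")
        return dict(self.resources)


def snapshot(services, clock=time.time):
    """Poll every service independently; a failure is recorded, not propagated."""
    state, unreachable = {}, []
    for service in services:
        try:
            state[service.site] = service.query()
        except ConnectionError:
            # Awareness of defaulting entities: remember which sites failed
            # so that recovery can be triggered, but keep polling the others.
            unreachable.append(service.site)
    return {"timestamp": clock(), "state": state, "unreachable": unreachable}


if __name__ == "__main__":
    services = [
        InfoService("site-a", {"cpu_free": 12, "disk_gb": 400}),
        InfoService("site-b", {"cpu_free": 0, "disk_gb": 80}, reachable=False),
        InfoService("site-c", {"cpu_free": 3, "disk_gb": 950}),
    ]
    print(snapshot(services))
```

Each snapshot carries a timestamp because monitoring data describes the state of resources at a particular point in time; the list of unreachable sites is what a recovery mechanism or scheduler could act on.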