How Are Intuition and Rational Deliberation Shaped by Characteristics of the Decision-making Environment?

Info: 9063 words (36 pages) Dissertation

Published: 11th Dec 2019

Tagged: Economics, Consumer Decisions

How is the relative accuracy of intuition and rational deliberation shaped by characteristics of the decision-making environment?

Table of Contents

1. Introduction

2. Intuition and rational deliberation

3. The Structure of the Environment

4. Environmental complexity

5. Social environments

5.1. Game theory

5.2. Accountability and social norms

6. Expertise of the decision-maker

Summary

Humans have traditionally been viewed as rational agents who act only after careful, conscious deliberation. Intuition, mediated by judgement heuristics, reduces effort, but the classical perspective implies that heuristics carry a greater risk of error, because they often ignore information and violate normative laws of logic and probability. However, in many real-world environments the assumptions underlying normative rational models are not met. Real-world environments are often social, complex and unpredictable, and they shape both the goals and the outcomes of decisions. This dissertation assesses how the structure and complexity of the environment affect the outcomes of simple and computationally complex strategies, with reference to the bias-variance dilemma. Social contexts are then discussed using the framework of game theory, to demonstrate that classically rational decisions are not necessarily accurate. Finally, it evaluates how expertise, or specialist knowledge of a familiar environment, shapes the relative performance of intuition and rational deliberation. In this way, the dissertation evaluates empirical and computational studies from a variety of contexts in order to establish when, and how, these environmental characteristics determine whether intuition will outperform rational deliberation, or vice versa.

1. Introduction

Humans are adaptable creatures, residing in a world that is often social, complex and unpredictable. Neglecting the real-world context of decision-making amounts to modelling human choice in a vacuum, without regard to the environment that shapes and guides judgements and decisions. The literature has seen a proliferation of studies fixated on how people make mistakes at the point of decision, when it is the accuracy of different strategies that bears relevance to real-world decision-making. As a result, the field of judgement and decision-making has in many ways become disconnected from cognitive psychology, limiting its theoretical development and its influence on other fields such as economics, finance, law, medicine and marketing. This dissertation aims to redress this balance through a thorough investigation of research that sheds light on how the environment in which a decision is made shapes its outcome, with a focus on the relative accuracy of intuitive and deliberative thought.

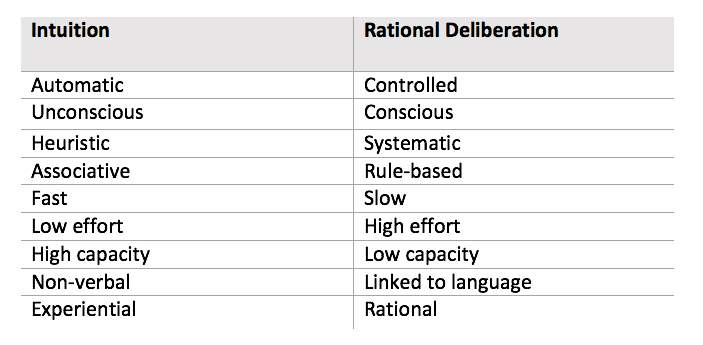

Although humans instinctively believe their judgements and decisions to be the product of conscious reflection, most are actually guided by unconscious cognitive processes from which intuitions arise (Epstein, 2010). Dual process theories of reasoning posit that cognitive activity may be divided into two interacting systems (Stanovich & West, 2000). Intuition is linked to an effortless, automatic System 1, characterised by the activation of associative memory. Rational deliberation, on the other hand, is a function of the effortful System 2, which draws on working memory; this explains why it is slow, flexible, limited in capacity and registered in consciousness (Smith & DeCoster, 2000). While System 1 processes are automatic and often associated with baseline functioning, System 2 has been described as more computationally demanding, and is thus used to monitor and override System 1 when more deliberative control is required. Table 1 summarises a number of attributes typically ascribed to the intuitive System 1 and the rational System 2.

Table 1: Adapted from Evans (2008): attributes associated with dual systems of thinking.

The distinction between intuition and reasoning has been extensively debated: critics of dual process theory dispute the notion that there are two qualitatively different cognitive systems, each with a characteristic array of attributes. However, much of this criticism is directed at dual process theory as a generalised category, whereas the theory exists in several distinct forms in the literature that are not easily mapped onto one another (Evans & Stanovich, 2013). This may partly be because multiple implicit processes are responsible for producing intuition (see Glöckner & Witteman, 2010). Research would benefit from elucidating these processes, in order to avoid confusion between different forms of dual process models in future work. Although the exact cognitive processes underlying intuition and rational deliberation are not fully understood, dual process theory remains a valid and useful framework that potentially describes the ways in which humans make everyday judgements.

Research directed at determining whether intuition or rational deliberation is more accurate may have wide-reaching implications for decision-making in both public and professional spheres, and is thus of crucial importance. Experiments may gauge accuracy by determining whether judgements predict known behaviour, agree with other judgements or, most commonly, correlate with normative statistical probabilities. However, accuracy, defined as how close a judgement or decision comes to a criterion (Kruglanski, 1989), proves problematic in applied psychology, where ‘ideal’ criteria may be subjective and often require judgement in themselves. Throughout, this dissertation uses the lens of context to challenge present notions of ‘accuracy’, defined by comparison against putatively normative models that are believed to produce optimal judgements in social contexts. It first defines and discusses intuition and rational deliberation with reference to the notion of rationality. This is followed by a discussion of the recent ‘fast-and-frugal’ research trend, which emphasises that in certain environments intuition can be more accurate than more complex computational strategies (e.g. Gigerenzer & Gaissmaier, 2011). On this view, heuristics, although associated with bias, may produce more accurate judgements in complex and unpredictable environments. These features of human habitats are amplified further by sociality: decisions may involve or affect others whose personalities, judgements and future decisions are uncertain. Finally, this dissertation discusses how familiarity with an environment leads to the development of expertise, which shapes the way in which intuition or rational deliberation may be employed and consequently affects the relative accuracy of decision outcomes.

2. Intuition and rational deliberation

Traditionally, conscious deliberation has been viewed as producing more rational, and therefore more accurate, outcomes than intuition (Koriat, Lichtenstein, & Fischhoff, 1980). Deliberation comes into play when System 1 encounters difficulties, provided that sufficient capacity and motivation are available. It involves multiple steps, including searching for relevant evidence, weighting it and coordinating it with theories, and reaching a decision. Some of these steps may be performed subconsciously, or be subject to biases and errors, but at least two must be computed consciously for the process to count as deliberative (Kuhn, 1989). Conscious deliberation is often associated with classical ideas of rationality, defined as thinking or behaving in an internally consistent way that obeys the rules of logic. Rational decisions are thought to maximise expected utility, with agents acting according to their preferences at the time of acting. These rational processes may be described by a function that assigns a numerical expected utility to every outcome and gamble, such that the expected utility of a gamble equals the sum of the utilities of its component outcomes, each weighted by its probability (Savage, 1954).
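The expected-utility rule described above can be made concrete with a short sketch. The gambles and the two utility functions (risk-neutral and a concave square-root utility) are illustrative assumptions and are not taken from Savage (1954). The function names are hypothetical. The sketch shows that the same maximising rule can rank the same gambles differently depending on the utility function assumed.

```python
import math
from typing import Callable, Dict, Sequence, Tuple

Gamble = Sequence[Tuple[float, float]]  # (probability, outcome) pairs

def expected_utility(gamble: Gamble, utility: Callable[[float], float]) -> float:
    """Sum of the utilities of a gamble's outcomes, each weighted by its probability."""
    if not math.isclose(sum(p for p, _ in gamble), 1.0):
        raise ValueError("probabilities must sum to 1")
    return sum(p * utility(x) for p, x in gamble)

def best_option(options: Dict[str, Gamble], utility: Callable[[float], float]) -> str:
    """An expected-utility maximiser picks the option with the highest expected utility."""
    return max(options, key=lambda name: expected_utility(options[name], utility))

def risk_neutral(x: float) -> float:
    return x

def risk_averse(x: float) -> float:
    return math.sqrt(x)  # concave (assumption): each extra unit is worth less

# Hypothetical choice: a sure 50, or a 50/50 gamble between 0 and 120.
options = {"sure": [(1.0, 50.0)], "gamble": [(0.5, 0.0), (0.5, 120.0)]}
print(best_option(options, risk_neutral))  # gamble (60 > 50)
print(best_option(options, risk_averse))   # sure (about 7.07 > about 5.48)
```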

The study of decision-making has been largely dominated by these economic models of rationality. They are valuable because they are formally explicit and tractable, and because they generate quantitatively precise predictions about human decision-making that can be tested in a wide variety of circumstances. Yet more recent research has produced evidence that, in practice, these models do not adequately describe human behaviour in real-world contexts, and that rational deliberation does not always produce perfectly rational solutions. This is often discussed in terms of bounded rationality: people must operate under limited cognitive capacity and the constraints imposed by the environment (Simon, 1955). To work within these boundaries, people employ a variety of techniques to reduce the effort of making decisions, such as integrating less information and reducing the difficulty of retrieving cue values (Shah & Oppenheimer, 2008). This results in unconscious, high-capacity cognitive activity, giving rise to intuition. Intuitive judgements can be defined as those which appear quickly in consciousness, are strong enough to act upon, and whose underlying reasons we are not fully aware of (Kahneman, 2003).

The high capacity of System 1 is made possible by its characteristic and frequent use of judgement heuristics: simple rules that attempt to extract only the most important information from a complex environment and ignore the rest (Gigerenzer, Hertwig, & Pachur, 2011). These ‘mental shortcuts’ allow humans to make the rapid and effortless judgements and decisions that guide everyday life, and they are mostly accurate (e.g. Kahneman & Klein, 2009; Epstein, 2010). Numerous heuristics have been named and studied (see Shah & Oppenheimer, 2008); there seem to be myriad ways in which humans simplify and solve complex problems. However, a multitude of empirical studies describe biases (systematic deviations from a rational or expected answer) that have been attributed to the use of heuristics (Tversky & Kahneman, 1974). Many psychologists criticise intuition as systematically flawed and error-prone, because when making intuitive judgements about probability people often ignore information, violate laws of logic and fail to apply basic statistical reasoning (Tversky & Kahneman, 1974). Many accounts of bounded rationality therefore assume that, to act within their cognitive limits, people must often rely on intuition as a means of reducing effort, despite the biases, and thus the cost to accuracy, that may result (Kahneman, 2003).

Yet, to the contrary, several researchers have argued in recent years that heuristics are ecologically rational. That is to say, appropriate heuristics selected from an ‘adaptive toolbox’ (Gigerenzer, 1999) may produce decisions in certain social contexts that are not only more efficient than, but also just as accurate as, those produced by rational deliberation. The justification for the greater accuracy of heuristics in certain environments stems from the bias-variance dilemma, a fundamental problem in statistical inference: a model's prediction error reflects its variance as well as its bias (Gigerenzer & Brighton, 2009). Therefore, heuristics with high bias but low variance may in many cases produce more accurate outcomes than an unbiased strategy with high variance. For example, using the 1/N rule to allocate money equally between N assets introduces bias, because it ignores data on past returns, but it has no variance, because the allocation is always simply 1/N. In seven allocation problems, the 1/N rule outperformed 14 complex policies based on 10 years of past data (DeMiguel, Garlappi, & Uppal, 2009). Although these broad and flexible policies had low bias, they made less accurate predictions than the simple 1/N rule, since incorporating noisy past data led to high variance. This bias-variance dilemma is encountered whenever a judgement must be made about the world. It provides a formal explanation of why decisions may be more accurate when made with an adaptive toolbox of biased heuristics in some environments, and with flexible rational deliberation in others. The lens of context is therefore crucial in determining how accuracy will vary with mode of thinking, as a function of environmental characteristics.
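The bias-variance trade-off can be illustrated with a toy simulation. It assumes a hypothetical quantity that must be estimated from small, noisy samples. The flexible strategy, the sample mean, is unbiased but varies from sample to sample. The rigid strategy ignores the data and always returns the same fixed guess, loosely analogous to the 1/N rule. This is not a reconstruction of the analysis by DeMiguel, Garlappi, and Uppal (2009). All parameter values and function names are assumptions.

```python
import random
import statistics

def decompose(estimates, truth):
    """Split the mean squared error of a set of estimates into bias^2 + variance."""
    mean_est = statistics.fmean(estimates)
    bias_sq = (mean_est - truth) ** 2
    variance = statistics.fmean([(e - mean_est) ** 2 for e in estimates])
    mse = statistics.fmean([(e - truth) ** 2 for e in estimates])
    return bias_sq, variance, mse

def compare(noise_sd, truth=0.5, sample_size=5, fixed_guess=0.0, trials=20_000, seed=1):
    """Estimate `truth` many times from small noisy samples using two strategies."""
    rng = random.Random(seed)
    flexible, rigid = [], []
    for _ in range(trials):
        sample = [rng.gauss(truth, noise_sd) for _ in range(sample_size)]
        flexible.append(statistics.fmean(sample))  # uses the data: unbiased but variable
        rigid.append(fixed_guess)                   # ignores the data: biased, zero variance
    return decompose(flexible, truth), decompose(rigid, truth)

for noise_sd in (0.5, 2.0):
    flexible, rigid = compare(noise_sd)
    print(f"noise sd {noise_sd}: flexible (bias^2, var, mse) = "
          f"{tuple(round(v, 3) for v in flexible)}, "
          f"rigid = {tuple(round(v, 3) for v in rigid)}")
```

Under these assumptions, the ranking of the two strategies reverses as noise increases. With low noise, the flexible estimator has the lower mean squared error. With high noise, its variance outweighs the rigid estimator's bias.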

Studies of human decision-making must investigate the real-world contexts from which they are so often separated, for context determines whether normative models of rationality do or do not produce optimal decisions. Rationality, as defined by Simon (1955), requires knowledge of all options, their consequences and their probabilities. In other words, classical rationality requires predictable small worlds, in which no relevant information is unknown or must be estimated from small samples (Savage, 1954). The relevance of these so-called ‘small worlds’ to real, large-world decision-making is questionable. Real-world scenarios are complex, uncertain and unpredictable, far removed from the isolated studies of risk that pervade the decision-making literature. As such, Gigerenzer & Gaissmaier (2011) argue that current norms of probability and logic are inappropriate, because the conditions under which rational models define optimal reasoning may not be met in real-world situations. The next sections review the evidence in order to determine whether normative models truly reflect optimal reasoning in natural environments.

3. The Structure of the Environment

The way in which information is structured in the environment is of critical importance to the relative performance of intuition and rational deliberation. To achieve reasonable accuracy and minimise bias, a heuristic must suit the problem; for example, a prediction made on the basis of a single variable must use a variable that is highly correlated with the attribute to be judged. Hogarth & Karelaia (2007) investigated such structural factors more thoroughly, using statistical tools to model how the accuracy of five models of heuristic or linear judgement varies as a function of environmental characteristics. They constructed various sets of three-cue environments designed to mimic real-world environments by systematically varying cue validities and cue redundancy. Cue validities could be equally weighted, compensatory or non-compensatory. They were non-compensatory when, with cues ordered by validity, the validity of each cue was greater than or equal to the sum of the validities of all less valid cues. Redundancy was defined by the average intercue correlation. Five predictive models were tested in these constructed environments, and their performance in choosing between pairs of alternatives was compared. All of the models represent feasible psychological processes. Algebraic linear combination (LC) was used as a model of rational deliberation, and is a good process description when only limited information is available for integration. The other models correspond to heuristics. For instance, the single variable (SV) rule may model any heuristic based on only one variable, such as availability or representativeness (Tversky & Kahneman, 1974). The equal weighting (EW) rule, on the other hand, gives all cues equal weight and combines them.
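The following is a minimal sketch of how the single variable (SV), equal weighting (EW) and linear combination (LC) rules might decide between two alternatives. It also includes take-the-best (TTB), which appears in Figure 1. The sketch assumes binary cue values and known cue weights. The function names and example weights are hypothetical. Hogarth & Karelaia (2007) modelled continuous cues and criteria statistically, so this sketch only illustrates the decision rules and the non-compensatory condition defined above.

```python
from typing import Sequence

Profile = Sequence[int]  # binary cue values for one alternative (hypothetical encoding)

def is_noncompensatory(weights: Sequence[float]) -> bool:
    """True if, with cues sorted by validity, each weight >= the sum of all smaller weights."""
    w = sorted(weights, reverse=True)
    return all(w[i] >= sum(w[i + 1:]) for i in range(len(w) - 1))

def pick(score_a: float, score_b: float) -> str:
    """Choose the alternative with the higher score; guess on a tie."""
    if score_a > score_b:
        return "A"
    if score_b > score_a:
        return "B"
    return "guess"

def single_variable(a: Profile, b: Profile, weights: Sequence[float]) -> str:
    """SV: use only the single most valid cue."""
    best = max(range(len(weights)), key=weights.__getitem__)
    return pick(a[best], b[best])

def take_the_best(a: Profile, b: Profile, weights: Sequence[float]) -> str:
    """TTB: search cues from most to least valid; the first cue that discriminates decides."""
    for i in sorted(range(len(weights)), key=weights.__getitem__, reverse=True):
        if a[i] != b[i]:
            return pick(a[i], b[i])
    return "guess"

def equal_weighting(a: Profile, b: Profile, weights: Sequence[float]) -> str:
    """EW: add up the cue values, ignoring their validities."""
    return pick(sum(a), sum(b))

def linear_combination(a: Profile, b: Profile, weights: Sequence[float]) -> str:
    """LC: weight each cue by its (assumed known) validity and add up."""
    return pick(sum(w * x for w, x in zip(weights, a)),
                sum(w * x for w, x in zip(weights, b)))

a, b = [1, 0, 0], [0, 1, 1]  # hypothetical cue profiles of two alternatives
rules = (single_variable, take_the_best, equal_weighting, linear_combination)
for label, w in [("non-compensatory", [0.6, 0.25, 0.15]),
                 ("compensatory", [0.4, 0.35, 0.25])]:
    print(label, is_noncompensatory(w), {r.__name__: r(a, b, w) for r in rules})
```

With the non-compensatory weights, SV, TTB and LC agree, and EW does not. With the compensatory weights, LC sides with EW instead. This is a small-scale echo of the matching between rules and environments described in the text.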

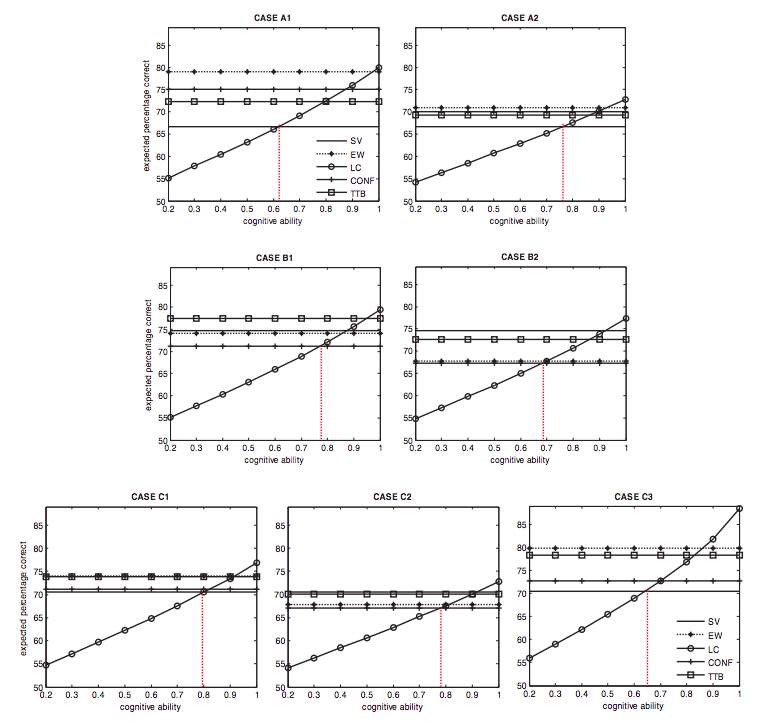

Figure 1. Hogarth & Karelaia (2007). Model performance as a function of cognitive ability.

Five models were compared: SV = single variable; EW = equal weighting; LC = linear combination; CONF = a strategy that looks for confirming evidence; TTB = take-the-best.

Environments: A = equal weighting; B = non-compensatory; C = compensatory. 1 = small levels of redundancy; 2 = large levels of redundancy; 3 = involves negative intercue correlation.

Models were tested in the constructed environments under varying levels of cognitive ability, and their performance was judged by the expected percentage of correct predictions. Only LC's performance varied with cognitive ability.

As shown in Figure 1, heuristics perform better than algebraic linear combination when their characteristics match those of the environment. For instance, irrespective of redundancy, the equal weighting rule performs best in equally weighted environments (cases A1 and A2). SV bases its decision on a single cue whose validity exceeds that of any other cue, and thus performs best in non-compensatory environments when redundancy is high (B2). Hence, the accuracy of a heuristic depends both on whether an appropriate heuristic is chosen for the environment and on whether the cues are correctly ordered by validity.

The mean LC model never performs best compared with the simpler heuristic models. Cognitive ability (ca), a measure of how well LC is used in terms of matching weights and consistency of execution, must be high before LC can compete with the heuristic models. However, errors in the application of heuristics mean that LC can be more accurate even at lower levels of cognitive ability. For example, in an equally weighted environment with low redundancy (see Figure 1, A1), LC makes more accurate judgements than an inappropriate heuristic (SV) even at a relatively low level of cognitive ability (0.6).

Thus, assuming that the environment can be modelled as linear, this study demonstrates that the relative accuracy of deliberative linear combination and of heuristics depends on how well the selected rules suit the structure of the environment. In novel contexts, where one is unable to select an appropriate heuristic, and when cognitive ability is high, rational deliberation may produce more accurate judgements. It should be noted, however, that only three cues were used. Increasing the number of cues to make the problem more complex might well have altered the relative performance of the deliberative and heuristic models. To address this issue, the next section turns to a broader discussion of complexity.

4. Environmental complexity

The real world is indeed far more complex than empirical research would suggest, with many component parts interacting in multiple ways. In particular, many of the studies used to identify biases associated with heuristics require participants to solve relatively simple problems, often requiring a judgement on the basis of a single attribute. For example, Kahneman & Tversky (1973) demonstrated how the availability heuristic, in which one estimates the probability of an event by how easily instances of it can be recalled, could lead to bias. Participants listened to a list of 39 names and were then asked either to judge the relative numbers of female and male names, or to write down as many names as they could recall. When the list comprised 19 famous women and 20 less famous men, participants retrieved more of the famous names than of the less famous ones, presumably because famous names were more available and thus easier to retrieve. Furthermore, 80 of 99 participants judged the gender category containing more famous names to be more frequent, even though that category contained fewer names. In this manner, the availability heuristic has become associated with bias in the literature. Yet this study, in which only one attribute was to be judged, remains far removed from most real-world scenarios, in which multiple attributes could be judged and incorporated into a decision. Studies such as these, in which decisions are straightforward, are valuable in describing decision-making phenomena. However, they do not give a good estimate of how accurate intuitive or rational processes are in more complex contexts.
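The logic of the availability bias in this study can be sketched with a toy calculation. The list composition of 19 famous women and 20 less famous men comes from the study. The recall rates are hypothetical and are not taken from the study, and the function names are illustrative. Judging frequency by the number of names that come to mind favours the famous category whenever fame raises recall enough to outweigh the one-name difference.

```python
def expected_recalled(n_names: int, p_recall: float) -> float:
    """Expected number of names recalled from a category of n_names."""
    return n_names * p_recall

def judged_more_frequent(counts: dict) -> str:
    """Availability heuristic: the category with more instances in mind is judged more frequent."""
    return max(counts, key=counts.get)

N_FAMOUS_WOMEN, N_LESS_FAMOUS_MEN = 19, 20  # list composition reported in the study

true_counts = {"women": N_FAMOUS_WOMEN, "men": N_LESS_FAMOUS_MEN}
# Hypothetical recall rates: famous names assumed to be easier to retrieve.
recalled_if_fame_helps = {"women": expected_recalled(N_FAMOUS_WOMEN, 0.6),
                          "men": expected_recalled(N_LESS_FAMOUS_MEN, 0.35)}
# Hypothetical control: fame assumed to make no difference to recall.
recalled_if_fame_neutral = {"women": expected_recalled(N_FAMOUS_WOMEN, 0.5),
                            "men": expected_recalled(N_LESS_FAMOUS_MEN, 0.5)}

print(judged_more_frequent(true_counts))               # men (20 vs 19)
print(judged_more_frequent(recalled_if_fame_helps))    # women (about 11.4 vs 7.0)
print(judged_more_frequent(recalled_if_fame_neutral))  # men (10.0 vs 9.5)
```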

Admittedly, a strategy that disregards too many parameters limits its flexibility to fit diverse patterns of data, and leads to sub-optimal outcomes. However, recent research suggests that a good predictive strategy must strike a balance between simplicity and complexity. An inverse U-shaped function between computational model complexity and predictive power has been identified (Pitt, Myung, & Zhang, 2002). As a result, the relative accuracy of simple intuition and more flexible rational deliberation depends on the complexity of the environment. Research findings in support of this model repeatedly show that, in complex large worlds, simple heuristics are more accurate than standard statistical methods that have access to the same or more information. For instance, Gigerenzer & Todd (1999) demonstrate that across 20 real-world environments, even prediction strategies using a single variable may outperform multiple linear regression, which applies sophisticated computations to all available cues. These results demonstrate the ‘less-is-more effect’: there seems to be a point beyond which more information or computation is detrimental to accuracy. Complex strategies fit existing data that often include arbitrary noise from the environment, which increases the variance of their outcomes. In comparison, simple heuristic rules ignore much of this information, and are thus less prone to errors in the data. It therefore appears that problems in extremely complex environments may well be better solved by simple solutions.
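The inverse U-shaped relation between model complexity and predictive accuracy can be illustrated with a toy simulation. It assumes a hypothetical noisy environment: a sine curve plus Gaussian noise. Piecewise-constant models with more and more bins stand in for increasingly complex strategies. This is not the method of Pitt, Myung, and Zhang (2002) or of Gigerenzer & Todd (1999). Because each partition refines the previous one, fit to the training sample never gets worse as bins are added. Error on fresh cases from the same environment, however, is lowest at an intermediate level of complexity.

```python
import math
import random
import statistics

def environment(n, rng, noise_sd=0.5):
    """Hypothetical environment: y = sin(2*pi*x) plus Gaussian noise, x uniform on [0, 1)."""
    xs = [rng.random() for _ in range(n)]
    ys = [math.sin(2 * math.pi * x) + rng.gauss(0.0, noise_sd) for x in xs]
    return xs, ys

def fit_bins(xs, ys, k):
    """Piecewise-constant model with k equal-width bins: each bin predicts the mean
    training outcome in that bin; empty bins fall back to the overall mean."""
    overall = statistics.fmean(ys)
    groups = [[] for _ in range(k)]
    for x, y in zip(xs, ys):
        groups[min(int(x * k), k - 1)].append(y)
    means = [statistics.fmean(g) if g else overall for g in groups]
    return lambda x: means[min(int(x * k), k - 1)]

def mse(model, xs, ys):
    """Mean squared prediction error of `model` on the given cases."""
    return statistics.fmean([(model(x) - y) ** 2 for x, y in zip(xs, ys)])

rng = random.Random(0)
train_x, train_y = environment(40, rng)   # small sample the model learns from
test_x, test_y = environment(5000, rng)   # fresh cases from the same environment

# Bin counts are powers of two, so each partition refines the previous one.
complexities = [1, 2, 4, 8, 16, 64, 256]
results = []
for k in complexities:
    model = fit_bins(train_x, train_y, k)
    results.append((k, mse(model, train_x, train_y), mse(model, test_x, test_y)))

for k, train_err, test_err in results:
    print(f"{k:>4} bins: training error {train_err:.3f}, test error {test_err:.3f}")
```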

5. Social environments

As emphasised by the Machiavellian intelligence hypothesis (Whiten & Byrne, 1997), the intrinsic features of social worlds may be largely responsible for producing complexity and unpredictability. It follows that the world in which humans live and make choices is tremendously complex, for it is uniquely social. Intelligent agents must predict and react to the actions of countless other intelligent agents, and must cope with changes over time in those agents, in their relationships and in the system itself. As a result of this augmented complexity, and owing to the rules and constraints set by social contexts, normative models of classical rationality do not necessarily lead to the most accurate or beneficial decisions.

5.1. Game theory

It is impossible to extract people from their social context. Indeed, most important decisions seem to be the product not of isolated individuals but of interactions among members of a group, in which individuals have only partial control over the outcomes. Colman (2003) argues that in collaborative decisions it is difficult to use rational choice theory to define individual rationality in terms of expected utility maximisation, because expected utility is undefined unless one makes assumptions about how other participants will act. Obvious features of human interaction are therefore inadequately explained by such models of rationality.

On the other hand, John Nash’s (1951) advances in game theory and in the analysis of non-cooperative games model conflict and cooperation between rational decision-makers. Game theory involves not only rationality but also the prediction of other players’ chosen strategies. Players are required to solve social dilemmas, such as the Prisoner’s Dilemma Game (PDG), in which individual rationality is self-defeating. In the PDG, each player takes the role of one of two prisoners in a police interview, and may choose either to cooperate with the other or to defect.

| Red (row) / Blue (column) | Cooperate | Defect |
|---|---|---|
| Cooperate | 1, 1 | 5, 0 |
| Defect | 0, 5 | 3, 3 |

Figure 2. Adapted from Axelrod (1980). Outcome matrix for each move of the Prisoner’s Dilemma. The two players are represented by the colours red and blue. Numbers correspond to the number of years in prison for each player. The players may decide either to ‘cooperate’ or to ‘defect’. If both players choose to cooperate with each other, they are both sentenced to only 1 year in prison. If both players defect, they are both sentenced to 3 years in prison. However, if Red cooperates while Blue defects, Red is sentenced to 5 years in prison while Blue is freed, and vice versa.

As shown above, if both players cooperate, outcomes are good for both; if both defect and betray each other, outcomes are worse for both. Defecting is a rational and strongly dominant strategy, because it is in each player’s interest regardless of the other player’s decision. However, if both players choose the dominated cooperative strategy, each enjoys a better outcome than if both choose the dominant strategy of defection. The defect-defect outcome is therefore Pareto-inefficient, in that another outcome would be preferred by both players (Axelrod, 1980). Although rational deliberation might lead one to conclude that the most logical solution is always to defect, this decision is unlikely to produce beneficial outcomes for the player in the long term. The prisoner’s dilemma encapsulates the tension between individual rationality and group rationality. Under individual rationality, each side has an incentive to act selfishly; under group rationality, both sides receive a higher payoff from mutual cooperation than from mutual defection.
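The dominance and Pareto-inefficiency argument can be checked mechanically against the sentences in Figure 2. The sketch below encodes that matrix in years in prison, so lower is better, and tests each claim. The function names are illustrative. Player 0 is Red (rows) and player 1 is Blue (columns), as implied by the caption's example.

```python
from itertools import product

ACTIONS = ("cooperate", "defect")

# Years in prison from Figure 2, keyed by (red_action, blue_action) -> (red_years, blue_years).
YEARS = {
    ("cooperate", "cooperate"): (1, 1),
    ("cooperate", "defect"): (5, 0),
    ("defect", "cooperate"): (0, 5),
    ("defect", "defect"): (3, 3),
}

def years(player: int, own: str, other: str) -> int:
    """Sentence for `player` (0 = Red, 1 = Blue) given their own and the other player's action."""
    outcome = (own, other) if player == 0 else (other, own)
    return YEARS[outcome][player]

def strictly_dominant(player: int, action: str) -> bool:
    """True if `action` gives a shorter sentence than every alternative, whatever the other does."""
    return all(
        years(player, action, other) < years(player, alt, other)
        for other in ACTIONS
        for alt in ACTIONS
        if alt != action
    )

def nash_equilibria():
    """Outcomes in which neither player can shorten their own sentence by deviating alone."""
    return [
        (red, blue)
        for red, blue in product(ACTIONS, ACTIONS)
        if all(years(0, red, blue) <= years(0, alt, blue) for alt in ACTIONS)
        and all(years(1, blue, red) <= years(1, alt, red) for alt in ACTIONS)
    ]

def pareto_better(x, y) -> bool:
    """True if outcome x gives both players strictly shorter sentences than outcome y."""
    return all(a < b for a, b in zip(YEARS[x], YEARS[y]))

print(strictly_dominant(0, "defect"), strictly_dominant(1, "defect"))  # True True
print(nash_equilibria())  # [('defect', 'defect')]
print(pareto_better(("cooperate", "cooperate"), ("defect", "defect")))  # True
```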

The prisoner’s dilemma is an excellent model of important real-world contexts. In international political theory, the iterated prisoner’s dilemma game is often used to demonstrate strategic realism, which holds that in international relations, all states will act in their rational self-interest (Majeski, 1984). Majeski argues that arms races in particular contain the key aspects of prisoner’s dilemma games, in that conflict between participants is present but both parties can obtain a desirable joint outcome by cooperating. Arms races represent a series of prisoner’s dilemma-type encounters; for example, during the arms race of the Cold War, the opposing alliances of NATO and the Warsaw Pact had the choice to arm or disarm (Myrdal, 1977). On either side, disarming while the other side armed would bring about fear of military inferiority. If both sides chose to arm, fear of Mutually Assured Destruction would prevent either side from attacking, but at the high expense of developing nuclear weapons. The most mutually beneficial outcome would be for both sides to disarm, for this would prevent war without cost. However, the individually rational decision was for both sides to arm, and this is what transpired in the Cold War between NATO and the Warsaw Pact (Myrdal, 1977). Rational deliberation, in an attempt to make economically rational decisions, may therefore lead to choices in social environments in which both players suffer (Majeski, 1984).

In this manner, game theory illustrates the complexity of decision-making in a dynamic social environment. It could be argued that social interactions are far too complex to be perfectly replicated in laboratory studies. Perhaps decision-making may be better studied ‘in vivo’, in the real institutional settings in which decisions occur. Further innovative research is required that incorporates aspects of the social environment into decision-making, and that also attempts to mirror the uncertainty of decisions, rather than remaining fixated on risk. The prisoner’s dilemma highlights these conditions of uncertainty: uncertainty not only about decision outcomes, but also about how other intelligent agents will act and react to them. There is, in fact, an elegant counter to such uncertainty: trust. Trust may be considered an intuitive heuristic, a cognitively simple rule which relies on beliefs and prior attitudes and allows humans to deal with complexities that would require unrealistic effort in rational reasoning (Cummings, 2014). Placing trust in another resolves the ambiguity of probabilistic outcomes in the environment, increasing cognitive certainty through the belief that those who are trusted will do what is expected. In unpredictable and uncertain situations, trust allows cooperative choices that depend on others and may lead to better outcomes for all involved, as long as the trustees are reliable. Most humans appear to be inherently trusting, women more so than men (DePaulo, 1994), and game theory suggests a system in which intuitively depending on others might confer an evolutionary advantage. As such, depending on the social context, intuitive trust may lead to better judgements than rational deliberation. Rational deliberation follows the same laws of logic that place individual rationality above group welfare, and may therefore produce suboptimal outcomes for all players involved.

5.2. Accountability and social norms

Although the majority of real-world decisions and outcomes influence, and are influenced by, others in society, research on human judgement and decision-making often neglects social context entirely. This is a serious gap in the literature, for to disregard social context is to disregard the nature of human choice, which is largely shaped by societal expectations, constraints and norms. If the laws of logic and probability do not lead to accurate judgements in real-world social environments, then normative models cannot be used as a criterion against which to compare the accuracy of human reasoning. One must take into account social norms, expectations and goals, which may well necessitate the violation of certain logical laws. Perhaps the greatest social expectation is the accountability that people bear for the judgements they express and the decisions they make. Accountability for one’s conduct is a universal feature of natural decision environments, as it provides a regularity of shared rules and practices that allows social systems to be organised (Tetlock, 1985). This places certain restrictions on future actions, as deviating from social norms may lead to disapproval or punishment, depending on the gravity of the offence.

For instance, Hertwig and Gigerenzer (1999) propose contextual grounds for violating Property α, a basic principle of internal consistency in multi-alternative choice. This property dictates that the relative preference for one option over another is not affected by the inclusion of further alternatives in the choice set. Suppose a tray with only one pastry left is passed to you at a dinner party: you can choose between taking nothing (x) and taking the pastry (y). If you know that one of the guests has not yet had a pastry, you might choose x. However, had the tray contained another pastry (z), you might have chosen to take a pastry (y) instead. This violates Property α and thus defies classical rationality. It is, however, rational in the social context, because it is polite, which in the future might foster cooperation and help avoid conflict (Hertwig & Gigerenzer, 1999). Just as classical rationality is bounded by cognitive capacity and the environment, social rationality is constrained by the norms, procedures and resources of the institutions in which individuals reside. This suggests that the most accurate judgement in a social context is likely to differ from that in a non-social one. Individuals must therefore be examined not in a vacuum, but in relation to the society and organisations to which they belong. Research on heuristics and biases has implicitly assumed that the goal is known. Yet in social contexts, these goals may be born of accountability to others rather than of pure individual rationality. Societal constraints may even necessitate the violation of statistical laws, often leading to ‘irrational’ yet ultimately more beneficial decisions. These norms may indeed be inappropriate, because they ignore the content and context of real judgements and decisions. If so, the biases produced by heuristics need not lead to less accurate predictions, and heuristics cannot be thought of as inherently error-prone.
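The consistency condition in the pastry example can be expressed as a check on a choice function. The sketch assumes that choices are recorded as a mapping from menus to the option chosen from each menu. The condition is stated in its usual contraction form: an option chosen from a menu should still be chosen from any smaller menu that contains it. The function name and encoding are hypothetical.

```python
def property_alpha_violations(choice):
    """Return (larger menu, its choice, smaller menu, its choice) for every breach.

    `choice` maps each menu (a frozenset of options) to the option chosen from it.
    The condition: if an option chosen from a menu is still available in a smaller
    sub-menu, it should also be chosen from that sub-menu.
    """
    violations = []
    for big, chosen in choice.items():
        for small, chosen_small in choice.items():
            if small < big and chosen in small and chosen_small != chosen:
                violations.append((big, chosen, small, chosen_small))
    return violations

# Pastry example: x = take nothing, y = take a pastry, z = a second pastry on the tray.
polite = {frozenset({"x", "y"}): "x", frozenset({"x", "y", "z"}): "y"}
# A chooser who ignores the social context and always takes a pastry.
asocial = {frozenset({"x", "y"}): "y", frozenset({"x", "y", "z"}): "y"}

print(len(property_alpha_violations(polite)), len(property_alpha_violations(asocial)))  # 1 0
```

The polite chooser is flagged as inconsistent by this purely formal test, even though, as argued above, the choice makes sense once the social context is taken into account.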

6. Expertise of the decision-maker

A common solution to the complexity arising from both social and non-social environments is expertise. Expertise shapes the interaction between individuals and particular environments, and may therefore affect the relative accuracy of intuition and rational deliberation. The more expertise an individual gains in navigating complex large worlds, the simpler and more decomposable a problem will appear. Knowledge is organised with different degrees of structure depending on an individual’s experience. Experts are thought to organise knowledge according to highly sophisticated schemas, enabling them to identify and process relevant information quickly (Chi, Glaser, & Rees, 1981). Thus, experience and perceived problem complexity may be viewed as inversely related. Further, since decision-making strategy interacts with problem complexity, one might expect strategy to interact with expertise as well.

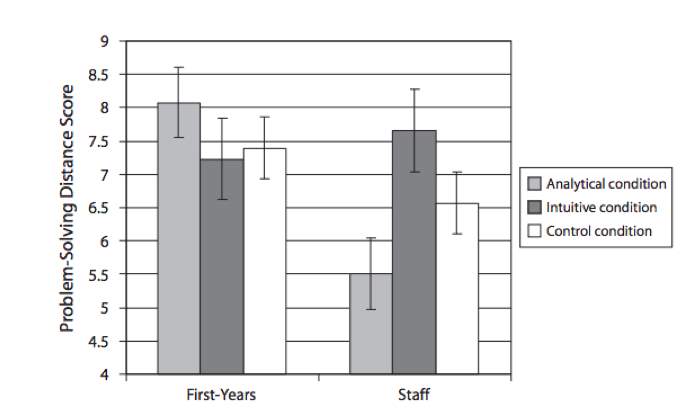

Pretz (2008) examined this hypothesis. Two groups of participants were asked to solve ill-defined, complex practical problems related to college life. One group consisted of inexperienced first-year students. The second consisted of relative ‘experts’: older junior and senior students who worked as staff and had undergone extensive training (120–180 hours) in dealing with everyday problems. Within each group, participants were randomly assigned to one of three conditions: controls received no instructions regarding strategy use, while the others were instructed either to use an analytical strategy or to trust their intuition. Scores were computed as the Mahalanobis distance of each individual’s response profile from the consensus of the experienced students in the sample, with lower distances indicating more accurate responses.
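The Mahalanobis distance measures how far a response profile lies from a reference profile while taking the spread and correlation of the reference ratings into account. The sketch below assumes that the consensus is the mean of the experienced students’ profiles and that the covariance matrix is estimated from those same profiles; the exact estimation details in Pretz (2008) may differ. The helper names (`solve`, `consensus`, `mahalanobis`) are hypothetical, the ratings are invented, and only the standard library is used so the example has no dependencies.

```python
import math


def solve(a, b):
    """Solve the linear system a @ z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(map(float, row)) + [float(b[i])] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("covariance matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    z = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(m[i][j] * z[j] for j in range(i + 1, n))
        z[i] = (m[i][n] - tail) / m[i][i]
    return z


def consensus(profiles):
    """Mean profile and sample covariance matrix of the (hypothetical) expert profiles."""
    n, k = len(profiles), len(profiles[0])
    mean = [sum(p[j] for p in profiles) / n for j in range(k)]
    cov = [[sum((p[i] - mean[i]) * (p[j] - mean[j]) for p in profiles) / (n - 1)
            for j in range(k)]
           for i in range(k)]
    return mean, cov


def mahalanobis(x, mean, cov):
    """Distance of profile x from the consensus, scaled by the experts' covariance."""
    diff = [xi - mi for xi, mi in zip(x, mean)]
    z = solve(cov, diff)  # z = cov^-1 @ diff, computed without forming the inverse
    return math.sqrt(sum(d * zi for d, zi in zip(diff, z)))


# Invented ratings of two response options by five experienced students.
experts = [[5, 2], [4, 3], [5, 3], [4, 2], [5, 2]]
mean, cov = consensus(experts)
for label, profile in [("close to consensus", [5, 2]), ("far from consensus", [1, 5])]:
    print(label, round(mahalanobis(profile, mean, cov), 2))
```

Lower values mean a profile sits closer to the experienced students’ consensus, which is why a lower score is read as a more accurate response.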

Figure 3. Pretz (2008): Problem solving distance of first-years and experienced students (staff) under instruction to employ different strategies. Problem-solving performance depends on an interaction of strategy instruction and level of experience. Higher scores reflect worse problem-solving performance. Error bars represent standard errors.

Unsurprisingly, there was a main effect of experience: as Figure 3 illustrates, undergraduate staff members obtained lower distance scores, reflecting more accurate judgements, than first-year students. Among first-year students, all three strategy conditions performed comparably. Among the more experienced students, however, there was a trend for analysis to yield better performance than the intuition and control conditions, and the analytical strategy was significantly more successful for experienced students than for first-year students. This pattern is reflected in a statistically significant interaction between experience and decision strategy. It suggests that novices gain nothing from analysis and may do best relying on intuition, whereas analysis is more appropriate for more experienced individuals, presumably because they can explicitly perceive the logic and structure of the problem.
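An interaction of this kind means that the effect of strategy instruction differs between experience groups. One simple way to see it is a difference-of-differences contrast on cell means. The means below are hypothetical numbers chosen only to mimic the qualitative pattern described above; they are not values from Pretz (2008), and the contrast alone says nothing about statistical significance, which would require an analysis of variance on the individual scores.

```python
# Hypothetical mean distance scores (lower = better); NOT data from Pretz (2008).
MEANS = {
    ("first_year", "control"): 10.0,
    ("first_year", "intuition"): 10.0,
    ("first_year", "analysis"): 10.0,
    ("staff", "control"): 8.0,
    ("staff", "intuition"): 8.0,
    ("staff", "analysis"): 6.0,
}


def strategy_effect(means, group, strategy, baseline):
    """Simple effect: change in mean distance when `strategy` replaces `baseline` in one group."""
    return means[(group, strategy)] - means[(group, baseline)]


def interaction_contrast(means, group_a, group_b, strategy, baseline):
    """Difference of simple effects; zero means the strategy effect is the same in both groups."""
    return (strategy_effect(means, group_a, strategy, baseline)
            - strategy_effect(means, group_b, strategy, baseline))


print(interaction_contrast(MEANS, "staff", "first_year", "analysis", "intuition"))  # -2.0
```

A negative contrast here means that switching from intuition to analysis reduced distance (improved performance) more for the experienced group than for first-years.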

Note, however, that the experienced students in this study, despite intensive training, were still relatively inexperienced compared with true experts who have accumulated years of experience in their domain (Ericsson, 2006). This may explain why these findings, which imply that experts should use analytical strategies to solve complex problems, seem to contradict empirical evidence that the intuition of expert professionals is highly accurate (Reyna & Lloyd, 2006).

It has been proposed that the intuition of true experts results from the automatisation of previously explicit processes, and is therefore a different kind of intuition from that employed by novices. Experts may acquire gist knowledge that allows cognition to be guided intuitively, while less experienced individuals must rely on explicit analytic reasoning. According to fuzzy-trace theory (Reyna & Lloyd, 2006), experts solve problems intuitively by matching them to a similar gist representation in associative memory, acquired through experience. Thus, as expertise increases, the processing strategy that yields the most accurate results may change.

This is reflected in Baylor’s (2001) U-shaped model of expertise and intuition, which proposes that intuition is used by both novices and experts, whereas intermediate experts use a more analytical strategy. According to this model, novices who gradually gain knowledge in a subject area become intermediate experts who can perceive the structure and logic of problems and who reach the most accurate judgements through analytical strategies. As knowledge eventually becomes part of an automatised expert schema, and as analytical verbatim-based reasoning is supplanted by intuitive gist-based reasoning, they become true experts who can rely on inferential intuition to make decisions and solve problems. This transition from intermediate to true expertise is supported by empirical studies such as that of Reyna and Lloyd (2006), who found that cardiac physicians making decisions within their area of expertise did so in ways consistent with gist-based processing, attending to fewer dimensions of information. This gist-based intuition led the cardiac experts to produce more accurate diagnoses of risk for myocardial infarction, as judged against evidence-based practice guidelines, than non-expert physicians in other fields, who analysed far more patient attributes to form their judgements.
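Matching a new case to a stored gist representation, while ignoring most of its attributes, can be loosely illustrated as nearest-pattern retrieval over a few key cues. This is only a computational analogy under stated assumptions, not a formal specification of fuzzy-trace theory: the memory contents, the cue names (`cue_1`, `cue_2`) and the labels are hypothetical placeholders, and `gist_judgement` is an illustrative helper.

```python
# Hypothetical gist memory: coarse patterns over a few key cues, each paired with
# the judgement learned from experience. Cue names and labels are placeholders.
GIST_MEMORY = [
    ({"cue_1": 1, "cue_2": 1}, "high"),
    ({"cue_1": 1, "cue_2": 0}, "medium"),
    ({"cue_1": 0, "cue_2": 0}, "low"),
]


def gist_judgement(case, memory=GIST_MEMORY):
    """Return the judgement attached to the stored pattern that best matches `case`.

    Only the cues named in the stored patterns are consulted; any other attribute
    of the case is ignored, loosely mirroring processing along fewer dimensions.
    Ties go to the earlier pattern in `memory`, because min() keeps the first minimum.
    """
    def mismatches(pattern):
        return sum(case.get(cue) != value for cue, value in pattern.items())

    _, judgement = min(memory, key=lambda item: mismatches(item[0]))
    return judgement


# Extra attributes (here, three irrelevant ones) do not affect the judgement.
print(gist_judgement({"cue_1": 1, "cue_2": 1, "attr_3": 7, "attr_4": 0, "attr_5": 2}))  # high
```

By contrast, a novice-style analysis would weigh every attribute of the case, which is the verbatim, many-dimension processing attributed above to the non-expert physicians.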

The relative accuracy of intuition and rational deliberation evidently depends on how well decision-makers can employ each strategy, which is determined in part by their level of expertise and depth of knowledge of a particular environment. However, the intermediate and mature intuition regions of Baylor’s proposed curve require further research to be better characterised, as the exact processes by which greater knowledge drives the transition to a different form of intuition remain unspecified. This might be facilitated by efforts to distinguish between the various unconscious processes underlying intuition and to better understand their fundamental cognitive mechanisms. One avenue of research would be to compare how novices and true experts make intuitive decisions, testing them against each other on a series of tasks designed to reveal their underlying processes. This could expand current understanding not only of how different forms of intuition compare with rational deliberation in various environments, but also of how these forms fare against each other.

7. Conclusion

Few scholars doubt the extensive use of intuitive heuristics by decision-makers. Yet a reader of the literature might deem intuition clumsy, inaccurate and prone to miscalculation, for the focus of decision-making research has evidently shifted from accuracy to error. Analytical deliberation has therefore traditionally been considered more powerful and less susceptible to biases. It is perhaps unsurprising that deliberation was long thought to be the ‘default’ processing mode, given that science itself requires a rational progression of thought that can be articulated verbally. This dissertation, however, has challenged the use of the laws of logic as the norm against which intuition ought to be measured in real-world contexts. Although these laws may hold in simple and predictable small worlds, they are fallible models of optimal decision-making in more realistic, complex situations; for instance, violating them in order to remain within societal constraints may lead to ‘irrational’ but fundamentally beneficial decisions. Context thus shapes goals, which may be held at an individual or group level, and determines which outcomes help to reach those goals. In this manner, which decisions count as accurate may be subjective, or dependent on the environment in which they are made. Normative models are therefore inappropriate standards for judging the accuracy of heuristics in real environments, and cannot be used to deem heuristics error-prone.

In examining the relative accuracy of intuition and rational deliberation, this dissertation has exposed a gap in the literature, which currently places emphasis on simple, context-free decisions under risk: situations that are largely irrelevant to real-world environments. This is a troubling oversight, for neither intuition nor rational deliberation is intrinsically accurate or inaccurate; rather, the accuracy of each depends on the context in which it is employed. The body of statistical and empirical research reviewed here indicates how complexity, sociality and expertise affect the relative performance of intuition and rational deliberation, and suggests that different strategies are appropriate for distinct environments. Rational deliberation generally performs better when cognitive ability and motivation are high, when the decision-maker has sufficient experience relevant to the task at hand, and when the problem is simple and well-defined. These conditions are more likely to occur in non-social environments. Intuition, on the other hand, generates more accurate judgements in complex situations, when the individual is either a novice or a true expert, and when appropriate heuristics that match the environmental characteristics are chosen. Therefore, given that everyday social environments are often highly complex and challenging, and that complex problems may be best solved with simple solutions, the most accurate decisions may often be made intuitively. Some of these findings are condensed into Table 2. This table is, however, oversimplified: not all factors have been considered, and environmental factors interact. For instance, social environments tend to be more complex, while expertise makes problems simpler and more decomposable. The precise way in which these interactions affect the relative accuracy of decision strategies requires further investigation.
Further, sociality, expertise and complexity exist as continua, rather than as discrete levels as seen in Table 2; they are shown as such for the sake of simplicity.

| Factor | Level | Intuition | Rational deliberation |
| --- | --- | --- | --- |
| Environmental complexity | Simple | | ✓ |
| | Complex | ✓ | |
| Sociality | Social | ✓ | |
| | Non-social | | ✓ |
| Expertise | Novice | ✓ | |
| | Intermediate | | ✓ |
| | Expert | ✓ | |

Table 2. Intuition vs rational deliberation. A simplified table to show how the accuracy of decision strategy depends on contextual factors such as environmental complexity, sociality and expertise. Check marks indicate the dominant strategy in each situation.

Further research is required in areas where our knowledge remains lacking. In particular, there is a pressing need within the dual-process framework to differentiate between the different kinds of unconscious processes, given the confusion that may arise from using ‘heuristics’ as an umbrella term under which multiple distinct effort-reducing strategies are defined and described. Focus must shift away from identifying further heuristics and associated biases, and towards determining the accuracy and suitability of intuition in real-world contexts, for it is this line of study that bears relevance to practical applications. To this end, individual variation represents an important avenue of further research, as it is likely to have a substantial impact on performance; how it compounds and interacts with the contextual factors already discussed would be of great interest in determining the relative performance of intuitive and deliberative processes. Notably, fluid intelligence may affect both the ability of executive function to manipulate information in rational analyses and the selection of strategies or heuristics appropriate to particular environments. Finally, there would be immense value in mathematically modelling the trade-off between the error in selecting and implementing heuristics and the error in executing rational computations, as environmental and individual factors are varied. Such a model could be used to test whether formal accounts of how environmental characteristics shape the accuracy of judgement strategies can explain the results observed across the literature. In this way, it would help to elucidate the cognitive processes underlying intuition and rational deliberation, and advance understanding of how these strategies interact with the environment.

8. References

Axelrod, R. (1980). Effective Choice in the Prisoner’s Dilemma. Journal of Conflict Resolution, 24(1), 3–25.

Baylor, A. L. (2001). A U-shaped model for the development of intuition by level of expertise. New Ideas in Psychology, 19(3), 237–244.

Chi, M. T. H., Glaser, R., & Rees, E. (1981). Expertise in Problem Solving. Advances in the Psychology of Human Intelligence, 1, 1–75.

Colman, A. M. (2003). Cooperation, psychological game theory, and limitations of rationality in social interaction. Behavioral and Brain Sciences, 26(2), 139–153.

Cummings, L. (2014). The ‘Trust’ Heuristic: Arguments from Authority in Public Health. Health Communication, 29(10), 1043–1056.

DeMiguel, V., Garlappi, L., & Uppal, R. (2009). Optimal Versus Naive Diversification: How Inefficient is the 1/N Portfolio Strategy? The Review of Financial Studies, 22(5), 1915–1953.

DePaulo, B. M. (1994). Spotting Lies: Can Humans Learn to Do Better? Current Directions in Psychological Science, 3(3), 83–86.

Epstein, S. (2010). Demystifying Intuition: What It Is, What It Does, and How It Does It. Psychological Inquiry, 21(4), 295–312.

Ericsson, K. A. (2006). The influence of experience and deliberate practice on the development of superior expert performance. The Cambridge Handbook of Expertise and Expert Performance, 38, 685–705.

Evans, J. S. B. T. (2008). Dual-Processing Accounts of Reasoning, Judgment, and Social Cognition. Annual Review of Psychology, 59(1), 255–278.

Evans, J. S. B. T., & Stanovich, K. E. (2013). Dual-Process Theories of Higher Cognition: Advancing the Debate. Perspectives on Psychological Science, 8(3), 223–241.

Gigerenzer, G., & Brighton, H. (2009). Homo Heuristicus: Why Biased Minds Make Better Inferences. Topics in Cognitive Science, 1(1), 107–143.

Gigerenzer, G., & Gaissmaier, W. (2011). Heuristic Decision Making. Annual Review of Psychology, 62(1), 451–482.

Gigerenzer, G., Hertwig, R., & Pachur, T. (Eds.). (2011). Heuristics: The foundations of adaptive behavior. Oxford; New York: Oxford University Press.

Gigerenzer, G., & Todd, P. M. (1999). Fast and frugal heuristics: The adaptive toolbox. In Simple heuristics that make us smart (pp. 3–34). New York, NY, US: Oxford University Press.

Glöckner, A., & Witteman, C. (2010). Beyond dual-process models: A categorisation of processes underlying intuitive judgement and decision making. Thinking & Reasoning, 16(1), 1–25.

Hertwig, R., & Gigerenzer, G. (1999). The ‘conjunction fallacy’ revisited: how intelligent inferences look like reasoning errors. Journal of Behavioral Decision Making, 12(4), 275–305.

Hogarth, R. M., & Karelaia, N. (2007). Heuristic and linear models of judgment: Matching rules and environments. Psychological Review, 114(3), 733–758.

Kahneman, D. (2003). A perspective on judgment and choice: Mapping bounded rationality. American Psychologist, 58(9), 697–720.

Kahneman, D., & Klein, G. (2009). Conditions for intuitive expertise: A failure to disagree. American Psychologist, 64(6), 515–526.

Kahneman, D., & Tversky, A. (1973). On the psychology of prediction. Psychological Review, 80(4), 237–251.

Koriat, A., Lichtenstein, S., & Fischhoff, B. (1980). Reasons for confidence. Journal of Experimental Psychology: Human Learning and Memory, 6(2), 107–118.

Kuhn, D. (1989). Children and adults as intuitive scientists. Psychological Review, 96(4), 674–689.

Majeski, S. J. (1984). Arms races as iterated prisoner’s dilemma games. Mathematical Social Sciences, 7(3), 253–266.

Myrdal, A. (1977). The Game of Disarmament: How the United States and Russia Run the Arms Race. Manchester University Press.

Nash, J. (1951). Non-Cooperative Games. Annals of Mathematics, 54(2), 286–295.

Pitt, M. A., Myung, I. J., & Zhang, S. (2002). Toward a method of selecting among computational models of cognition. Psychological Review, 109(3), 472–491.

Pretz, J. E. (2008). Intuition versus analysis: Strategy and experience in complex everyday problem solving. Memory & Cognition, 36(3), 554–566.

Reyna, V. F., & Lloyd, F. J. (2006). Physician decision making and cardiac risk: effects of knowledge, risk perception, risk tolerance, and fuzzy processing. Journal of Experimental Psychology: Applied, 12(3), 179–195.

Savage, L. J. (1954). The Foundations of Statistics. New York: Wiley.

Shah, A. K., & Oppenheimer, D. M. (2008). Heuristics made easy: An effort-reduction framework. Psychological Bulletin, 134(2), 207–222.

Simon, H. A. (1955). A Behavioral Model of Rational Choice. The Quarterly Journal of Economics, 69(1), 99–118.

Smith, E. R., & DeCoster, J. (2000). Dual-process models in social and cognitive psychology: Conceptual integration and links to underlying memory systems. Personality and Social Psychology Review, 4(2), 108–131.

Stanovich, K. E., & West, R. F. (2000). Individual differences in reasoning: implications for the rationality debate? The Behavioral and Brain Sciences, 23(5), 645–665; discussion 665–726.

Tetlock, P. E. (1985). Accountability: The neglected social context of judgment and choice. Research in Organizational Behavior, 7, 297–332.

Tversky, A., & Kahneman, D. (1974). Judgment under Uncertainty: Heuristics and Biases. In D. Wendt & C. Vlek (Eds.), Utility, Probability, and Human Decision Making (pp. 141–162). Springer Netherlands.

Whiten, A., & Byrne, R. W. (1997). Machiavellian Intelligence II: Extensions and Evaluations. Cambridge University Press.
