Spatial Audio for Broadcast: Fundamentals of Established Techniques and Recent Developments

Info: 4348 words (17 pages) Dissertation

Published: 17th Dec 2019


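Table of Contents

Abstract / Introduction

1. Spatial Audio – the Fundamentals

1.1 Background

1.2 Stereo – the original “Spatial Audio”

1.3 Spatial Audio for Broadcast – Fundamentals of Established Techniques

2. Binaural Sound

2.1 Binaural Recording

2.2 Recent Developments in Binaural Sound

3. Ambisonics

3.1 Ambisonic Recording

3.2 Recent Improvements in Ambisonics

4. Recent Developments

4.1 Virtual Environments

4.2 Wave Field Synthesis (WFS)

4.3 Vector Base Amplitude Panning (VBAP)

4.4 Improved Standardisation

Conclusions

References & Bibliography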

Abstract / Introduction

Spatial Audio is an area of sound engineering that offers the potential to deliver a full 3D listening experience similar to real-world hearing. This report provides an overview of the fundamentals of Spatial Audio and how they pertain to broadcasting, and explores the major technologies that enable it and recent technical developments in the field. Specifically, it looks at the improvements in Binaural and Ambisonic recording and reproduction and the benefits these provide in delivering a true Spatial Audio experience to listeners.

1. Spatial Audio – the Fundamentals

1.1 Background

The quality of sound reproduction has improved dramatically over the last few decades. Today, distortion and signal-to-noise ratio are no longer significant issues for most listeners, and recording systems can capture dynamic and frequency ranges beyond what the human ear can perceive. The last major obstacle in the pursuit of fully realistic sound is to provide listeners with a true 3D listening experience [10].

Pioneers in the field have long sought to provide listeners with a realistic Spatial Audio experience. Thanks to recent advances in technology, the ultimate realisation of this goal is drawing much closer.

Spatial Audio attempts to record the most salient parts of a sound field and reproduce them in such a way that the listener perceives the spatial characteristics of the original sound scene, i.e. so that the listener feels almost as if they were there [6].

1.2 Stereo – the original “Spatial Audio”

Stereo is the most common way that consumers listen to audio content. It was the first widely adopted attempt at giving listeners some degree of spatialised audio, using two channels typically recorded with a pair of microphones. In most cases stereo is reproduced over two loudspeakers, typically with an aperture of 60°, and offers only a mild illusion of spatial sound, since all sound is reproduced from the front [9].
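The phantom image between the two loudspeakers is normally positioned by amplitude panning. The sketch below illustrates the stereophonic tangent law, assuming loudspeakers at ±30° (the 60° aperture above), positive angles towards the left loudspeaker and a constant-power normalisation; the function name `tangent_law_gains` is hypothetical and this is an illustration rather than the method used in the cited sources.

```python
import math

def tangent_law_gains(source_deg: float, half_aperture_deg: float = 30.0) -> tuple[float, float]:
    """Return (left, right) gains for a phantom source between two loudspeakers.

    Uses the stereophonic tangent law
        tan(source) / tan(half_aperture) = (gL - gR) / (gL + gR)
    with positive angles towards the left loudspeaker, followed by
    constant-power normalisation so that gL**2 + gR**2 == 1.
    """
    if abs(source_deg) > half_aperture_deg:
        raise ValueError("source must lie between the two loudspeakers")
    ratio = math.tan(math.radians(source_deg)) / math.tan(math.radians(half_aperture_deg))
    g_left, g_right = 1.0 + ratio, 1.0 - ratio
    norm = math.hypot(g_left, g_right)
    return g_left / norm, g_right / norm

# A source 15 degrees to the left receives more gain in the left loudspeaker.
print(tangent_law_gains(15.0))
```

A centred source (0°) gets equal gains of 1/√2 in each loudspeaker, while a source at the loudspeaker angle is reproduced by that loudspeaker alone.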

This is partly because, with loudspeakers, each ear receives the signals from both loudspeakers. Two-channel stereo, without any form of special psychoacoustic processing, is limited in its ability to provide all-round sound images and reverberation [10].

This has proven sufficient for most consumers, as they typically face the sound source while listening to music or watching television. Conventional stereo has also proven acceptable on headphones for most consumers, and music producers and device manufacturers alike have been slow to go to the expense of distributing music in multiple formats [6].

Surround sound formats using arrays of loudspeakers, such as Dolby 5.1, sought to improve on this approach, but do not really provide a realistic, immersive 3D sound experience [9]. Furthermore, given the number of loudspeakers required, most consumers have not installed surround sound systems in their homes, contenting themselves with the standard stereo provided by their TV or music systems [10].

With the advent of new media via the internet and improved technology, particularly for immersive experiences in virtual environments, there is a growing demand for a more realistic spatialised audio experience [4].

Much of the theory of how to reproduce spatial audio, and of how it relates to creating a 3D audio experience more realistic than stereo, has existed since the 1970s. Because the applications were unclear and the technology was not adequately developed, the field of Spatial Audio did not advance considerably [10].

With the growing popularity of Virtual Environments (VEs), clearer applications and improved technology over the last four decades, Spatial Audio is now better able to create a 360° experience. Demand for more realistic spatial audio is steadily increasing as consumers look for more immersive experiences in areas such as computer games, live concerts, sports events and business applications like video conferencing.

1.3 Spatial Audio for Broadcast – Fundamentals of Established Techniques

Rumsey (2001) stated that the aim of high-quality recording and reproduction should be to create as believable an illusion of ‘being there’ as possible. He rightly asks what the ultimate goal of enhanced audio is: is it to place “the listener in the concert hall or bring the musicians into the living room”? Spatialised audio holds out the promise of providing such an experience to the listener [10].

Spatial Audio Applications for Broadcast

Broadcast media such as TV, cinema and radio would seem ideally placed to exploit the benefits of 3D sound and bring the listener closer to “being there”. Historically, owing to the limitations of technology, applications such as live concerts, cinema and TV have been better served by multichannel audio systems using loudspeakers positioned around the listening area [10].

A major challenge in providing an enhanced spatialised audio experience over broadcast media, for creative or artistic applications, has been that listeners may not be positioned in a predictable location relative to the sound system, which makes it much more difficult to manage the sound signals they receive [10].

Traditional channel-based reproduction, even in more advanced surround sound configurations (5.1, 7.1, 9.1, 22.2), some of which include loudspeakers for height, is limited by incompatibilities between formats and by its inability to provide a realistic spatial audio experience to the listener. These limitations must be removed to provide a 3D audio listening experience [6].

2. Binaural Sound

Binaural sound seeks to replicate real-world human hearing by delivering, over two channels, the signals that would arrive at the listener’s ears (often referred to as “ear signals”), which carry interaural time, level and spectral difference cues [5].

Unlike stereo, binaural sound relies on reproducing the differences between the signals received by the two ears: the interaural time difference (ITD) and the interaural level difference (ILD) [9]. Stereo, and even surround sound, lack a sense of depth, as the signal reaching each ear is not controlled independently.
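To give a sense of the size of the ITD cue, it can be estimated with Woodworth’s spherical-head approximation, ITD ≈ (a/c)(θ + sin θ), for a distant source at azimuth θ between 0° and 90°. The sketch assumes a head radius a of 8.75 cm and a speed of sound c of 343 m/s; these are typical textbook values rather than figures from the sources cited here.

```python
import math

HEAD_RADIUS_M = 0.0875      # assumed average head radius
SPEED_OF_SOUND_M_S = 343.0  # assumed speed of sound in air at about 20 degrees C

def woodworth_itd(azimuth_deg: float) -> float:
    """Interaural time difference in seconds for a distant source.

    Woodworth's spherical-head approximation, valid for azimuths between
    0 (straight ahead) and 90 degrees (directly to one side).
    """
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta))

for az in (0, 30, 60, 90):
    print(f"{az:2d} deg -> {woodworth_itd(az) * 1e6:.0f} us")
```

Under these assumptions the ITD grows from zero for a frontal source to roughly 0.66 ms for a source directly to one side.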

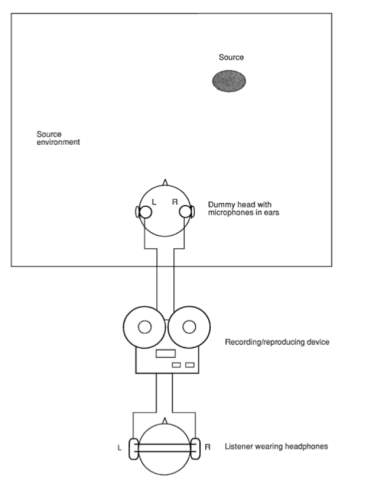

Binaural playback aims to reproduce all of the cues that are needed for accurate spatial perception, as illustrated in Fig 1. While this may seem straightforward in theory, in practice there are a number of very complex challenges to overcome in order to provide the listener with a credible spatial audio experience [10].

To understand this, we must first look at the psychoacoustics of spatial hearing. Begault (1995) defines psychoacoustics as “the relationship between the objective physical characteristics of sound and their subjective interpretation by listeners.” [7]

2.1 Binaural Recording

The basic principle of binaural recording is to place two microphones, one at the position of each ear in the source environment, and to reproduce these signals through headphones to the ears of a listener [10].

The Neumann KU 100 dummy head is commonly used for binaural recording.

For binaural reproduction to work well, the head-related transfer functions (HRTFs) of sound sources in the source environment must be accurately recreated at the listener’s ears. This requires accurately capturing the time and spectral differences between the two ears [10].
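When binaural signals are synthesised rather than recorded, the HRTF is commonly applied in its time-domain form, the head-related impulse response (HRIR), by convolving a mono source with a left and a right HRIR. The sketch below assumes such a pair is already available (for example from a measured database); the toy HRIRs are invented placeholders that encode only a delay and a level difference, not real measurements, and the function names are hypothetical.

```python
def convolve(signal: list[float], impulse_response: list[float]) -> list[float]:
    """Direct-form linear convolution (output length len(x) + len(h) - 1)."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for n, x in enumerate(signal):
        for k, h in enumerate(impulse_response):
            out[n + k] += x * h
    return out

def render_binaural(mono, hrir_left, hrir_right):
    """Filter a mono source with a left/right HRIR pair to obtain ear signals."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)

# Hypothetical toy HRIRs for a source on the listener's left: the right ear
# receives the sound 3 samples later and at half the amplitude (about -6 dB).
hrir_left = [1.0, 0.0, 0.0, 0.0]
hrir_right = [0.0, 0.0, 0.0, 0.5]
left, right = render_binaural([1.0, 0.5, 0.25], hrir_left, hrir_right)
print(left)
print(right)
```

Real HRIRs are typically a few hundred samples long and are usually convolved with FFT-based methods for efficiency; the direct loop is used here only for clarity.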

Challenges with Binaural Sound

Many of the challenges to the successful implementation of 3D sound have been, or are in the process of being, overcome. In his 1991 paper, Begault outlined the principal challenges:

- “Eliminating front to back reversals and intracranially heard sound, and minimising localisation errors

- Reducing the amount of data necessary to represent the most perceptually salient features of HRTF measurements. Everyone’s HRTFs are different and even though there are some common features, historically it was difficult to generalise about which HRTF features should be incorporated in commercial [products] for mass market appeal.

- Resolving conflicts between desired frequency and phase response characteristics and measured HRTFs” [2]

The solution to many of these challenges has been to use generalised (non-individualised) HRTFs at the expense of absolute accuracy. Rumsey (2001) noted that subjects gradually adapt to new HRTFs when listening to binaural sound and notice errors less over time [10].

In recent years, technology has improved to make binaural reproduction much easier to provide, particularly over headphones. Using audio metadata, sound can now be rendered optimally for each playback scenario, e.g. for a non-standard loudspeaker configuration or as a binaural rendering for headphones [6].

However, binaural recordings still tend to sound excessively narrow at low frequencies when replayed over loudspeakers, as there is very little difference between the channels at those frequencies that has any effect at the listener’s ears [10].

2.2 Recent Developments in Binaural Sound

As Rumsey noted, “The potential of Binaural recording has fascinated researchers for years but it had received very little commercial attention until recently.” [10]

This was due in large part to the historical difficulty of reproducing binaural sound across a wide range of loudspeaker and headphone types. It was further compounded by the limited compatibility between headphone and loudspeaker listening, given that most consumers rely on both [10].

Improvements in signal processing for synthesising binaural signals have made the conversion required between headphone and loudspeaker listening much more cost-effective. With improved digital signal processors, it is now cheaper and easier to provide the 3D directional sound cues required for virtual environments [10].

While binaural reproduction works best over headphones, binaural signals can now also be reproduced successfully over a pair of loudspeakers using a crosstalk cancellation filter. A limitation, however, is that the effect is confined to a very small listening area, which may suit a single viewer watching television at home but is unsuited to listening in larger spaces [5].
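The principle of crosstalk cancellation can be illustrated at a single frequency. If H is the 2×2 matrix of acoustic transfer functions from the two loudspeakers to the two ears, the canceller C is chosen so that H·C is close to the identity, meaning each binaural channel reaches only its intended ear. The sketch uses regularised inversion, C = (HᴴH + βI)⁻¹Hᴴ, which is one common design but not necessarily the one used in [5]; the transfer values are invented, the function names are hypothetical, and a real system would repeat this for every frequency bin using measured or modelled H.

```python
def matmul2(a, b):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def crosstalk_canceller(h, beta=0.0):
    """Regularised inverse C = (H^H H + beta*I)^-1 H^H of a 2x2 matrix H.

    h[i][j] is the complex transfer function from loudspeaker j to ear i at
    one frequency. beta > 0 trades cancellation accuracy for lower filter
    gain where H is ill-conditioned.
    """
    h_herm = [[h[j][i].conjugate() for j in range(2)] for i in range(2)]  # H^H
    a = matmul2(h_herm, h)
    a[0][0] += beta
    a[1][1] += beta
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    a_inv = [[a[1][1] / det, -a[0][1] / det],
             [-a[1][0] / det, a[0][0] / det]]
    return matmul2(a_inv, h_herm)

# Invented single-frequency example: unit direct paths, and crosstalk paths
# that are attenuated and phase-shifted.
H = [[1.0 + 0j, 0.3 - 0.2j],
     [0.3 - 0.2j, 1.0 + 0j]]
C = crosstalk_canceller(H)
print(matmul2(H, C))  # approximately the identity matrix when beta == 0
```

Setting beta above zero leaves some residual crosstalk in exchange for smaller filter gains, which is one reason the cancellation only holds within a small region around the intended listening position.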

Head tracking

In natural listening, people use head movements to resolve directional confusion. Such movements have proven very difficult to accommodate in broadcast reproduction, particularly where loudspeakers are used [10].

In binaural broadcasting, however, the visual cues that usually help listeners resolve such confusion are often missing, and their absence has a major impact on the listening experience. One solution to this is head tracking.

Head tracking can be used to modify the reproduced binaural cues so that head movements give rise to realistic changes in the signals sent to the ears, although it is generally practical only in real-time interactive applications [4].
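For headphone rendering, the core of head tracking is to keep a virtual source fixed in the room rather than fixed to the head: the HRTF is chosen for the source direction relative to the current head orientation. The sketch below handles yaw only and assumes azimuths in degrees measured anticlockwise (positive to the left), with the tracker reporting head yaw in the same convention; the function name is hypothetical.

```python
def relative_azimuth(source_az_deg: float, head_yaw_deg: float) -> float:
    """Source azimuth relative to the head, wrapped to the range [-180, 180).

    Both angles are measured anticlockwise from the room's front direction.
    A renderer would look up (or interpolate) the HRTF for this angle.
    """
    return (source_az_deg - head_yaw_deg + 180.0) % 360.0 - 180.0

# A source straight ahead appears 30 degrees to the right (-30) once the
# listener turns their head 30 degrees to the left.
print(relative_azimuth(0.0, 30.0))
```

Updating this angle continuously as the tracker reports new head positions is what produces the realistic changes in the ear signals described above.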

As a result, the problem of missing visual cues has largely been solved in virtual environments, which can combine 3D visual information with audio cues to provide a truly immersive audiovisual experience [4].

Some broadcasters, most notably the BBC, are experimenting with binaural sound for broadcasting, using it in programmes such as Doctor Who, the BBC Proms and The Turning Forest VR fairy tale [14]. This has become possible as more and more people listen to broadcast programmes online and use headphones.

3. Ambisonics

While Binaural recording can provide a spatialised sound experience superior to stereo, it still has a number of challenges and limitations outlined above.

Ambisonics is another technology that can provide a true 3D sound experience, as it captures sound from all directions around the recording point, representing a genuinely three-dimensional sound field. As Michael Gerzon (1985), who pioneered the technique in the 1970s, noted, “Ambisonics is unique in being a total systems approach to reproducing or simulating the spatial sound field in all its dimensions” [3].

It aims to provide a complete approach to directional sound recording, transmission and reproduction [10] (Rumsey, 2001). Unlike other technologies, it can be reproduced in “mono, stereo, horizontal surround-sound, or full ‘periphonic’ reproduction including height information.” In contrast to Quadraphonic sound, which requires four loudspeaker channels, Ambisonics can be encoded in any number of channels [3].

3.1 Ambisonic Recording

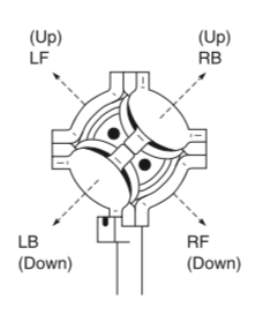

For ambisonic recording, a tetrahedral microphone is typically used, containing four sub-cardioid capsules oriented as shown in the diagram [1].

There are four basic formats for signals in the Ambisonic system:

- A-format for microphone pickup,

- B-format for studio equipment and processing,

- C-format for transmission,

- D-format for decoding and reproduction

The A-format consists of the four signals from the tetrahedral microphone capsules. Because the capsules cannot be perfectly coincident, these signals need to be equalised so that they represent the soundfield at the centre of the tetrahedron [10].

The B-format consists of four signals, called W, X, Y and Z in first-order B-format [1]. W represents the sound pressure, and X, Y and Z the front-back, left-right and up-down velocity components of the sound field, from which a signal for any direction can be derived. This representation is referred to as First Order Ambisonics [10].
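The B-format channels can be written down directly for a single source: W carries the omnidirectional pressure and X, Y and Z the figure-of-eight components along the three axes. The sketch below encodes a mono sample arriving from azimuth θ and elevation φ, assuming the traditional (FuMa) convention in which W is scaled by 1/√2; other conventions, such as AmbiX with SN3D normalisation and W, Y, Z, X channel order, differ. The function name is hypothetical.

```python
import math

def encode_bformat(sample: float, azimuth_deg: float, elevation_deg: float = 0.0):
    """Encode one mono sample as first-order B-format (W, X, Y, Z).

    FuMa-style weighting: W = s / sqrt(2); X points front, Y left, Z up.
    Azimuth is measured anticlockwise from the front.
    """
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    w = sample / math.sqrt(2.0)
    x = sample * math.cos(az) * math.cos(el)
    y = sample * math.sin(az) * math.cos(el)
    z = sample * math.sin(el)
    return w, x, y, z

# A source 90 degrees to the left: the signal appears in W and Y
# (X is zero only up to floating-point rounding).
print(encode_bformat(1.0, 90.0))
```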

C-format contains the signals for stereo-compatible transmission or recording, including the height information [1].

D-format signals are distributed to the loudspeakers and can be adapted to the loudspeaker layout. They are derived from the B- or C-format using a decoder [10].
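As an illustration of how D-format feeds depend on the loudspeaker layout, the sketch below implements a basic first-order decoder for N loudspeakers evenly spaced around a horizontal ring, pᵢ = (1/N)[√2·W + 2(X cos φᵢ + Y sin φᵢ)], where φᵢ is the azimuth of loudspeaker i. It assumes FuMa-weighted B-format (W = s/√2, X = s cos θ, Y = s sin θ for a horizontal source at θ); practical decoders add shelf filters and other weightings, so this is a simplified sketch with a hypothetical function name.

```python
import math

def decode_ring(w: float, x: float, y: float, speaker_azimuths_deg: list[float]) -> list[float]:
    """Basic first-order decode of horizontal B-format to a regular ring.

    Assumes FuMa weighting (W = s / sqrt(2)) and loudspeakers evenly spaced
    around the listener; Z is ignored because the layout has no height.
    """
    n = len(speaker_azimuths_deg)
    feeds = []
    for az_deg in speaker_azimuths_deg:
        az = math.radians(az_deg)
        feeds.append((math.sqrt(2.0) * w + 2.0 * (x * math.cos(az) + y * math.sin(az))) / n)
    return feeds

# Square layout; a unit source at 45 degrees encoded as FuMa B-format.
src = math.radians(45.0)
w, x, y = 1.0 / math.sqrt(2.0), math.cos(src), math.sin(src)
print(decode_ring(w, x, y, [45.0, 135.0, 225.0, 315.0]))
```

The same B-format signal could be decoded to a hexagon or octagon simply by changing the list of loudspeaker azimuths. The negative feed to the loudspeaker opposite the source is characteristic of this basic decode; "in-phase" and max-rE weightings are common alternatives that reduce it.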

3.2 Recent Improvements in Ambisonics

Higher Order Ambisonics (HOA)

As Arteaga (2018) notes, one of the main drawbacks of first order Ambisonics is “the poor directionality” [1]. Higher Order Ambisonics (HOA) adds further channels, improving directional encoding and enlarging the listening area over which reproduction is accurate. These additional components correspond to higher-order spherical harmonics [10].
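The growth in channel count with order follows from the number of spherical harmonics: a full-sphere (periphonic) representation of order N needs (N+1)² channels, while a horizontal-only one needs 2N+1. The sketch below computes both and evaluates the horizontal (circular) harmonics cos(mθ) and sin(mθ) for a source at azimuth θ. The unit weighting is a simplifying assumption, since practical formats such as AmbiX apply SN3D normalisation, and the function names are hypothetical.

```python
import math

def hoa_channel_count(order: int, horizontal_only: bool = False) -> int:
    """Number of Ambisonic channels for a given order."""
    return 2 * order + 1 if horizontal_only else (order + 1) ** 2

def encode_circular_harmonics(azimuth_deg: float, order: int) -> list[float]:
    """Horizontal-only HOA encoding gains [1, cos t, sin t, cos 2t, sin 2t, ...].

    Unnormalised circular harmonics; real formats rescale these channels.
    """
    t = math.radians(azimuth_deg)
    gains = [1.0]
    for m in range(1, order + 1):
        gains += [math.cos(m * t), math.sin(m * t)]
    return gains

for n in (1, 2, 3):
    print(n, hoa_channel_count(n), hoa_channel_count(n, horizontal_only=True))
```

The higher-order terms vary more rapidly with angle, which is what gives HOA its sharper directional resolution compared with first order.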

HOA also provides more coefficient signals, allowing increased spatial selectivity, which enables loudspeaker signals to be rendered with less crosstalk and helps to reduce timbral artefacts. Additionally, HOA can do this independently of the loudspeaker layout. However, HOA is still relatively new and not yet widely used for recording and reproduction [6].

Advantages of Ambisonics

As Arteaga (2018) notes, HOA has a number of further advantages over other technologies. For example, it can provide a very smooth listening experience without the “jumping artifacts” of other spatial audio technologies such as Vector Base Amplitude Panning (VBAP) [1].

Additionally, it can be used with a small number of loudspeakers for playback, unlike competing technologies such as Wave Field Synthesis that require a large number of loudspeakers [1].

Sennheiser’s Ambeo – An Ambisonic Microphone for the Masses

Ambisonics has long been the preserve of high-end audio professionals, but with the release of microphones and software such as the Sennheiser Ambeo, ambisonic recording and reproduction are becoming more accessible. The Ambeo is a tetrahedral microphone that comes with the Ambeo A-B Format Converter software, which converts the microphone’s four-channel A-format output into four-channel Ambisonic B-format audio that users can manipulate in post-production. Many industry watchers regard Sennheiser’s Ambeo VR solution as a high-quality yet reasonably priced way of producing spatialised audio [13].
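At its core, converting A-format to B-format is a sum-and-difference matrix across the four capsule signals. The sketch below shows that textbook matrixing, assuming capsules labelled front-left-up (FLU), front-right-down (FRD), back-left-down (BLD) and back-right-up (BRU) and a 0.5 scale factor. It omits the capsule equalisation described in section 3.1 and is not a description of how the Ambeo converter software itself works; the function name is hypothetical.

```python
def a_to_b(flu: float, frd: float, bld: float, bru: float):
    """Convert one sample of tetrahedral A-format to first-order B-format.

    Plain sum/difference matrixing with a 0.5 scale factor; real converters
    also apply frequency-dependent correction filters.
    """
    w = 0.5 * (flu + frd + bld + bru)   # omnidirectional pressure
    x = 0.5 * (flu + frd - bld - bru)   # front minus back
    y = 0.5 * (flu - frd + bld - bru)   # left minus right
    z = 0.5 * (flu - frd - bld + bru)   # up minus down
    return w, x, y, z

# Equal signals in the two front capsules only: a frontal source.
print(a_to_b(1.0, 1.0, 0.0, 0.0))  # -> (1.0, 1.0, 0.0, 0.0)
```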

4. Recent Developments

4.1 Virtual Environments

The advent of virtual environments has created demands for spatial audio that more traditional technologies have been unable to meet [1] (Arteaga, 2018). By contrast, binaural and ambisonic technologies are well suited to meeting these demands, as outlined in this section.

Rumsey (2001) highlighted that in applications mainly concerned with accurate sound field rendering, the main purpose of the sound system is to support spatial interaction with the virtual world. Such environments require very accurate cues for the movement and location of sounds so that a person can make sense of the scene they are interacting with [10].

More than in traditional media, it is critical that the environment adapts to the listener’s actions. Such applications are ideally suited to binaural or ambisonic technology, or perhaps a combination of the two, to provide precise control over the sound cues presented to the user.

Begault (1995) observes that people will probably be able to work more effectively in virtual environments if their actions and what they see are accompanied by appropriate sounds that emanate from their expected source or location. 3-D sound creates a sense of immersion in a 3-D environment that facilitates this [7].

Ambisonics seems ideally suited to these environments, as it is designed to spread sound evenly throughout the three-dimensional sphere to create a smooth, stable and continuous sphere of sound, even when the audio scene rotates (as, for example, when a gamer wearing a VR headset moves her head around). This is because Ambisonics is not tied to any particular loudspeaker array [4].
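The rotation stability mentioned above comes from the fact that rotating a first-order sound field is a simple linear operation on the B-format channels, independent of any loudspeaker layout. The sketch below rotates about the vertical (yaw) axis, assuming X-front and Y-left axes with angles measured anticlockwise; to compensate a head turn of ψ, a renderer would rotate the scene by −ψ. The function name is hypothetical.

```python
import math

def rotate_yaw(w: float, x: float, y: float, z: float, angle_deg: float):
    """Rotate a first-order B-format sample about the vertical axis.

    A source at azimuth a moves to a + angle_deg; W and Z are unchanged.
    """
    a = math.radians(angle_deg)
    x_rot = x * math.cos(a) - y * math.sin(a)
    y_rot = x * math.sin(a) + y * math.cos(a)
    return w, x_rot, y_rot, z

# A frontal source (X = 1, Y = 0) rotated by 90 degrees ends up on the left.
print(rotate_yaw(0.7071, 1.0, 0.0, 0.0, 90.0))
```

Because the operation is a 2×2 rotation applied to every sample, it is cheap enough to update continuously from a head tracker.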

Ambisonics can deliver an immersive 360° sound experience, in which the listener can hear sounds coming from above and below as well as in front and behind [1]. Another benefit of Ambisonic sound is that it can also be post-processed for binaural playback.

4.2 Wave Field Synthesis (WFS)

Another emerging technology for providing a spatialised audio experience is WFS, which is based on the Huygens–Fresnel principle. This principle states that a wavefront can be regarded as a superposition of elementary spherical waves, and therefore that any wavefront can be synthesised from such waves. In practice, a computer controls a large array of individual loudspeakers and drives each one at exactly the time when the desired virtual wavefront would pass through it. Although WFS can in principle reproduce a sound field exactly, the large number of loudspeakers it requires can make it cost-prohibitive for consumer environments [12].
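The timing principle described above can be sketched for a straight line of loudspeakers: each loudspeaker is driven with a delay equal to its distance from the virtual source divided by the speed of sound. The sketch assumes a virtual point source behind the array, c = 343 m/s and a 1/√r amplitude decay; a proper WFS driving function also includes a frequency-dependent pre-filter and array tapering, omitted here. It also computes a common rule-of-thumb estimate, f ≈ c/(2Δx), of the frequency above which spatial aliasing (the subject of [12]) appears for loudspeaker spacing Δx. All function names are hypothetical.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed

def wfs_delays_and_gains(speaker_x_m, source_xy_m):
    """Per-loudspeaker delay (s) and relative gain for a virtual point source.

    Loudspeakers sit on the line y = 0 at the given x positions; the virtual
    source lies behind the array (y < 0). Delays are relative to the earliest
    loudspeaker; gains fall off as 1/sqrt(distance), normalised to 1.
    """
    sx, sy = source_xy_m
    dists = [math.hypot(x - sx, sy) for x in speaker_x_m]
    d_min = min(dists)
    delays = [(d - d_min) / SPEED_OF_SOUND_M_S for d in dists]
    gains = [math.sqrt(d_min / d) for d in dists]
    return delays, gains

def spatial_aliasing_frequency(spacing_m: float) -> float:
    """Rule-of-thumb frequency (Hz) above which spatial aliasing appears."""
    return SPEED_OF_SOUND_M_S / (2.0 * spacing_m)

# 16 loudspeakers 15 cm apart, virtual source 1 m behind the array centre.
speakers = [i * 0.15 for i in range(16)]
delays, gains = wfs_delays_and_gains(speakers, (1.125, -1.0))
print([round(d * 1000, 2) for d in delays])  # delays in milliseconds
print(round(spatial_aliasing_frequency(0.15)))  # aliasing estimate in Hz
```

The aliasing estimate shows why WFS needs so many loudspeakers: halving the spacing doubles the usable frequency range but also doubles the number of loudspeakers for the same array length.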

4.3 Vector Base Amplitude Panning (VBAP)

An alternative to Ambisonics, VBAP is an amplitude panning method developed by Pulkki “for loudspeaker triplets forming triangles from the listener’s view point. VBAP can be used with any number of loudspeakers in any positioning” [8]. VBAP can offer even greater accuracy than HOA, but its main drawback compared to HOA is that moving virtual sources tend to “jump” from one loudspeaker to another [11].
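In Pulkki’s formulation, the virtual source direction p is expressed as a weighted sum of the unit vectors l₁, l₂, l₃ pointing at the three loudspeakers of the active triplet, and the weights, once normalised, are the loudspeaker gains. The sketch below solves this for a single triplet with Cramer’s rule, assuming directions given as (azimuth, elevation) in degrees and unit-energy normalisation; choosing the correct triplet from a larger layout is omitted, and the function names and example layout are hypothetical.

```python
import math

def unit_vector(azimuth_deg: float, elevation_deg: float) -> tuple[float, float, float]:
    """Cartesian unit vector (x front, y left, z up) for a direction."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (math.cos(el) * math.cos(az), math.cos(el) * math.sin(az), math.sin(el))

def det3(a, b, c) -> float:
    """Determinant of the 3x3 matrix with columns a, b, c (= a . (b x c))."""
    return (a[0] * (b[1] * c[2] - b[2] * c[1])
            - a[1] * (b[0] * c[2] - b[2] * c[0])
            + a[2] * (b[0] * c[1] - b[1] * c[0]))

def vbap_gains(source_dir, speaker_dirs):
    """Gains for one loudspeaker triplet using VBAP.

    Solves p = g1*l1 + g2*l2 + g3*l3 with Cramer's rule, then normalises so
    that g1**2 + g2**2 + g3**2 == 1. A negative gain means the source lies
    outside this triplet and another triplet should be used.
    """
    p = unit_vector(*source_dir)
    l1, l2, l3 = (unit_vector(*d) for d in speaker_dirs)
    d = det3(l1, l2, l3)
    if abs(d) < 1e-9:
        raise ValueError("loudspeaker vectors are (nearly) coplanar")
    g = [det3(p, l2, l3) / d, det3(l1, p, l3) / d, det3(l1, l2, p) / d]
    norm = math.sqrt(sum(v * v for v in g))
    return [v / norm for v in g]

# Hypothetical triplet: front-left, front-right and an elevated centre speaker.
triplet = [(30.0, 0.0), (-30.0, 0.0), (0.0, 45.0)]
print(vbap_gains((0.0, 20.0), triplet))
```

A source moving across the boundary between two triplets switches abruptly from one set of loudspeakers to another, which relates to the “jumping” behaviour noted above.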

4.4 Improved Standardisation

With improving standardisation, such as the ISO/IEC MPEG-H 3D Audio standard, it is becoming easier and more cost-effective to deliver optimised playback on loudspeaker setups as well as headphones [6]. This is important because it should allow spatialised audio to be brought to mass consumer markets more cost-effectively.

Conclusions

Owing to improvements in technology, it’s now becoming possible to realise the promised benefits of spatial audio, heralded by pioneers such as Gerzon. As broadcasters such as the BBC continue to invest in bringing spatialised audio experiences to the general public, it is likely that 3D audio will start to become more mainstream.

Virtual environments are now drawing considerable commercial interest and investment, with products like Facebook’s Oculus becoming popular in consumer markets and heavy investment in VR and AR firms such as Magic Leap. Coupled with its increasing use in training applications such as flight simulation and surgery, this suggests that Spatial Audio is likely to move from a niche application to its rightful place as a mainstream sound technology.

References & Bibliography

[1] Daniel Arteaga. 2015. Introduction to Ambisonics.

[2] Durand Begault. 1991. Challenges to the successful implementation of 3-D sound. AES J. Audio Eng. Soc. 39, (December 1991).

[3] Michael Gerzon. Ambisonics in Multichannel Broadcasting and Video. ResearchGate. Retrieved November 12, 2018 from https://www.researchgate.net/publication/237067898_Ambisonics_in_Multichannel_Broadcasting_and_Video

[4] Francesco Grani, Dan Overholt, Cumhur Erkut, Steven Gelineck, Georgios Triantafyllidis, Rolf Nordahl, and Stefania Serafin. 2015. Spatial Sound and Multimodal Interaction in Immersive Environments. In Proceedings of the Audio Mostly 2015 on Interaction With Sound (AM ’15), 17:1–17:5. DOI:https://doi.org/10.1145/2814895.2814919

[5] H. Hacihabiboglu, E. De Sena, Z. Cvetkovic, J. Johnston, and J. O. Smith III. 2017. Perceptual Spatial Audio Recording, Simulation, and Rendering: An overview of spatial-audio techniques based on psychoacoustics. IEEE Signal Process. Mag. 34, 3 (May 2017), 36–54. DOI:https://doi.org/10.1109/MSP.2017.2666081

[6] J. Herre, J. Hilpert, A. Kuntz, and J. Plogsties. 2015. MPEG-H 3D Audio—The New Standard for Coding of Immersive Spatial Audio. IEEE J. Sel. Top. Signal Process. 9, 5 (August 2015), 770–779. DOI:https://doi.org/10.1109/JSTSP.2015.2411578

[7] Dave Madole and Durand Begault. 1995. 3-D Sound for Virtual Reality and Multimedia. Comput. Music J. 19, 4 (1995), 99. DOI:https://doi.org/10.2307/3680997

[8] Ville Pulkki. 2001. Spatial sound generation and perception by amplitude panning techniques. Helsinki University of Technology, Espoo.

[9] Ville Pulkki. 2002. Microphone Techniques and Directional Quality of Sound Reproduction. (2002), 18.

[10] Francis Rumsey. 2004. Spatial Audio. Focal Press, Oxford. 240 pp. Softback. ISBN: 0-240-51623-0. Org Sound 9, 1 (April 2004), 117. DOI:https://doi.org/10.1017/S1355771804220155

[11] Laurent S R Simon, Hannes Wuethrich, and Norbert Dillier. 2017. Comparison of Higher-Order Ambisonics, Vector- and Distance-Based Amplitude Panning using a hearing device beamformer. DOI:https://doi.org/10.5167/uzh-139661

[12] Sascha Spors and Rudolf Rabenstein. 2006. Spatial Aliasing Artifacts Produced by Linear and Circular Loudspeaker Arrays used for Wave Field Synthesis. (2006), 14.

[13] Rob Tavaglione. Review: Sennheiser Ambeo VR Microphone. ProSoundNetwork.com. Retrieved November 12, 2018 from https://www.prosoundnetwork.com/gear-and-technology/review-sennheiser-ambeo-vr-microphone

[14] Sounding Special: Doctor Who in Binaural Sound – BBC R&D. Retrieved November 12, 2018 from https://www.bbc.co.uk/rd/blog/2017-05-doctor-who-in-binaural-sound
