Using Computer Games to Advance Scientific Research

Info: 5118 words (20 pages) Example Research Project

Published: 20th Feb 2025

Tagged: Research Methodology

Introduction

Scientists now develop a large number of games aimed at non-academic audiences. These games are designed to deliver results that drive real research through user action. They can also show people that sciences many perceive as unattainable or esoteric are in fact understandable.

There are many examples of games designed to advance scientific research. For instance, players of the online game Foldit solve puzzles such as modelling the shapes of proteins (Khatib et al. 2011). Generally, the more scientists know about how certain proteins fold, the better they can design new proteins to combat disease-related proteins and cure diseases. Similarly, physicists have created a game called Quantum Moves, in which players help to solve scientific problems arising in the effort to develop a quantum computer (Ornes 2018). Another example is EyeWire, a game made for “citizen neuroscientists” in which they reconstruct neurons (Kim et al. 2014). These user contributions allow scientists to advance the study of the brain. According to the “citizen science” project aggregator SciStarter, there are currently more than 1300 active games available (SciStarter 2020).

Due to the rapid increase in the number of such games, it is important to consider not only their benefits for science, but also the validity of their use for scientific inferences, as well as their impact on the society involved. In this essay we delve deeper into EyeWire to understand the challenges and opportunities in considering the scientific and ethical validity of the given approach to scientific research.

A brief description of the study and the obtained results

EyeWire software transforms the scientific work of 3D neuron reconstruction into a game of coloring serial electron microscopy images. Overall, the data obtained from this game help scientists understand how the mammalian retina detects motion. The contributions of more than 100 thousand registered users, in cooperation with professionals and digital technology, led to findings such as:

1) There are several types of bipolar cells (BC) with different time lags.

2) Some BC types prefer to contact starburst amacrine cell (SAC) dendrites close to the SAC soma, whereas others prefer to contact them far from the SAC soma.

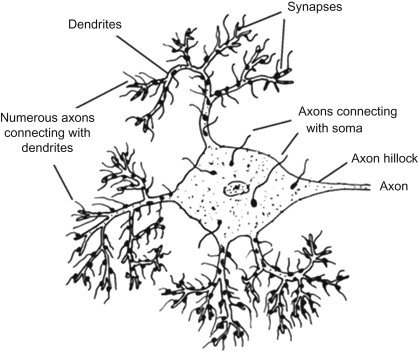

To summarize the novelty of the study, they place a time lag in front of the BC - SAC synapses, whereas the previous model places it after and has many weaknesses. Thus, it was determined that dendrites (see Fig. 1) "prefer" to move outward rather than inward, and scientists were able to understand how SAC dendrites are wired with BC types with different time delays. Notably, it has been shown that reconstruction of neurons can provide surprising insight into neural function. However, it took enormous human effort to obtain all these results.

Fig. 1 Dendritic tree with many synapses (Dharani 2015)

Epistemological validity of the approach

Through EyeWire, scientists working with “citizen neuroscientists” and digital technologies pursue three important epistemic goals: reliability, scalability and connectivity (Watson and Floridi 2016). Below we consider some of the difficulties scientists encountered in achieving these goals when developing the program.

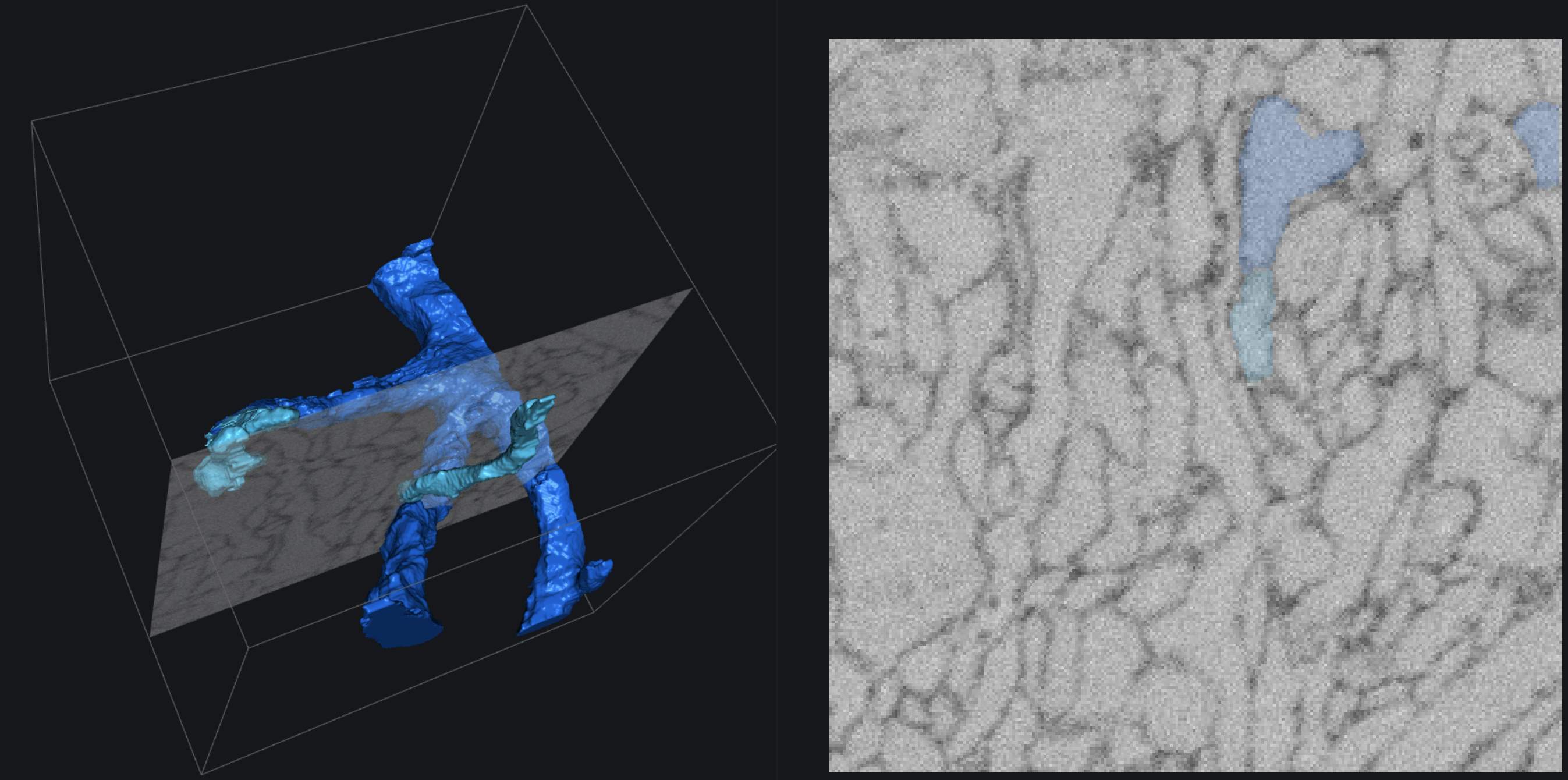

To begin with, EyeWire has two different activities available: coloring the image near the location and choosing a new location to color (see Fig. 2). Deep convolutional neural networks were used to detect boundaries between neurons and produced a graph connecting nearest neighbor voxels. Consequently, the obtained segments that were subsets of individual neurons called supervoxels are presented to users and available for coloring.

Fig. 2 3D and 2D views in the neuron reconstruction game.

One of the most important challenges in SAC reconstruction is that the interiors of many SAC boutons contain irregular darkening for reasons that remain unclear to scientists (perhaps it is due to intracellular structure). As a result, these dark regions can be mistaken for cellular boundaries. Since the extracellular staining procedure was not intended to mark these intracellular structures, it is worth considering whether more sensitive algorithms could provide more accurate segmentation in the future.

On the other hand, artificial intelligence (AI) is also used to color supervoxels. How entertaining it is for a user to “complete a level” and start working on the next cell matters a great deal, because scientists want to make the most of the game. The more the game looks like a complex scientific data-processing program, the less entertaining it is and the smaller the audience involved in the research (Stasieńko 2013). AI-assisted coloring is faster than the previous manual approach, so it speeds up cell reconstruction, and its simplicity helps to attract a wider audience of players.

Another significant requirement for maximizing the reliability of the program is a tutorial for novices. These training sessions allow new users to try filling out a cell that has previously been colored by an expert and to get feedback on the accuracy of their result. This training is necessary because at later “levels” EyeWire does not know the correct coloring. Interestingly, the game treats the solution chosen by the majority of users as the most accurate reconstruction of each cell, and this data is saved in the system. For this reason, in my view, the biggest epistemological challenge is twofold: persuading users to reconstruct each cell as accurately as possible, and implementing in the game a tool that tracks false branches that users build and that are wrongly accepted as correct.

Turning to the first problem, a scoring system has been developed that rewards users for accurate coloring. Under it, players earn high scores if they color the same supervoxels as other players. In effect, the scoring system rewards agreement between users, which tends to coincide with rewarding accuracy. This consensus tends to enhance the accuracy of the whole system and has proven to be more accurate than any individual EyeWirer. Among the difficulties faced by developers, this aspect seems to be the weakest point, since the system can be undermined by unscrupulous users, and even tools for removing erroneous decisions may not work. However, a tool called GrimReaper was implemented for cases in which reconstruction errors slip past the consensus mechanism. For instance, it chops off false branches once they become long enough to be obvious. Scientists also detect errors by visual inspection in the “overview” mode. There is therefore both a need and a clear opportunity to develop additional measures that prevent such branches from growing, or to train GrimReaper to identify false branches while they are still short. As these methods become more efficient, the precision of in-game data collection will increase because users will not waste time extending wrong branches.
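The idea of rewarding agreement rather than known ground truth can be illustrated with a small sketch. It is not EyeWire's actual scoring code: the 50% consensus threshold, the Jaccard overlap used as a score, and the function names are all assumptions made for illustration.

```python
from collections import Counter


def consensus_supervoxels(selections, threshold=0.5):
    """Return the supervoxel ids chosen by more than `threshold` of players.

    `selections` is a list with one set of supervoxel ids per player.
    The threshold value is an assumption, not EyeWire's real setting.
    """
    votes = Counter(sv for chosen in selections for sv in set(chosen))
    n_players = len(selections)
    return {sv for sv, count in votes.items() if count / n_players > threshold}


def agreement_score(player_selection, consensus):
    """Jaccard overlap between one player's selection and the consensus."""
    player = set(player_selection)
    union = player | consensus
    return len(player & consensus) / len(union) if union else 1.0


# Three hypothetical players coloring the same cube.
selections = [{1, 2, 3}, {1, 2, 3, 4}, {1, 2, 7}]
consensus = consensus_supervoxels(selections)
scores = [agreement_score(s, consensus) for s in selections]
print(consensus, scores)
```

In this toy case the consensus is supervoxels 1, 2 and 3, and the player who matches it exactly scores highest. The sketch also makes the weakness visible: a score of this kind measures agreement, so a coordinated group of inaccurate players would be rewarded just as well.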

Another significant difficulty in the reconstruction process arises from very thin SAC dendrites. Users can mistakenly terminate some of them because of limited spatial resolution and deficient staining. However, GrimReaper can also check how the reconstructed cube fits into the entire neuron. This additional context, together with knowledge of the typical appearance of a SAC, can be used to disambiguate difficult cubes.

Apart from the technicalities, one of the most important underlying issues remains. Scientific research has always rested on the reliability of the data obtained by the scientific community. To what extent can we trust results produced by a crowd of amateurs, even after they have been analyzed with machine learning tools?

For instance, we can refer to Condorcet's Jury Theorem, which tells us that a group of independent people facing a binary choice under simple majority rule is more likely to make the correct decision than any particular individual, provided that each member is more likely than not to be right. Many studies have tried to extend this theorem to polychotomous choices such as those made in EyeWire during reconstruction. Mathematicians proved that results can be reliable if all participants are independent (Lam et al. 1996). Within the framework of the game, this condition can be considered fulfilled. However, if a jury is composed of people who each have a less than 0.5 chance of making the right decision, the majority is more likely to be wrong than any single member (Watson & Floridi 2016). All the previously mentioned tools, such as GrimReaper, are applied to secure the condition needed for the program to reach correct conclusions. However, there is no evidence that they are sufficient to keep EyeWire on the right side of the Jury Theorem.
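The dependence of the Jury Theorem on individual competence can be checked numerically. The following is a minimal sketch for the binary case only, assuming independent voters who all share the same probability `p` of being right; the function name is illustrative and has nothing to do with EyeWire's code.

```python
from math import comb


def majority_correct_probability(n_voters, p):
    """Probability that a strict majority of n independent voters is correct.

    Each voter is right with probability p. An odd n is required so that
    ties cannot occur.
    """
    if n_voters % 2 == 0:
        raise ValueError("use an odd number of voters to avoid ties")
    needed = n_voters // 2 + 1
    return sum(
        comb(n_voters, k) * p**k * (1 - p) ** (n_voters - k)
        for k in range(needed, n_voters + 1)
    )


# Competent voters: the majority improves as the jury grows.
print(majority_correct_probability(3, 0.6), majority_correct_probability(101, 0.6))
# Voters below 0.5: the majority gets worse as the jury grows.
print(majority_correct_probability(3, 0.4), majority_correct_probability(101, 0.4))
```

With three voters, a competence of 0.6 gives a majority that is right with probability 0.648, while a competence of 0.4 gives 0.352. Adding more voters pushes the first figure towards 1 and the second towards 0, which is why the average accuracy of players matters more than their number.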

As an additional point, it is worth considering the game not from the perspective of the developers but from that of the users participating in EyeWire. Let us examine the platform through a market analogy. Viewed this way, the research has only one employer (EyeWire itself). Such a system can therefore be called a monopsony and follows the rules and dynamics of one; it behaves like a highly non-competitive market. In this case a minimum wage can increase employment while also increasing wages, whereas in a competitive labor market a rise in the minimum wage decreases employment and increases unemployment. Figure 3 illustrates how a monopsony works.

Fig.3. Minimum wage and monopsony

Average cost (AC) increases as the firm takes on more workers. With a linear labor supply curve, the marginal cost (MC) curve is twice as steep as the AC curve. When the monopsony firm wishes to employ more workers, it has to offer a higher wage to all of them, which means that costs increase. The wage that prevails in the monopsony market is therefore depressed (wage 1). However, a minimum wage set above this level makes the dashed sections of the AC and MC curves irrelevant. Up to the labor supply curve, the marginal cost curve becomes a horizontal line at the minimum wage. Under the new conditions the marginal cost curve and the marginal revenue product curve intersect at N1, so employment increases. Thus, unlike in a competitive market, in a monopsony market a minimum wage can increase employment while increasing wages (Rittenberg & Tregarthen 2013).
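The argument can be made concrete with a worked example. The sketch below assumes linear labor supply and marginal revenue product curves; the parameter values are hypothetical and chosen only to give round numbers, and they are not taken from Rittenberg & Tregarthen or from EyeWire.

```python
def monopsony_outcome(a, b, c, d):
    """Employment and wage chosen by a monopsonist.

    Labor supply (average cost):  w = a + b * L
    Marginal cost of labor:       MC = a + 2 * b * L
    Marginal revenue product:     MRP = c - d * L
    """
    employment = (c - a) / (2 * b + d)   # solve MC = MRP
    wage = a + b * employment            # wage read off the supply curve
    return employment, wage


def outcome_with_minimum_wage(a, b, c, d, w_min):
    """Employment and wage once a minimum wage w_min is imposed."""
    employment, wage = monopsony_outcome(a, b, c, d)
    if w_min <= wage:
        return employment, wage          # minimum wage is not binding
    supplied = (w_min - a) / b           # workers willing to work at w_min
    demanded = (c - w_min) / d           # workers the firm wants at w_min
    return min(supplied, demanded), w_min


# Hypothetical parameters, chosen only to give round numbers.
params = dict(a=2, b=1, c=20, d=1)
print(monopsony_outcome(**params))
print(outcome_with_minimum_wage(**params, w_min=10))
print(outcome_with_minimum_wage(**params, w_min=15))
```

With these numbers the monopsonist hires 6 workers at a wage of 8. A minimum wage of 10 raises employment to 8, but a minimum wage of 15 reduces it to 5, so the effect holds only while the minimum wage stays below the level at which the firm's demand for labor becomes the binding constraint.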

Drawing an analogy with the scientific platform under consideration, the conclusions about the monopsony market suggest that improving the reward system for EyeWire players, in line with the rules that apply to such a market, can be expected to increase the “employment” of volunteers who decide to take part in the game.

In conclusion, it is worth noting that, despite the gaps in the game, the results obtained from its users' data are impressive, as they allowed scientists to advance the study of BC and SAC function. Next we consider the ethical aspects of the program and raise issues that are important when developing and using programs such as EyeWire.

Ethical aspects of the approach

Besides helping to advance scientific research, EyeWire can also spark interest in neuroscience in thousands of people. The spread of scientific games can foster people’s interest in science (Stuchlikova et al. 2017). The wide distribution of EyeWire therefore makes it possible to introduce thousands of people to neurobiology and encourage their interest in biology and neuroscience.

As mentioned earlier, over 100 000 users are registered players of EyeWire, and the research conducted by scientists was supported by tremendous human effort. As a consequence, a big question arises: should scientists reward active users who contribute to the design of the research, and if so, how?

On the one hand, the relationship that arises between such a platform and its users can be described as exploitative. A good example is Amazon Mechanical Turk (MTurk), a crowdsourcing marketplace that uses a distributed workforce to solve problems and optimize business processes through collective intelligence and skills. The benefits it offers are simple: optimized efficiency, increased flexibility and reduced costs. Participants are compensated for their work, but only with small payments (Buhrmester et al. 2016). Overall, remuneration on MTurk is not commensurate with what a company would otherwise spend on the same amount of work.

Therefore, it can be argued that all such crowdsourced platforms are purely utilitarian in a way that disregards human dignity. This conclusion boils down to the question of whether these platforms violate human rights, because human dignity, as argued by J. Tasioulas (2013), is directly connected with interests in generating human rights. Deontological theorists, in turn, claim that a conception of dignity fully defines human rights. From this point of view, crowdsourcing projects must pay special attention to the issues this raises.

On the other hand, W. Soliman and V. Tuunainen, in “Understanding Continued Use of Crowdsourcing Systems: An Interpretive Study” (2015), argue that crowdsourcing platforms can be described as neither utilitarian nor hedonic, although they include components of both. In their view, initial use of these systems is driven by selfish motives such as curiosity and financial reward, while continued use requires additional social motivations such as altruism or publicity.

In addition to the comparisons above, I would like to consider this phenomenon from the point of view of several philosophical schools. From Hayek's perspective, it can be argued that the data obtained can and should be used freely to produce a public good in the form of scientific achievement, since on this view progress will be more effective and lead to more achievements. In my opinion, in our context, where data is obtained directly through a program created by scientists, it makes more sense to adopt Weber's approach, which holds that more powerful technology and increasing knowledge cut both ways (Schroeder 2019). Hence, the data obtained can neither be endorsed as an unquestionably positive development nor critiqued as inherently exploitative. However, it is crucial to strive for the most rational use of data and appropriate treatment of the players. Several suggestions for supporting participants are offered below.

A number of options for supporting users of crowdsourcing projects can be offered. Consider, for example, the question of co-authorship. Countries differ in how they reward undergraduate students who take part in their supervisors' research, and approaches also vary from subject to subject. For example, young chemistry, biology, and physics students involved in research in Russia receive broad support from supervisors and laboratories and are almost always included as co-authors of published scientific articles. In turn, it is considered correct in that scientific community for senior students to include the heads of their laboratories in projects in which the heads have had only minor participation. In many Western countries, by contrast, students often have to work in a laboratory for several years to convince their supervisors to include them as co-authors of collaborative research, while mentors do not always expect to be included as co-authors of graduate students' articles.

EyeWire can draw on other platforms' experience of implementing co-authorship. In particular, Zooniverse, another crowdsourced platform that allows users to take part in cutting-edge research across the humanities and sciences, mobilizes volunteers by offering the opportunity to earn co-authorship through participation. One example is the Dutch schoolteacher Hanny van Arkel, who discovered the astronomical phenomenon called Hanny’s Voorwerp while participating in the Zooniverse project Galaxy Zoo (Hagen 2020). Although this case is exceptional, the platform includes this option, which both gives participants a well-deserved reward and motivates others to join the project.

Although there are various studies on how co-authorship should be organized (Frassl et al. 2018, Grobman 2009), the question has different answers in different societies and depends heavily on the history of science in a particular place. Therefore, in my opinion, EyeWire scientists can develop their own reward system for the most active users. For instance, a player who has helped to reconstruct a set number of cubes or cells could become a co-author of future work. As a side effect, this would spread information about the game and thus increase connectivity.

Another study, conducted by D. Goh et al. (2017), showed that if a crowdsourced game evokes feelings such as happiness, pleasure, excitement or frustration, players are keener to invest time and effort. This research also showed that a more elaborate reward system, such as badges rather than points alone, gives players more cognitive enjoyment. Thus, considering that EyeWire has only a primitive reward system in the form of points, there is great potential to enrich it in order to increase players’ desire to improve their performance.

This raises the following question. Currently, less accurate EyeWirers are given less weight in the vote that chooses the right coloring and therefore receive fewer rating points. Scientifically this is reasonable: it is designed to obtain the most accurate results, which can help platform development and lead to high-quality knowledge contributions (Lou et al. 2011). However, for any possible reward system it is necessary to decide whether a person who submits less accurate solutions, but more of them, has contributed less or more.
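How accuracy weighting changes the outcome of a vote can be shown with a toy example. This is a sketch under stated assumptions: the weights, the player names and the rule "include a supervoxel when the weighted votes in favor exceed half of the total weight" are hypothetical and are not EyeWire's documented procedure.

```python
def weighted_vote(votes, weights):
    """Decide whether a supervoxel is included in the consensus.

    votes:   dict mapping player name -> True (include) or False (exclude)
    weights: dict mapping player name -> non-negative weight, e.g. an
             accuracy estimate from earlier cubes (an assumption here)
    """
    total = sum(weights[player] for player in votes)
    in_favor = sum(weights[player] for player, vote in votes.items() if vote)
    return in_favor > total / 2


votes = {"expert": True, "novice_1": False, "novice_2": False}

# Equal weights reproduce a simple majority: two against one, so excluded.
equal = {"expert": 1.0, "novice_1": 1.0, "novice_2": 1.0}
print(weighted_vote(votes, equal))

# Accuracy-based weights let one reliable player outvote two unreliable ones.
by_accuracy = {"expert": 0.9, "novice_1": 0.3, "novice_2": 0.3}
print(weighted_vote(votes, by_accuracy))
```

The same three votes give opposite decisions under the two weightings, which is the scientific appeal of the scheme. It also shows why the fairness question is not trivial: the two novices did twice the work of the expert on this cube and influenced the result less.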

In summary, when conducting scientific research in this way, it is important to consider all the side effects that arise from the relationship between software and society. These factors, together with the limited capabilities of scientific games, constrain their application.

Implications and limits of the research

Scientific programs such as EyeWire demonstrate outstanding new ways in which digital technology can unite with professionals and “citizen scientists” to form a cohesive sociotechnical system. EyeWire serves not only as a research tool, but also as a source of information on user decisions. Therefore, it can and should be used to upgrade AI based on the received data and the gaps identified in the application.

One of the main goals of the project is to help guide changes to artificial neural networks by understanding real intelligence, so that they can learn to identify similarities between objects they have never encountered before from only a few examples rather than millions. Unfortunately, one game is not enough to achieve this goal. However, EyeWire invites the world to solve puzzles alongside cutting-edge AI to reconstruct about 100 000 neurons and find 1 billion synapses. This knowledge about the cells will give scientists information entirely new to humanity, which could lead to a more detailed understanding of brain function.

Compared with older techniques of brain study, EyeWire offers an opportunity to accelerate research. However, reconstruction still takes considerable time, which could be shortened by attracting more users. For instance, science games can be introduced into school education to help children better understand a related topic. A number of studies show that games can increase student interest and motivation in the classroom (Videnovik 2020, Sanina 2020). Thus, EyeWire can be used as an addition to biology lessons about the nervous system.

Despite a fairly in-depth analysis of the features associated with the game, this study is limited from the point of view of direct understanding of the operation of the program code. From a machine learning perspective, users cannot trust that developers will act in their best interest without a fiduciary relationship (Mittelstadt 2019). When this aspect is superimposed on a given scientific game, it is tantamount to the fact that without the necessary levels of transparency, certain difficulties arise in terms of public confidence in the scientific nature of the data obtained through EyeWire.

Here I argue that it is important to turn to the current trend of audit studies and try to apply it. Doing so would make it possible to increase the transparency of the algorithm used, to make sure that the applied techniques provide valid scientific results and, more importantly, to check whether the reward system in the game evaluates user input correctly and fairly. There are a number of auditing techniques, such as crowdsourced audits, noninvasive user audits and scraping audits. However, these approaches are usually divided into three groups: code, impact and functionality audits.

For instance, a code audit analyzes the code independently of its societal context and so may not capture the interplay between the algorithm and that context. In my opinion, although a code audit is considered the least useful for analyzing platforms in general, for this scientific program it is the one that can provide the most benefit and the most useful feedback for developers. The main idea is that any researcher worried about algorithmic misbehavior can simply obtain a copy of the relevant algorithm (Sandvig et al. 2014). Unfortunately, if the developers of EyeWire consider their algorithms to be valuable intellectual property and are unwilling to share their code, this audit will be impossible. The scheme of this approach is shown in Figure 4.

Adding to this, it is also a helpful practice to create a datasheet for obtained datasets. In this case, when developers use the received data only for themselves, this is not urgent. However, from the point of view of code auditing and data processing during audit, information about the dataset can help an independent researcher to analyze faster and more efficiently in order to mitigate unwanted biases, facilitate greater reproducibility of the results and to ensure transparency and accountability (Gebru et al. 2018).

Fig.4. The scheme of the code audit.

In addition to addressing the technical features of the scientific game of neuron reconstruction, this essay also examined the ethical considerations associated with the development and implementation of this type of platform. This work and the mentioned thoughts related to the raised issues can act as a basis for future developers of similar platforms, as well as for the creators of EyeWire in terms of optimization and consideration in future developments.

Conclusion

In the modern world scientific research advances far faster than it did in the past. Modern scientists can collaborate with digital technologies and non-academic people to achieve their goals. This became possible due to the worldwide Internet and modern artificial intelligence, which has become an irreplaceable part of any research.

First, this paper reviewed various AI mechanisms applied by the developers of EyeWire to enable efficient neuron reconstruction. The validity of the results obtained with these methods was analyzed, and questions were raised about further verification of the scientific accuracy of the output, such as whether the conditions of the Jury Theorem are met.

Second, examples of various similar crowdsourcing platforms were presented and ethical issues related to their impact on society from the point of view of various philosophical schools were considered. Additionally, the system of rewarding the participants in the game was also analyzed and options for improving it in terms of attracting more players were proposed.

Finally, the identified limitations in the analysis as well as the areas of application in which this work and crowdsourcing platforms can be useful were mentioned.

Overall, this essay examined various aspects of the scientific validity of approaches, as well as ethical challenges and opportunities in EyeWire. Despite the enormous usefulness of scientific games, weak points have been discussed and ideas for improvement have been suggested.

References

- Khatib, F., Cooper, S., Tyka, M. D., Xu, K., Makedon, I., Baker, D., et al. (2011). Algorithm discovery by protein folding game players. Proceedings of the National Academy of Sciences of the United States of America, 108(47), 18949–18953.

- Ornes, S. (2018). Science and Culture: Quantum games aim to demystify heady science. Proceedings of the National Academy of Sciences of the United States of America, 115(8): 1667–1669.

- Kim, J. S., Greene, M. J., Zlateski, A., Lee, K., Richardson, M., Turaga, S. C., et al. (2014). Space-time wiring specificity supports direction selectivity in the retina. Nature, 509(7500), 331–336.

- SciStarter. (2020). Project finder. Retrieved from https://scistarter.com/finder

- Dharani, K. (2015). Physiology of the Neuron. The Biology of Thought, 31–52.

- Watson, D., & Floridi, L. (2016). Crowdsourced science: sociotechnical epistemology in the e-research paradigm. Synthese, 195(2), 741–764.

- Stasieńko, J. (2013). Why are they so boring?–the educational context of computer games from a design and a research perspective. Neodidagmata, 35, 47–64.

- Lam, L., & Suen, C. (1996). Majority vote of even and odd experts in a polychotomous choice situation. Theor Decis 41, 13–36.

- Rittenberg, L., & Tregarthen, T. (2013). Principles of Microeconomics. FlatWorld.

- Stuchlikova, L., Benko, P., Kosa, A., Kmotorka, I., Janicek, F., Dosedla, M., & Hrbacek, J. (2017). The role of games in popularization of science and technology. 15th International Conference on Emerging eLearning Technologies and Applications (ICETA).

- Buhrmester, M., Kwang, T., & Gosling, S. D. (2016). Amazon's Mechanical Turk: A new source of inexpensive, yet high-quality data? Methodological issues and strategies in clinical research, 133-139.

- Tasioulas, J. (2013). Human dignity and the foundations of human rights. Understanding human dignity, 294, 291-312.

- Schroeder, R. (2019). Marx, Hayek, and Weber in a Data-Driven World. Society and the Internet: How Networks of Information and Communication are Changing Our Lives.

- Soliman, W., & Tuunainen, V. (2015). Understanding Continued Use of Crowdsourcing Systems: An Interpretive Study. Journal of Theoretical and Applied Electronic Commerce Research, 10(1), 1–18.

- Frassl, M., Hamilton, D., Denfeld, B., de Eyto, E., Hampton, S., Keller, P., et al. (2018). Ten simple rules for collaboratively writing a multi-authored paper. PLOS Computational Biology, 14(11), e1006508.

- Hagen, N. (2020). Scaling up and rolling out through the web: The “platformization” of citizen science and scientific citizenship. Nordic Journal of Science and Technology Studies, 8, 1.

- Goh, D. H.-L., Pe-Than, E. P. P., & Lee, C. S. (2017). Perceptions of virtual reward systems in crowdsourcing games. Computers in Human Behavior, 70, 365–374.

- Grobman, L. (2009). The Student Scholar: (Re)Negotiating Authorship and Authority. College Composition and Communication, 61(1), W175-W196.

- Lou, J., Lim, K. H., Fang, Y., & Peng, Z. (2011). "Drivers Of Knowledge Contribution Quality And Quantity In Online Question And Answering Communities". PACIS 2011 Proceedings, 121.

- Videnovik, M., Trajkovik, V., Kiønig, L.V. et al. (2020). Increasing quality of learning experience using augmented reality educational games. Multimed Tools Appl, 79, 23861–23885.

- Sanina, A., Kutergina, E., & Balashov, A. (2020). The Co-Creative approach to digital simulation games in social science education. Computers & Education, 103813.

- Mittelstadt, B. (2019). Principles alone cannot guarantee ethical AI. Nat Mach Intell, 1, 501–507.

- Sandvig, C., Hamilton, K., Karahalios, K., & Langbort, C. (2014). Auditing Algorithms: Research Methods for Detecting Discrimination on Internet Platforms.

- Gebru, T., Morgenstern, J., Vecchione, B., Vaughan, J.W., Wallach, H., Daumé, H., & Crawford, K. (2018). Datasheets for Datasets. ArXiv, abs/1803.09010.
