OCR Based Vehicular Recognition Using Segmentation (OVRS)

Info: 21009 words (84 pages) Dissertation

Published: 11th Dec 2019

Tagged: Information Systems, Technology

CHAPTER 1

INTRODUCTION

The “OCR based Vehicular Recognition using Segmentation (OVRS)” system uses Optical Character Recognition (OCR) software to convert vehicle registration numbers captured in images into text, for real-time or retrospective matching against law-enforcement and other databases. It is an integrated hardware-software system that reads a vehicle's number plate and outputs the plate number as ASCII text to a data processing system. Such systems are also known as Number Plate Recognition (NPR) and Automatic Number Plate Recognition (ANPR) systems. The recognition is called automatic because the system converts the number plate image into text without human intervention. This reduces manual errors and saves time.

It is estimated that more than half a billion cars and other automobiles are currently driving on roads worldwide. Every vehicle has a registration number as its primary, unique identifier, through which information about the vehicle's owner can be retrieved. This registration (license) number certifies a legal license to take part in public traffic. Every vehicle worldwide should carry its license number, written on a license plate mounted on its body (at least at the back). A vehicle without a properly mounted plate should not run on the roads, and only vehicles with clearly visible, readable plates should be allowed on them.

The license number is the most important piece of identification data a computer system handles when dealing with vehicles. Once this number has been entered into the computer, most vehicle-related tasks become straightforward.

Suppose a company's security manager needs a system that tells, at every moment, where the company's cars are: in the garage, out on the roads, or anywhere else. By registering every drive-out from and drive-in to the garage, the system can always tell which cars are out and which are in. The key requirement of this task is that the system registers vehicle movements automatically. Otherwise the task would require manpower, which would reduce the efficiency of the system.

In short, the OCR based Vehicular Recognition system (OVRS) automates data input, where the data is the registration number of the vehicle. It replaces the task of manually typing the number plate of a passing vehicle into the computer system.

OVRS is an important technique with many applications, especially in Intelligent Transportation Systems (ITS). It is an advanced machine vision technology that identifies vehicles by their number plates without direct human intervention. It is an active area of research because of its many applications in different fields. ITS provides vehicle number data that can be used for follow-up, analysis and monitoring. OVRS is valuable in several settings:

- traffic management;
- toll collection on highways;
- border and customs security;
- premises that need high security, such as Parliament or a Legislative Assembly.

A real-time OVRS system plays a significant role in automatically monitoring traffic rules and enforcing them on public roads. Because every vehicle carries a unique number plate, no external cards, tags or transmitters are needed; the plate alone identifies the vehicle [1].

The OVRS task is usually divided into three steps: number plate extraction, character segmentation and character recognition. First, only the number plate region is detected in the captured input image and passed on to the character segmentation step. Character segmentation then isolates each character. In the character recognition phase, each character is recognized from a selection of its distinguishing features.

Number plate extraction is the most difficult task in the overall system, for three main reasons:

- the plate normally occupies a small portion of the whole image;
- plate formats differ;
- environmental factors interfere.

This step directly affects the accuracy of character segmentation and recognition, and different techniques have been developed for it. OVRS is also known as automatic vehicle identification, car plate recognition, automatic number plate recognition, and optical character recognition (OCR) for cars. Variations in plate types and environments cause challenges in the detection and recognition of license plates. They are summarized as follows.

1) Plate variations:

a) location: plates may exist in different locations of an image;

b) quantity: an image may contain no plate region at all, or sometimes many plate areas;

c) size: plate regions may have different sizes due to the camera distance;

d) color: plate areas may have various character and background colors.

2) Environment variations:

a) Illumination: input images may have different types of illumination, mainly due to the environmental lighting and some vehicle headlights;

b) Background: the image background may contain several patterns similar to plate areas, such as numbers stamped on a vehicle, bumper with vertical patterns, and some textured floors [2].

1.1 OVRS-SYSTEMS

OVRS systems can solve a wide range of tasks. Five types of applications are presented here. Table 1 lists these applications and indicates which of them permit encryption of the number plate strings.

Table 1: Applications of OVRS-systems [3]

| Application | Encrypting of the number plate strings |
| --- | --- |
| Vehicle classification | No. The authentic string is required to identify the vehicle class. |
| Travel time measurements | Yes. Encrypting does not reduce the sample size of observed vehicles. |
| Determination of traffic volume | Yes, but it reduces the quality of results, as this application requires the complete sample of vehicles. If not all vehicles are detected, the detected vehicle volumes must be projected. |
| Analysis of route choice behavior | Yes, but it reduces the quality of results, as this application requires the complete sample of vehicles. If not all vehicles are detected, the detected vehicle volumes must be projected using additional count data. |
| Estimation of O-D matrices from the area code | Yes, if only the second part of the string is encrypted and not the area code. |

An automatic number plate recognition system consists of three stages: number plate extraction, character extraction (segmentation), and character recognition. This review aims to give researchers a systematic survey of existing OVRS research. It categorizes current strategies by the features they use, analyzes the pros and cons of those features, compares them in terms of recognition performance and processing speed, and identifies open problems for future research.

There are many other potential difficulties that the software must be able to handle. These include:

- Poor image resolution, usually because the plate is too far away, but sometimes because a low-quality camera is used.

- Blurry images, particularly motion blur.

- Poor lighting and low contrast due to overexposure, reflection or shadows.

- An object obscuring (part of) the plate, quite often a tow bar, or dirt on the plate.

- A different font, popular for vanity plates (some countries do not allow such plates, which eliminates the problem).

1.2 PROBLEM STATEMENT

OVRS is implemented to detect number plates automatically, without human supervision. Previously, a person had to observe vehicles and record their number plates manually. This project therefore aims to replace the human operator: the computer monitors the car and automatically captures its image. In addition, the system automatically displays the status of the car by comparing the recognized plate number with the database.

1.3 FEATURES

An OVRS system is a mass surveillance method that uses optical character recognition (OCR) on images to read vehicle registration plates. Such systems can use existing closed-circuit television or road-rule enforcement cameras, or cameras designed specifically for the task. They are used by various police forces, for electronic toll collection on pay-per-use roads, and for cataloguing the movements of traffic or individuals.

OVRS can store both the images captured by the cameras and the text read from the number plate. Systems commonly use infrared lighting so that the camera can capture the picture at any time of day. OVRS technology tends to be region-specific, because plates vary from place to place.

Concerns about these systems have centred on privacy fears of government tracking of citizens' movements, misidentification, high error rates, and increased government spending.

1.4 PROJECT BACKGROUND AND WORKING

The massive integration of information technologies into all aspects of modern life created a demand for processing vehicles as conceptual resources in information systems. A standalone information system without any data makes no sense, so there was also a need to transfer information about vehicles between reality and information systems. This can be done by a human agent, or by special intelligent equipment that can recognize vehicles by their number plates in a real environment and reflect them into conceptual resources. As a result, various recognition techniques have been developed. Number plate recognition systems are now used in many traffic and security applications, such as parking, access and border control, or tracking of stolen cars. OCR based vehicular recognition using segmentation is an image processing system that recognizes vehicles by identifying their number plates.

At entrance gates, number plates are used to identify vehicles. When a vehicle enters through an input gate, its number plate is automatically recognized and stored in the database, and black-listed numbers are refused entry. When the vehicle later exits through the gate, its number plate is recognized again and paired with the first reading stored in the database, and the visit is taken into account. OVRS systems can also be used for access control. For example, many companies use this technology to grant access only to vehicles of authorized personnel.

In some countries, OVRS systems are installed at country borders to automatically detect and monitor border crossings. Each vehicle is registered in a central database and compared against a black list of stolen vehicles. In traffic control, vehicles can be directed to different lanes for better congestion control in busy urban areas during rush hours.
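The entry/exit logic described above can be sketched as a small register: a plate read at entry is checked against a black list and stored, and a later read at exit is paired with the stored entry. This is a minimal sketch, assuming plates arrive as already-recognized strings and times as plain numbers; the class and method names (`GateLog`, `enter`, `exit`) are hypothetical and not part of the project's software.

```python
from dataclasses import dataclass, field


@dataclass
class GateLog:
    """Hypothetical entry/exit register for a single gate (illustrative only)."""
    blacklist: set
    inside: dict = field(default_factory=dict)   # plate -> entry time
    visits: list = field(default_factory=list)   # (plate, entry time, exit time)

    def enter(self, plate, time):
        """Return True if the gate may open for an entering vehicle."""
        if plate in self.blacklist:
            return False             # black-listed numbers are refused
        self.inside[plate] = time    # store the first reading
        return True

    def exit(self, plate, time):
        """Pair an exit reading with the stored entry; return the visit or None."""
        entry_time = self.inside.pop(plate, None)
        if entry_time is None:
            return None              # no matching entry was recorded
        visit = (plate, entry_time, time)
        self.visits.append(visit)
        return visit


log = GateLog(blacklist={"XYZ999"})
log.enter("ABC123", 900)        # allowed, entry stored
log.enter("XYZ999", 905)        # refused
print(log.exit("ABC123", 1730))
```

A dictionary keyed by plate string keeps both the black-list check and the entry/exit pairing at constant time per reading.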

1.5 HOW THE VEHICLE REGISTRATION CODE SYSTEM WORKS

Firstly, the vehicle stops at the car gate. The cycle begins when the vehicle passes over the detector, which signals the vehicle number plate system that a vehicle is present.

Secondly, the (infrared) illumination is activated and pictures of the front of the vehicle are taken. The system reads the pixel data of the vehicle image and runs the recognition process.

Thirdly, the system applies a series of algorithms to the vehicle's image: it enhances the image, locates the registration plate, and extracts the characters from the plate.

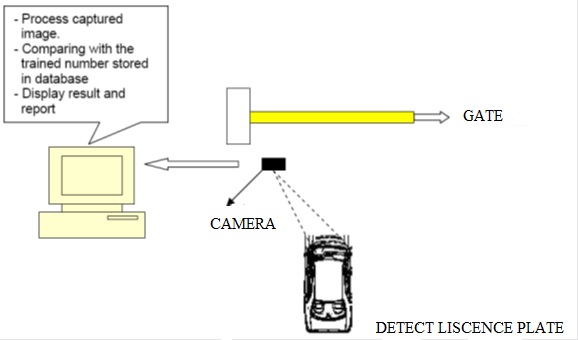

Lastly, the system tries to match the recognized number plate against the car plate database. If access is granted, the gate opens and the vehicle is allowed to pass. The process is illustrated in Fig 1.1.

Fig 1.1 Processing of automatic vehicle plate recognition

The OVRS system also keeps an image of the vehicle, which can be useful for fighting crime. The camera may also focus on the driver's face and save it for security reasons. Some difficulties affect the efficiency and accuracy of OVRS, so it is essential to identify the factors that influence the operation and recognition accuracy of the system. In particular, we need to examine the factors that are not constant.

Below are the non-constant variables that can affect recognition accuracy:

- Speed of the vehicle

- Weather condition

- Type of the Vehicle

- Distance between the vehicle's number plate and the camera

- Type of the plate (rectangular, bent type)

- Vehicle's number plate orientation

- Font type of the plate characters

1.6 WORKING

- The vehicle approaches the secured area and starts the cycle by passing over a magnetic loop detector (the most popular vehicle sensor). The loop detector senses the presence of the car.

Fig 1.2 A car approaching a License Plate Recognition System

- The illumination is now activated and the camera (shown on the left side of the gate) takes pictures of the front or rear plate. The images of the vehicle include the plate.

Fig 1.3 Illumination is activated

- Our project (CNPR System) reads the pixel information of the vehicle image and breaks it into smaller image pieces. These pieces are then analyzed to find the exact location of the number plate in the image. Once the area of the number plate (its x and y coordinates) is found, the plate is parsed to extract its characters. These characters are then passed to the OCR module, which recognizes them and converts them to text.

Fig 1.4 Car crossing the gantry

- Once the car number is obtained, it can be used as the application requires.
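The step in which the located plate is "parsed to extract the characters" can be illustrated with a vertical projection profile, a common segmentation technique. This is a minimal sketch, assuming the plate has already been cropped and binarised with character pixels set to 1. It is not necessarily the method used in this project, and the function names are hypothetical.

```python
def column_profile(plate):
    """Count foreground (1) pixels in each column of a binary plate image."""
    return [sum(row[c] for row in plate) for c in range(len(plate[0]))]


def segment_characters(plate, min_width=1):
    """Split a binary plate into character column ranges [start, end).

    A character is taken to be a maximal run of columns that contain at
    least one foreground pixel; runs narrower than min_width are dropped
    as noise.
    """
    profile = column_profile(plate)
    segments, start = [], None
    for c, count in enumerate(profile + [0]):  # sentinel 0 closes a final run
        if count > 0 and start is None:
            start = c
        elif count == 0 and start is not None:
            if c - start >= min_width:
                segments.append((start, c))
            start = None
    return segments


def crop(plate, segment):
    """Return the sub-image for one character segment."""
    start, end = segment
    return [row[start:end] for row in plate]


plate = [
    [1, 1, 0, 0, 1, 0, 1],
    [1, 0, 0, 0, 1, 0, 1],
    [1, 1, 0, 0, 1, 0, 1],
]
print(segment_characters(plate))  # [(0, 2), (4, 5), (6, 7)]
```

Each cropped segment would then be handed to the OCR stage. Raising `min_width` is one simple way to reject specks of noise between characters.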

1.7 OBJECTIVES

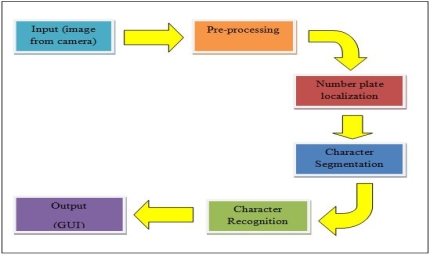

The overall objective of the project is to develop a system that recognizes a vehicle's number plate at the entrance gate of a parking zone. The software could provide a cheaper and faster way of enhancing and evaluating the performance of the recognition system. The system runs on a personal computer and generates a report on the vehicle registration numbers it has captured.

Once the vehicle number plate is captured, the characters are recognized and displayed on the Graphical User Interface (GUI). The system can also serve a security purpose by spotting any wanted or stolen vehicle.

Fig 1.5 Block Diagram of OCR based number plate recognition

1.8 SPECIFICATION:

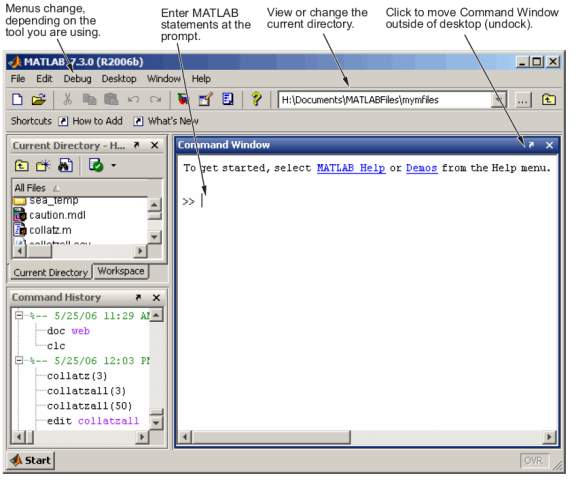

This project is an offline application. Car images are taken with a digital or traditional camera. A program written in MATLAB then identifies the number plate.

Fig 1.6 System Flowchart

1.9 PSNR and MSE Computations

Peak signal-to-noise ratio (PSNR) is commonly used as a measure of the distortion introduced by JPEG compression.

To compute PSNR, we first calculate the mean squared error (MSE) between the original video sequence and the reconstructed sequence. For video sequences, MSE is simply the average squared pixel-by-pixel difference, which measures the noise power introduced.

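For an original image $I$ and a reconstructed image $K$, both of size $m \times n$, the MSE is

$$\mathrm{MSE} = \frac{1}{mn}\sum_{i=0}^{m-1}\sum_{j=0}^{n-1}\left[I(i,j) - K(i,j)\right]^2$$

Given the MSE, PSNR is defined as

$$\mathrm{PSNR} = 10\,\log_{10}\!\left(\frac{\mathrm{MAX}_I^{2}}{\mathrm{MSE}}\right)\ \text{dB}$$

where $\mathrm{MAX}_I$ is the maximum possible pixel value (255 for 8-bit images).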
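The MSE and PSNR computations can be sketched directly from their definitions. This is a minimal sketch for 8-bit grayscale images stored as nested lists. It assumes the standard definition PSNR = 10·log10(MAX²/MSE) with MAX = 255, and it returns infinity for identical images, where the MSE is zero.

```python
import math


def mse(original, reconstructed):
    """Mean squared error between two equally sized grayscale images."""
    m, n = len(original), len(original[0])
    total = 0.0
    for i in range(m):
        for j in range(n):
            diff = original[i][j] - reconstructed[i][j]
            total += diff * diff
    return total / (m * n)


def psnr(original, reconstructed, max_value=255):
    """Peak signal-to-noise ratio in dB; infinite when the images are identical."""
    error = mse(original, reconstructed)
    if error == 0:
        return math.inf
    return 10 * math.log10(max_value ** 2 / error)


a = [[0, 0], [0, 0]]
b = [[0, 0], [0, 255]]   # one of four pixels fully wrong
print(mse(a, b))         # 16256.25
print(psnr(a, b))        # about 6.02 dB (10 * log10(4))
```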

All natural images are analog in nature. The more pixels an image has, the greater its clarity. In digital image processing (DIP), an image is represented as a matrix; in digital signal processing (DSP), which deals with one-dimensional signals, only row matrices are used. Natural images must be sampled and quantized to obtain a digital image. A good image should have 1024 × 1024 pixels, known as 1k × 1k = 1M pixel.

3.2 Basic steps in DIP:

Image acquisition:

Digital image acquisition is the creation of digital images, typically from a physical scene. A digital image may be created directly from a physical scene by a camera or similar device. Alternatively, it may be obtained by a scanner or similar device from another image in an analog medium, such as photographs, photographic film, or printed paper. Many technical images, such as those acquired with tomographic equipment, side-looking radar, or radio telescopes, are actually obtained by complex processing of non-image data.

Image enhancement:

The process of image acquisition often degrades the image, through mechanical problems, out-of-focus blur, motion, inappropriate illumination and noise. The goal of image enhancement is to start from a recorded image and produce the most visually pleasing image.

Image restoration:

The goal of image restoration is to start from a recorded image and produce the best possible estimate of the original image. The goal of enhancement is beauty; the goal of restoration is truth. Success in restoration is usually measured by an error between the original and the estimated image. No known mathematical error function corresponds to human perceptual assessment of error.

Compression:

Image compression is the application of data compression to digital images. Its objective is to reduce redundancy in the image data so that the data can be stored or transmitted in an efficient form.

Morphological processing:

Morphological processing is a collection of DIP techniques based on mathematical morphology. These techniques rely only on the relative ordering of pixel values, not on their numerical values, so they are especially suited to processing binary and grayscale images.

Segmentation:

In analyzing the objects in images, we must be able to distinguish between the objects of interest and “the rest.” This latter group is also referred to as the background. The techniques used to find the objects of interest are usually referred to as segmentation techniques.
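One widely used segmentation technique for separating plate characters from their background is global thresholding. Here the threshold is chosen by Otsu's method, which maximises the between-class variance of the gray-level histogram. This is a minimal sketch on integer gray levels in 0..255. It assumes the objects of interest form the brighter class; for dark characters on a light plate the mask would simply be inverted. The function names are hypothetical, and the technique is named as an illustration, not as the project's confirmed method.

```python
def otsu_threshold(pixels, levels=256):
    """Return the gray level t that maximises between-class variance.

    Pixels with value <= t form one class and pixels > t the other.
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    weight_bg, sum_bg = 0, 0
    for t in range(levels):
        weight_bg += hist[t]              # number of pixels with value <= t
        if weight_bg == 0:
            continue
        weight_fg = total - weight_bg
        if weight_fg == 0:
            break
        sum_bg += t * hist[t]
        mean_bg = sum_bg / weight_bg
        mean_fg = (sum_all - sum_bg) / weight_fg
        between = weight_bg * weight_fg * (mean_bg - mean_fg) ** 2
        if between > best_var:
            best_t, best_var = t, between
    return best_t


def segment(image, levels=256):
    """Binary mask (1 = brighter class) and the Otsu threshold used."""
    flat = [p for row in image for p in row]
    t = otsu_threshold(flat, levels)
    return [[1 if p > t else 0 for p in row] for row in image], t


mask, t = segment([[10, 12, 200], [11, 210, 205]])
print(t, mask)   # 12 [[0, 0, 1], [0, 1, 1]]
```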

Object recognition:

Object recognition is the recognition of familiar objects. In computer vision, it is the task of finding a given object in an image or video sequence. Humans recognize a multitude of objects in images with little effort, even though the image of an object may vary with viewpoint and size/scale, or when the object is translated or rotated. Objects can even be recognized when they are partially obstructed from view.
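For number plates, recognizing a segmented character is often done by comparing it with stored templates. This minimal sketch scores each template by the fraction of pixels on which it agrees with the binary character bitmap and picks the best match. It is a simple stand-in for correlation-based matching, not necessarily the project's OCR method. The tiny 3 × 3 templates and the function names are hypothetical.

```python
def agreement(a, b):
    """Fraction of pixels on which two equally sized binary bitmaps agree."""
    pairs = [(x, y) for ra, rb in zip(a, b) for x, y in zip(ra, rb)]
    return sum(1 for x, y in pairs if x == y) / len(pairs)


def recognize(char_bitmap, templates):
    """Return (label, score) of the template that best matches the bitmap."""
    best = max(templates, key=lambda label: agreement(char_bitmap, templates[label]))
    return best, agreement(char_bitmap, templates[best])


TEMPLATES = {  # hypothetical 3 x 3 glyphs, for illustration only
    "1": [[0, 1, 0], [0, 1, 0], [0, 1, 0]],
    "7": [[1, 1, 1], [0, 0, 1], [0, 0, 1]],
    "L": [[1, 0, 0], [1, 0, 0], [1, 1, 1]],
}

noisy_one = [[0, 1, 0], [0, 1, 0], [1, 1, 0]]   # "1" with one wrong pixel
print(recognize(noisy_one, TEMPLATES))          # ('1', 0.888...)
```

Real systems use larger, size-normalised bitmaps, but the decision rule of choosing the template with the highest similarity score is the same.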

3.3 IMAGE ACQUISITION :

The important steps in image acquisition are:

a. Sensor (converts optical energy to electrical energy)

b. Digitizer (converts the analog signal to a digital signal)

Interesting phenomenon: The human eye can distinguish between various levels of intensity. It does so by changing its overall sensitivity, a phenomenon termed “brightness adaptation.” Subjective brightness (intensity as perceived by the human eye) is a logarithmic function of the light intensity incident on the eye. The visual system cannot operate over such a large range simultaneously, so the eye cannot see very bright and very dim images at the same time.

Light and spectrum: Light is the part of the electromagnetic spectrum that the human eye can see and detect. Light travels at a speed of 3 × 10^8 m/s. Visible light can be split into VIBGYOR, ranging from violet (0.43 µm) to red (0.79 µm). A substance that absorbs all colors appears black; one that absorbs none appears white. A substance that reflects blue appears blue. The color we perceive is the part of the light spectrum that is not absorbed by the object. Light that is devoid of color is termed monochromatic or achromatic light. Its only property is intensity, or gray level.

Properties of light:

a. Radiance: the total amount of energy that flows from a light source is termed radiance. It is measured in watts. Examples of sources are the sun, a bulb, etc.

b. Luminance: the amount of energy that an observer perceives from the source is termed luminance. It is measured in lumens. An example is viewing the sun through dark glasses.

c. Brightness: brightness is an attribute of visual perception in which a source appears to emit a given amount of light. It has no units because it is practically impossible to measure.

In image sensing, light energy is converted into voltage. Image acquisition can be done using three principal sensor arrangements: single sensor, line (strip) sensor and array sensor.

If something changes more than seventeen times per second, that is, if its frequency is greater than 17 Hz, we cannot distinguish the changes.

SIMPLE IMAGE FORMATION MODEL :

Images are represented as 2-D functions $f(x,y)$. The value of $f(x,y)$ at any point $(x,y)$ is a positive quantity:

$$0 < f(x,y) < \infty$$

$f(x,y)$ depends on two parameters:

a. Amount of light incident on the scene (illumination) = $i(x,y)$

b. Amount of light reflected from the scene (reflectance) = $r(x,y)$

$$f(x,y) = i(x,y)\,r(x,y)$$

$$0 < i(x,y) < \infty$$

$$0 < r(x,y) < 1$$

In the last inequality, 0 represents total absorption and no reflection (black), and 1 represents total reflection and no absorption (white). Bounding each factor gives

$$i_{\min} < i(x,y) < i_{\max}, \qquad r_{\min} < r(x,y) < r_{\max}$$

$$i_{\min}\,r_{\min} < f(x,y) < i_{\max}\,r_{\max}$$

so the gray level $l = f(x,y)$ of a monochrome image satisfies

$$L_{\min} < l < L_{\max}, \qquad L_{\min} = i_{\min}\,r_{\min}, \quad L_{\max} = i_{\max}\,r_{\max}$$

For monochromatic light, $L$ denotes the number of grey levels. If $L = 30$, then $n = 5$ bits are needed, since $2^5 = 32 > 30$.

The interval $(L_{\min}, L_{\max})$ is termed the gray scale. It is shifted to $(0, L-1)$, where $l = 0$ is black and $l = L-1$ is white. All intermediate values are shades of grey.

All real-world signals are analog, but we need a digital image. We therefore acquire an analog image and digitize it, which requires an A/D converter. To convert natural images into digital form, we must digitize both the coordinates and the amplitudes. Digitizing the coordinate values is termed sampling; digitizing the amplitude values is termed quantization. The quality of a digital image therefore depends on the number of samples (sampling) and the number of grey levels (quantization): the more samples, the higher the quality.

3.3.1 REPRESENTING DIGITAL IMAGES:

Sampling and quantizing an image produces an array of numbers indexed by x and y coordinates, which is simply a matrix of m rows and n columns. The numbers of rows and columns depend on the number of samples. The number of gray levels depends on the number of bits used to represent each pixel. For an image of m rows and n columns, the total number of pixels = m × n.

If each pixel is represented using k bits, the total memory (in bits) required to store the image = m × n × k.

A 1M pixel image has 1024 rows and 1024 columns. If each pixel is represented using 8 bits (1 byte), the total memory required to store the image = 1k × 1k × 1 byte = 1 MB.
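The sampling, quantization and storage relations above can be sketched in a few lines. This is a minimal sketch that assumes intensities normalised to [0.0, 1.0] and a uniform quantizer with L = 2^k levels. The function names are hypothetical.

```python
def sample(image, step):
    """Spatial sampling: keep every step-th row and every step-th column."""
    return [row[::step] for row in image[::step]]


def quantize(value, k):
    """Map an intensity in [0.0, 1.0] to one of L = 2**k gray levels (0 .. L-1)."""
    levels = 2 ** k
    return min(int(value * levels), levels - 1)


def bits_needed(num_levels):
    """Smallest n with 2**n >= num_levels, e.g. 5 bits for L = 30."""
    return max(1, (num_levels - 1).bit_length())


def storage_bits(m, n, k):
    """Memory for an m x n image with k bits per pixel: m * n * k bits."""
    return m * n * k


print(quantize(1.0, 8))                       # 255, the white level for k = 8
print(bits_needed(30), bits_needed(256))      # 5 8
print(storage_bits(1024, 1024, 8) // 8)       # 1048576 bytes = 1 MB
```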

RESOLUTION :

Resolution is classified into two types: spatial resolution and gray level resolution.

Spatial Resolution :

It is the smallest discernible detail in an image.

Gray Level Resolution :

It is the smallest discernible change in gray level. The number of gray levels should be a power of two; the most commonly used number of gray levels is 256:

$$L = 2^k = 256 \quad\Rightarrow\quad k = 8 \text{ bits per pixel}$$

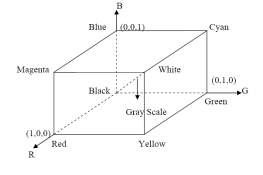

RGB color space

An RGB image is made of color pixels in an M × N × 3 array. The color space is usually represented graphically as the RGB color cube. The cube's vertices are the primary colors (red, green and blue) and the secondary colors (cyan, magenta and yellow). The schematic of the RGB cube, illustrating the primary and secondary colors at each vertex, is shown in Fig 3.1.

Fig 3.1 RGB schematic block diagram

3.4 Conversion of RGB Images to Gray Images

Signal to noise: For many applications of image processing, colour information doesn’t help us identify important edges or other features. There are exceptions. If there is an edge (a step change in pixel value) in hue that is hard to detect in a gray scale image, or if we need to identify objects of known hue (orange fruit in front of green leaves), then colour information could be useful. If we don’t need colour, then we can consider it noise. At first it’s a bit counterintuitive to “think” in gray scale, but you get used to it.

Complexity of the code: If you want to find edges based on luminance AND chrominance, you’ve got more work ahead of you. That additional work (and additional debugging, additional pain in supporting the software, etc.) is hard to justify if the additional colour information isn’t helpful for applications of interest.

For learning image processing, it’s better to understand gray scale processing first and understand how it applies to multichannel processing rather than starting with full colour imaging and missing all the important insights that can (and should) be learned from single channel processing.

Speed: With modern computers, and with parallel programming, it’s possible to perform simple pixel-by-pixel processing of a megapixel image in milliseconds. Facial recognition, OCR, content-aware resizing, mean shift segmentation, and other tasks can take much longer than that. Whatever processing time is required to manipulate the image or squeeze some useful data from it, most customers/users want it to go faster. If we make the hand-wavy assumption that processing a three-channel colour image takes three times as long as processing a gray scale image–or maybe four times as long, since we may create a separate luminance channel–then that’s not a big deal if we’re processing video images on the fly and each frame can be processed in less than 1/30th or 1/25th of a second. But if we’re analyzing thousands of images from a database, it’s great if we can save ourselves processing time by resizing images, analyzing only portions of images, and/or eliminating colour channels we don’t need.
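The section does not state a conversion formula. A common choice is a weighted sum of the three channels using the ITU-R BT.601 luma weights, the same weights MATLAB's `rgb2gray` documents (0.2989 R + 0.5870 G + 0.1140 B). This minimal sketch assumes an image stored as rows of (R, G, B) tuples in 0..255 and rounds to 8-bit gray levels. The function name is hypothetical.

```python
def rgb_to_gray(image):
    """Convert rows of (R, G, B) tuples to 8-bit luminance values."""
    return [[round(0.2989 * r + 0.5870 * g + 0.1140 * b) for (r, g, b) in row]
            for row in image]


pixels = [[(255, 0, 0), (255, 255, 255)],
          [(0, 0, 0), (0, 255, 0)]]
print(rgb_to_gray(pixels))   # [[76, 255], [0, 150]]
```

Green receives the largest weight because the eye is most sensitive to it. This is why pure green maps to a brighter gray than pure red or blue.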

3.5 Image Deblurring

To better understand the image deblurring methods applied in this project, we studied the mechanisms behind some naïve approaches to image deblurring. The investigation covered three stages of deblurring techniques of increasing sophistication, building an understanding of the essential methods and weaknesses of image deblurring. For the remainder of this report, unless otherwise stated, all images are assumed to be grayscale.

3.5.1 General Method of Image Deblurring

The general method of image deblurring is a direct method for obtaining a blurred image from the original image, or a deblurred image from the blurred one.

The most basic method of deblurring is deconvolution. We first convolve the original image with the point spread function (PSF) to blur it, and then add noise. The resulting blurred image is converted into the frequency domain using the fast Fourier transform (FFT). Working in the frequency domain is convenient because convolution in the spatial domain becomes multiplication there:

$$G(u,v) = H(u,v)\,F(u,v) + N(u,v)$$

Here $F$, $H$, $N$ and $G$ are the transforms of the original image, the PSF, the noise and the blurred image. Deconvolution therefore reduces to dividing by the transfer function, $\hat{F}(u,v) = G(u,v)/H(u,v)$, which gives an estimate of the original image in the frequency domain. Applying the inverse FFT to $\hat{F}$ then yields the restored image.
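The inverse-filtering idea can be shown on a 1-D signal, which behaves exactly like one row of an image. This is a minimal sketch, assuming circular (periodic) convolution and a PSF whose transform has no zeros. It uses a naive O(n²) DFT instead of an FFT to stay dependency-free, and no noise is added, so the division recovers the signal almost exactly. The function names and the example PSF are hypothetical.

```python
import cmath


def dft(x):
    """Naive discrete Fourier transform (O(n^2); fine for short signals)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(X):
    """Inverse of dft()."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def circular_convolve(x, h):
    """Blur: y[t] = sum over s of x[s] * h[(t - s) mod n]."""
    n = len(x)
    return [sum(x[s] * h[(t - s) % n] for s in range(n)) for t in range(n)]


def inverse_filter(blurred, psf):
    """Deconvolve by dividing spectra, F = G / H (assumes H has no zeros)."""
    G, H = dft(blurred), dft(psf)
    return [v.real for v in idft([g / h for g, h in zip(G, H)])]


signal = [0, 0, 10, 10, 10, 0, 0, 0]
psf = [0.6, 0.2, 0, 0, 0, 0, 0, 0.2]      # symmetric 3-tap blur, wrapped around
blurred = circular_convolve(signal, psf)
restored = inverse_filter(blurred, psf)
print([round(v, 6) for v in restored])
```

With noise added, the term N/H is amplified wherever |H| is small. This is the main weakness of plain inverse filtering.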

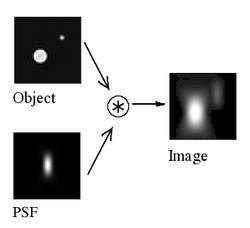

3.5.2 Point Spread Function

The point spread function (PSF) describes the response of an imaging system to a point source or point object. A more general term for the PSF is the system's impulse response; the PSF is the impulse response of a focused optical system. In many contexts, the PSF can be thought of as the extended blob in an image that represents an unresolved object. In functional terms, it is the spatial-domain version of the transfer function of the imaging system. It is a useful concept in Fourier optics, astronomical imaging, microscopy and other imaging techniques such as 3D microscopy (as in confocal laser scanning microscopy). The degree of spreading (blurring) of the point object is a measure of the quality of an imaging system.

In non-coherent imaging systems, such as fluorescent microscopes, telescopes or optical microscopes, the image formation process is linear in power and described by linear system theory. This means that when two objects A and B are imaged simultaneously, the result equals the sum of the independently imaged objects. In other words, the imaging of A is unaffected by the imaging of B and vice versa, owing to the non-interacting property of photons. The image of a complex object can then be seen as the convolution of the true object and the PSF. However, when the detected light is coherent, image formation is linear in the complex field. Recording the intensity image can then lead to cancellations or other non-linear effects.

Fig 3.2 Convolution of object with PSF

Fig 3.3 Spherical aberration (disk)

A point source as imaged by a system with negative (top), zero (center) and positive (bottom) spherical aberration. Images on the left are defocused toward the inside, images on the right toward the outside. In MATLAB, “h = fspecial('type', parameters)” creates a filter of the specified type, with additional modifying parameters particular to the type of filter chosen. If these parameters are omitted, fspecial uses default values.
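The statement that the image of an object is its convolution with the PSF can be checked directly: convolving a single bright point with a PSF reproduces the PSF itself, which is why the PSF is called the impulse response. This is a minimal sketch, assuming zero padding at the borders and a simple uniform-disk PSF as a defocus model. Unlike MATLAB's `fspecial('disk', radius)`, it does not anti-alias the disk boundary, so it is only an approximation. The function names are hypothetical.

```python
def disk_psf(radius):
    """Uniform disk PSF (simple defocus model), normalised to sum to 1."""
    size = 2 * radius + 1
    mask = [[1.0 if (x - radius) ** 2 + (y - radius) ** 2 <= radius ** 2 else 0.0
             for x in range(size)] for y in range(size)]
    total = sum(map(sum, mask))
    return [[v / total for v in row] for row in mask]


def convolve2d_same(image, kernel):
    """2-D convolution with zero padding; output has the size of image."""
    rows, cols = len(image), len(image[0])
    kr, kc = len(kernel) // 2, len(kernel[0]) // 2
    out = [[0.0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            acc = 0.0
            for i, krow in enumerate(kernel):
                for j, w in enumerate(krow):
                    yy, xx = y + kr - i, x + kc - j  # flipped index: true convolution
                    if 0 <= yy < rows and 0 <= xx < cols:
                        acc += w * image[yy][xx]
            out[y][x] = acc
    return out


point = [[0.0] * 5 for _ in range(5)]
point[2][2] = 1.0                                # a single point source
image_of_point = convolve2d_same(point, disk_psf(1))
for row in image_of_point:
    print([round(v, 2) for v in row])            # the PSF appears around (2, 2)
```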

3.5.3 Motion blur

Motion blur is the apparent streaking of rapidly moving objects in a still image or a sequence of images such as a movie or animation. It results when the image being recorded changes during the recording of a single exposure, either due to rapid movement or long exposure.

3.5.4 Applications of motion blur

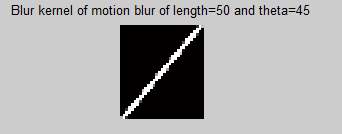

Motion blur has applications in photography, biology, animation, computer graphics and other fields. In MATLAB, motion blur can be modelled with the fspecial function: “h = fspecial('motion', len, theta)” returns a filter that, when convolved with an image, approximates the linear motion of a camera by len pixels at an angle of theta degrees in a counterclockwise direction. The filter becomes a vector for horizontal and vertical motions. The default len is 9 and the default theta is 0, which corresponds to a horizontal motion of nine pixels.
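For the horizontal case (theta = 0), linear motion blur amounts to averaging each pixel with its neighbours along the row. This is a minimal sketch, assuming an odd blur length, a uniform 1 × len kernel and zero padding at the row ends. It is a hypothetical stand-in for `fspecial('motion', len, 0)`, whose exact weights may differ (for example, for even lengths). The function names are hypothetical.

```python
def motion_kernel_horizontal(length):
    """Uniform 1 x length kernel approximating horizontal camera motion."""
    return [1.0 / length] * length


def blur_rows(image, kernel):
    """Convolve every row with a centred 1-D kernel, zero padding at the ends."""
    half = len(kernel) // 2
    out = []
    for row in image:
        n = len(row)
        out.append([sum(w * row[x + half - j]
                        for j, w in enumerate(kernel)
                        if 0 <= x + half - j < n)
                    for x in range(n)])
    return out


streak = blur_rows([[0, 0, 0, 9, 0, 0, 0]], motion_kernel_horizontal(3))
print([round(v, 6) for v in streak[0]])   # [0.0, 0.0, 3.0, 3.0, 3.0, 0.0, 0.0]
```

The single bright pixel is smeared into a three-pixel streak while its total intensity is preserved, which is the "apparent streaking" described above.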

3.6 Median filter

In signal processing, it is often desirable to perform some kind of noise reduction on an image or signal. The median filter is a nonlinear digital filtering technique that is often used to remove noise. Such noise reduction is a typical pre-processing step that improves the results of later processing (for example, edge detection on an image). Median filtering is very widely used in digital image processing because, under certain conditions, it preserves edges while removing noise.

The main idea of the median filter is to run through the signal entry by entry, replacing each entry with the median of neighboring entries. The pattern of neighbors is called the “window,” which slides, entry by entry, over the entire signal. For 1-D signals, the most obvious window is just the first few preceding and following entries. For 2-D (or higher-dimensional) signals such as images, more complex window patterns are possible (such as “box” or “cross” patterns). If the window has an odd number of entries, the median is simple to define: it is the middle value after all the entries in the window are sorted numerically. For an even number of entries, there is more than one possible median. As a demonstration, a median filter with a window size of three, containing one entry immediately before and one immediately after each entry, is applied to the following simple 1-D signal:

x = [2 80 6 3]

So, the median-filtered output signal y will be (at the boundaries, the first and last entries are repeated to fill the window):

y[1] = median[2 2 80] = 2

y[2] = median[2 80 6] = median[2 6 80] = 6

y[3] = median[80 6 3] = median[3 6 80] = 6

y[4] = median[6 3 3] = median[3 3 6] = 3

medfilt2: Median filtering is a nonlinear operation often used in image processing to reduce “salt and pepper” noise. A median filter is more effective than convolution when the goal is to simultaneously reduce noise and preserve edges.
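The worked example can be checked with a short Python sketch (the project itself calls MATLAB's medfilt2). The function names are hypothetical. The 1-D filter repeats the end samples exactly as in the example; the 2-D version replicates edge pixels, whereas medfilt2 pads with zeros by default, so border values can differ.

```python
from statistics import median


def median_filter_1d(x, window=3):
    """Median-filter a 1-D signal; the end samples are repeated to fill the window."""
    half = window // 2
    n = len(x)
    return [
        median([x[min(max(i + k, 0), n - 1)] for k in range(-half, half + 1)])
        for i in range(n)
    ]


def median_filter_2d(img, size=3):
    """size-by-size median filter on a grayscale image stored as a list of rows.

    Edge pixels are replicated to fill windows that overhang the border.
    """
    half = size // 2
    rows, cols = len(img), len(img[0])
    out = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            window = [
                img[min(max(r + dr, 0), rows - 1)][min(max(c + dc, 0), cols - 1)]
                for dr in range(-half, half + 1)
                for dc in range(-half, half + 1)
            ]
            out_row.append(median(window))
        out.append(out_row)
    return out


if __name__ == "__main__":
    print(median_filter_1d([2, 80, 6, 3]))  # -> [2, 6, 6, 3]
```

A single “salt” pixel of value 255 in a flat 3-by-3 patch of 10s is removed entirely by median_filter_2d, while a linear (convolution) filter would only spread it out.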

3.7 Morphological Operations

Morphological image processing is a tool for extracting or modifying information on the shape and structure of objects within an image. Morphological operators, such as dilation and erosion, are particularly useful for the analysis of binary images, although they can be extended for use with grayscale images. Morphological operators are non-linear, and common usages include filtering, edge detection, feature detection, counting objects in an image, image segmentation, noise reduction, and finding the mid-line of an object.

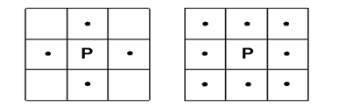

3.7.1 Connectivity

In binary images an object is defined as a connected set of pixels. With two-dimensional images connectivity can be either 4-connectivity or 8-connectivity (Fig 3.4). In 4-connectivity, each pixel (P) has four connected neighbors (N): top, bottom, right and left. The diagonally touching pixels are not considered to be connected. In 8-connectivity, each pixel (P) has eight connected neighbors (N), including the diagonally touching pixels. For three-dimensional images neighborhoods can be 6-connected, 18-connected or 26-connected.


Fig 3.4 Connectivity in two-dimensional images. (i) 4-connectivity – each pixel (P) has four connected neighbors (●) (ii) 8-connectivity – each pixel (P) has eight connected neighbors (●).

3.7.2 Dilation and Erosion

In binary images dilation is an operation that increases the size of foreground objects, generally taken as white pixels, although in some implementations this convention is reversed. It can be defined in terms of set theory, although we will use a more intuitive algorithm. The connectivity needs to be established prior to the operation, or a structuring element defined (Fig 3.5).


Fig 3.5 Structuring elements corresponding to (i) 4-connectivity (ii) 8-connectivity.

The algorithm is as follows: superimpose the structuring element on top of each pixel of the input image so that the center of the structuring element coincides with the input pixel position. If at least one pixel in the structuring element coincides with a foreground pixel in the image underneath, including the pixel being tested, then set the output pixel in a new image to the foreground value. Thus, some of the background pixels in the input image become foreground pixels in the output image; those that were foreground pixels in the input image remain foreground pixels in the output image. In the case of 8-connectivity, if a background pixel has at least one foreground (white) neighbor then it becomes white; otherwise, it remains unchanged. The pixels which change from background to foreground are pixels which lie at the edges of foreground regions in the input image, so the consequence is that foreground regions grow in size, and foreground features tend to connect or merge.

Fig 3.6 The effect of dilation in connecting foreground features, using a structuring element corresponding to 8-connectivity.

In contrast, erosion is an operation that increases the size of background objects (and shrinks the foreground objects) in binary images. In this case the structuring element is superimposed on each pixel of the input image, and if at least one pixel in the structuring element coincides with a background pixel in the image underneath, then the output pixel is set to the background value. Thus, some of the foreground pixels in the input image become background pixels in the output image; those that were background pixels in the input image remain background pixels in the output image. In the case of 8-connectivity, if a foreground pixel has at least one background (black) neighbor then it becomes black; otherwise, it remains unchanged. The pixels which change from foreground to background are pixels at the edges of background regions in the input image, so the consequence is that background regions grow in size, and foreground features tend to disconnect or further separate.

Fig 3.7 The effect of erosion in separating foreground features, using a structuring element corresponding to 8-connectivity.

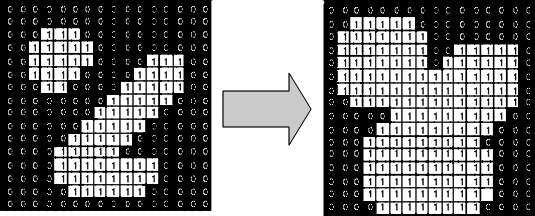
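The dilation and erosion rules described above can be sketched directly on a 0/1 image. This is an illustrative Python sketch, not the project's MATLAB code (which would use imdilate and imerode); the names SQUARE_8, CROSS_4, dilate and erode are hypothetical. Structuring-element offsets that fall outside the image are skipped, which behaves like padding with background for dilation and with foreground for erosion.

```python
# Structuring elements as (row, column) offsets from the centre pixel.
SQUARE_8 = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1)]  # 8-connectivity
CROSS_4 = [(0, 0), (-1, 0), (1, 0), (0, -1), (0, 1)]             # 4-connectivity


def _covered(img, r, c, se):
    """Yield the pixels under the structuring element centred at (r, c).

    Offsets that fall outside the image are skipped.
    """
    rows, cols = len(img), len(img[0])
    for dr, dc in se:
        rr, cc = r + dr, c + dc
        if 0 <= rr < rows and 0 <= cc < cols:
            yield img[rr][cc]


def dilate(img, se=SQUARE_8):
    """Output is 1 where at least one covered pixel is foreground (1)."""
    return [[1 if any(_covered(img, r, c, se)) else 0
             for c in range(len(img[0]))] for r in range(len(img))]


def erode(img, se=SQUARE_8):
    """Output is 1 only where every covered pixel is foreground (1)."""
    return [[1 if all(_covered(img, r, c, se)) else 0
             for c in range(len(img[0]))] for r in range(len(img))]
```

Dilating a single white pixel with SQUARE_8 grows it into a 3-by-3 block (with CROSS_4, into a plus shape of five pixels); eroding that block with SQUARE_8 shrinks it back to the single centre pixel.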

3.8 Conversion of Grayscale Images to Binary Images

To make the following steps run smoothly and to reduce processing time, the acquired image is converted to a binary image.

Binary image: an image quantized into two values, 0 and 1, or pixel values 0 and 255, representing black and white. Binary images are the simplest form of image and apply to many different applications. They are useful because the information we need can often be obtained from the silhouette of the object.

Binary images are obtained by converting the input image into grayscale format and then converting the grayscale image to a binary image by thresholding. The image is formed from a matrix of squares called pixels. Every pixel in the image has a brightness value known as its gray level. Pixels above the threshold are set to 1 and the rest to 0.
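The thresholding rule can be sketched in a few lines of Python. This assumes a fixed global threshold on 0 to 255 gray levels chosen by hand; MATLAB's im2bw instead takes a normalized level between 0 and 1. The function names are hypothetical.

```python
def binarize(gray, threshold):
    """Set pixels brighter than `threshold` to 1 (white) and the rest to 0 (black)."""
    return [[1 if value > threshold else 0 for value in row] for row in gray]


def to_uint8_binary(binary):
    """Map a 0/1 image to the 0/255 pixel-value convention."""
    return [[255 * value for value in row] for row in binary]
```

For example, with a threshold of 128 the gray levels 10, 128, 129 and 200 map to 0, 0, 1 and 1; the choice of threshold directly controls how much of the plate background survives.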

3.9 Image enhancement

The aim of this step is to improve the visibility of the image. Image enhancement techniques include sharpening the edges of the image, contrast manipulation, noise reduction, color image processing, and also image segmentation.

3.10 IMAGE FILLING AND THINNING

A hole is a region of dark pixels surrounded by bright pixels. Filling is performed by replacing the pixels of these holes with 1, whereas thinning removes pixels so that an object without holes shrinks to a thinner, minimally connected stroke.
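A common way to fill holes, and the idea behind MATLAB's imfill(BW, ‘holes’), is to flood-fill the background from the image border: any background pixel the flood cannot reach must be enclosed by foreground. The Python sketch below assumes 4-connected background and a hypothetical function name; thinning is not shown.

```python
from collections import deque


def fill_holes(binary):
    """Set to 1 every background (0) pixel that cannot be reached from the border."""
    rows, cols = len(binary), len(binary[0])
    outside = [[False] * cols for _ in range(rows)]
    queue = deque()
    # Seed the flood fill with every background pixel on the image border.
    for r in range(rows):
        for c in range(cols):
            on_border = r in (0, rows - 1) or c in (0, cols - 1)
            if on_border and binary[r][c] == 0:
                outside[r][c] = True
                queue.append((r, c))
    # Spread through 4-connected background pixels.
    while queue:
        r, c = queue.popleft()
        for rr, cc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if (0 <= rr < rows and 0 <= cc < cols
                    and not outside[rr][cc] and binary[rr][cc] == 0):
                outside[rr][cc] = True
                queue.append((rr, cc))
    # Foreground and unreachable (enclosed) background both become 1.
    return [[0 if outside[r][c] else 1 for c in range(cols)] for r in range(rows)]
```

A closed ring of white pixels becomes a solid blob, while a “C” shape whose gap touches the border is left unchanged, because its interior is reachable from outside.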

3.11 Image Localization

By filtering, we are able to remove the unwanted objects or noise that are not characters or digits. In the end, the image is left only with the characters and digits we are interested in. The two stages of the algorithm are as follows:

1) Remove the small objects (connected components).

2) Identify the frame line that is connected to the digits and separate it.

3.12 IMAGE SEGMENTATION

Image segmentation is a crucial step that leads to the analysis of the processed image data. To extract and analyze the characteristics of an object, the process must partition the image into distinct regions that correlate strongly with the objects.

3.13 OPTICAL CHARACTER RECOGNITION

Optical Character Recognition, usually abbreviated to OCR, is the mechanical or electronic translation of scanned images of handwritten, typewritten or printed text into machine-encoded text. It is widely used to convert books and documents into electronic files, to computerize a record-keeping system in an office, or to publish text on a website. OCR makes it possible to edit the text, search for a word or phrase, store it more compactly, display or print a copy free of scanning artifacts, and apply techniques such as computational linguistics, text-to-speech and text mining to it. OCR is a field of research in pattern recognition, computer science and computer vision.

$$\mathit{MSE} = \frac{1}{mn}\sum_{i=0}^{m-1}\sum_{j=0}^{n-1}\left[I(i,j) - K(i,j)\right]^2$$

where I and K are the two m × n images being compared.
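The MSE formula translates directly into code, and PSNR follows from it as 10·log10(peak²/MSE). The Python sketch below assumes 8-bit images (peak = 255). With that assumption, an MSE of 0.2406 gives about 54.32 dB, which agrees with the first row of Tables 5.2 and 5.3 and suggests (though the text does not state it) that those tables use a peak of 255. Function names are hypothetical.

```python
import math


def mse(reference, processed):
    """Mean squared error between two equally sized grayscale images."""
    m, n = len(reference), len(reference[0])
    total = sum(
        (reference[i][j] - processed[i][j]) ** 2
        for i in range(m)
        for j in range(n)
    )
    return total / (m * n)


def psnr_from_mse(error, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite when the images are identical."""
    if error == 0:
        return math.inf
    return 10.0 * math.log10(peak ** 2 / error)
```

Lower MSE therefore always means higher PSNR, which is why the two result tables rank the plates in the same order.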

CHAPTER 4

PROPOSED SCHEME USING OCR

4.1 FLOWCHART FOR OVRS SYSTEM

Start

Image Acquisition and preprocessing

Plate localization and extraction

Character segmentation

Character Recognition

Display of data in text file

Stop

Fig 4.1 Overview of the OCR based vehicular recognition using segmentation

4.1.1 IMAGE ACQUISITION AND PREPROCESSING

The initial part of image processing for vehicle license plate recognition is to obtain images of vehicles. Electronic devices such as optical (digital/video) cameras or digital cameras can be used to capture the images. For this project, vehicle images are taken with a Panasonic FX30 digital camera. The images are stored in JPEG format on the camera.

Fig 4.2 RGB Image Acquisition

Images captured using IR or photographic cameras will be either in raw format or encoded into a multimedia standard such as JPEG (Joint Photographic Experts Group) or PNG (Portable Network Graphics). Normally, these images will be in RGB or HSV mode, both of which have three channels. The number of channels defines the amount of color information available in the image. The system under implementation only needs to distinguish two colors, so the image is converted to grayscale, leaving a single channel.

Preprocessing Techniques:

Grayscale conversion: from the 24-bit color value of each pixel (i, j), the R, G and B components are separated and the 8-bit gray value is calculated using the formula: gray(i, j) = 0.30 * R(i, j) + 0.59 * G(i, j) + 0.11 * B(i, j) … (1)

Median filtering: the median filter is a non-linear filter which replaces the gray value of a pixel by the median of the gray values of its neighbors. This operation removes salt-and-pepper noise from the image [22].

Because the RGB color space is strongly affected by lighting, we cannot rely on it to determine colors, so the color image is converted to a grayscale image using the rgb2gray function.
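The conversion is a weighted sum per pixel. The Python sketch below uses the weights 0.2989, 0.5870 and 0.1140 that MATLAB documents for rgb2gray (close to the 0.30/0.59/0.11 rounding in equation (1)) and assumes the image is a list of rows of (R, G, B) tuples in the 0 to 255 range. The function name is hypothetical.

```python
def rgb_to_gray(rgb):
    """Convert an RGB image (rows of (R, G, B) tuples, 0-255) to 8-bit gray levels.

    Green carries the largest weight because the eye is most sensitive to it.
    """
    return [
        [round(0.2989 * r + 0.5870 * g + 0.1140 * b) for (r, g, b) in row]
        for row in rgb
    ]
```

Pure red, green and blue pixels map to 76, 150 and 29 respectively, so a green plate background and a blue plate background of equal brightness end up with quite different gray levels.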

Fig 4.3 Gray scale deblurred image

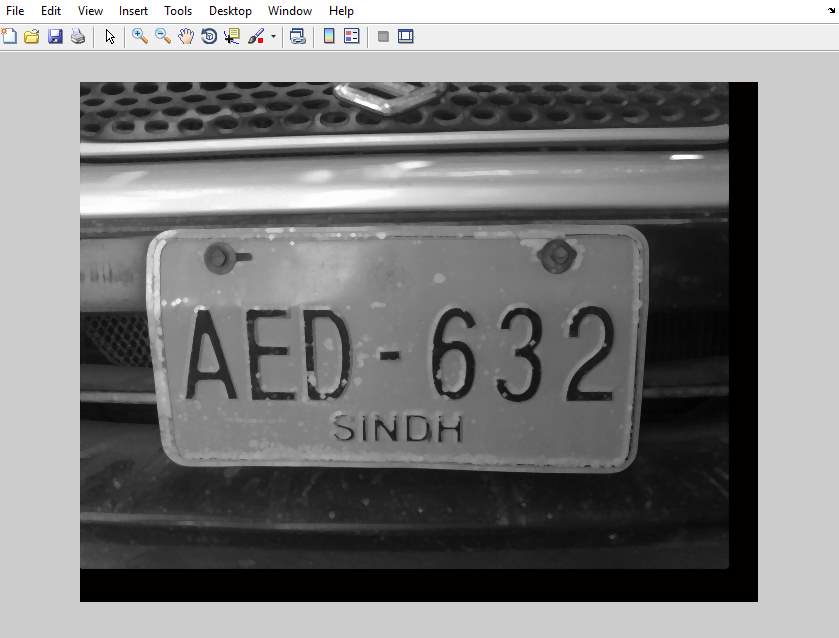

Motion blur with PSF

In image processing, a kernel, convolution matrix, or mask is a small matrix used for blurring, sharpening, embossing, edge detection, and more. This is accomplished by means of convolution between a kernel and an image.

Fig 4.4 Blur kernel of motion blur

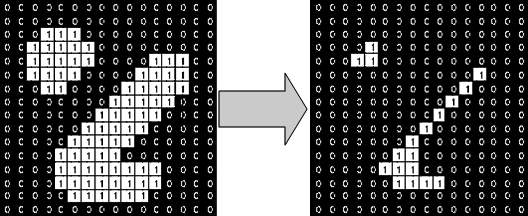

Because the vehicle is moving, motion blur occurs in the image. It is modeled as the convolution of a point spread function (PSF) with the grayscale image, as shown:

Fig 4.5 Motion blurred image

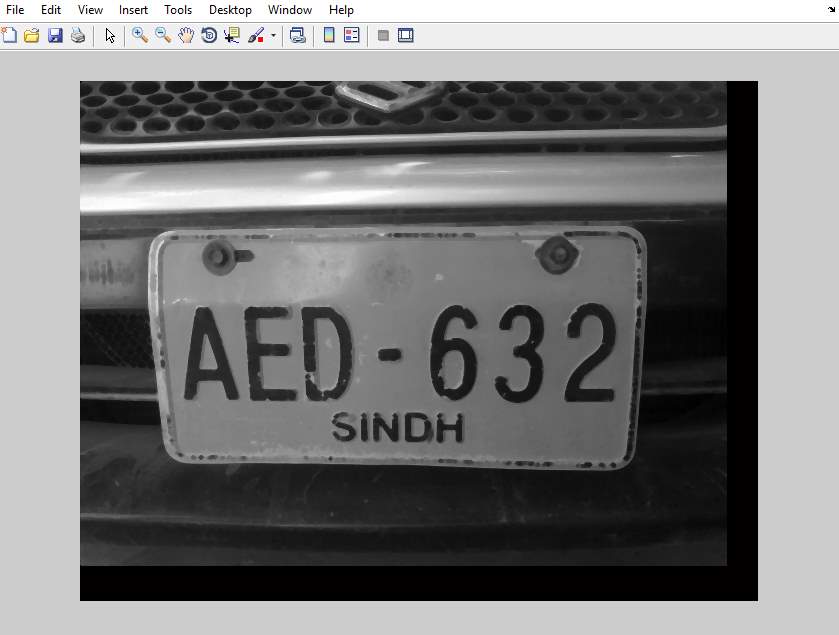

Deblurring is the process of removing blurring artifacts from images, such as those caused by motion. The blur is typically modeled as the convolution of a (sometimes space- or time-varying) point spread function with a hypothetical sharp input image, where both the sharp input image (which is to be recovered) and the point spread function are in general unknown. The next step is to deblur the image using a deblurring technique that deconvolves the blurred image with the motion-blur PSF, as shown below:
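When the PSF is known, as it is here because the blur was generated with a known motion kernel, Wiener deconvolution divides the blurred spectrum by the PSF spectrum, regularized by a noise-to-signal ratio (MATLAB provides this as deconvwnr(I, PSF, NSR)). The Python sketch below is a simplified 1-D version, assuming circular boundaries and a naive DFT; a horizontal motion blur acts row by row, so one row is enough to show the idea. All names are hypothetical.

```python
import cmath


def dft(x):
    """Naive O(N^2) discrete Fourier transform; adequate for short signals."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum):
    """Inverse of dft()."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def psf_to_length(kernel, n):
    """Zero-pad a centred PSF to length n, moving its centre to index 0."""
    padded = [0.0] * n
    half = len(kernel) // 2
    for i, weight in enumerate(kernel):
        padded[(i - half) % n] += weight
    return padded


def circular_blur(signal, kernel):
    """Blur by circular convolution with the PSF (a product of spectra)."""
    H = dft(psf_to_length(kernel, len(signal)))
    S = dft(signal)
    return [v.real for v in idft([s * h for s, h in zip(S, H)])]


def wiener_deblur(blurred, kernel, nsr=0.0):
    """Wiener deconvolution with a known PSF; nsr = 0 is the plain inverse filter."""
    H = dft(psf_to_length(kernel, len(blurred)))
    B = dft(blurred)
    restored = [b * h.conjugate() / (abs(h) ** 2 + nsr) for b, h in zip(B, H)]
    return [v.real for v in idft(restored)]
```

With nsr = 0 and no noise, the original row is recovered up to rounding error; on real, noisy images a small positive nsr keeps frequencies where the PSF spectrum is near zero from amplifying noise.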

Fig 4.6 Motion deblurred image

The rear or front part of the vehicle is captured in an image. This image will certainly contain other parts of the vehicle and the environment, which are irrelevant to the present system. The license plate is the only portion of the image we are interested in, and it needs to be localized.

There are two purposes for the binarization step:

1. Highlighting characters.

2. Suppressing background.

However, both desired and undesired image areas appear during binarization.

The number plate is localized with the following sequence of operations:

1. Median filtering to remove noise

2. Morphological operations like erosion and dilation on image

3. Subtraction of erosion and dilation for edge detection.

4. Convolution with identity matrix for brightening the image.

5. Double to binary conversion

6. Intensity scaling of the image

7. Filling the holes on the plate

8. Thinning to isolate characters on plate

9. Region properties for extracting the area and bubble sorting the area

10. Remove connected components less than specified area.

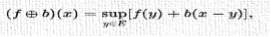

Morphological operations such as dilation and erosion are applied to the number plate as follows. In grayscale morphology, images are functions mapping a Euclidean space or grid E into ℝ ∪ {∞, −∞}, where ℝ is the set of reals, ∞ is an element larger than any real number, and −∞ is an element smaller than any real number. Grayscale structuring elements are also functions of the same format, called “structuring functions”. Denoting an image by f(x) and the structuring function by b(x), the grayscale dilation of f by b is given by

$$(f \oplus b)(x) = \sup_{y \in E}\left[f(y) + b(x - y)\right],$$

where “sup” denotes the supremum.

Fig 4.7 The effect of dilation on number plate.

The next step is the erosion operation, which removes pixels and darkens the image. In grayscale morphology, images are functions mapping a Euclidean space or grid E into ℝ ∪ {∞, −∞}, where ℝ is the set of reals. Denoting an image by f(x) and the grayscale structuring element by b(x), where B is the space on which b(x) is defined, the grayscale erosion of f by b is given by

$$(f \ominus b)(x) = \inf_{y \in B}\left[f(x + y) - b(y)\right],$$

where “inf” denotes the infimum.

Fig 4.8 The effect of erosion on number plate.

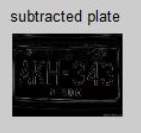

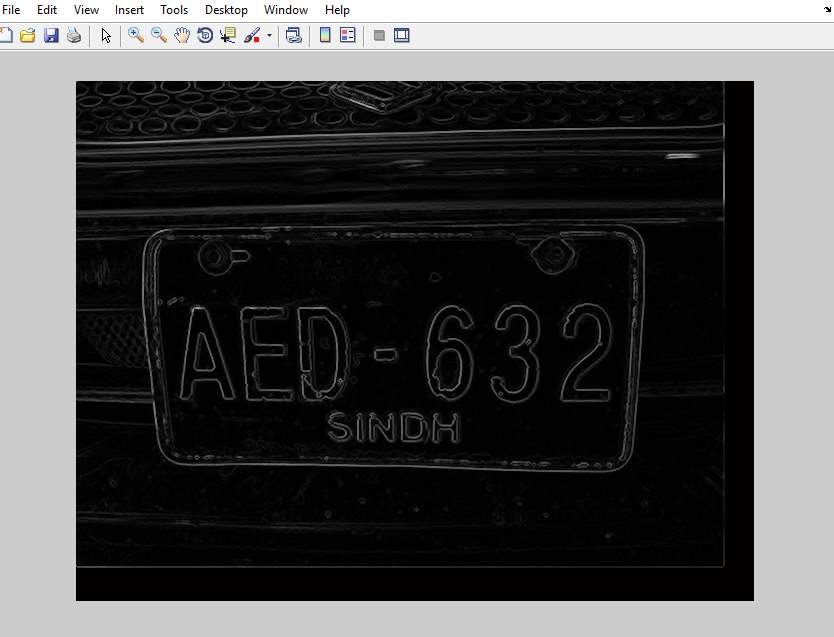

Subtracting the eroded plate from the dilated plate detects the edges of the number plate, as shown:

Fig 4.9 The morphological subtracted plate.
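With a flat structuring function (b = 0 on a square B), the supremum and infimum formulas reduce to a maximum and minimum over a square window, and dilation minus erosion (the morphological gradient) is large only where the intensity changes. The Python sketch below is illustrative; the window radius and function names are assumptions, not values from the project.

```python
def _window_values(img, r, c, radius):
    """Pixels in the (2*radius+1)-square window around (r, c), clipped to the image."""
    rows, cols = len(img), len(img[0])
    return [
        img[rr][cc]
        for rr in range(max(r - radius, 0), min(r + radius + 1, rows))
        for cc in range(max(c - radius, 0), min(c + radius + 1, cols))
    ]


def gray_dilate(img, radius=1):
    """Flat grayscale dilation: maximum over the window."""
    return [[max(_window_values(img, r, c, radius)) for c in range(len(img[0]))]
            for r in range(len(img))]


def gray_erode(img, radius=1):
    """Flat grayscale erosion: minimum over the window."""
    return [[min(_window_values(img, r, c, radius)) for c in range(len(img[0]))]
            for r in range(len(img))]


def morphological_gradient(img, radius=1):
    """Dilated minus eroded image: non-zero only near intensity edges."""
    d, e = gray_dilate(img, radius), gray_erode(img, radius)
    return [[dv - ev for dv, ev in zip(drow, erow)] for drow, erow in zip(d, e)]
```

For a row that steps from 10 to 200, the gradient is 190 on the two pixels either side of the step and 0 elsewhere, which is the thin edge outline seen around the plate and its characters.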

To enhance or brighten the edges of the image, we convolve the image with the identity or unit matrix.

Fig 4.10 The brightened number plate.

This step is followed by filling the holes inside the number plate, so that all dark pixels surrounded by white pixels are filled with white pixels.

Fig 4.11 The filled number plate.

After computing the region properties to extract the area and bounding-box parameters of each connected component, bubble sort is used to arrange the areas in increasing order. An algorithm then removes all connected components smaller than a specified area, and the remaining essential connected components are labeled. At this point we have obtained a black-and-white image of the vehicle license plate. It has been cropped to a rectangular shape, which makes the algorithm more uniform in the later stages.
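The sorting step can be sketched as follows. In practice Python's built-in sorted (or MATLAB's sort) would be used; bubble sort is shown only because the text names it. The helper keep_largest and the choice of six characters (the plate length in Chapter 5) are illustrative assumptions.

```python
def bubble_sort(values):
    """Return a new list sorted in increasing order using bubble sort."""
    items = list(values)
    n = len(items)
    for end in range(n - 1, 0, -1):
        swapped = False
        for i in range(end):
            if items[i] > items[i + 1]:
                items[i], items[i + 1] = items[i + 1], items[i]
                swapped = True
        if not swapped:  # no swaps in a full pass: already sorted
            break
    return items


def keep_largest(areas, count=6):
    """Hypothetical helper: the `count` largest areas, e.g. six plate characters."""
    ordered = bubble_sort(areas)
    return ordered[-count:] if count > 0 else []
```

After sorting, the largest areas sit at the end of the list, so the character components are separated from small specks simply by taking the last few entries.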

In order to eliminate undesired image areas, a connected component algorithm is first applied to the binarized plate candidate.

Connected component analysis is performed to identify the characters in the image. The basic idea is to traverse the image, find the connected pixels, label them, and extract them.

4.1.2 Identify and remove the small connected objects

In this stage, we identify the connected components. Connectivity can be either 4-connected or 8-connected; in this algorithm we use 8-connectivity (the value 8 specifies 8-connected objects). Once the components have been labeled, each receives a unique integer: the elements of the label matrix (returned by the algorithm) contain integer values greater than or equal to zero. Pixels labeled 0 are the background, pixels labeled 1 make up the first object, pixels labeled 2 the second object, and so on.

Next, we apply bwareaopen (Image Processing Toolbox), which removes from the binary image all connected components that have fewer than P pixels, producing another binary image.

Binary area open (bwareaopen)

The MATLAB toolbox provides the bwareaopen function, which removes the connected components that have fewer than P pixels and produces another binary image. The syntax is as follows:

```matlab
BW2 = bwareaopen(BW, P);   % remove objects with fewer than P pixels
```
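To show what bwlabel, regionprops and bwareaopen compute, here is a stdlib-only Python sketch of 8-connected labeling, per-object area and bounding box, and area opening. It is an illustration, not the toolbox implementation: labels are assigned in row-by-row scan order, so label numbers may differ from MATLAB's, and the bounding box uses zero-based inclusive (min_row, min_col, max_row, max_col) limits rather than regionprops' [x y width height] format. All names are hypothetical.

```python
from collections import deque

# The eight neighbour offsets used for 8-connectivity.
OFFSETS_8 = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0)]


def label_components(binary):
    """Label 8-connected foreground objects: 0 = background, 1..count = objects."""
    rows, cols = len(binary), len(binary[0])
    labels = [[0] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if binary[r][c] and not labels[r][c]:
                count += 1                      # start a new object
                labels[r][c] = count
                queue = deque([(r, c)])
                while queue:                    # breadth-first flood of the object
                    pr, pc = queue.popleft()
                    for dr, dc in OFFSETS_8:
                        nr, nc = pr + dr, pc + dc
                        if (0 <= nr < rows and 0 <= nc < cols
                                and binary[nr][nc] and not labels[nr][nc]):
                            labels[nr][nc] = count
                            queue.append((nr, nc))
    return labels, count


def component_stats(labels, count):
    """Area and bounding box (min_row, min_col, max_row, max_col) for each label."""
    stats = {k: {"area": 0, "bbox": None} for k in range(1, count + 1)}
    for r, row in enumerate(labels):
        for c, k in enumerate(row):
            if k:
                s = stats[k]
                s["area"] += 1
                if s["bbox"] is None:
                    s["bbox"] = (r, c, r, c)
                else:
                    r0, c0, r1, c1 = s["bbox"]
                    s["bbox"] = (min(r0, r), min(c0, c), max(r1, r), max(c1, c))
    return stats


def area_open(binary, min_pixels):
    """Keep only 8-connected objects with at least `min_pixels` pixels."""
    labels, count = label_components(binary)
    stats = component_stats(labels, count)
    return [[1 if k and stats[k]["area"] >= min_pixels else 0 for k in row]
            for row in labels]
```

Choosing min_pixels is the same trade-off described in Section 5.3.3: too small and specks survive as false characters, too large and thin characters are deleted along with the noise.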

Fig 4.12 The localized plate.

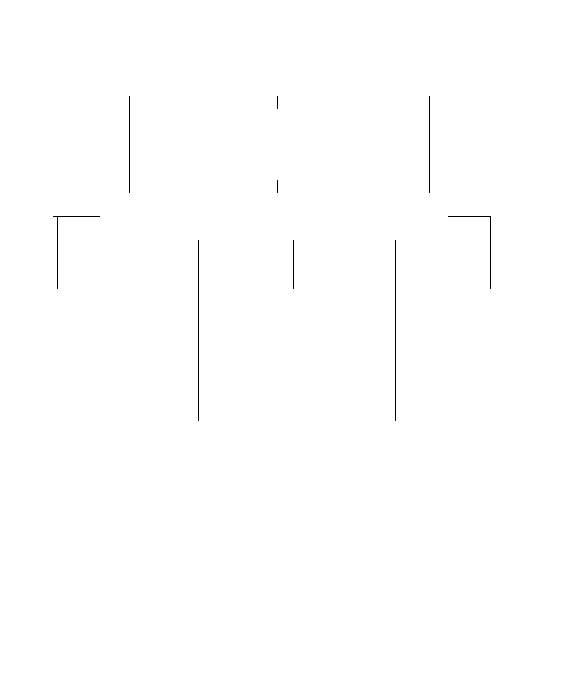

4.1.3 CHARACTER SEGMENTATION

Feature extraction from digital image

The description of a digital image depends on its external and internal representation. The color or texture of the image is largely the internal representation, whereas the external representation relies on the characteristics of the shapes. The normalized character description relies on the external characteristics, since we work only with properties of the character's shape. The descriptor vector includes characteristics such as the number of lines and the vertical or diagonal edges. The purpose of feature extraction is to transform the image data into a set of descriptors that is more suitable for the computer.

If similar characters are classified into categories, the descriptors of characters from the same category lie close to one another in the vector space. This leads to success in the pattern recognition process.

The code below shows how the extraction is applied, and the extracted characters are illustrated below.

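```matlab
% Label the 8-connected objects (characters) in the binary plate image
[L, Ne] = bwlabel(imagen);
disp(Ne);                                       % number of objects found
for n = 1:Ne
    [r, c] = find(L == n);                      % pixel coordinates of object n
    n1 = imagen(min(r):max(r), min(c):max(c));  % crop to its bounding box
    subplot(1, Ne, n);                          % one panel per character
    imshow(~n1);                                % inverted: dark character on white
end
```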

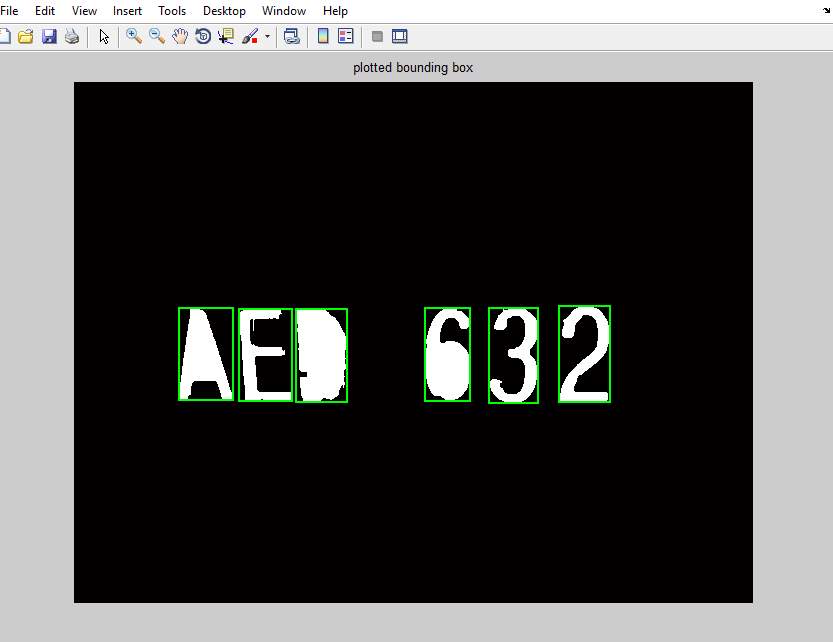

Fig 4.13 Plotted Bounding Box

Segmented Characters from number plate

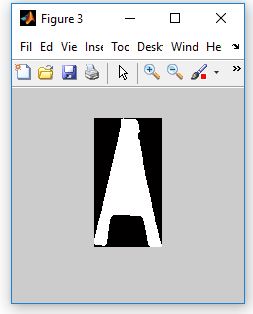

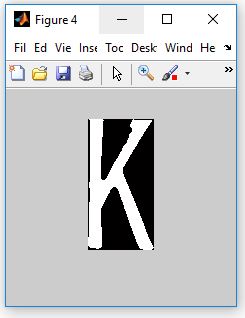

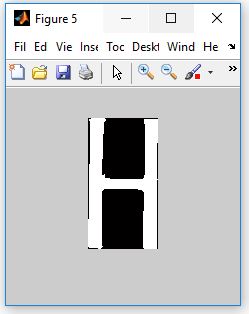

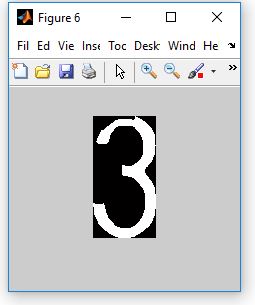

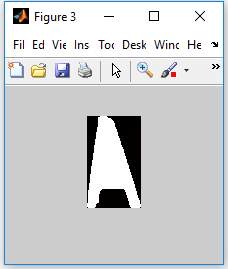

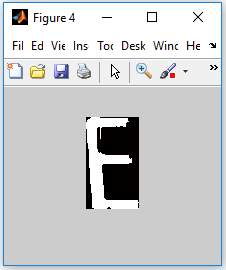

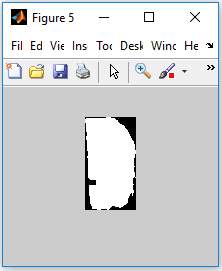

Fig 4.14 Segmented character ‘A’  Fig 4.15 Segmented character ‘K’  Fig 4.16 Segmented character ‘H’

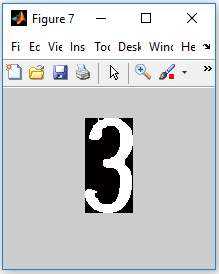

Fig 4.17 Segmented character ‘3’ Fig 4.18 Segmented character ‘4’ Fig 4.19 Segmented character ‘3’

4.1.4 OPTICAL CHARACTER RECOGNITION(OCR)

The goal of Optical Character Recognition (OCR) is to classify optical patterns (often contained in a digital image) corresponding to alphanumeric or other characters. The OCR process involves several steps, including segmentation, feature extraction, and classification.

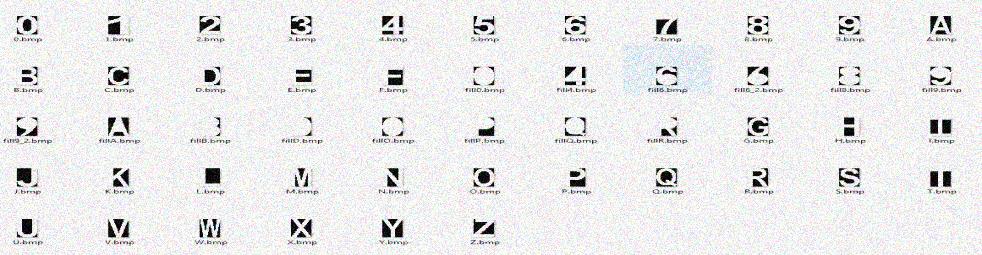

Character recognition by 2D correlation: given the selected license plate and the coordinates that indicate where the characters are, we begin the OCR process by 2D correlation. We correlate every character with either the alphabet or the numeral templates and then choose the value of each character based on the results of the correlation. The first three characters on standard license plates are letters, so we correlate each of them with the twenty-six alphabet templates. The last four characters on standard license plates are numerals, so we correlate each of them with the ten numeral templates. The OCR result for each character is chosen based on the maximum correlation value. However, we found that certain characters are frequently confused. As a quick solution, we created a scheme to show possible alternatives. Characters that are known to be easily misread are subjected to a correlation comparison with an array of other characters that are historically known to be confused with the originals. If a letter or number could be confused with more than one character, the candidates are listed in the output in order of decreasing probability. This probability is based on the correlation values of the character with the various templates, where a high correlation denotes a high probability.

However, this scheme only applies if the given letter does not have a very high correlation value (that is, it does not land above a nominal threshold).

OCR by 2D correlation is the option that appears to strike the best balance between performance and difficulty of implementation. Details can be found in the character isolation portion of the source code.
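The scheme above can be sketched with the 2-D correlation coefficient (what MATLAB's corr2 computes) and a confidence threshold that decides whether to list alternatives. This Python sketch is illustrative: the function names, the template dictionary and the 0.9 threshold are assumptions, and a constant matrix returns 0.0 here where corr2 would return NaN.

```python
import math


def corr2(a, b):
    """2-D correlation coefficient of two equally sized matrices."""
    va = [v for row in a for v in row]
    vb = [v for row in b for v in row]
    ma, mb = sum(va) / len(va), sum(vb) / len(vb)
    num = sum((x - ma) * (y - mb) for x, y in zip(va, vb))
    den = math.sqrt(sum((x - ma) ** 2 for x in va) * sum((y - mb) ** 2 for y in vb))
    return num / den if den else 0.0


def recognise(char_img, templates, confident=0.9):
    """Return (best_label, alternatives).

    Alternatives are listed in decreasing order of correlation, and only when
    the best score falls below the `confident` threshold.
    """
    ranked = sorted(((corr2(char_img, t), label) for label, t in templates.items()),
                    reverse=True)
    best_score, best_label = ranked[0]
    alternatives = [] if best_score >= confident else [label for _, label in ranked[1:]]
    return best_label, alternatives
```

Because the coefficient subtracts each matrix's mean and divides by its spread, it ignores overall brightness differences, but it does not compensate for size, which is why the characters must be normalized to a common size before matching.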

Possible problems/Weaknesses

OCR by 2D correlation is sensitive to the size of the license plate, which results in larger or smaller letters and numbers in the image. The 2D correlation was very sensitive to this and frequently gave wrong results owing to differently sized license plates.

Normalization

In this section, the extracted characters are resized to fit into a window. For the project, every character is normalized to a 42×24 binary image and then reshaped to a standard dimension before the data set is passed on for comparison with the templates.
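A minimal normalization sketch in Python, assuming nearest-neighbour sampling so that a binary character stays binary (MATLAB's imresize uses bicubic interpolation by default, so its output would differ). The 42×24 size comes from the text; the function names are hypothetical.

```python
def resize_nearest(img, out_rows=42, out_cols=24):
    """Nearest-neighbour resize of a binary character image to out_rows x out_cols."""
    in_rows, in_cols = len(img), len(img[0])
    return [
        [img[(r * in_rows) // out_rows][(c * in_cols) // out_cols] for c in range(out_cols)]
        for r in range(out_rows)
    ]


def flatten(img):
    """Reshape an image into a single row vector for comparison with a template."""
    return [v for row in img for v in row]
```

Every character and every template then has exactly 42 × 24 = 1008 values, so the correlation step compares vectors of identical length regardless of how large the plate appeared in the photograph.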

Template Matching

The correlation between two signals (cross-correlation) is a standard approach to feature detection as well as a component of more sophisticated techniques. Textbook presentations of correlation describe the convolution theorem and the attendant possibility of efficiently computing correlation in the frequency domain using the fast Fourier transform. Unfortunately, the normalized form of correlation (correlation coefficient) preferred in template matching does not have a correspondingly simple and efficient frequency-domain expression. For this reason, normalized cross-correlation has been computed in the spatial domain. Because of the computational cost of spatial-domain convolution, several inexact but fast spatial-domain matching methods have also been developed. An algorithm for obtaining normalized cross-correlation from transform-domain convolution has been developed; see Lewis. The new algorithm in some cases provides an order-of-magnitude speedup over spatial-domain computation of normalized cross-correlation.

Fig 4.20 Templates

The steps involved in this process are:

1. Build a template for each of the letters to be recognized. A good first approximation for a template is the intersection of all instances of that letter in the number plate. However, further fine-tuning of this template must be done for good performance.

2. Erode the original image using this template as structuring element. All 1 pixels in the resulting image correspond to all matches found for the given template.

3. Find the objects in the original image corresponding to these 1 pixels.

Sample segmented character from the number plate which is to be recognized

Can be represented as f(x, y)

Fig 4.21 Segmented Character of the number plate

Template Image which is required to be recognized from number plate.

Can be represented as h(x, y)

Fig 4.22 Template Image

Result of Correlation of f(x, y) and h(x, y).

G(x, y) = f(x, y) * (h*(x, y)).

4.1.5 DISPLAY OF DATA IN TEXT FILE

Finally a new text file is opened and each recognized character is written into that text file and finally stored in the database of the computer.
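The final step amounts to joining the recognized characters and writing them to a text file. The Python sketch below assumes a hypothetical file name and ASCII encoding and does not cover the database storage mentioned above.

```python
from pathlib import Path


def save_plate_text(characters, path="plate_number.txt"):
    """Write the recognized characters as one line of ASCII text; return the string."""
    text = "".join(characters)
    Path(path).write_text(text + "\n", encoding="ascii")
    return text
```

For the characters segmented in Figs 5.23 to 5.28 (‘A’, ‘E’, ‘D’, ‘6’, ‘3’, ‘2’), this writes the single line AED632, matching the corresponding entry in Table 5.1.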

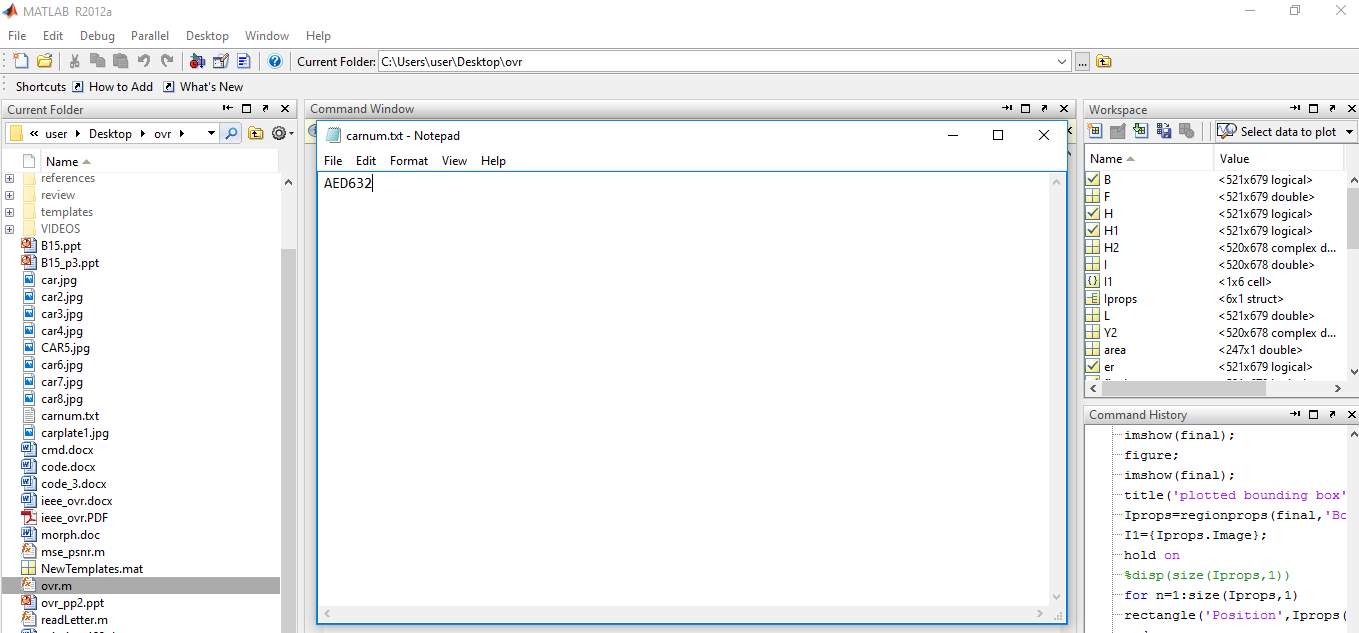

Fig 4.23 ASCII Characters displayed on text file

CHAPTER 5

RESULTS AND ANALYSIS

5.1 SNAPSHOTS

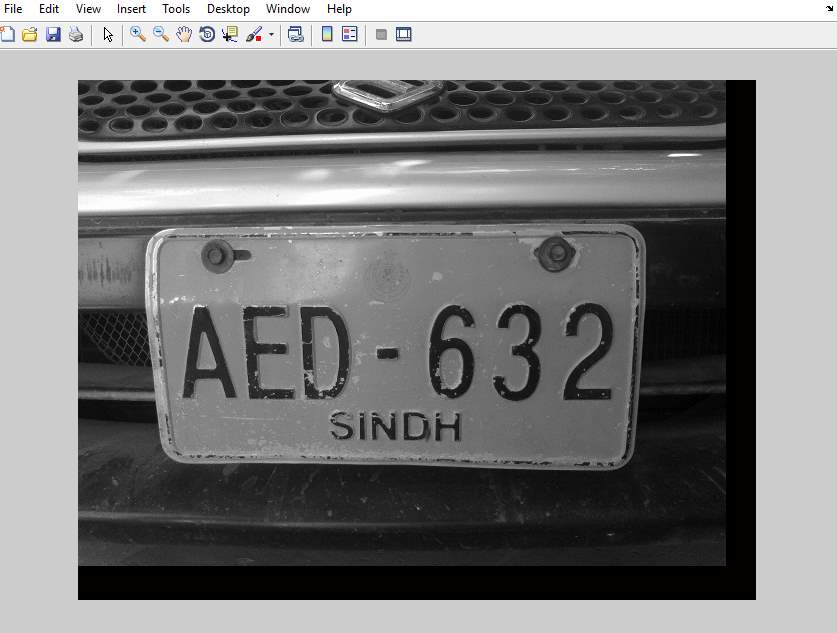

Fig 5.1 Loading car number plate Fig 5.2 Addition of motion blur on the vehicular plate


Fig 5.3 Deblurred number plate

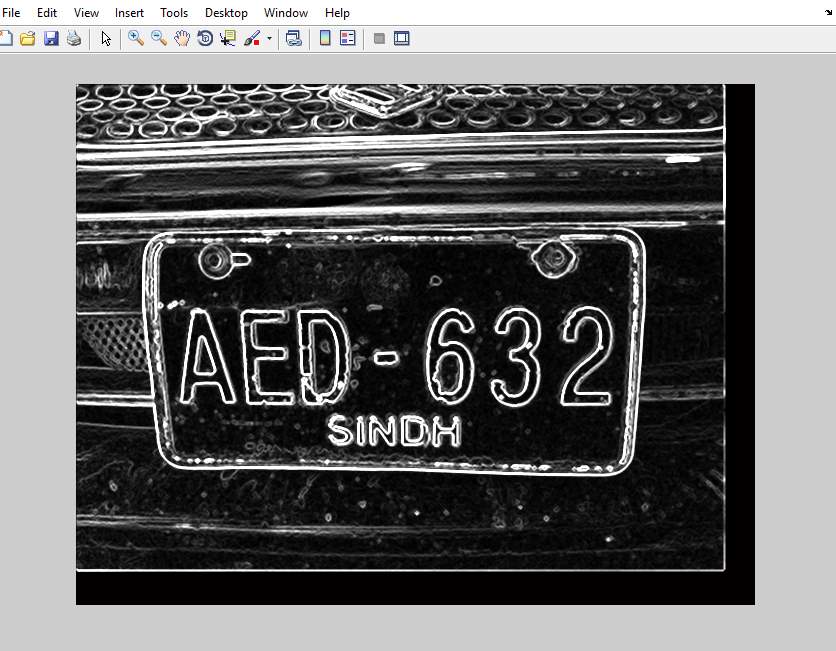

Fig 5.4 Dilated number plate


The morphological operation of dilation is performed on the deblurred number plate.

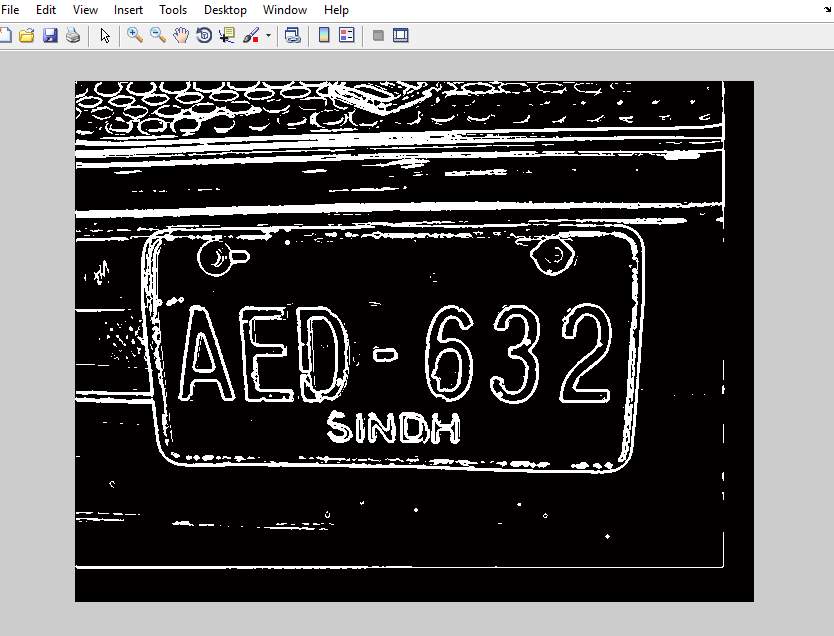

Fig 5.5 Eroded number plate


The morphological operation of erosion is performed on the deblurred number plate.

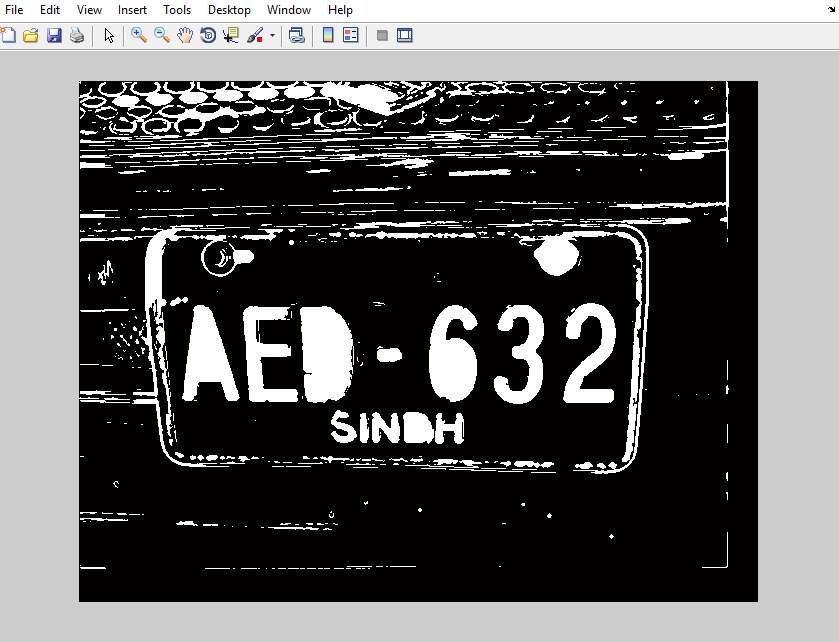

Fig 5.6 Morphological number plate for edge detection

Fig 5.7 Brightened number plate


Brightening operation is performed using convolution on morphological number plate

Fig 5.8 logically binarized number plate


The brightened number plate is converted to a logical (binary) number plate.

Fig 5.9 Filling the holes in number plate


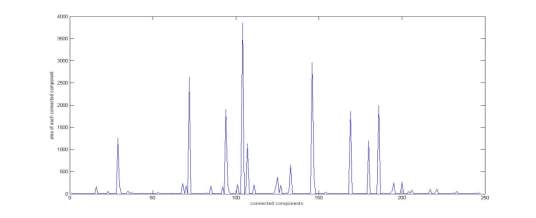

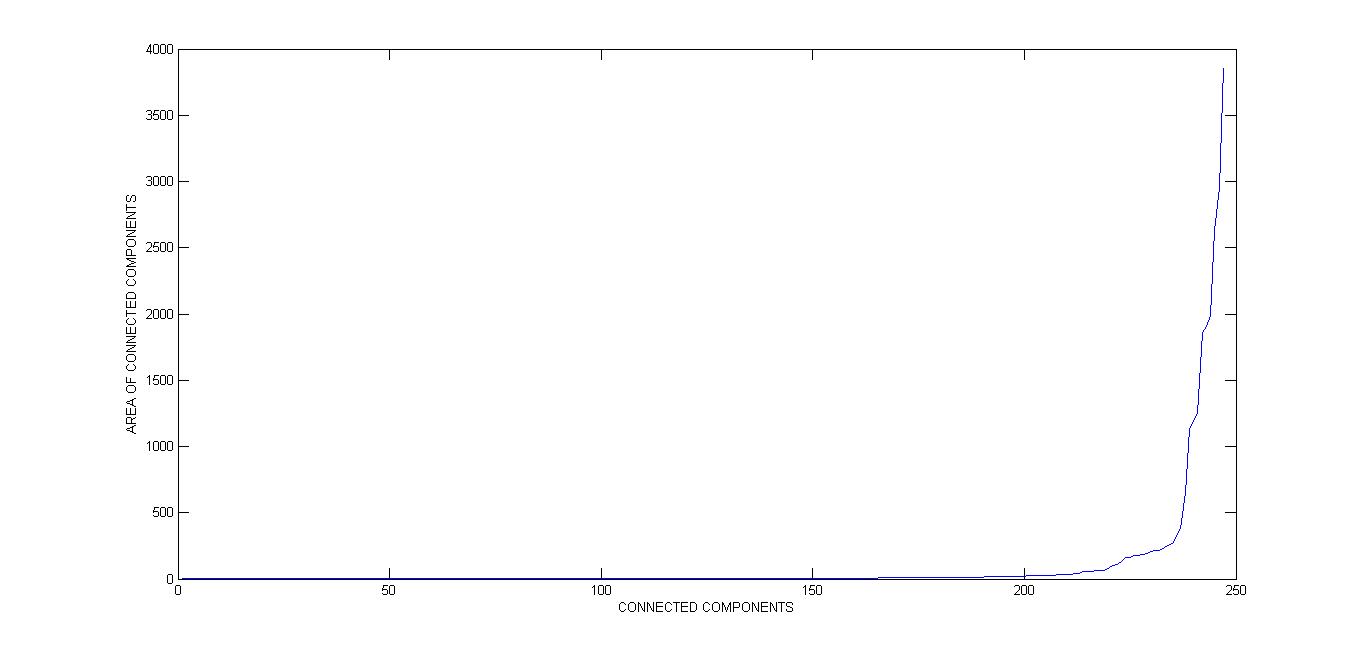

Fig 5.10 Area of the connected components before sorting

The areas of all the connected components are plotted in the graph above.

Fig 5.11 Area of the connected components after bubble sorting


The area is sorted in increasing order with bubble sort to extract 6 characters

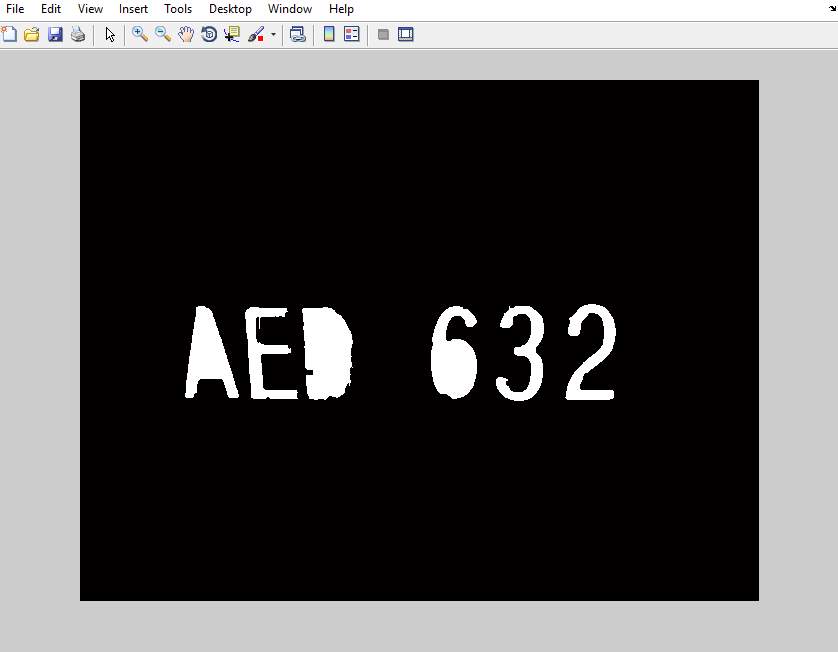

Fig 5.12 Number region extracted


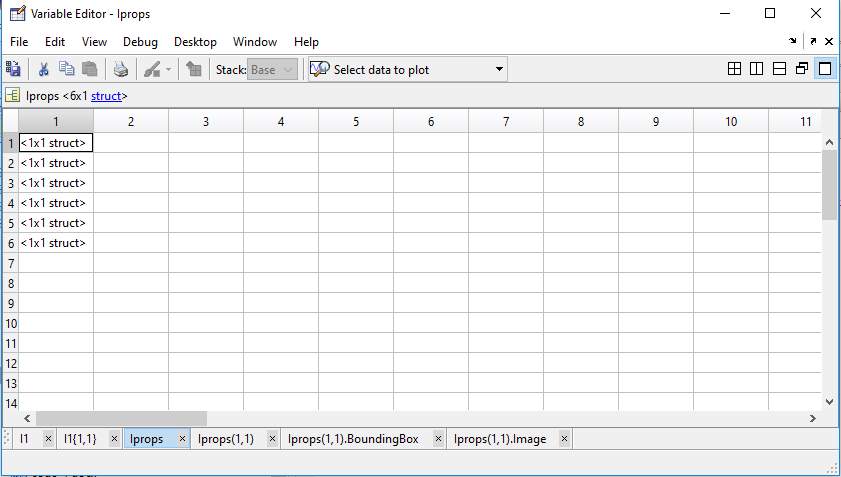

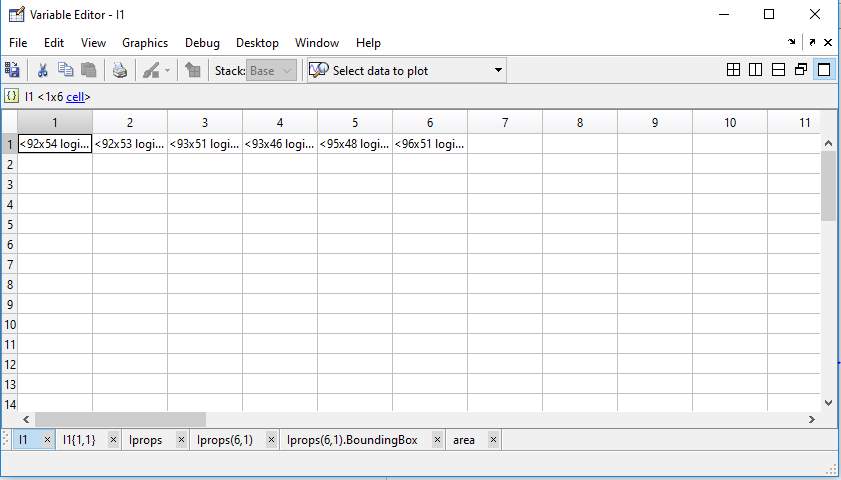

Fig 5.13 Six Connected components extraction using image properties


The extraction of parameters in a structured format using image properties

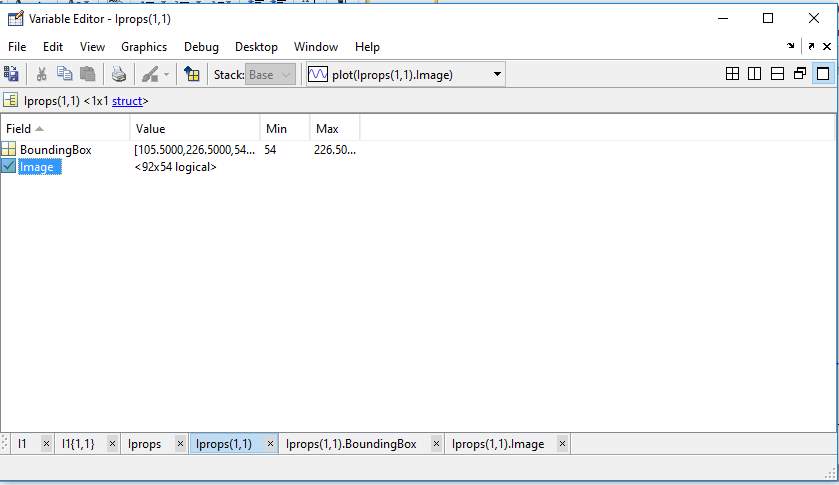

Fig 5.14 First connected component parameters


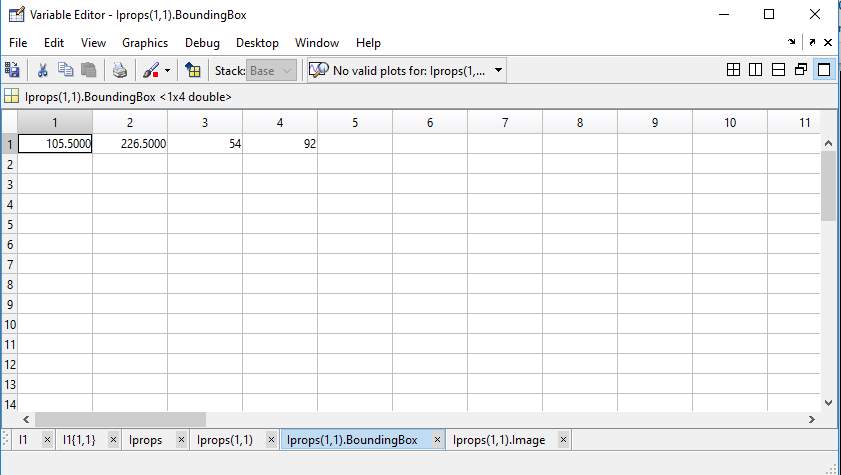

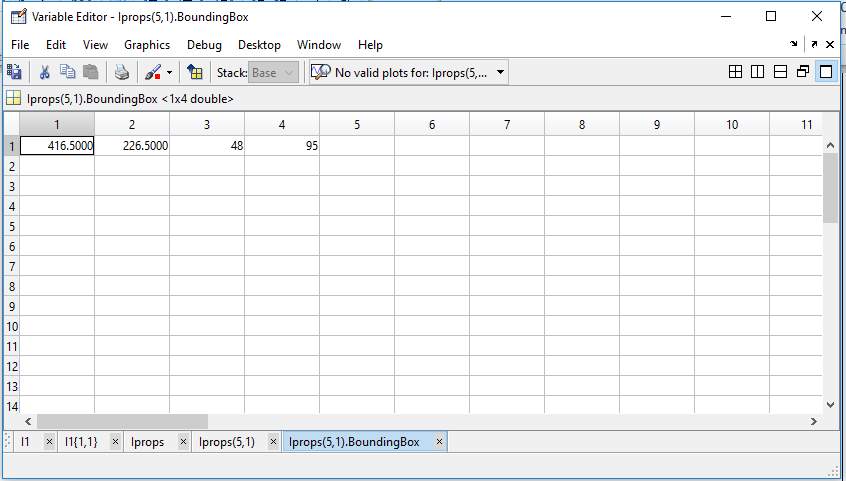

Fig 5.15 Bounding box parameters of first connected component


Fig 5.16 Bounding box parameters of second connected component


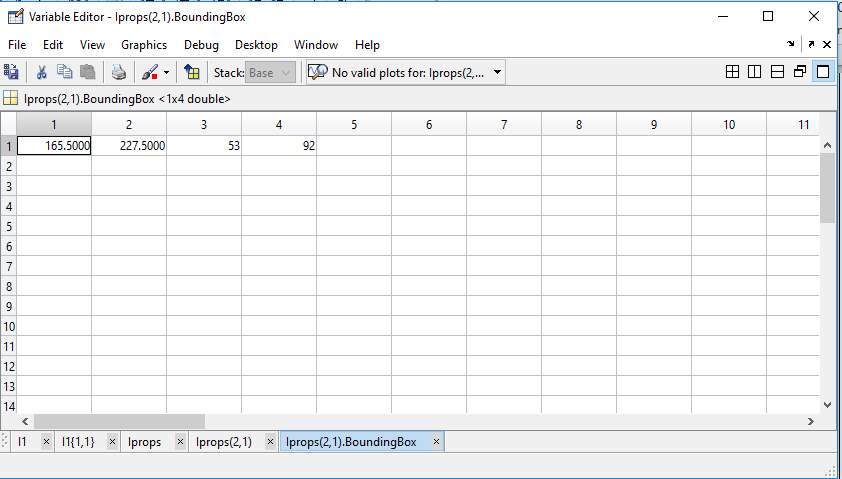

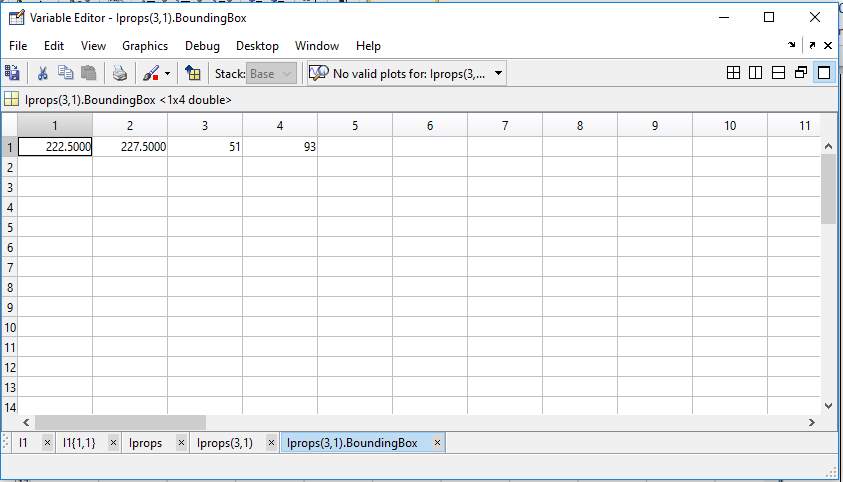

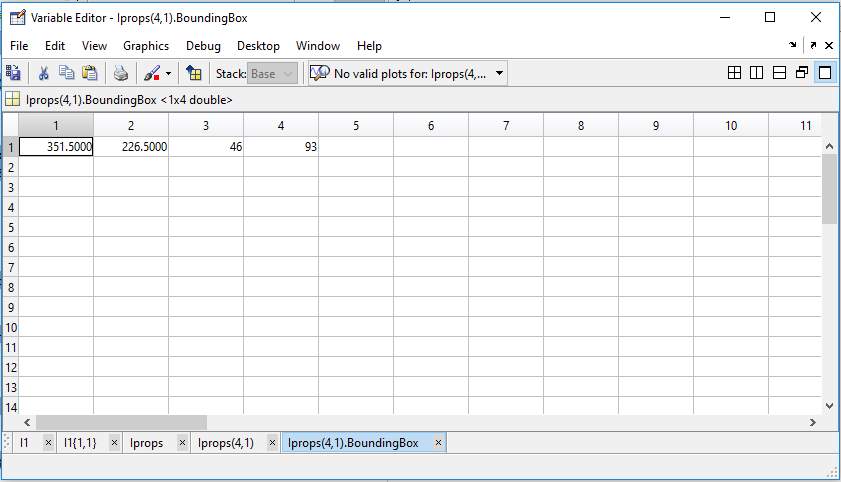

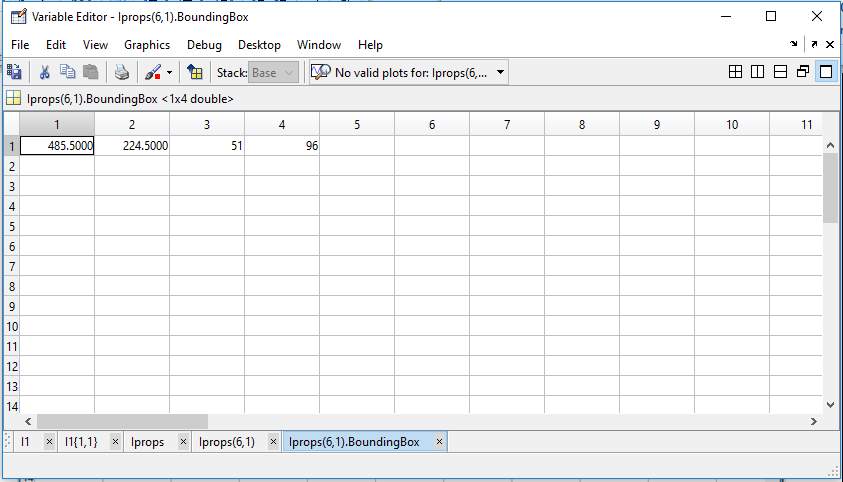

The parameters of each character: the starting pixel (x, y) and the height and width of its bounding box.

Fig 5.17 Bounding box parameters of Third connected component


Fig 5.18 Bounding box parameters of Fourth connected component

Fig 5.19 Bounding box parameters of Fifth connected component


Fig 5.20 Bounding box parameters of sixth connected component


Fig 5.21 Segmentation of characters


The bounding boxes are plotted for each character using the parameters in the tables above.

Fig 5.22 Extraction of each character size with image properties


The size of each character is extracted so that it can be resized before correlation matching.

Fig 5.23 Segmented character ‘A’ Fig 5.24 Segmented character ‘E’ Fig 5.25 Segmented character ‘D’

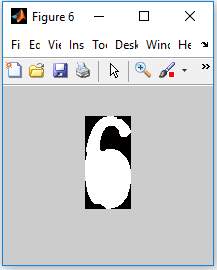

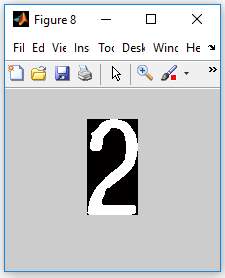

Fig 5.26 Segmented character ‘6’ Fig 5.27 Segmented character ‘3’ Fig 5.28 Segmented character ‘2’

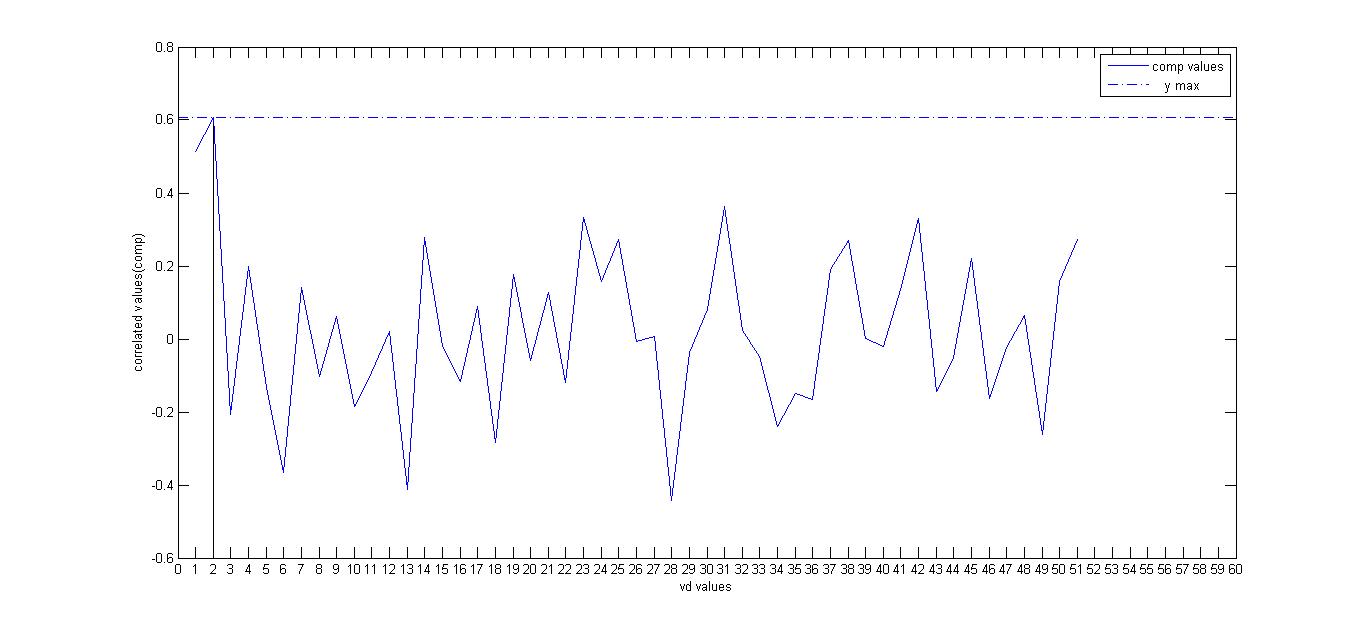

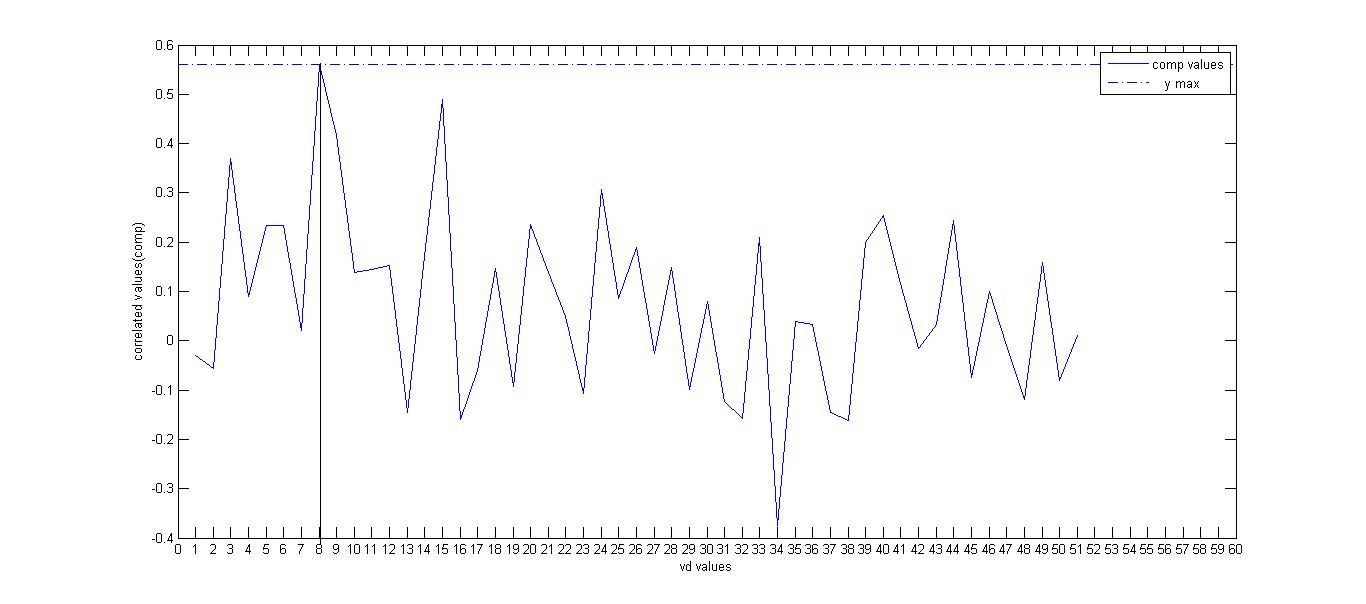

The segmented characters are then used for template matching: each character is correlated with all templates, and the template with the highest correlation value determines the recognized character.

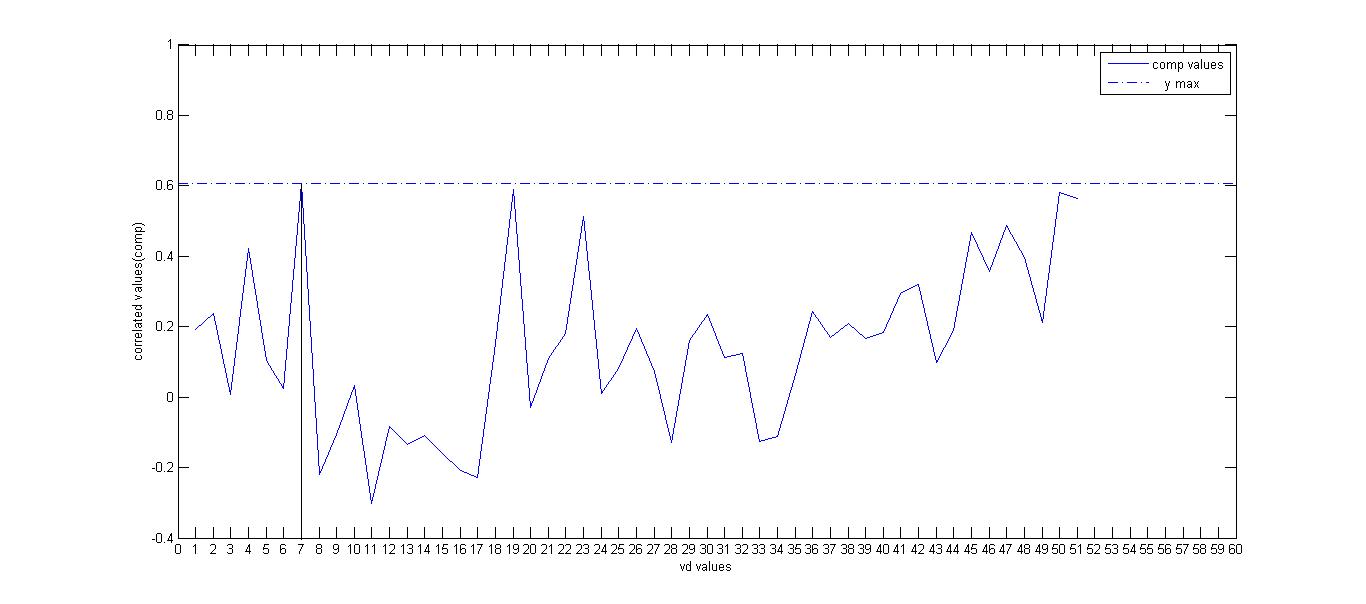

Fig 5.29 Correlated values of first segmented character with all templates for its recognition(vd=2,’A’)


The template database is created and highest correlated value is taken for recognition.

Fig 5.30 Correlated values of second segmented character with all templates for its recognition(vd=8,’E’)

Fig 5.31 Correlated values of third segmented character with all templates for its recognition(vd=7,’D’)


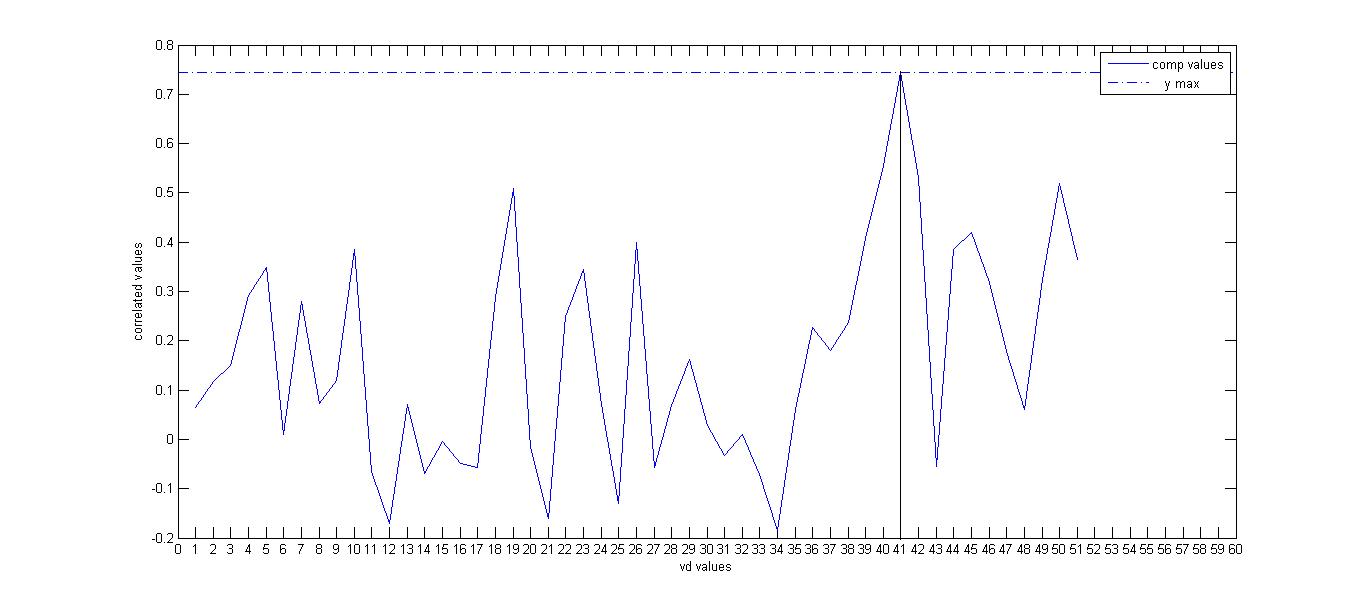

Fig 5.32 Correlated values of fourth segmented character with all templates for its recognition(vd=41,’6’)

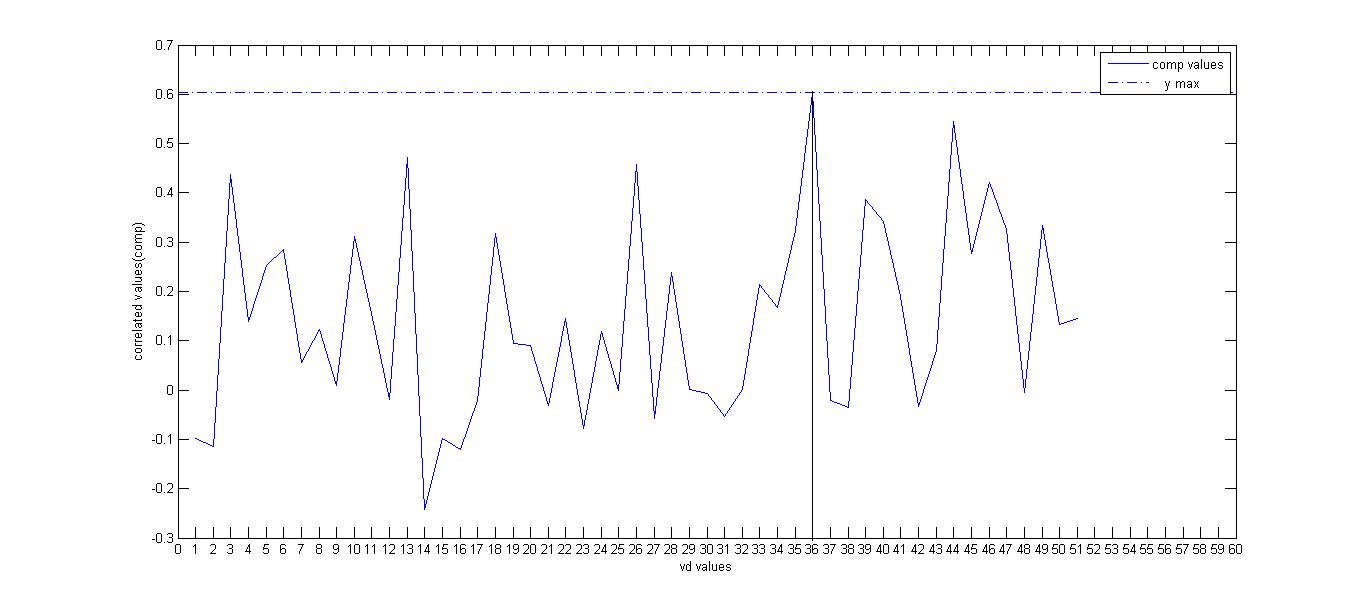

Fig 5.33 Correlated values of fifth segmented character with all templates for its recognition(vd=36,’3’)

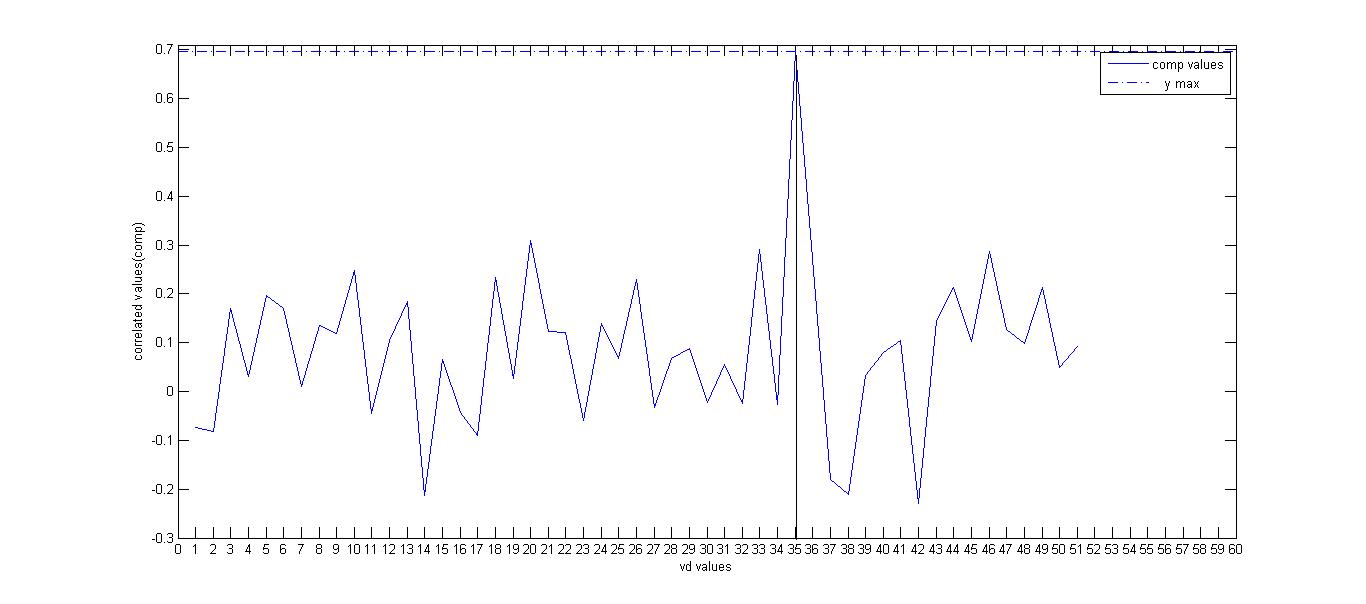

Fig 5.34 Correlated values of sixth segmented character with all templates for its recognition(vd=35,’2’)

Fig 5.35 The characters are extracted in text file


The characters are finally displayed in the text file, converting the image of the car number plate into ASCII characters that are stored in a database for further processing.

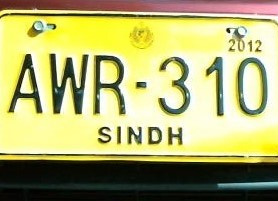

5.2 Experimental Results

TABLE 5.1 Experimental Results

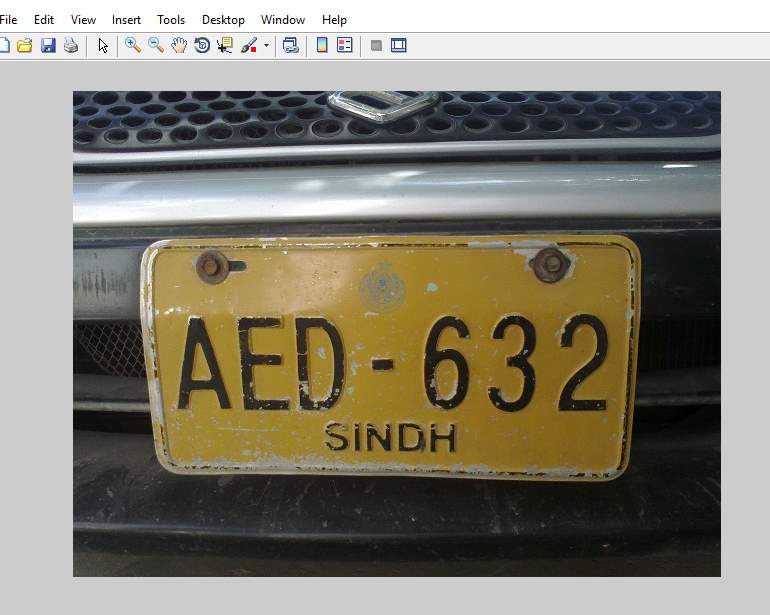

| Plate | Extracted number in text file |
|---|---|
| 1 | AKH343 |
| 2 | KPT295 |
| 3 | AED632 |
| 4 | 374HQR |
| 5 | AWR310 |
| 6 | AXZ016 |
| 7 | BEA269 |
| 8 | W548W8 |

TABLE 5.2 Experimental MSE Values (mean square error)

| License plate | Morphological plate | Binarized plate | Localized plate |
|---|---|---|---|
| 1 | 0.2406 | 0.2914 | 0.3089 |
| 2 | 0.3731 | 0.4096 | 0.4239 |
| 3 | 0.1376 | 0.1967 | 0.1882 |
| 4 | 0.2779 | 0.3723 | 0.4254 |
| 5 | 0.4935 | 0.5204 | 0.5674 |
| 6 | 0.2943 | 0.3537 | 0.3524 |
| 7 | 0.1827 | 0.3107 | 0.3075 |
| 8 | 0.1231 | 0.2095 | 0.1515 |

TABLE 5.3 Experimental PSNR Values (peak signal-to-noise ratio, dB)

| License plate | Morphological plate | Binarized plate | Localized plate |
|---|---|---|---|
| 1 | 54.3175 | 53.4859 | 53.2326 |
| 2 | 52.4127 | 52.0068 | 51.8581 |
| 3 | 56.7433 | 55.1937 | 55.3853 |
| 4 | 53.6927 | 52.4221 | 51.8427 |
| 5 | 51.1983 | 50.9672 | 50.5916 |
| 6 | 53.4429 | 52.6439 | 52.6602 |
| 7 | 55.5144 | 53.2074 | 53.2530 |
| 8 | 57.2294 | 54.9181 | 56.3271 |

5.3 Analysis of Results

Tests are conducted module by module.

5.3.1 Extracting of Individual Digits

Determine the angle of the vehicle license plate. This is often important when capturing the vehicle image; for example, the figure below illustrates a case that results in wrong recognition of the characters.

To improve the cropping of the image, we can improve the accuracy with which the car image is captured. We can also include an algorithm that maps the four corner coordinates of the plate to a standard rectangular size, or crop the image manually.

5.3.2 Quantization and Equalization

In some rare cases, such as very dark images, high-contrast images or low-contrast images, the binarized image does not distinguish between the background and the digits. This can result in failed recognition of the vehicle license plate.

To improve the performance of the character recognition, we can increase the difference between the digits and the background of the license plate. Equalization and quantization yield a grayscale image with improved contrast between the digits and the background.
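As an illustration of the equalization idea, the Python sketch below implements classic CDF-based histogram equalization on 8-bit gray levels. It is a textbook variant, not MATLAB's histeq (which matches a flat target histogram with 64 bins by default), so its output will not match histeq exactly; quantization is not shown, and the function name is hypothetical.

```python
def equalize(gray, levels=256):
    """Classic histogram equalization of an 8-bit grayscale image (list of rows)."""
    hist = [0] * levels
    for row in gray:
        for v in row:
            hist[v] += 1
    cdf, running = [], 0
    for count in hist:          # cumulative histogram
        running += count
        cdf.append(running)
    total = cdf[-1]
    cdf_min = next(c for c in cdf if c > 0)
    if total == cdf_min:        # a single gray level: nothing to spread out
        return [list(row) for row in gray]
    scale = (levels - 1) / (total - cdf_min)
    # Map each level through the normalized CDF, rounding half up.
    return [[int((cdf[v] - cdf_min) * scale + 0.5) for v in row] for row in gray]
```

Gray levels that were crowded together in a dark or washed-out plate are spread across the full 0 to 255 range, which gives the later thresholding step a wider gap between digits and background.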

5.3.3 Checking and Verification of the data point

While trying out other car plates, I experienced losing characters during the extraction process. After further study of the MATLAB toolbox syntax, we managed to adjust the value and apply binary area open (bwareaopen), which also helps to remove small objects. The following illustrates an example of the scenario:

```matlab
% Morphologically open the binary image (remove tiny objects)
imagen = bwareaopen(imagen, thresholdArea);   % thresholdArea: minimum object size in pixels
figure, imshow(imagen)
```

5.4 Discussion

Although OVRS technology has been promoted as being capable of reading in excess of 3,000 plates per hour, these results suggest that this capability is considerably exaggerated in actual road tests. The technology may have the capacity to read more plates, but traffic volume and the design of Surrey streets may impede this read rate. Perhaps the most important conclusion reached from this study is that the utility of OVRS depends on volume. The initial analysis of parking-lot data by Schuurman (2007) suggested that parking-lot deployment depended on the number of vehicles in parking lots and, for the most part, the same conclusions applied to the current trial of the technology. In both cases, the more cars scanned, the greater the number of raw hits. Significantly, the nature of hits was essentially uniform across all of the designated traffic corridors, and the proportions held when considered by time of day or day of the month. In effect, it was all about the number of hits and, for the most part, the frequency of hits exceeded what a typical patrol unit could respond to during a shift. As a consequence, the fact that officers could expect many hits per hour requires the design of a response-priority scheme and additional patrol units to manage the increased workload. However, considering the results of this part of the project, to maximize efficiency, police forces operating OVRS technology may want to focus on high-volume traffic corridors during the day shift. Given this, it may be possible, as suggested above, to train volunteers who can assist the police in filtering through data hits, verifying which calls are valid and which should receive priority attention, to minimize the burden on patrol officers.

5.5 DIFFICULTIES AND RECOMMENDATIONS

There are a number of potential difficulties that the software must be able to deal with. These include:

- Poor image resolution, often because the plate is too far away, but sometimes resulting from the use of a low-quality camera.

- Blurry images, particularly motion blur.

- Poor lighting and low contrast due to overexposure, reflection or shadows.

- An object obscuring (part of) the plate, very often a tow bar, or dirt on the plate.

- A different font, popular on vanity plates (some countries do not allow such plates, eliminating the problem).

- Circumvention techniques.

- Lack of coordination between countries or states. Two cars from different countries or states can have the same number but a different plate design.

- While some of these issues can be corrected within the software, it is primarily left to the hardware side of the system to work out solutions to these difficulties. Increasing the height of the camera may avoid problems with objects (such as other vehicles) obscuring the plate, but it introduces and increases other problems, such as adjusting for the increased skew of the plate.

- On some cars, tow bars may obscure one or two characters of the registration plate. Bikes on bike racks can also obscure the number plate, though in some countries and jurisdictions, such as Victoria, Australia, “bike plates” are supposed to be fitted. Some small-scale systems allow for some errors in the registration number. When such a system is used to give specific vehicles access to a barricaded area, the decision may be made to accept an error of one character. This is because the likelihood of an unauthorized car having such a similar registration number is seen as quite small.

- When the main file is run, the system is able to locate and recognize some of the vehicle registration plates, but it fails on images of cars with headlights.

For future work and enhancements, the following steps are recommended:

- The initial stage of detecting the rectangular plate needs to be modified, or a different detection technique should be applied to the system.

- We only use the black-and-white image of the vehicle registration plate. In future implementations, RGB-to-HSV or RGB-to-CMY conversion could be used to handle registration plates of other colours.

- The choice of algorithm and the methods for detecting errors should be improved. When the confidence that a recognition guess is correct falls below the threshold, the recognition system should refuse to make a decision.
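Two ideas from the list above can be combined into a single decision rule: the one-character error tolerance used by some small access-control systems, and refusing a decision when recognition confidence is below a threshold. The Python sketch below assumes the recognizer returns the plate string together with one confidence score per character, and it treats the lowest character score as the confidence of the whole guess. The 0.6 threshold, the function names and the sample plates are hypothetical.

```python
def hamming(a, b):
    """Number of positions at which two equal-length strings differ."""
    return sum(x != y for x, y in zip(a, b))


def decide_access(read_plate, char_confidences, authorised,
                  threshold=0.6, max_errors=1):
    """Return the matched authorised plate, or None to refuse a decision.

    read_plate       -- string produced by the character recognizer
    char_confidences -- one confidence score in [0, 1] per recognized character
    authorised       -- iterable of plates allowed into the area
    """
    # Refuse to decide if any character was recognized with low confidence.
    if not char_confidences or min(char_confidences) < threshold:
        return None
    candidates = [p for p in authorised
                  if len(p) == len(read_plate)
                  and hamming(p, read_plate) <= max_errors]
    # Accept only an unambiguous match; several near-matches also mean refusal.
    return candidates[0] if len(candidates) == 1 else None


allowed = ["MH12AB1234", "MH14CD5678"]
print(decide_access("MH12A81234", [0.9] * 10, allowed))       # MH12AB1234
print(decide_access("MH12AB1234", [0.9] * 9 + [0.4], allowed))  # None
```

The first call accepts a read in which "B" was misread as "8", because only one character differs. The second call refuses because one character's confidence is below the threshold. Requiring a unique near-match prevents a one-character tolerance from admitting the wrong vehicle when two authorised plates differ by a single character.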

CHAPTER 6

CONCLUSION AND FUTURE SCOPE

6.1 Conclusion

In this report, we presented application software designed for the recognition of car license plates. First, we extracted the plate location; then we separated the plate characters individually by segmentation; and finally we applied template matching using correlation to recognize the plate characters. The system is designed for the identification of Indian vehicle registration plates and has been tested on a large number of images. It proved to be 96% accurate for character segmentation and 80% accurate for the recognition unit, giving an overall system performance of 92.57% recognition rate. The system can be redesigned for international car license plates in future studies.
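The recognition step described above compares each segmented character with stored templates using correlation and picks the best match. The Python sketch below illustrates that idea. `corr2` re-implements the 2-D correlation coefficient, the quantity MATLAB's `corr2` returns. The 3×3 templates are toy, hypothetical glyphs, not the character templates used in this work, and a real system would first resize each segmented character to the template size.

```python
import math


def corr2(a, b):
    """2-D correlation coefficient between two equally sized images (lists of lists)."""
    fa = [v for row in a for v in row]
    fb = [v for row in b for v in row]
    if len(fa) != len(fb):
        raise ValueError("images must have the same size")
    ma = sum(fa) / len(fa)
    mb = sum(fb) / len(fb)
    num = sum((x - ma) * (y - mb) for x, y in zip(fa, fb))
    den = math.sqrt(sum((x - ma) ** 2 for x in fa) * sum((y - mb) ** 2 for y in fb))
    # A constant image has no variance; treat it as uncorrelated.
    return num / den if den else 0.0


def recognise_character(glyph, templates):
    """Return the label of the template best correlated with the segmented glyph."""
    return max(templates, key=lambda label: corr2(glyph, templates[label]))


# Hypothetical 3x3 binary templates, for illustration only.
templates = {
    "1": [[0, 1, 0], [0, 1, 0], [0, 1, 0]],
    "7": [[1, 1, 1], [0, 0, 1], [0, 0, 1]],
}
noisy_one = [[0, 1, 0], [0, 1, 0], [1, 1, 0]]
print(recognise_character(noisy_one, templates))  # 1
```

Because the correlation coefficient subtracts each image's mean and normalizes by its spread, the score depends on the shape of the character rather than on overall brightness. This makes it a convenient matching measure for binarized glyphs.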

OVRS offers many benefits to police forces. Most importantly, OVRS can quickly and efficiently scan a large number of license plates without any officer intervention, such as an officer physically typing in a license plate to check. OVRS also offers an objectivity that may benefit police. Given that officers cannot check all the license plates they encounter while on shift, they are compelled to make a series of decisions about which plates to search. While officers currently use a set of indicators, identified through experience, to select the plates that appear more suspicious, it is highly plausible that through this process officers may miss plates that are, in fact, problematic. The ability of OVRS to scan a large number of plates allows more plates to be checked faster and more efficiently. The use of OVRS technology may also result in safer police driving, as officers would not have the additional distraction of periodically looking away from the road to manually type in license plates of interest.

Research with OVRS has shown many benefits, namely, increased police efficiency. With an increased number of hits, or successful matches between a scanned plate image and a database of interest, police are better able to identify more persons of interest. This increases the potential for the recovery of stolen property as well as for convictions. The technology also allows the police to identify uninsured vehicles, prohibited drivers, and unauthorized drivers much more quickly than previous policing methods. There are, however, some limitations inherent in the use of such advanced technology. With the potential for an increased number of hits, officers may become swamped by the rise in the number of problematic cars to respond to. Research in the United Kingdom suggested that in responding to the sheer number of hits identified through OVRS, an officer’s workload substantially increased, impeding their ability to efficiently respond not only to OVRS hits, but to other calls for service. As a consequence, police must develop strategies that enable officers to prioritize their responses. However, as the profile of crime differs between jurisdictions, detachment-specific schemes may need to be developed. In other words, depending on the geographic location of hotspots, the number of officers on patrol, and the specific needs of the community, priority schemes may need to be individualized. Moreover, to better respond to priority hits, police forces may find it necessary to increase the number of officers on the road, which, given current financial realities in several detachments in Canada, may not be possible.

While advances in technology allow the use of systems such as license plate recognition, they also provide new ways to avoid being screened by such technology. For example, Gordon and Wolf (2007) reported that since the arrival of OVRS, some firms have begun to sell products to thwart the technology. They noted that one company sells a transparent spray (US $30 per can) that the makers claim can make license plates invisible when read by a camera. In effect, as the police develop new technologies, there will be those who develop the means to defeat them. In the past, recognition software produced very low recognition rates (Gordon and Wolf, 2007). More recently, however, research suggested that OVRS technology reads plates correctly 95% of the time. Even so, in the event that a plate is not read correctly and the officer deems the plate suspicious, it is vital that officers retain the ability to investigate the plate more fully and to follow their instincts when observing a suspicious vehicle.

The effectiveness of OVRS technology depends entirely upon coordination between agencies. Without data against which to match scanned plates, OVRS cannot possibly identify plates of interest. Information can either be provided in real time (e.g. lists are updated as cars or plates are reported stolen) or updated every twenty-four hours. Either way, those using OVRS technology must have access to data detailing stolen plates or cars, vehicles that have been involved in other criminal activity, drivers who are prohibited or have lost their licence, and drivers who are uninsured. Much of the work involved in implementing OVRS technology consists of building these initial relationships between agencies. Again, without coordination between police forces and agencies such as insurance firms, other criminal justice agencies, and the motor vehicle branch, OVRS technology simply will not succeed.

Lastly, privacy concerns are a limitation to the use of OVRS technology. Concerned citizens may accuse the police or the government of using the technology to track law-abiding citizens, invading their right to privacy. Citizens may equate the use of OVRS technology to “fishing expeditions”, where police simply scan all plates until they get a hit, as opposed to searching for particular plates based on previous intelligence. Concerns already exist in Canada regarding the use of closed-circuit television (CCTV) systems in public (Schuurman, 2007). Deisman (2003) identified that there are limits to the extent to which police in Canada can engage in continuous and non-selective surveillance of citizens. Schuurman (2007) also noted that, under the Canadian Charter of Rights and Freedoms, citizens’ privacy rights are violated by indiscriminate video surveillance without cause. Essentially, more research needs to be conducted to determine how OVRS technology can be balanced against citizens’ right to privacy and their civil rights. Citizens may also have concerns regarding the retention of data in warehouses, fearing the potential for security breaches. Additionally, there may be concerns about who has access to this data. It is, therefore, vital that careful thought is given to the safe storage of data and to strict rules about who has access to the databases. In responding to privacy concerns, policies may be put in place that regulate the deletion of collected data on a daily, weekly, monthly, or yearly basis.
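A deletion policy of the kind mentioned above could be enforced by a periodic purge job. The Python sketch below assumes a hypothetical record layout (`PlateRead` with plate, timestamp and a hit flag) and a configurable retention period, so the same job can implement a daily, weekly, monthly or yearly policy. The `keep_hits` option, which retains reads that matched a database of interest, is an illustrative policy choice rather than something this study prescribes.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class PlateRead:
    plate: str
    read_at: datetime
    is_hit: bool  # True if the read matched a database of interest


def purge_old_reads(reads, now, retention, keep_hits=True):
    """Return only the reads that the retention policy allows to be kept.

    Reads older than `retention` are dropped. If keep_hits is True, reads
    that produced a hit are kept regardless of age (a hypothetical option).
    """
    cutoff = now - retention
    return [r for r in reads if r.read_at >= cutoff or (keep_hits and r.is_hit)]


now = datetime(2019, 12, 11, 12, 0)
reads = [
    PlateRead("MH12AB1234", now - timedelta(hours=2), False),
    PlateRead("MH14CD5678", now - timedelta(days=3), False),
    PlateRead("DL01EF9999", now - timedelta(days=3), True),
]
kept = purge_old_reads(reads, now, retention=timedelta(days=1))
print([r.plate for r in kept])  # ['MH12AB1234', 'DL01EF9999']
```

With a one-day retention period, the three-day-old read that produced no hit is discarded. The three-day-old hit is kept only because `keep_hits` is enabled.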

In conclusion, although further research is required to determine the extent to which OVRS increases the rate of arrest and has a deterrent effect, the results of this study suggest that OVRS technology offers several substantial advantages to the police. However, it is difficult to assess the impact of OVRS on police resources and workloads, and thus to draw any firm conclusions about its general utility. Still, OVRS may have a particular utility, as its strategic deployment can help police departments respond more effectively to a range of vehicle and driving-related offences. The overriding benefit of OVRS is that it brings a far larger number of offenders to the attention of the police, rather than the few offenders the police are able to find during their routine activities. How to respond adequately to this situation will require careful thinking and planning on the part of the police.

6.2 Future Scope

Although we have achieved a recognition accuracy of 80% by optimizing various parameters, a task as sensitive as tracking stolen vehicles and monitoring vehicles for homeland security requires 100% accuracy, and this requirement cannot be compromised. Further optimization is therefore required to achieve it.

Issues such as stains, smudges, and different font styles and sizes also need to be addressed. This work can be extended further to minimize the errors they cause.

REFERENCES

- Ronak P. Patel et al., “Automatic Licenses Plate Recognition”, IJCSMC, Vol. 2, Issue 4, April 2013, pp. 285–294, ISSN 2320-088X.

- Shan Du et al., “Automatic License Plate Recognition (ALPR): A State-of-the-Art Review”, IEEE Transactions on Circuits and Systems for Video Technology, Vol. 23, No. 2, February 2013, p. 311.

- Friedrich, Jehlicka and Schlaich, “Automatic Number Plate Recognition for the Observance of Travel Behaviour”, 8th International Conference on Survey Methods in Transport, France, May 25–31, 2008.

- Zheng Li, Hel Xi and Le Yu, “A Comparison of Methods for Character Recognition of Car Number Plates”, Conference on Computer Vision (VISION’05), 2005.

- C. Patel, D. Shah and A. Patel, “Automatic Number Plate Recognition System (ANPR): A Survey”, International Journal of Computer Applications (0975–8887), Vol. 69, No. 9, 2013.